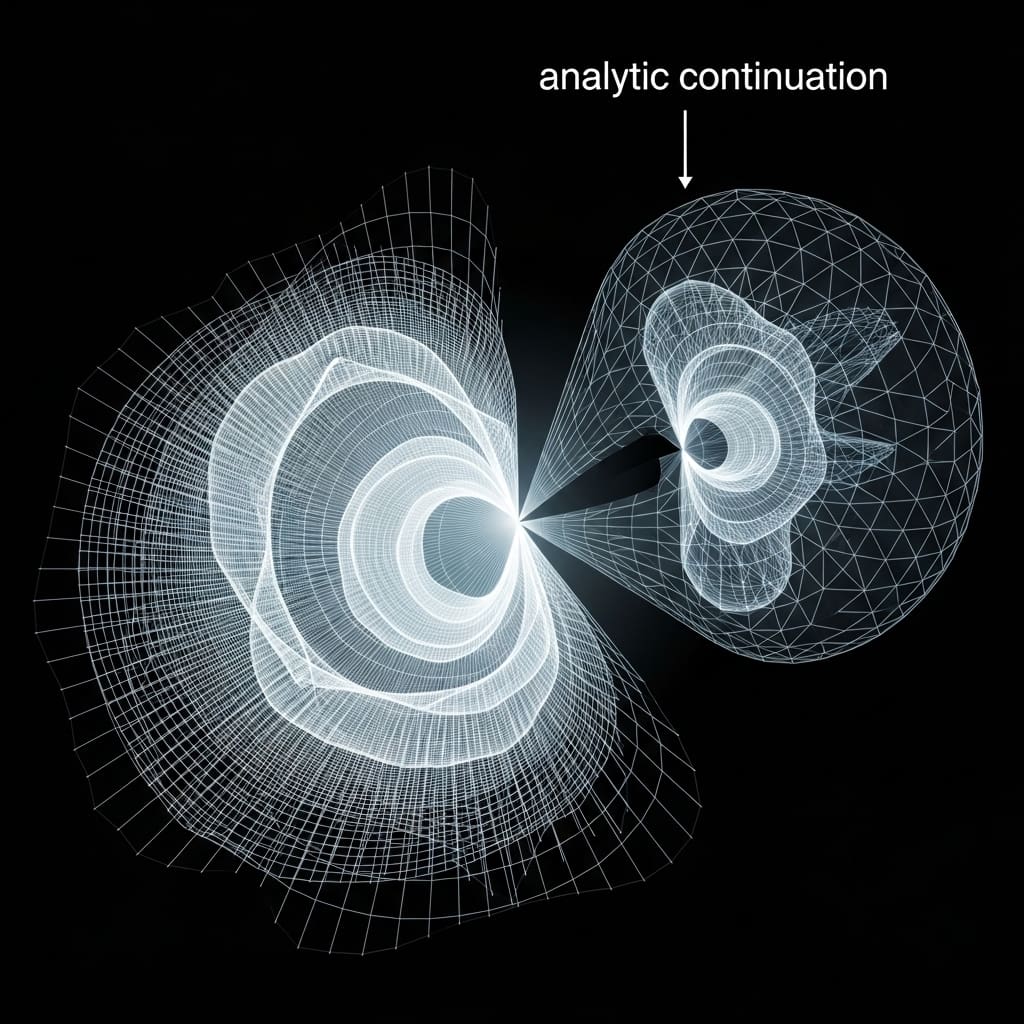

Extracting meaningful dynamic properties from complex quantum Monte Carlo simulations presents a significant challenge, largely because it requires solving the analytic continuation problem. Thomas Chuna, Nicholas Barnfield, and Paul Hamann, working at Helmholtz-Zentrum Dresden-Rossendorf and the Center for Advanced Systems Understanding, alongside Sebastian Schwalbe, Michael P. Friedlander, and Tobias Dornheim, now identify the conditions under which the widely used maximum entropy method provides an acceptable solution. Their research reveals that stochastic sampling algorithms effectively reduce to entropy maximization when the initial Bayesian prior closely approximates the true solution. It also shows that Bryan’s algorithm for entropy maximization is appropriate when either the noise is minimal or the prior is well informed. This work establishes a clearer understanding of the method’s limitations and highlights the benefits of data-driven Bayesian priors, which yield improved scaling of the mean squared error and offer a pathway to more accurate results from complex simulations.

Maximum Entropy and Analytic Continuation Methods

This compilation details references concerning analytic continuation, Quantum Monte Carlo (QMC), and related computational techniques. The collection focuses on extending functions defined on limited domains, often imaginary frequencies from QMC simulations, to the entire complex plane. A prominent technique explored is the Maximum Entropy Method (MEM), with numerous references detailing its implementation, variations, and potential challenges. Researchers investigate appropriate regularization and search spaces for MEM to optimize its performance. Other methods include Stochastic Analytic Continuation (SAC), employing stochastic approaches to enhance stability and accuracy, and Nevanlinna Analytic Continuation, a more recent technique gaining prominence.

Sparse modeling and kernel methods offer alternative approaches to analytic continuation. The collection also includes references to specialized tools like ACFlow and optimized maximum entropy algorithms. Crucially, it emphasizes diagnostic tools and validation techniques for assessing the reliability of analytic continuation results, with a focus on understanding and minimizing bias and variance. Many references relate to generating the data that requires analytic continuation. A significant focus lies on studying the properties of the warm dense electron gas using QMC and analytic continuation, with particular attention paid to accurately representing the local field correction (LFC), a crucial component in electron gas calculations. The collection also provides broader statistical context: bias-variance decomposition helps to identify and minimize errors, while Markov chain Monte Carlo (MCMC) provides a foundation for the statistical methods used in QMC and in some analytic continuation techniques.

References to time series analysis suggest techniques for data smoothing, and regularization recurs across many analytic continuation methods as a guard against overfitting. Several software packages facilitate these techniques, including ana_cont, PyLIT, and ACFlow. A central application driving much of this research is the electron gas. The emphasis throughout is on developing methods that produce accurate and reliable results, with careful attention to error analysis and validation. Improving the speed and efficiency of both QMC simulations and analytic continuation algorithms is also a key goal, and the development of open-source software packages fosters research and collaboration.

While QMC methods excel at providing accurate simulations of interacting quantum systems, determining dynamic properties requires solving the analytic continuation (AC) problem, which involves inverting the equations that relate imaginary-time correlation functions to real-frequency spectral functions. The researchers developed a rigorous approach to assess the validity of entropy maximization, specifically the maximum entropy method (MEM), for tackling this inversion. Their methodology analyzes the conditions under which stochastic sampling algorithms effectively reduce to entropy maximization.
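The forward direction of the AC problem is easy to compute, which makes it a convenient way to see what the inversion has to undo. The sketch below is illustrative only: the kernel (a two-sided Laplace kernel folded onto non-negative frequencies), the double Gaussian parameters, the inverse temperature `beta`, and the grids are all assumptions chosen for the example and are not taken from the paper.

```python
import math

def double_gaussian(omega, centers=(1.0, 3.0), widths=(0.3, 0.5), weights=(0.4, 0.6)):
    """Weighted sum of two normalized Gaussians (illustrative parameters)."""
    total = 0.0
    for c, w, a in zip(centers, widths, weights):
        total += a * math.exp(-0.5 * ((omega - c) / w) ** 2) / (w * math.sqrt(2.0 * math.pi))
    return total

def kernel(tau, omega, beta):
    """Assumed kernel K(tau, omega) = exp(-tau*omega) + exp(-(beta - tau)*omega)."""
    return math.exp(-tau * omega) + math.exp(-(beta - tau) * omega)

def forward(spectrum, omegas, taus, beta):
    """Riemann-sum approximation of F(tau) = int_0^inf K(tau, omega) A(omega) d omega."""
    d_omega = omegas[1] - omegas[0]
    return [
        sum(kernel(t, w, beta) * a for w, a in zip(omegas, spectrum)) * d_omega
        for t in taus
    ]

beta = 2.0                                   # assumed inverse temperature
omegas = [0.02 * j for j in range(401)]      # frequency grid on [0, 8]
taus = [beta * i / 20 for i in range(21)]    # imaginary-time grid on [0, beta]

spectrum = [double_gaussian(w) for w in omegas]
F = forward(spectrum, omegas, taus, beta)

for t, f in zip(taus, F):
    print(f"tau = {t:.2f}   F(tau) = {f:.6f}")
```

The two sharp peaks in the spectrum collapse into a single smooth curve that is symmetric about `beta / 2`. Recovering the peaks from such a curve, especially once noise is added, is the ill-conditioned step that methods such as MEM attempt to regularize.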

The team demonstrates that the accuracy of MEM hinges critically on the relationship between the Bayesian prior used in the algorithm and the true solution to the AC problem. They investigated the scenarios in which Bryan’s optimization algorithm, a widely used method for entropy maximization, delivers reliable results, finding it appropriate when noise levels are minimal or when the Bayesian prior closely approximates the true solution. The researchers quantified the performance of these methods using the mean squared error, and found that the error scales better as the Bayesian prior approaches the true solution than it does as the noise is reduced. This finding underscores the importance of informed prior selection in AC algorithms.
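As a reminder of what the mean squared error measures, the standard bias-variance decomposition is written out below. The notation is an assumption made for illustration and is not taken from the paper: $\hat{A}(\omega)$ denotes an estimate of the spectral function, $A(\omega)$ the true solution, and the expectation runs over noise realizations of the input data.

$$
\mathrm{MSE}\big[\hat{A}(\omega)\big]
= \mathbb{E}\Big[\big(\hat{A}(\omega) - A(\omega)\big)^2\Big]
= \big(\mathbb{E}[\hat{A}(\omega)] - A(\omega)\big)^2 + \mathrm{Var}\big[\hat{A}(\omega)\big]
$$

In a regularized estimator, the first (bias) term typically reflects the pull toward the prior, while the second (variance) term reflects noise propagated from the data.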

The team validated these theoretical results by solving the double Gaussian problem, employing both Bryan’s algorithm and a newly formulated dual approach to entropy maximization. This computational work provides concrete evidence supporting the link between prior accuracy and the reliability of the AC process, advancing the field’s ability to accurately determine dynamic properties from QMC simulations.

This work addresses a long-standing challenge in fields like quantum chemistry, materials science, and physics, where QMC methods provide highly accurate simulations but require complex mathematical procedures to obtain real-frequency information from imaginary-time data. The research focuses on determining when the maximum entropy method (MEM), a widely used analytic continuation algorithm, provides acceptable results compared to more computationally intensive stochastic methods. The team demonstrated that when the Bayesian prior used in the MEM closely approximates the true solution, a key condition is fulfilled for the validity of Beach’s mean-field approximation, the simplification that reduces stochastic sampling to entropy maximization.

This is the first demonstration of this condition being satisfied in a practical scenario, validating a theoretical connection between the prior and the accuracy of the method. Furthermore, the study establishes that Bryan’s algorithm, a controversial optimization technique used to carry out the entropy maximization, is appropriate when either the noise in the data is minimal or the Bayesian prior is close to the true solution. Detailed analysis revealed that the estimator used in Bryan’s algorithm behaves linearly under these conditions, justifying its use and addressing previous concerns about its reliance on potentially flawed assumptions. Crucially, the team derived formulas for the mean squared error (MSE) of the method, demonstrating that improving the Bayesian prior yields a greater reduction in error than simply reducing noise in the data. This finding provides valuable guidance for practitioners: refining the initial assumptions fed into the algorithm offers a more effective path to accurate results. The research supports these findings by solving the double Gaussian problem using both Bryan’s algorithm and a newly formulated dual approach to entropy maximization, confirming the theoretical predictions.
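To make the role of the prior concrete, the following is a minimal sketch of entropy maximization on a double Gaussian test spectrum. It is not Bryan’s algorithm and not the dual approach from the paper: a plain multiplicative gradient step with backtracking stands in for both. The objective `Q = chi^2/2 - alpha*S`, the Shannon-Jaynes form of the entropy `S`, the kernel, and every numerical value (`beta`, `sigma`, `alpha`, the grids) are assumptions made for illustration.

```python
import math

def gaussian(w, center, width):
    return math.exp(-0.5 * ((w - center) / width) ** 2) / (width * math.sqrt(2.0 * math.pi))

def model(A, K, dw):
    """Discretized forward map F_i = sum_j K_ij A_j dw."""
    return [sum(k * a for k, a in zip(row, A)) * dw for row in K]

def entropy(A, D, dw):
    """Shannon-Jaynes entropy of A relative to the prior D (zero at A == D)."""
    return sum(a - d - a * math.log(a / d) for a, d in zip(A, D)) * dw

def objective(A, D, K, F, sigma, alpha, dw):
    """Q = chi^2 / 2 - alpha * S; minimizing Q maximizes the regularized entropy."""
    chi2 = sum(((f - m) / sigma) ** 2 for f, m in zip(F, model(A, K, dw)))
    return 0.5 * chi2 - alpha * entropy(A, D, dw)

def gradient(A, D, K, F, sigma, alpha, dw):
    """dQ/dA_j, using dS/dA_j = -dw * ln(A_j / D_j)."""
    resid = [(m - f) / sigma ** 2 for f, m in zip(F, model(A, K, dw))]
    return [
        dw * sum(row[j] * r for row, r in zip(K, resid)) + alpha * dw * math.log(A[j] / D[j])
        for j in range(len(A))
    ]

def minimize_q(D, K, F, sigma, alpha, dw, n_iter=200):
    """Multiplicative gradient descent with backtracking, started at the prior."""
    A = list(D)
    q = objective(A, D, K, F, sigma, alpha, dw)
    history = [q]
    for _ in range(n_iter):
        g = gradient(A, D, K, F, sigma, alpha, dw)
        g_max = max(abs(x) for x in g)
        if g_max == 0.0:
            break  # the prior is already a stationary point
        step = 1.0 / g_max  # keeps the exponent in [-1, 1]
        for _ in range(40):
            trial = [a * math.exp(-step * gj) for a, gj in zip(A, g)]  # stays positive
            q_trial = objective(trial, D, K, F, sigma, alpha, dw)
            if q_trial < q:
                A, q = trial, q_trial
                break
            step *= 0.5
        else:
            break  # no decrease found; stop
        history.append(q)
    return A, history

beta, dw = 2.0, 0.1                              # assumed values
omegas = [dw * (j + 0.5) for j in range(60)]
taus = [beta * i / 10 for i in range(11)]
K = [[math.exp(-t * w) + math.exp(-(beta - t) * w) for w in omegas] for t in taus]

truth = [0.4 * gaussian(w, 1.0, 0.3) + 0.6 * gaussian(w, 3.0, 0.5) for w in omegas]
F = model(truth, K, dw)                          # noiseless synthetic data
flat_prior = [1.0 / (len(omegas) * dw)] * len(omegas)

# sigma only sets the weight of the chi^2 term here, since F carries no noise.
A_fit, history = minimize_q(flat_prior, K, F, sigma=0.01, alpha=1.0, dw=dw)
A_same, history_same = minimize_q(truth, K, F, sigma=0.01, alpha=1.0, dw=dw)

print("Q with flat prior: start", history[0], "end", history[-1])
print("Q with exact prior:", history_same)
```

With a flat prior the optimizer has to trade data misfit against entropy, and the objective decreases step by step. When the prior equals the true spectrum and the data are noiseless, both terms already sit at their optimum, so the prior itself is returned unchanged. This is only the limiting case of the improved-prior regime discussed above, not a reproduction of the paper’s analysis.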

Entropy Maximization Improves Simulation Accuracy

This research establishes conditions under which entropy maximization accurately represents stochastic sampling algorithms, a crucial step for extracting dynamic properties from Monte Carlo simulations. The team demonstrates that when noise is minimal or the initial Bayesian prior closely approximates the true solution, the application of Bryan’s algorithm, a method for entropy maximization, is justified. Importantly, the study reveals that improving the Bayesian prior yields a more favourable scaling of the mean squared error than minimizing noise alone, suggesting a pathway to enhance the accuracy of these simulations. The findings are supported by analysis of the double Gaussian problem, solved using both Bryan’s algorithm and a newly formulated dual approach to entropy maximization, confirming the theoretical predictions. While acknowledging the limitations inherent in the linearization and approximations used in their analysis, the authors highlight the potential for further improvements through refined data-driven Bayesian priors. Future work, they suggest, could focus on developing these priors further.

👉 More information

🗞 The noiseless limit and improved-prior limit of the maximum entropy method and their implications for the analytic continuation problem

🧠 ArXiv: https://arxiv.org/abs/2511.06915