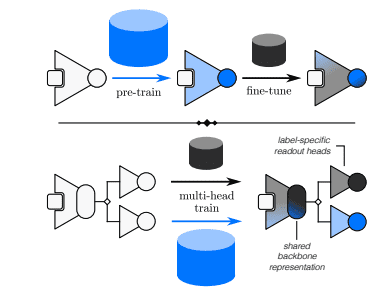

The development of accurate and versatile molecular force fields, crucial for simulating complex chemical systems, increasingly relies on efficiently integrating data generated by diverse computational methods. Researchers are now systematically examining how to combine data computed at different levels of theory, and hence at very different computational cost, an approach termed ‘multi-fidelity training’, to improve the performance of machine-learned force fields (MLFFs). A recent study by John L.A. Gardner of the University of Oxford and Hannes Schulz, Jean Helie, Lixin Sun, and Gregor N.C. Simm of Microsoft Research compares two such strategies: pre-training followed by fine-tuning, and multi-headed training. Their work, entitled ‘Understanding multi-fidelity training of machine-learned force-fields’, explains what drives the success of these techniques and identifies a path towards universally applicable MLFFs that make accurate predictions across multiple chemical environments.

Advances in MLFFs are improving both the accuracy and the efficiency of molecular simulations, allowing researchers to investigate complex chemical systems in greater detail. This research examines how MLFF performance can be improved by integrating data from several quantum chemistry methods, focusing specifically on pre-training, fine-tuning, and multi-headed learning. The authors systematically compare these methods to determine how effectively data from different levels of theory contribute to robust, transferable models.

Pre-training substantially improves model performance, particularly when both energies and forces are used as training targets, providing a strong starting point for subsequent refinement. However, the internal representations learned during pre-training turn out to be method-specific: they retain biases towards the characteristics of the initial training data, so the model's backbone, and not only its output head, must be adapted during fine-tuning. In other words, the model first ‘learns’ the nuances of the data it encounters initially, and these can hinder its ability to generalise to different but related data.
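The backbone-versus-head distinction can be made concrete with a deliberately tiny toy model (our own illustration, not the paper's architecture or code). A one-dimensional "molecule" has energy `E(x) = h * (x - c)**2 + e0`; the hypothetical parameter `c` stands in for the shared backbone, while `h` and `e0` stand in for the output head. The loss combines energy and force errors, with forces obtained as `F = -dE/dx`. The model is pre-trained on plentiful "cheap" labels, then fine-tuned on fewer "costly" labels whose minimum sits elsewhere, either with the backbone frozen or with every parameter trainable. All numbers are made up.

```python
# Toy pre-training / fine-tuning sketch (hypothetical model, synthetic data).
# Energy E(x) = h * (x - c)**2 + e0; c plays the "backbone", (h, e0) the "head".

def predict(params, x):
    h, c, e0 = params
    u = x - c
    return h * u * u + e0, -2.0 * h * u  # (energy, force = -dE/dx)


def loss_and_grad(params, data, w_force=1.0):
    """Mean of (E_pred - E_ref)^2 + w_force * (F_pred - F_ref)^2, and its gradient."""
    h, c, _ = params
    loss, grad = 0.0, [0.0, 0.0, 0.0]
    for x, e_ref, f_ref in data:
        u = x - c
        e_pred, f_pred = predict(params, x)
        r_e, r_f = e_pred - e_ref, f_pred - f_ref
        loss += r_e * r_e + w_force * r_f * r_f
        de = (u * u, -2.0 * h * u, 1.0)  # dE/d(h, c, e0)
        df = (-2.0 * u, 2.0 * h, 0.0)    # dF/d(h, c, e0)
        for i in range(3):
            grad[i] += 2.0 * r_e * de[i] + 2.0 * w_force * r_f * df[i]
    n = len(data)
    return loss / n, [g / n for g in grad]


def train(params, data, trainable=(True, True, True), lr=0.02, steps=5000):
    """Plain gradient descent; parameters with trainable[i] == False stay frozen."""
    params = list(params)
    for _ in range(steps):
        _, grad = loss_and_grad(params, data)
        for i in range(3):
            if trainable[i]:
                params[i] -= lr * grad[i]
    return params


def make_data(h, c, e0, n):
    """Synthetic (x, energy, force) labels on an evenly spaced grid in [-1, 1]."""
    xs = [-1.0 + 2.0 * k / (n - 1) for k in range(n)]
    return [(x, *predict((h, c, e0), x)) for x in xs]


cheap = make_data(1.0, 0.0, 0.5, n=21)   # plentiful low-fidelity labels
costly = make_data(1.2, 0.3, -0.3, n=7)  # fewer high-fidelity labels

pretrained = train([0.5, -0.5, 0.0], cheap)
head_only = train(pretrained, costly, trainable=(True, False, True))
full = train(pretrained, costly)

head_loss = loss_and_grad(head_only, costly)[0]
full_loss = loss_and_grad(full, costly)[0]
print(f"head-only fine-tuning loss: {head_loss:.3f}")
print(f"full fine-tuning loss:      {full_loss:.2e}")
```

With `c` frozen, the head cannot reproduce the shifted minimum and a sizeable loss remains; letting `c` move drives the loss essentially to zero. This is only a cartoon of the finding that pre-trained representations are method-specific.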

Multi-headed learning offers a compelling alternative: a single model is trained simultaneously on data from different levels of theory, here coupled cluster (CC), density functional theory (DFT), and xTB, with a separate output head for each. Coupled cluster is a highly accurate but computationally expensive method for calculating electronic structure; density functional theory offers a balance between accuracy and cost; and xTB is a fast, approximate tight-binding method. This approach cultivates method-agnostic representations, enabling the model to make accurate predictions regardless of the data source and promoting greater generalisability.
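A multi-headed model can be sketched in a similar toy setting (again a hypothetical illustration, not the paper's implementation): one shared backbone parameter `c` feeds a separate head `(h, e0)` for each of CC, DFT, and xTB. Every labelled structure updates the shared backbone, but only the head matching its label source. For simplicity the sketch assumes the three methods share the same underlying shape and differ only in scale and offset; the labels are synthetic.

```python
# Toy multi-headed sketch (hypothetical model, synthetic labels): a shared backbone
# parameter c feeds one head (h, e0) per label source.
METHODS = ("cc", "dft", "xtb")


def predict(c, head, x):
    h, e0 = head
    u = x - c
    return h * u * u + e0, -2.0 * h * u  # (energy, force = -dE/dx)


def train_multihead(data, lr=0.05, steps=6000, w_force=1.0):
    c = 0.0
    heads = {m: [1.0, 0.0] for m in METHODS}
    n = len(data)
    for _ in range(steps):
        grad_c = 0.0
        grad_heads = {m: [0.0, 0.0] for m in METHODS}
        for method, x, e_ref, f_ref in data:
            h = heads[method][0]
            u = x - c
            e_pred, f_pred = predict(c, heads[method], x)
            r_e, r_f = e_pred - e_ref, f_pred - f_ref
            # Only the head that matches this label's source is updated ...
            grad_heads[method][0] += 2.0 * r_e * u * u + 2.0 * w_force * r_f * (-2.0 * u)
            grad_heads[method][1] += 2.0 * r_e
            # ... but every label contributes to the shared backbone.
            grad_c += 2.0 * r_e * (-2.0 * h * u) + 2.0 * w_force * r_f * (2.0 * h)
        c -= lr * grad_c / n
        for m in METHODS:
            heads[m][0] -= lr * grad_heads[m][0] / n
            heads[m][1] -= lr * grad_heads[m][1] / n
    return c, heads


def grid(n):
    return [-1.0 + 2.0 * k / (n - 1) for k in range(n)]


# Synthetic "truth": same shape (c = 0.3), different scale/offset per method.
truth = {"cc": (1.2, -0.3), "dft": (1.1, 0.2), "xtb": (0.9, 1.0)}
sizes = {"cc": 5, "dft": 11, "xtb": 21}  # fewer expensive labels
data = [(m, x, *predict(0.3, truth[m], x)) for m in METHODS for x in grid(sizes[m])]

c, heads = train_multihead(data)
print(f"shared backbone c = {c:.4f}")
for m in METHODS:
    print(m, [round(p, 4) for p in heads[m]])
```

Because all three label sources pull on the same `c`, the backbone settles on a representation that serves every head, which is the toy analogue of a method-agnostic representation.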

The research highlights xTB as a valuable source of pre-training data: it offers a favourable balance between speed and accuracy, so large datasets can be generated at reasonable cost. How the data are split also influences model performance; the study uses subsets labelled ‘a’, ‘b’, and ‘t’, which serve to train, validate, and test the models respectively, ensuring an unbiased evaluation.

Analysis of per-element energy contributions sheds light on how the labelling methods differ, revealing inherent differences in the energy scales each method predicts and underscoring the need for strategies that reconcile these discrepancies. This highlights the importance of understanding how different quantum chemistry methods represent energy, and how those representations can be harmonised within a machine learning framework.
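One common way of reconciling methods with different absolute energy scales in MLFF training (assumed here; the article does not say which scheme the paper uses) is to fit a reference energy for each element by linear least squares, `E_total ≈ sum_Z n_Z * E_Z`, separately for each labelling method, and subtract it before training. The sketch below does this with a small hand-written solver; the molecular formulas are real, but `method_a`, `method_b`, and their per-element energies are invented for illustration.

```python
# Least-squares per-element reference energies (illustrative; numbers are invented).
ELEMENTS = ("H", "C", "O")


def solve(a, b):
    """Solve the square linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def fit_element_energies(compositions, energies, elements=ELEMENTS):
    """Fit E_total ~= sum_Z n_Z * E_Z via the normal equations (A^T A) e = A^T E."""
    rows = [[comp.get(el, 0) for el in elements] for comp in compositions]
    k = len(elements)
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * e for r, e in zip(rows, energies)) for i in range(k)]
    return dict(zip(elements, solve(ata, atb)))


molecules = [  # CH4, H2O, CO2, CH3OH, C2H6
    {"C": 1, "H": 4}, {"H": 2, "O": 1}, {"C": 1, "O": 2},
    {"C": 1, "H": 4, "O": 1}, {"C": 2, "H": 6},
]
# Two hypothetical labelling methods with very different absolute energy scales.
true_offsets = {
    "method_a": {"H": -0.5, "C": -38.0, "O": -75.0},
    "method_b": {"H": -0.4, "C": -4.0, "O": -6.0},
}

fitted = {}
for method, offsets in true_offsets.items():
    totals = [sum(n * offsets[el] for el, n in mol.items()) for mol in molecules]
    fitted[method] = fit_element_energies(molecules, totals)
    print(method, {el: round(e, 3) for el, e in fitted[method].items()})
```

Here the synthetic totals are exactly additive, so each method's offsets are recovered exactly. On real data, subtracting the fitted offsets leaves per-structure residual energies that sit on a far more comparable scale across methods.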

The authors demonstrate that a multi-headed model effectively leverages the CC, DFT, and xTB datasets together, performing robustly across all three label sources, albeit with a slight accuracy penalty compared with sequential fine-tuning. This approach combines the accuracy of high-level methods with the efficiency of lower-level ones, creating a powerful tool for simulating complex chemical systems. Accurately predicting the properties of molecules and materials is crucial for a wide range of applications, including drug discovery, materials design, and energy storage.

The study also shows that the rate at which xTB structures are sampled during multi-headed training affects predictive accuracy, so the composition of the training data must be controlled carefully. Although a larger proportion of xTB data can improve performance, simply adding more xTB labels does not consistently improve the accuracy of predictions on the higher-fidelity CC data. This suggests that the quality and diversity of the xTB data matter more than their quantity, highlighting the need for careful data curation and selection.
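The article does not say how the sampling rate is implemented. One simple scheme, sketched here with hypothetical names (`make_batch_sampler`, `xtb_rate`, and placeholder structure IDs), fills each slot of a mini-batch from the xTB pool with probability `xtb_rate` and otherwise picks uniformly among the higher-fidelity pools. In a multi-headed model, each sample's method tag would then select which head receives the loss.

```python
import random


def make_batch_sampler(pools, xtb_rate, seed=0):
    """Return a function that draws mixed-fidelity mini-batches (hypothetical scheme).

    pools: dict mapping a method name ("cc", "dft", "xtb", ...) to a list of structures.
    Each slot comes from the xTB pool with probability xtb_rate; otherwise a
    higher-fidelity method is chosen uniformly at random.
    """
    rng = random.Random(seed)
    others = [m for m in pools if m != "xtb"]

    def sample_batch(batch_size):
        batch = []
        for _ in range(batch_size):
            method = "xtb" if rng.random() < xtb_rate else rng.choice(others)
            # The method tag travels with the structure so the matching head gets the loss.
            batch.append((method, rng.choice(pools[method])))
        return batch

    return sample_batch


# Placeholder pools: far more cheap xTB structures than expensive CC ones.
pools = {
    "cc": [f"cc-{i}" for i in range(50)],
    "dft": [f"dft-{i}" for i in range(500)],
    "xtb": [f"xtb-{i}" for i in range(5000)],
}
sample = make_batch_sampler(pools, xtb_rate=0.5)
draws = [item for _ in range(200) for item in sample(50)]
xtb_fraction = sum(method == "xtb" for method, _ in draws) / len(draws)
print(f"fraction of xTB samples: {xtb_fraction:.3f}")
```

Sweeping `xtb_rate` while keeping the CC and DFT pools fixed is one way to probe the trade-off described above, since it changes the mix of labels each head sees without changing the pools themselves.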

Researchers emphasise the need for a holistic approach that considers all aspects of the modelling process, from data generation to model evaluation. By carefully optimising each step, researchers can build models that are both accurate and reliable.

Future research will focus on developing more sophisticated algorithms for integrating data from diverse sources and exploring new methods for improving the transferability of MLFFs. Researchers also plan to investigate the use of active learning techniques to accelerate the training process and reduce the amount of data required to achieve a desired level of accuracy. Active learning involves strategically selecting the most informative data points to label, thereby maximising the efficiency of the training process.
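The article does not say which active-learning criterion the authors intend to use. A widely used one is query-by-committee: train several models, then send for labelling the candidate structures on which their predictions disagree most. In the sketch below the committee is three hypothetical toy energy functions, and disagreement is measured as the population standard deviation of their predictions.

```python
from statistics import pstdev


def select_most_uncertain(candidates, committee, k):
    """Query-by-committee: pick the k candidates with the largest spread of committee predictions."""
    scored = [(pstdev([model(x) for model in committee]), x) for x in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [x for _, x in scored[:k]]


# Hypothetical committee: three toy energy models that agree near x = 0
# and diverge as |x| grows (e.g. an ensemble trained from different seeds).
committee = [lambda x, a=a: a * x * x for a in (0.9, 1.0, 1.1)]
candidates = [i / 10 for i in range(-10, 11)]
to_label = select_most_uncertain(candidates, committee, k=2)
print(to_label)
```

The toy models agree near `x = 0` and diverge at large `|x|`, so the two endpoints are the ones selected for (expensive) labelling.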

👉 More information

🗞 Understanding multi-fidelity training of machine-learned force-fields

🧠 DOI: https://doi.org/10.48550/arXiv.2506.14963