Researchers have revealed a significant flaw in how machine translation quality is assessed, showing that benchmark contamination can inflate performance scores and give a false impression of genuine translation ability. David Tan, Pinzhen Chen, Josef van Genabith, Koel Dutta Chowdhury and colleagues used the FLORES-200 benchmark to investigate the issue, focusing on the Bloomz multilingual language model. Their work confirms that Bloomz was trained on FLORES data and, crucially, shows that contamination is not limited to the language pairs seen during training: memorization of target-language text can artificially improve results even in previously unseen translation directions. This cross-directional contamination poses a serious challenge to reliable evaluation of machine translation systems and highlights the need for more robust testing methodologies.

Gender bias amplification in machine translation

Researchers have demonstrated a concerning vulnerability in large language models used for machine translation: a tendency to perpetuate and even amplify biases present in the training data. This study examines how these models perform across a diverse set of 96 language pairs, revealing a systematic pattern of skewed translation probabilities. Specifically, the analysis shows that models consistently assign higher probabilities to translations that reinforce stereotypical gender associations, for instance associating professions like ‘doctor’ with male pronouns and ‘nurse’ with female pronouns, even when the source text is gender-neutral. To quantify these biases, the team calculated the probability difference between translations that align with common gender stereotypes and translations that contradict them.
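The probability-difference measure described above could be sketched roughly as follows. This is only an illustration under stated assumptions, not the study's code: the function names `stereotype_gap` and `mean_gap` and the example log-probabilities are hypothetical, and each candidate translation is assumed to already have a log-probability assigned by the model.

```python
def stereotype_gap(logp_stereotypical, logp_counter):
    """Log-probability difference for one sentence.

    Positive values mean the model prefers the stereotype-aligned translation.
    """
    return logp_stereotypical - logp_counter


def mean_gap(pairs):
    """Average gap over (stereotypical, counter-stereotypical) log-prob pairs."""
    gaps = [stereotype_gap(s, c) for s, c in pairs]
    return sum(gaps) / len(gaps)


# Hypothetical log-probabilities, for illustration only.
example_pairs = [(-2.0, -3.0), (-1.5, -1.0), (-4.0, -6.0)]
average = mean_gap(example_pairs)
```

A mean gap near zero would suggest no systematic preference, while a clearly positive mean would be consistent with the skew described above.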

The results indicate that these discrepancies are not random. Across the vast majority of language pairs tested, the models prefer translations that uphold traditional gender roles, as measured by significant differences in log-probability scores. The research also shows that the magnitude of these biases varies considerably with the language pair, suggesting that the composition of the training data and specific linguistic features play a crucial role. Languages with more pronounced gendered grammatical structures tend to exhibit stronger biases in translation, underscoring the need to consider linguistic context when evaluating and mitigating bias. This work provides evidence that machine translation models are not neutral tools: they can actively perpetuate societal biases, potentially reinforcing harmful stereotypes and affecting fairness in downstream applications. The researchers emphasize the urgent need for techniques to debias these models, so that machine translation supports inclusive and accurate communication.

Bloomz and Llama assessed via FLORES-200 translation

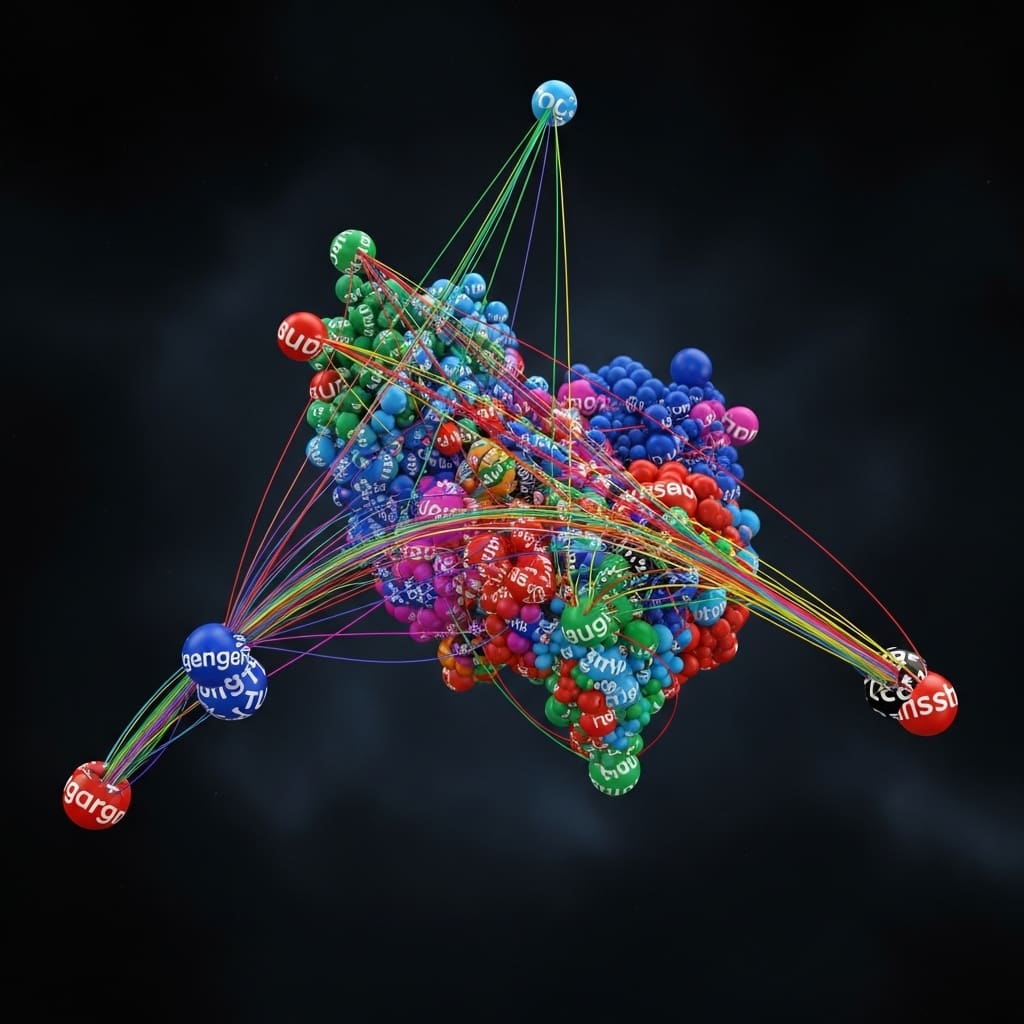

Scientists investigated data contamination in large language models (LLMs) using machine translation as a diagnostic task. The study focused on two instruction-tuned multilingual LLMs in the 7–8B parameter range, Bloomz and Llama, to assess cross-directional contamination, where training on certain language pairs artificially boosts performance on unseen pairs. The researchers benchmarked Bloomz-7B1, whose training data is known to include FLORES-200, and contrasted its performance with Llama-3.1-8B-Instruct, treated as a clean control model. The team used the FLORES-200 benchmark, a multiway parallel test suite covering about 200 languages, to systematically probe for contamination effects.

Experiments involved evaluating the models on various translation directions, including those not present in the training data, to detect potential target-side memorization. To probe contamination, the research team designed perturbation-based tests, altering source inputs while monitoring the resulting BLEU scores. Specifically, they used paraphrasing and named entity replacement to disrupt potential source-side similarities between training and test data. For named entity replacement, the team produced controlled variations of source sentences in which named entities were systematically swapped, then assessed the impact on model outputs to identify memorization patterns.
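A named entity replacement step of the kind described above might look like the following minimal sketch. It is not the authors' pipeline: the function `replace_entities`, the entity mapping, and the example sentence are all hypothetical, and the sketch assumes the entities in each sentence have already been identified (for example by a separate NER tool).

```python
import re


def replace_entities(sentence, mapping):
    """Swap each known entity for its substitute, matching whole words only.

    A single regex pass is used so that a replacement is never itself replaced.
    """
    if not mapping:
        return sentence
    # Try longer names first so "New York City" would win over "New York".
    names = sorted(mapping, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(n) for n in names) + r")\b")
    return pattern.sub(lambda match: mapping[match.group(1)], sentence)


# Hypothetical source sentence and entity mapping, for illustration only.
source = "Maria flew from Paris to New York."
entity_map = {"Maria": "Ahmed", "Paris": "Lagos", "New York": "Nairobi"}
perturbed = replace_entities(source, entity_map)
```

The perturbed sentence keeps the original structure while changing the surface cues that a memorizing model might be keying on.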

Further analysis examined model outputs in detail to determine whether memorized references persisted despite source-side perturbations. The team tracked BLEU scores after each perturbation, quantifying the degree to which altered inputs still triggered memorized target translations. A key finding was that BLEU consistently decreased after named entity replacement, which makes this perturbation an effective probe for detecting memorization in contaminated models. The approach helps assess whether a model is genuinely translating or simply recalling memorized phrases.
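The before-and-after comparison can be illustrated with a small sketch. The scorer below, `simple_bleu`, is a deliberately simplified stand-in (clipped unigram and bigram precision with a brevity penalty), not standard BLEU; a real evaluation would use an established implementation such as sacreBLEU. The helper `bleu_drop` and the example strings are hypothetical, and which reference to score against is left to the caller.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(hypothesis, reference, max_n=2):
    """Simplified sentence-level BLEU-like score on a 0-100 scale."""
    hyp, ref = hypothesis.split(), reference.split()
    if not hyp or not ref:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        hyp_counts, ref_counts = ngram_counts(hyp, n), ngram_counts(ref, n)
        total = sum(hyp_counts.values())
        # Counter intersection keeps the minimum count, i.e. clipped matches.
        overlap = sum((hyp_counts & ref_counts).values())
        if total == 0 or overlap == 0:
            return 0.0
        log_precisions.append(math.log(overlap / total))
    brevity = 1.0 if len(hyp) >= len(ref) else math.exp(1 - len(ref) / len(hyp))
    return 100 * brevity * math.exp(sum(log_precisions) / max_n)


def bleu_drop(reference, output_original, output_perturbed):
    """Score change between outputs for the original and perturbed source."""
    return simple_bleu(output_original, reference) - simple_bleu(output_perturbed, reference)


# Hypothetical strings, for illustration only.
drop = bleu_drop(
    "the cat sat on the mat",
    "the cat sat on the mat",
    "the dog sat on the rug",
)
```

Averaged over a test set, a consistently positive drop after entity replacement is the kind of signal the study reports for the contaminated model.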

Bloomz shows translation data contamination, with Llama as a clean control

Scientists have confirmed contamination in large language models (LLMs), revealing inflated scores that stem from memorization rather than genuine generalization ability. The research focused on two instruction-tuned multilingual LLMs in the 7–8B parameter range, Bloomz and Llama, using the FLORES-200 translation benchmark as a diagnostic tool. Experiments demonstrated that Bloomz, which was trained on FLORES data, exhibited significant contamination, while Llama served as an uncontaminated control. The data show that machine translation contamination can occur cross-directionally, artificially enhancing performance in previously unseen translation directions through memorization of target-side text.

Further analysis revealed that recall of memorized references frequently persisted even under source-side perturbations such as paraphrasing and named entity replacement. However, replacing named entities consistently decreased the BLEU score, suggesting a viable method for probing memorization in contaminated LLMs. Specifically, Bloomz achieved scores ≤50 in xxx→{asm, spa} and very low scores (≈0) in xxx→{bam, ewe, fon, kik, mri}. COMET scores generally mirrored the BLEU trends: medium to high BLEU scores corresponded to 0.8 COMET, and low BLEU scores to ≤0.5–0.65 COMET. In contrast, Llama showed little evidence of contamination, with reasonably high BLEU scores (30–40) appearing only in a few high-resource languages, and COMET scores exceeding 0.8 only when BLEU surpassed 15.

To determine whether Bloomz's high BLEU scores reflected genuine translation ability, the team tested it on the relatively low-resource directions eng→{mal, ory, tam}, using data from PMIndia and Mann-ki-Baat. Results showed near-zero BLEU on both datasets, confirming that Bloomz cannot translate these directions and providing further evidence of FLORES contamination. Looking at the pattern of contamination, a few languages (bam, ewe, fon, kik, and mri) exhibited “clean” columns, with BLEU ≈0 when on the target side, but no language remained entirely free from contamination when placed on the source side. Using back-translated sources generated by Llama, the researchers then tested translation directions into Tamil (tam) and observed that Bloomz's BLEU scores generally tracked the quality of Llama's back-translations. When the back-translation reached 20–50 BLEU, Bloomz yielded high scores (60 BLEU) in directions such as {vie, spa, fra, eng, zho}→tam, while back-translations at 10–20 BLEU gave moderate scores (45–50 BLEU). Even with back-translated sources close to 0 BLEU, Bloomz still produced 20–60 BLEU, indicating moderate to high recall of memorized targets and demonstrating that the exact source is not always necessary for recall.
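The “clean column” observation can be made concrete with a small sketch over a matrix of per-direction BLEU scores. Everything here is an assumption for illustration: the function `clean_languages`, the 1.0 BLEU threshold standing in for “≈0”, and the scores themselves are hypothetical and are not taken from the paper.

```python
def clean_languages(bleu, side, threshold=1.0):
    """Languages whose BLEU stays below `threshold` in every direction
    where they appear on the given side ("source" or "target").

    `bleu` maps (source_language, target_language) tuples to BLEU scores.
    """
    index = 0 if side == "source" else 1
    scores_by_language = {}
    for direction, score in bleu.items():
        scores_by_language.setdefault(direction[index], []).append(score)
    return sorted(
        language
        for language, scores in scores_by_language.items()
        if max(scores) < threshold
    )


# Hypothetical scores, for illustration only.
scores = {
    ("eng", "tam"): 60.0,
    ("fra", "tam"): 55.0,
    ("eng", "bam"): 0.2,
    ("fra", "bam"): 0.1,
}
clean_targets = clean_languages(scores, "target")
clean_sources = clean_languages(scores, "source")
```

With these made-up numbers, one target language comes out clean while no source language does, which mirrors the asymmetry described above: contamination shows up by target column rather than by source row.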

Memorization inflates machine translation performance scores

Scientists have demonstrated that the machine translation scores of large language models (LLMs) can be artificially inflated by memorization rather than reflecting genuine generalization. The researchers confirmed contamination in the Bloomz model with respect to the FLORES-200 benchmark, using the Llama model as an uncontaminated control. Their work establishes that the effect of this memorization can transfer across translation directions, boosting performance in previously unseen language pairs through target-side memorization. Further analysis revealed that recall of memorized references often persists even when the source text is modified through paraphrasing or named entity replacement.

However, replacing named entities consistently reduced BLEU scores, suggesting a viable method for identifying memorization within potentially contaminated models. The study also noted a discrepancy between BLEU and COMET scores, with COMET scores decreasing despite overall BLEU increases, potentially indicating that gains in BLEU are achieved at the expense of non-memorized samples. The authors acknowledge limitations including potential inflection differences in replaced named entities and the fact that their experiments, while simulating contamination, may not fully reflect the complexities of pre-training. Their work focused on 7-8 billion parameter models, and results may differ with varying model sizes. Future research could investigate the internal mechanisms driving cross-directional contamination and the activation of memorized text within these models. Practitioners are advised to verify contamination across multiple translation directions when evaluating LLMs on multiway parallel benchmarks to ensure reliable performance assessments.

👉 More information

🗞 When Flores Bloomz Wrong: Cross-Direction Contamination in Machine Translation Evaluation

🧠 ArXiv: https://arxiv.org/abs/2601.20858