The ability of artificial intelligence to interpret medical images and assist in diagnosis is rapidly evolving, but current evaluation methods often overlook how these systems arrive at their conclusions. Juntao Jiang, Jiangning Zhang, and Yali Bi, from Zhejiang University, alongside colleagues including Jinsheng Bai, Weixuan Liu, and Weiwei Jin from ZJPPH and ECNU, have addressed this critical need with the development of M3CoTBench. This new benchmark specifically assesses Chain-of-Thought (CoT) reasoning in Multimodal Large Language Models (MLLMs) as applied to medical image understanding. M3CoTBench moves beyond simply judging the accuracy of a diagnosis, instead focusing on the correctness, efficiency, impact, and consistency of the reasoning process itself. By providing a diverse and challenging dataset encompassing 24 examination types and 13 tasks, the researchers hope to drive the creation of more transparent, trustworthy, and ultimately, more effective AI diagnostic tools.

Chain-of-Thought (CoT) prompting aligns naturally with clinical thinking processes, yet current benchmarks typically focus solely on the final answer, neglecting the reasoning pathway.

This opacity makes it hard to establish a reliable basis for judging a model's output, and it limits how much these models can help doctors with diagnosis. To address this limitation, researchers introduced M3CoTBench, designed to evaluate the correctness, efficiency, impact, and consistency of CoT reasoning in medical image understanding. The benchmark features a diverse dataset with multiple difficulty levels, covering a range of medical imaging modalities and clinical scenarios, which allows a granular assessment of how well MLLMs can articulate a logical and clinically sound rationale for their conclusions.

The researchers anticipate that M3CoTBench will serve as a tool for driving progress in the development of more transparent and trustworthy AI systems for medical image analysis.

M3CoTBench: Evaluating Medical Image Reasoning Capabilities

M3CoTBench is a new benchmark designed to rigorously evaluate chain-of-thought (CoT) reasoning within multimodal large language models (MLLMs) applied to medical image understanding. The dataset comprises 1,079 image-question pairs, covering 24 examination types and 13 tasks of varying difficulty.

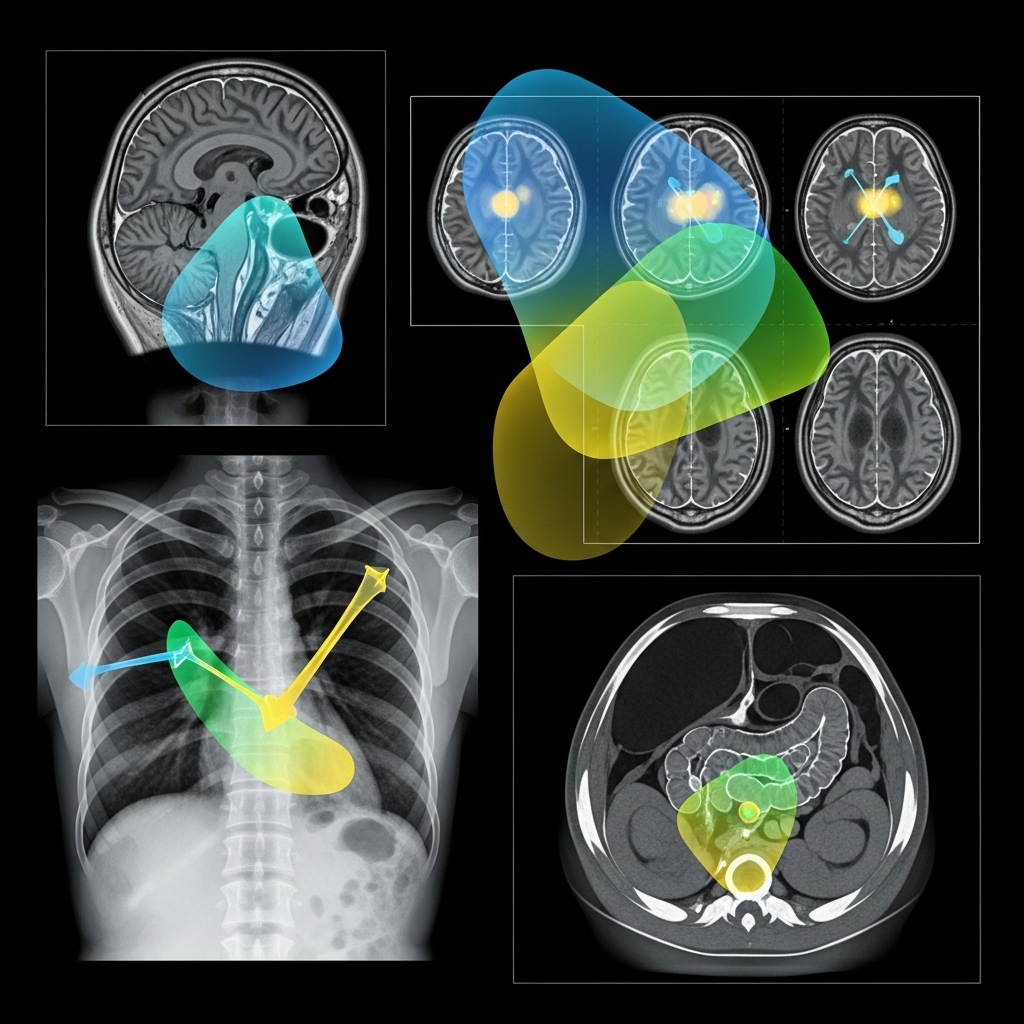

This comprehensive collection allows for systematic assessment of CoT reasoning across a broad spectrum of clinical imaging scenarios, ranging from basic perception to advanced medical reasoning. M3CoTBench incorporates four question formats (single-choice, multiple-choice, true/false, and short-answer) to challenge MLLMs' abilities in diverse ways. The dataset includes modalities such as slit lamp photography, fundus photography, X-ray, computed tomography, ultrasound, and microscopy, representing a wide range of diagnostic applications.

Tasks span from assessing image quality and recognising anatomical features to complex clinical reasoning like diagnosis, grading, and predicting potential outcomes. The team measured CoT reasoning performance using four key metrics: correctness, efficiency, impact, and consistency. Correctness assesses the accuracy of the reasoning steps, while efficiency quantifies the additional inference time required for CoT processing.

Impact measures the improvement in answer accuracy achieved through reasoning, and consistency evaluates the similarity of reasoning paths for similar tasks. This multifaceted evaluation suite provides a granular understanding of MLLM performance. A multi-stage human-AI collaborative verification process was employed to ensure high-quality annotations, establishing a robust framework for evaluating and improving the reliability and trustworthiness of MLLMs in healthcare.
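To make three of these metrics concrete, the following is a minimal Python sketch, not the benchmark's actual implementation. The article does not give the paper's formulas, so everything here is an assumption for illustration: the `Run` record layout, the use of a simple accuracy difference for impact, mean extra seconds for efficiency, and `difflib.SequenceMatcher` over step labels as a stand-in for reasoning-path similarity. Correctness of individual reasoning steps is left out because it depends on annotated reference steps.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from itertools import combinations
from statistics import mean


@dataclass
class Run:
    """One model response to one benchmark question (hypothetical layout)."""
    correct: bool      # did the final answer match the reference answer?
    seconds: float     # wall-clock inference time for this response
    steps: list[str]   # labels of the reasoning steps; empty for direct answers


def accuracy(runs):
    """Fraction of runs whose final answer was correct."""
    return mean(1.0 if r.correct else 0.0 for r in runs)


def impact(cot_runs, direct_runs):
    """Assumed impact: accuracy with CoT minus accuracy without it."""
    return accuracy(cot_runs) - accuracy(direct_runs)


def time_overhead(cot_runs, direct_runs):
    """Assumed efficiency measure: mean extra seconds per question under CoT."""
    return mean(r.seconds for r in cot_runs) - mean(r.seconds for r in direct_runs)


def path_consistency(cot_runs):
    """Assumed consistency proxy: mean pairwise similarity (0 to 1) of step
    sequences across runs that belong to the same task."""
    pairs = list(combinations(cot_runs, 2))
    if not pairs:
        return 1.0  # a single path is trivially consistent with itself
    return mean(SequenceMatcher(None, a.steps, b.steps).ratio() for a, b in pairs)


# Toy data: four questions from one task, answered with and without CoT.
direct = [Run(True, 1.0, []), Run(False, 1.0, []),
          Run(False, 1.0, []), Run(True, 1.0, [])]
full_path = ["modality", "finding", "diagnosis"]
cot = [Run(True, 3.0, full_path), Run(True, 3.0, full_path),
       Run(False, 3.0, ["modality", "diagnosis"]), Run(True, 3.0, full_path)]

print("impact:", impact(cot, direct))
print("overhead (s):", time_overhead(cot, direct))
print("consistency:", path_consistency(cot))
```

A positive impact means reasoning improved the final answers, while the overhead shows what that improvement costs in inference time. Reporting the two side by side is what lets a benchmark of this kind separate models that reason usefully from models that merely produce longer output.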

Multimodal Reasoning Evaluation for Medical Imaging

M3CoTBench moves beyond simple question answering by assessing the correctness, efficiency, impact, and consistency of the reasoning process itself, using a diverse dataset that spans 24 examination types and 13 tasks of varying difficulty.

The work demonstrates a systematic evaluation of current models, revealing limitations in their ability to generate reliable and clinically interpretable reasoning pathways. The significance of this work lies in its focus on transparency and trustworthiness in medical AI systems. By explicitly evaluating the reasoning steps, rather than solely the final answer, M3CoTBench facilitates the development of models that can better support clinical decision-making and provide a basis for judging diagnostic accuracy.

The authors acknowledge that the current benchmark primarily focuses on image understanding and doesn’t yet incorporate other crucial clinical data, such as patient history or lab results. Future research will concentrate on expanding the benchmark to include these additional modalities and exploring methods to improve the consistency and robustness of reasoning in MLLMs, ultimately aiming for more effective and reliable diagnostic tools.

👉 More information

🗞 M3CoTBench: Benchmark Chain-of-Thought of MLLMs in Medical Image Understanding

🧠 ArXiv: https://arxiv.org/abs/2601.08758