Researchers are tackling the persistent challenge of equipping large language models (LLMs) to handle complex, multi-turn interactions that demand planning, state tracking and sustained awareness of context. Amin Rakhsha of the University of Toronto, together with Thomas Hehn, Pietro Mazzaglia, Fabio Valerio Massoli, Arash Behboodi, Tribhuvanesh Orekondy and colleagues at Qualcomm AI Research, present LUMINA, a framework for pinpointing which of these underlying capabilities most limits progress. Using an ‘oracle counterfactual’ approach and a suite of carefully designed, tunable tasks, the team isolates the effect of perfect planning or perfect state tracking. They find that planning consistently improves performance, whereas the value of other skills depends strongly on the environment and on the model. The work offers insight into the bottlenecks facing interactive agents and a roadmap for future development of both language model architectures and training methods.


LLM Agent Skills Dissected via LUMINA Environments

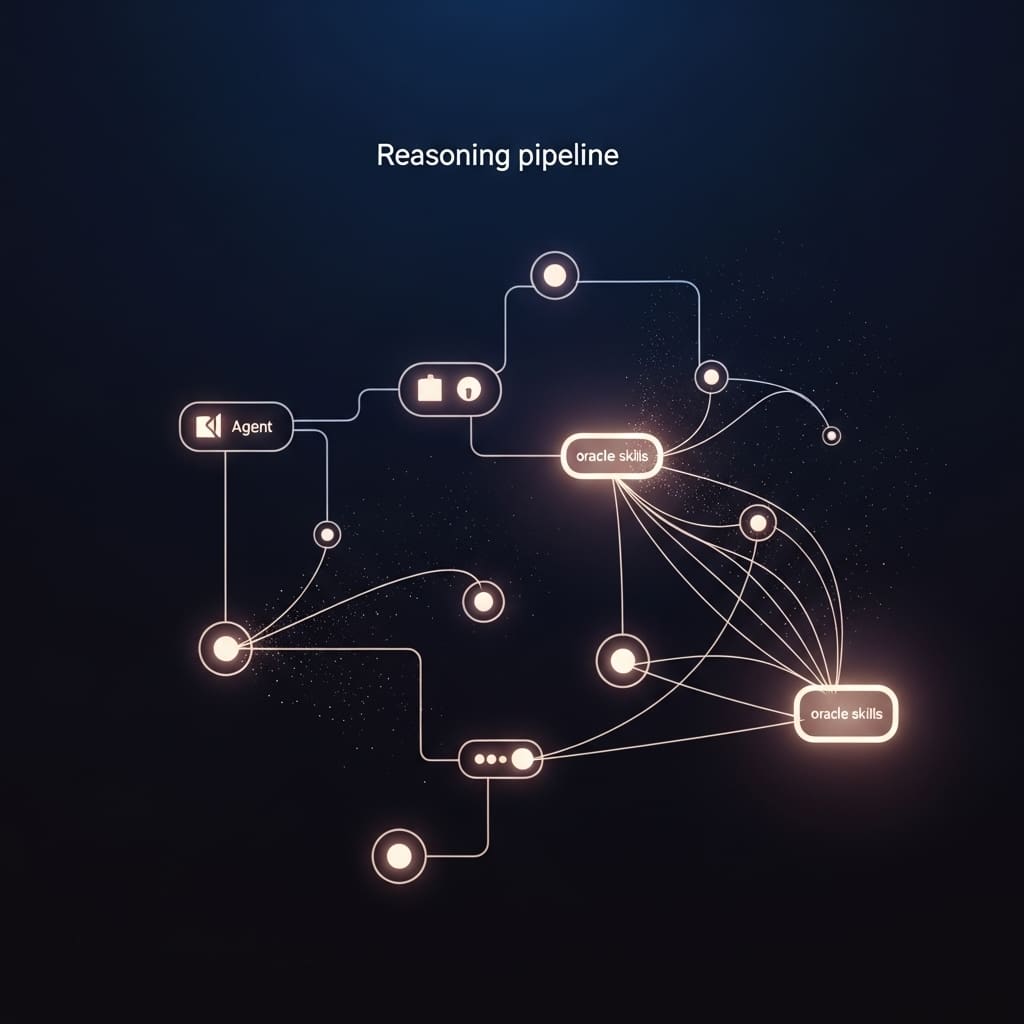

The researchers have built a framework that dissects the core skills large language models need to succeed in complex, multi-turn agentic tasks. The method asks a counterfactual question: how would an agent perform if it executed one specific skill, such as planning, perfectly? The team quantifies the impact of each such “oracle” skill as the change in success rate when the agent receives perfect assistance with that skill, for example with planning or state tracking. To make this possible, LUMINA uses three controlled environments, ListWorld, TreeWorld and GridWorld, which support oracle interventions at any step and provide exact ground truth for optimal actions and strategies.
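To make the oracle-counterfactual measurement concrete, here is a toy simulation, not the authors' code. It assumes a hypothetical agent whose planning and state-tracking steps each succeed independently with fixed probabilities, and that any single mistake ends the episode. An “oracle” intervention sets one of those probabilities to 1, and the skill's criticality is read off as the resulting change in success rate. The function names and probabilities are illustrative assumptions.

```python
import random


def run_episode(horizon: int, p_plan: float, p_state: float, rng: random.Random) -> bool:
    """Hypothetical agent: every turn needs a correct plan AND a correct state estimate."""
    for _ in range(horizon):
        if rng.random() >= p_plan or rng.random() >= p_state:
            return False  # toy assumption: one wrong step is unrecoverable
    return True


def success_rate(horizon: int, p_plan: float, p_state: float,
                 episodes: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the fraction of successful episodes."""
    rng = random.Random(seed)
    wins = sum(run_episode(horizon, p_plan, p_state, rng) for _ in range(episodes))
    return wins / episodes


def oracle_gain(horizon: int, p_plan: float, p_state: float, skill: str) -> float:
    """Change in success rate when `skill` is replaced by a perfect oracle."""
    base = success_rate(horizon, p_plan, p_state)
    if skill == "planning":
        with_oracle = success_rate(horizon, 1.0, p_state)
    elif skill == "state_tracking":
        with_oracle = success_rate(horizon, p_plan, 1.0)
    else:
        raise ValueError(f"unknown skill: {skill}")
    return with_oracle - base


if __name__ == "__main__":
    for skill in ("planning", "state_tracking"):
        print(skill, round(oracle_gain(20, p_plan=0.95, p_state=0.99, skill=skill), 3))
```

In this toy setting the weaker skill (planning, at 95% per step) yields the larger gain when made perfect. In LUMINA the oracle instead supplies ground-truth plans or states to a real LLM, so the gains come from experiment rather than from a closed-form model.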

This work clarifies the challenges inherent in multi-turn agentic environments and offers guidance for the future development of both AI agents and language models. The research reveals a large gap between high per-step accuracy and low success rates over long horizons, indicating that compounding errors are a major obstacle for LLM-based agents. Oracle interventions generally raise success rates, but their effectiveness depends on model size, with larger models benefiting more from improved skills. The study also shows that environments differ in character, so the same intervention can yield markedly different gains from one environment to another. These findings point to targeted research directions: exploiting environment-specific knowledge and tailoring skill development to maximise agent capabilities in complex, interactive scenarios. Beyond exposing LLM limitations, the work supplies a framework for evaluating and improving the core competencies of future AI agents.

Oracle Counterfactuals for Skill Criticality in LLMs

This part of the work examines how much performance improves when a model receives perfect assistance with a specific sub-skill, which quantifies how critical that skill is for further progress. The analysis focuses on ‘step accuracy’: a step counts as accurate if the agent’s action is optimal at that moment. Three environments serve as the test bed. ListWorld asks the agent to prune a Python list down to a target list using only the ‘pop(index)’ action; TreeWorld requires iteratively searching a tree to locate a node with a given value; and GridWorld asks the agent to navigate a grid from a start to a goal location within a limited number of turns.
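The step-accuracy definition can be sketched for a ListWorld-style task. This is a minimal illustration, not the paper's implementation, and it rests on an assumption the source does not state: the target is a subsequence of the starting list, so the optimal number of actions is simply the length difference. Under that assumption, a ‘pop(index)’ is optimal exactly when the target remains reachable afterwards. All function names here are hypothetical.

```python
from typing import List


def is_subsequence(target: List[int], items: List[int]) -> bool:
    """True if `target` can be obtained from `items` by deleting elements."""
    it = iter(items)
    return all(any(x == y for y in it) for x in target)


def optimal_pops(items: List[int], target: List[int]) -> int:
    """Remaining optimal actions T*, or -1 if the target is no longer reachable."""
    return len(items) - len(target) if is_subsequence(target, items) else -1


def is_optimal_pop(items: List[int], target: List[int], index: int) -> bool:
    """A pop is optimal if it keeps the target reachable, cutting T* by one."""
    if not 0 <= index < len(items) or optimal_pops(items, target) <= 0:
        return False  # invalid index, task already solved, or already unreachable
    remaining = items[:index] + items[index + 1:]
    return is_subsequence(target, remaining)


def step_accuracy(start: List[int], target: List[int], actions: List[int]) -> float:
    """Fraction of the agent's pop(index) actions that were optimal when taken."""
    items, correct = list(start), 0
    for index in actions:
        correct += is_optimal_pop(items, target, index)
        if 0 <= index < len(items):
            items.pop(index)  # the environment applies the action either way
    return correct / len(actions) if actions else 0.0
```

For example, pruning `[3, 1, 4, 1, 5]` to `[3, 4, 5]` with pops at indices 1 then 2 scores 1.0, while a pop at index 0 removes the needed 3 and every later step also counts as non-optimal. That is one way a single early mistake can drag down an entire trajectory.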

Each environment is formulated as a partially observable Markov decision process (POMDP), and the task is described to the agent in natural language. Because the tasks are newly generated and can be randomly re-generated, the team avoids data contamination. Because the underlying process is fully known, they can also construct faithful oracle interventions. Each episode has a finite turn budget, $T_{\max} \ge m\,T^{*} + n$, where $T^{*}$ is the optimal number of actions, $m$ scales that optimum and $n$ is an additive buffer. At each turn the environment returns only minimal feedback, such as ‘move successful’, so the agent must rely on its own reasoning to track state and select actions. This setup lets researchers pinpoint bottlenecks in multi-turn agents and guide the future development of both agents and language models.
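A hedged sketch of how the turn budget and minimal feedback might fit together in a GridWorld-like loop follows. The assumptions are illustrative only: an open grid with no obstacles (so the optimal number of actions is the Manhattan distance), four moves, example values $m = 2$ and $n = 5$, and a policy that sees nothing but the last feedback string, which mirrors the partial observability described above. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Pos = Tuple[int, int]
# Four-connected moves; any other action string is treated as a failed move.
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def turn_budget(t_star: int, m: int = 2, n: int = 5) -> int:
    """Smallest integer budget satisfying T_max >= m * T* + n (m, n illustrative)."""
    return m * t_star + n


@dataclass
class GridWorld:
    size: int
    start: Pos
    goal: Pos

    def optimal_steps(self) -> int:
        # No obstacles assumed, so the shortest path is the Manhattan distance.
        return abs(self.start[0] - self.goal[0]) + abs(self.start[1] - self.goal[1])

    def run(self, policy: Callable[[str], str], m: int = 2, n: int = 5) -> bool:
        """Roll out `policy`, which only ever sees the previous feedback string."""
        pos, feedback = self.start, "start"
        if pos == self.goal:
            return True
        for _ in range(turn_budget(self.optimal_steps(), m, n)):
            dr, dc = MOVES.get(policy(feedback), (0, 0))
            r, c = pos[0] + dr, pos[1] + dc
            if (dr, dc) != (0, 0) and 0 <= r < self.size and 0 <= c < self.size:
                pos, feedback = (r, c), "move successful"
            else:
                feedback = "move failed"  # off-grid or unknown action
            if pos == self.goal:
                return True
        return False  # turn budget exhausted
```

With `GridWorld(size=5, start=(0, 0), goal=(2, 3))`, the optimal path has 5 moves, so the budget is 15 turns. A policy that replays the correct five moves succeeds; one that keeps answering "left" only ever receives ‘move failed’ and runs out of turns.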

Compounding errors limit long-horizon task success

Experiments revealed a large gap between high per-step accuracy and low success rates over long trajectories: agents that chose the right action at most individual steps still frequently failed the task as a whole. The researchers identify this compounding of errors as a critical bottleneck in complex, multi-turn environments, reflecting how hard it is for an agent to stay coherent and recover from mistakes over many turns. The oracle interventions also let the team examine agent capabilities systematically along several dimensions, including task complexity and model size.
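A back-of-the-envelope calculation shows why high per-step accuracy can still produce low success rates. The sketch below makes a simplifying assumption that is not taken from the paper: errors are independent and a single wrong step is never recovered. Under that assumption, trajectory success is the per-step accuracy raised to the number of steps.

```python
def trajectory_success(step_accuracy: float, horizon: int) -> float:
    """P(every step is correct), assuming independent errors and no recovery."""
    return step_accuracy ** horizon


def required_step_accuracy(target_success: float, horizon: int) -> float:
    """Per-step accuracy needed to reach `target_success` over `horizon` steps."""
    return target_success ** (1.0 / horizon)


if __name__ == "__main__":
    for p in (0.99, 0.95, 0.90):
        print(p, [round(trajectory_success(p, t), 3) for t in (10, 50, 100)])
    print(round(required_step_accuracy(0.5, 50), 4))
```

Under these assumptions, 95% per-step accuracy gives only about a 7.7% success rate over 50 steps, and reaching 50% success over 50 steps requires roughly 98.6% accuracy at every step. Real agents can sometimes recover from mistakes, so this is a pessimistic bound rather than a prediction.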

Providing perfect planning boosted performance substantially, suggesting that planning plays a central role in navigating complex tasks. Because the environments are procedurally generated, they also admit exact oracle annotations, which avoids a key limitation of real-world benchmarks, where exhaustive analysis is often impractical. The work sheds light on the interplay between environment characteristics and model capabilities: the same oracle intervention yields different gains in different environments, and performance differs measurably between them. Overall, the findings paint a nuanced picture. Improving specific skills generally helps LLM-based agents, but fully closing the performance gap requires understanding specific to both the environment and the model. The paper also notes that on the AppWorld benchmark, Llama3-70B reaches a success rate of only 7.0%, compared with 30.2% for GPT-4o, illustrating the current limitations of open-weight models in complex multi-turn scenarios.

Oracle Interventions Reveal LLM Skill Limits

The researchers used “oracle” interventions, which simulate perfect performance of a specific skill, to measure how critical each skill is for improving LLM agents. Experiments with Gemma 3 and Qwen3 models, alongside GPT-4o, showed that history pruning (selectively removing irrelevant information from the interaction history) significantly benefits smaller models but can hurt larger ones. The best way to improve an LLM agent therefore depends on the model's size and on the characteristics of the task. The results also underline that near-perfect accuracy at every step is needed to keep errors from compounding over multi-turn interactions.
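History pruning can be pictured as rewriting the context before each model call. The source does not describe how the oracle decides what is irrelevant, so the sketch below is hypothetical. A caller-supplied predicate `is_relevant` stands in for the oracle, while the task prompt and the most recent `keep_last` turns are always retained. Messages are plain role/content dictionaries rather than any particular chat API.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}


def prune_history(
    history: List[Message],
    is_relevant: Callable[[Message], bool],
    keep_last: int = 2,
) -> List[Message]:
    """Drop turns judged irrelevant, always keeping the task prompt and recent turns.

    `is_relevant` is a stand-in for an oracle; here it is any user-supplied predicate.
    """
    if not history:
        return []
    task, turns = history[0], history[1:]
    cutoff = max(len(turns) - keep_last, 0)  # turns at or after this index are kept
    kept = [msg for i, msg in enumerate(turns) if i >= cutoff or is_relevant(msg)]
    return [task] + kept
```

The trade-off reported above shows up directly in this design. A shorter context is easier for a small model to use, but whatever the predicate discards is no longer available to a larger model that could have exploited it.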

This work represents an initial step towards explaining the performance gap between LLMs on single-turn reasoning versus complex, multi-turn agent tasks. The authors acknowledge limitations stemming from reliance on prompt-based mechanisms to elicit agent behaviour and the imperfect nature of instruction following in LLMs. Furthermore, the analysis was conducted on simplified, programmable environments, making direct application to real-world scenarios challenging. Future research could explore post-training methods to refine prompts and develop an “instruction following” oracle to strengthen the analysis. The team believes such analyses are crucial for mitigating potential risks associated with faulty or malicious autonomous agentic systems.

👉 More information

🗞 LUMINA: Long-horizon Understanding for Multi-turn Interactive Agents

🧠 ArXiv: https://arxiv.org/abs/2601.16649