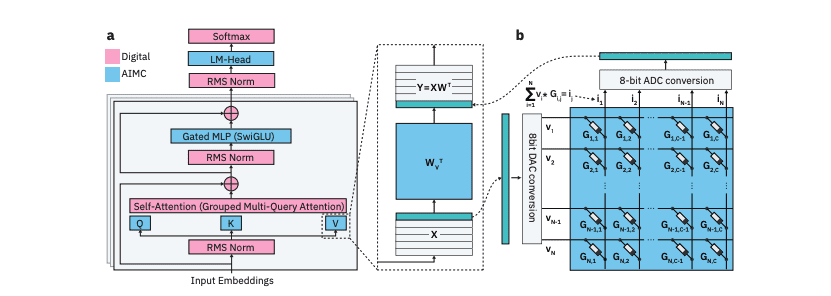

Large language models (LLMs) typically lose accuracy when deployed on analog in-memory computing (AIMC) hardware because of its inherent noise and low-precision constraints. Researchers have developed a scalable method to adapt models like Phi-3 and Llama-3 for AIMC, retaining performance comparable to baselines with 4-bit weights and 8-bit activations. The same training methodology also helps quantize LLMs for low-precision digital hardware and improves test-time scaling, offering a pathway to more energy-efficient foundation models. Code is available online.

The pursuit of energy-efficient artificial intelligence is increasingly focused on neuromorphic computing architectures, in particular analog in-memory computing (AIMC), which performs computation directly in the memory arrays that store the model weights and so avoids the costly data movement that limits traditional von Neumann systems. However, deploying large language models (LLMs) on such hardware is difficult because of its inherent noise and the strict low-precision quantization it imposes on the data. Researchers at IBM Research – Zurich and Almaden, alongside ETH Zürich and the Thomas J. Watson Research Center, have developed a scalable method to adapt LLMs for execution on these constrained systems while retaining performance comparable to higher-precision baselines. The work, detailed in the paper ‘Analog Foundation Models’, is by Julian Büchel, Iason Chalas, Giovanni Acampa, An Chen, Omobayode Fagbohungbe, Sidney Tsai, Kaoutar El Maghraoui, Manuel Le Gallo, Abbas Rahimi, and Abu Sebastian.

Enabling Energy-Efficient Inference: Adapting Large Language Models for In-Memory Computing

Researchers demonstrate a scalable methodology for adapting large language models (LLMs) to run effectively on analog in-memory computing (AIMC) hardware, overcoming the challenges posed by its inherent noise and low-precision quantization. The result is a step toward energy-efficient inference, which could make it practical to deploy powerful AI models on resource-constrained devices and reduce the environmental impact of artificial intelligence. The study shows that models such as Phi-3-mini-4k-instruct and Llama-3.2-1B-instruct, when deployed on constrained AIMC systems, maintain performance comparable to that of baselines using 4-bit weights and 8-bit activations. This addresses a critical need for efficient AI hardware, as traditional computing architectures struggle to keep pace with the growing demands of increasingly complex models.

The core of this advance is a scalable training approach that actively mitigates the impact of hardware imperfections, a departure from previous work that largely focused on smaller, vision-based models. The researchers extended the approach to LLMs pre-trained on trillions of tokens (the basic units of text a model processes), demonstrating that it applies to significantly larger and more complex models. The training process not only recovers accuracy lost to quantization and noise but also makes it easier to quantize foundation models for deployment on conventional low-precision digital hardware, broadening the potential impact of the research. Quantizing a model reduces its memory footprint and computational demands, making it accessible to a wider range of applications.
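The article does not spell out how the training mitigates hardware imperfections. One widely used ingredient of hardware-aware training in general is to inject random noise into the weights during the forward pass; the usual motivation is that the optimizer then settles on weights whose outputs still hold up when perturbed. The Python sketch below illustrates that general idea on a single linear layer. The Gaussian noise model, the per-row noise scaling, and the names `noisy_linear` and `train_step` are assumptions for illustration, not the authors' implementation.

```python
import random


def noisy_linear(x, weights, noise_std=0.02, rng=None):
    """Compute y = W x with Gaussian noise added to every weight.

    The noise standard deviation is noise_std times the largest absolute
    weight in each output row. Both the Gaussian model and this per-row
    scaling are illustrative assumptions, not the paper's noise model.
    """
    rng = rng or random.Random()
    outputs = []
    for row in weights:
        w_max = max(abs(w) for w in row) or 1.0  # avoid a zero noise scale
        # Fresh noise on every call, mimicking a read of an imperfect analog array.
        noisy_row = [w + rng.gauss(0.0, noise_std * w_max) for w in row]
        outputs.append(sum(w * xi for w, xi in zip(noisy_row, x)))
    return outputs


def train_step(x, target, weights, lr=0.1, noise_std=0.02, rng=None):
    """One SGD step on squared error, with the error measured through the noisy layer.

    The noise is treated as a constant, so dL/dW[i][j] = 2 * err[i] * x[j],
    and the update is applied in place to the clean (noise-free) weights.
    """
    y = noisy_linear(x, weights, noise_std, rng)
    errors = [yi - ti for yi, ti in zip(y, target)]
    for i, row in enumerate(weights):
        for j in range(len(row)):
            row[j] -= lr * 2.0 * errors[i] * x[j]
    return sum(e * e for e in errors)


# Toy usage: fit a single output to the target 1.0 while the weights are noisy.
rng = random.Random(0)
W = [[0.0, 0.0]]
for _ in range(200):
    train_step([1.0, 0.5], [1.0], W, lr=0.1, noise_std=0.02, rng=rng)
clean_output = sum(w * xi for w, xi in zip(W[0], [1.0, 0.5]))
print(clean_output)
```

Setting `noise_std=0.0` recovers an ordinary linear layer, which makes the noisy path easy to check against the exact result.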

The research also reveals an unexpected benefit: models trained with this approach show better test-time scaling behaviour than models trained with standard 4-bit weight and 8-bit static input quantization. Test-time scaling does not change the model's weights; instead, it spends additional computation at inference, for example by generating several candidate answers and selecting among them, in order to improve accuracy. Better scaling means the analog foundation models gain more from a larger inference budget, giving deployments a way to trade extra inference compute for accuracy. The finding suggests that hardware-aware training can bring benefits beyond robustness to hardware imperfections.
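To make test-time scaling concrete, the sketch below shows one common form of it, majority voting (also called self-consistency): draw several answers from a stochastic model and return the most frequent one. The toy model, its 60% per-sample accuracy, and all function names are hypothetical, and this is not necessarily the test-time scaling scheme evaluated in the paper.

```python
import random
from collections import Counter


def majority_vote(sample_answer, n_samples, rng):
    """Return the most frequent answer among n_samples stochastic generations.

    `sample_answer` stands in for one sampled model generation (hypothetical);
    spending more test-time compute simply means a larger n_samples.
    """
    answers = [sample_answer(rng) for _ in range(n_samples)]
    answer, _count = Counter(answers).most_common(1)[0]
    return answer


def toy_model(rng, p_correct=0.6):
    """Hypothetical model: right ("42") with probability p_correct, else one of two wrong answers."""
    if rng.random() < p_correct:
        return "42"
    return rng.choice(["41", "43"])


def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of trials in which majority voting returns the right answer."""
    rng = random.Random(seed)
    hits = sum(majority_vote(toy_model, n_samples, rng) == "42" for _ in range(trials))
    return hits / trials


for n in (1, 3, 9):
    print(n, accuracy(n))
```

Because each sample is right more often than not and the errors are spread over several wrong answers, accuracy climbs as more samples are drawn; how steeply it climbs is what "scaling behaviour" refers to.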

The study also documents its ethical and methodological considerations, with an emphasis on transparency and reproducibility. The researchers released their code under the MIT License to encourage further investigation and collaboration within the AI community, and they acknowledge and cite the existing assets they build on. The work does not rely on crowdsourcing or human subjects, and large language models were used only minimally in the research methodology itself.

The study’s success hinges on quantizing LLMs without sacrificing performance, a challenging task because these models are sensitive to precision loss. Quantization reduces the number of bits used to represent model parameters (weights) and activations, which decreases memory usage and computational demands, but done carelessly it can cause a significant loss of accuracy. The researchers' training strategy limits this loss by calibrating the quantization process and incorporating hardware-aware regularization techniques, so that LLMs can run on devices with limited memory and computational resources while keeping performance comparable to the baselines.
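The pure-Python sketch below illustrates the 4-bit weight and 8-bit static input (W4A8) quantization referred to in this article: symmetric uniform quantization with one scale per weight row and a fixed, pre-chosen input range. It uses "fake" quantization (values are rounded to the integer grid and rescaled back to floats), a common way to simulate low precision during training. The function names, the per-row scaling, and the 1-billion-parameter figure used with the memory helper are illustrative assumptions rather than details taken from the paper.

```python
def quantize_symmetric(values, n_bits, max_abs):
    """Symmetric uniform fake quantization: map to an integer grid, then rescale.

    The grid is {-q_max, ..., q_max} with q_max = 2**(n_bits - 1) - 1, and
    values outside [-max_abs, max_abs] are clipped to the nearest end.
    """
    q_max = 2 ** (n_bits - 1) - 1
    scale = max_abs / q_max if max_abs > 0 else 1.0
    quantized = []
    for v in values:
        q = round(v / scale)              # nearest integer level
        q = max(-q_max, min(q_max, q))    # clip to the representable range
        quantized.append(q * scale)       # back to real values ("fake" quantization)
    return quantized


def quantize_weights_per_row(weights, n_bits=4):
    """4-bit weights with one scale per output row (per-channel quantization)."""
    return [quantize_symmetric(row, n_bits, max(abs(w) for w in row)) for row in weights]


def quantize_input_static(x, input_range, n_bits=8):
    """8-bit inputs using a fixed range chosen before deployment (static quantization)."""
    return quantize_symmetric(x, n_bits, input_range)


def w4a8_linear(x, weights, input_range):
    """Linear layer y = W x computed with 4-bit weights and 8-bit static inputs."""
    wq = quantize_weights_per_row(weights)
    xq = quantize_input_static(x, input_range)
    return [sum(w * xi for w, xi in zip(row, xq)) for row in wq]


def weight_memory_gb(n_params, bits):
    """Weight storage in gigabytes, ignoring scales and other overheads."""
    return n_params * bits / 8 / 1e9


W = [[0.9, -0.3, 0.1], [-2.0, 0.5, 1.0]]
x = [0.5, -0.25, 1.0]
exact = [sum(w * xi for w, xi in zip(row, x)) for row in W]
print(exact, w4a8_linear(x, W, input_range=1.0))
```

For a hypothetical 1-billion-parameter model, `weight_memory_gb(1e9, 16)` returns 2.0 and `weight_memory_gb(1e9, 4)` returns 0.5, a fourfold reduction in weight storage before counting the per-row scales.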

The research team evaluated the trained models on a variety of benchmark datasets, showing that they maintain high accuracy and efficiency across different tasks. Compared with full-precision models, the quantized models lose little accuracy, especially when quantization is combined with the hardware-aware training strategy. These results support the effectiveness of the methodology for efficient LLM inference on resource-constrained devices. The team also ran extensive ablation studies to isolate the contribution of each component of the training strategy.

Future work should explore how well this training methodology generalizes to a wider range of LLM architectures and hardware platforms. Pushing quantization toward even lower precision could unlock further energy savings, but it requires careful weighing of accuracy against efficiency. Adaptive quantization techniques, in which precision is adjusted dynamically based on the input data, may also yield additional gains by preserving precision for critical parts of the input and reducing it for less important ones.
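Adaptive schemes can take several forms. One simple, well-known relative is dynamic input quantization, where the bit width stays fixed but the quantization range is recomputed for each input instead of being calibrated once in advance (static). The sketch below contrasts the two using a hypothetical calibrated range of 4.0; it illustrates the general trade-off and is not a method proposed in the paper.

```python
def fake_quant(values, n_bits, max_abs):
    """Symmetric uniform quantization to n_bits, clipped to [-max_abs, max_abs]."""
    q_max = 2 ** (n_bits - 1) - 1
    scale = max_abs / q_max if max_abs > 0 else 1.0
    return [max(-q_max, min(q_max, round(v / scale))) * scale for v in values]


def static_quant(x, calibrated_range, n_bits=8):
    """Static: one range for every input, fixed before deployment."""
    return fake_quant(x, n_bits, calibrated_range)


def dynamic_quant(x, n_bits=8):
    """Dynamic: the range is recomputed from each input vector at run time."""
    return fake_quant(x, n_bits, max(abs(v) for v in x))


def max_error(x, xq):
    """Largest absolute difference between the original and quantized vectors."""
    return max(abs(a - b) for a, b in zip(x, xq))


CALIBRATED_RANGE = 4.0                 # hypothetical range from offline calibration
small = [0.01, -0.02, 0.015, 0.005]   # far below the calibrated range
large = [1.0, -9.0, 2.5, 0.3]         # contains an outlier beyond it

for name, x in [("small", small), ("large", large)]:
    print(name,
          "static:", round(max_error(x, static_quant(x, CALIBRATED_RANGE)), 4),
          "dynamic:", round(max_error(x, dynamic_quant(x)), 4))
```

On these inputs the static range spends most of its resolution on values the small vector never reaches and clips the outlier in the large one, while the dynamic range keeps both errors small. The cost is an extra pass over each input at run time to find its range, which static quantization avoids.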

In conclusion, this research is a significant step toward energy-efficient inference for large language models. By developing a training methodology that accounts for hardware characteristics and minimizes quantization errors, the researchers have shown that powerful AI models can be deployed on resource-constrained devices. The approach could support a wider range of AI-powered applications and help meet the growing demand for sustainable and efficient computing. Continued work on adaptive quantization techniques, specialized hardware accelerators, and robust training strategies should further improve its performance and reach.

👉 More information

🗞 Analog Foundation Models

🧠 DOI: https://doi.org/10.48550/arXiv.2505.09663