Hyperdimensional computing offers a promising pathway to efficient artificial intelligence on resource-constrained devices, but its standard design, which stores one hypervector per class, demands substantial memory. Sanggeon Yun of the University of California, Irvine, Hyunwoo Oh, and Ryozo Masukawa, alongside colleagues from Intel Corporation and the United States Military Academy, now present LogHD, a compression technique that sharply reduces memory requirements without sacrificing accuracy. The method replaces individual class representations with a smaller set of compact “bundle hypervectors”, shrinking the model while maintaining performance and even improving resilience to data corruption. The team shows that LogHD achieves competitive accuracy with smaller models and tolerates significantly higher bit-flip error rates, while an initial hardware implementation delivers substantial gains in energy efficiency and speed over existing systems.

High-Dimensional Vectors for Pattern Recognition

This research explores hyperdimensional computing (HDC), a biologically inspired computing paradigm that represents and processes data as high-dimensional vectors (hypervectors). HDC encodes information as patterns distributed across these vectors, measures similarity between them with cosine similarity, and performs computation through simple vector operations. The approach offers potential advantages in energy efficiency, robustness to noise, and parallelism, which makes it attractive for learning and pattern recognition. The team addressed challenges of scalability, energy efficiency, and reliability in HDC systems, optimizing them for real-world deployment through techniques such as compression, quantization, and sparsity.
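
To make these operations concrete, here is a minimal sketch of a generic HDC classifier in plain Python. Features are encoded into bipolar hypervectors by random projection, each class hypervector is the bundled (summed) encoding of its training samples, and a query is assigned to the class with the highest cosine similarity. The encoder, the dimensionality `D`, the toy Gaussian data, and the function names (`encode`, `bundle`, `train`, `classify`) are illustrative assumptions, not the methods or datasets used in the work described here.

```python
import math
import random

D = 2000  # hypervector dimensionality (illustrative; HDC often uses thousands)

def random_bipolar(dim, rng):
    """Random hypervector with i.i.d. entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(dim)]

def encode(features, projection):
    """Random-projection encoding: weight each feature's base hypervector
    by the feature value, sum them, and binarize the sum by sign."""
    acc = [0.0] * len(projection[0])
    for x, base in zip(features, projection):
        for i, b in enumerate(base):
            acc[i] += x * b
    return [1 if a >= 0 else -1 for a in acc]

def bundle(hvs):
    """Bundling (superposition): element-wise sum of hypervectors."""
    return [sum(col) for col in zip(*hvs)]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def train(samples, labels, projection):
    """One class hypervector per label: the bundle of its encoded samples."""
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(encode(x, projection))
    return {y: bundle(hvs) for y, hvs in by_class.items()}

def classify(features, class_hvs, projection):
    """Predict the class whose hypervector is most cosine-similar to the query."""
    q = encode(features, projection)
    return max(class_hvs, key=lambda y: cosine(q, class_hvs[y]))

# Toy data: three Gaussian clusters in a 10-dimensional feature space.
rng = random.Random(0)
n_features, n_classes = 10, 3
projection = [random_bipolar(D, rng) for _ in range(n_features)]
centers = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in range(n_classes)]

def sample(c):
    return [m + rng.gauss(0, 0.2) for m in centers[c]]

train_y = [c for c in range(n_classes) for _ in range(20)]
class_hvs = train([sample(c) for c in train_y], train_y, projection)

test_y = [c for c in range(n_classes) for _ in range(10)]
accuracy = sum(classify(sample(c), class_hvs, projection) == c for c in test_y) / len(test_y)
print(f"accuracy: {accuracy:.2f}")
```

Note that this baseline stores one full-length hypervector per class, which is exactly the memory cost that class-axis compression targets.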

They also investigated algorithm-hardware co-optimization to maximize performance on specific platforms, exploring sparse vector creation, quantization frameworks, and model compression. The team evaluated their methods on benchmark datasets, including speech recognition data (ISOLET), human activity recognition data (UCI and PAMAP2), and page block classification data. Their work highlights the importance of energy-efficient computing, robustness to noise, scalability, and hardware acceleration in HDC systems, ultimately aiming to enable HDC on resource-constrained edge devices for applications such as classification, pattern recognition, and reasoning.
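
Sparsity and quantization, two of the techniques mentioned above, can be illustrated on a single class hypervector. The sketch below keeps only the largest-magnitude entries (top-k sparsification) and maps values to signed b-bit integers with one shared scale factor (uniform symmetric quantization). These are generic textbook versions chosen for illustration; the helper names and the sample vector are hypothetical, and the specific sparsity and quantization frameworks in the surveyed work may differ.

```python
def sparsify_topk(hv, density):
    """Top-k sparsification: keep the round(density * D) largest-magnitude
    entries of the hypervector and set all other entries to zero."""
    keep = max(1, round(density * len(hv)))
    kept = set(sorted(range(len(hv)), key=lambda i: abs(hv[i]), reverse=True)[:keep])
    return [v if i in kept else 0.0 for i, v in enumerate(hv)]

def quantize_symmetric(hv, bits):
    """Uniform symmetric quantization to signed integers in [-qmax, qmax],
    with qmax = 2**(bits - 1) - 1. Returns the integer codes and the scale;
    code * scale approximately recovers each original value."""
    if bits < 2:
        raise ValueError("bits must be at least 2 for symmetric quantization")
    qmax = 2 ** (bits - 1) - 1
    peak = max(abs(v) for v in hv) or 1.0  # avoid dividing by zero for an all-zero vector
    scale = peak / qmax
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in hv]
    return codes, scale

# Hypothetical 8-dimensional class hypervector (real ones have thousands of entries).
hv = [3.0, -1.0, 0.5, -4.0, 2.0, 0.0, -0.5, 1.5]
sparse = sparsify_topk(hv, density=0.5)
codes, scale = quantize_symmetric(hv, bits=4)
print("sparse:", sparse)
print("4-bit codes:", codes, "scale:", round(scale, 4))
```

Both transforms shrink storage along the feature axis (fewer nonzero entries, fewer bits per entry) while keeping one vector per class.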

Compact Hypervectors Preserve Robustness and Performance

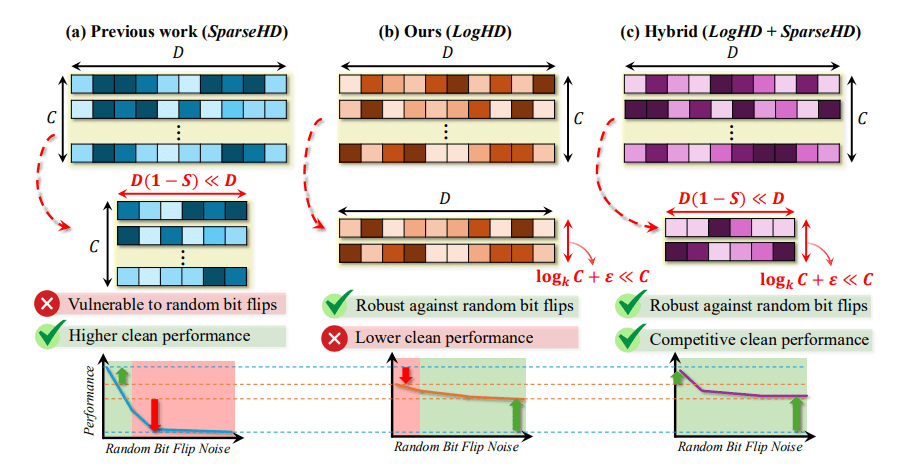

Researchers developed LogHD, a hyperdimensional computing approach that replaces the traditional per-class hypervectors with compact bundle representations, substantially reducing memory requirements while preserving accuracy and resilience. Experiments show that LogHD performs comparably to conventional hyperdimensional computing methods, even at reduced dimensionality. A hybrid approach that combines LogHD with sparse hypervectors offers a tunable balance between performance and resilience.
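
The idea of trading C per-class hypervectors for a logarithmic number of bundles can be sketched with binary class codes. In this hypothetical construction (an assumption for illustration, not necessarily the paper's exact algorithm), each of C classes receives a distinct ±1 codeword of length ⌈log2 C⌉, bundle j is the sum of all class prototypes signed by bit j of their codewords, and a query is decoded by scoring it against every bundle and picking the class whose codeword best matches the resulting score vector. Random bipolar vectors stand in for trained class hypervectors, and all names are hypothetical.

```python
import random

D = 4000  # hypervector dimensionality (illustrative)
C = 10    # number of classes (illustrative)

def random_bipolar(dim, rng):
    """Random hypervector with i.i.d. entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(dim)]

def class_codes(n_classes):
    """Assign class c the +/-1 codeword given by the bits of c.
    Length is ceil(log2(C)), computed with integer bit_length()."""
    n_bits = max(1, (n_classes - 1).bit_length())
    return [[1 if (c >> j) & 1 else -1 for j in range(n_bits)] for c in range(n_classes)]

def build_bundles(prototypes, codes):
    """Bundle j = sum over classes of (bit j of the class codeword) * prototype."""
    n_bits = len(codes[0])
    bundles = [[0] * len(prototypes[0]) for _ in range(n_bits)]
    for proto, code in zip(prototypes, codes):
        for j, sign in enumerate(code):
            row = bundles[j]
            for i, v in enumerate(proto):
                row[i] += sign * v
    return bundles

def predict(query, bundles, codes):
    """Score the query against every bundle (dot product), then return the
    class whose codeword correlates best with that score vector."""
    scores = [sum(q * b for q, b in zip(query, bundle)) for bundle in bundles]
    return max(range(len(codes)),
               key=lambda c: sum(s * k for s, k in zip(scores, codes[c])))

rng = random.Random(0)
prototypes = [random_bipolar(D, rng) for _ in range(C)]  # stand-ins for trained class hypervectors
codes = class_codes(C)
bundles = build_bundles(prototypes, codes)

def noisy(hv, flip_rate):
    """Copy of hv with each entry's sign flipped with probability flip_rate."""
    return [-v if rng.random() < flip_rate else v for v in hv]

trials = [(c, noisy(prototypes[c], 0.2)) for c in range(C) for _ in range(5)]
accuracy = sum(predict(q, bundles, codes) == c for c, q in trials) / len(trials)
print(f"stored hypervectors: {len(prototypes)} -> {len(bundles)}, accuracy: {accuracy:.2f}")
```

With 10 classes, only 4 full-length bundles are stored instead of 10 prototypes. Because the random stand-in prototypes are nearly orthogonal, cross-talk between classes is small here; trained class hypervectors are usually more correlated, so this toy likely overstates how cleanly a real model decodes.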

The researchers implemented LogHD in a dedicated application-specific integrated circuit (ASIC) and compared its efficiency with conventional hyperdimensional computing on a CPU and a GPU. The LogHD ASIC achieved a 4.06-fold increase in energy efficiency and a 2.19-fold speedup over a sparse hyperdimensional computing ASIC, and it significantly outperformed both the CPU and GPU baselines. The study also examined the effect of alphabet size, showing that a larger alphabet improves accuracy, particularly in noisy conditions. Finally, reducing dimensionality along the class axis preserves robustness predictably, whereas reducing it along the feature axis makes behavior under faults less predictable, suggesting that LogHD is a promising route to energy-efficient and robust hyperdimensional computing systems.
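
The memory side of the alphabet-size trade-off can be made concrete with simple arithmetic. Assuming, as the paper's title suggests, that LogHD stores about ⌈log_k C⌉ full-length bundle hypervectors for C classes and an alphabet of size k, the sketch below counts bundles for a few alphabet sizes and computes the per-class dimensionality a feature-axis scheme would have to shrink to in order to fit the same memory budget. The 26-class setting mirrors ISOLET's 26 spoken letters, while D = 10,000 and the function names are illustrative assumptions.

```python
def bundles_needed(n_classes, alphabet):
    """Smallest n with alphabet**n >= n_classes, i.e. ceil(log_k C), using
    integer arithmetic to avoid floating-point rounding in math.log."""
    if alphabet < 2:
        raise ValueError("alphabet size must be at least 2")
    n, capacity = 0, 1
    while capacity < n_classes:
        n += 1
        capacity *= alphabet
    return max(n, 1)

def feature_axis_dim(n_classes, dim, n_bundles):
    """Per-class dimensionality a feature-axis scheme (C vectors, shrunken D)
    would need to fit in the same memory as n_bundles full-length vectors."""
    return dim * n_bundles // n_classes

C, D = 26, 10000  # 26 classes as in ISOLET; D is an illustrative choice
print(f"baseline: {C} class hypervectors x {D} dims")
for k in (2, 3, 4, 6):
    n = bundles_needed(C, k)
    print(f"k={k}: {n} bundles, {C / n:.1f}x fewer stored vectors, "
          f"feature-axis equivalent D={feature_axis_dim(C, D, n)}")
```

This only accounts for storage; it says nothing by itself about the accuracy and robustness effects reported above.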

LogHD Compression Boosts Efficiency and Resilience

The team presents LogHD, a compression scheme for hyperdimensional computing that significantly reduces both memory requirements and computational load while keeping the hypervector dimensionality unchanged. By replacing many per-class hypervectors with a more compact representation, LogHD remains robust to device faults and quantization errors and stays flexible even when resources are limited. Experiments across diverse datasets show that LogHD sustains accuracy at bit-flip rates up to three times higher than those tolerated by conventional feature-axis compression. The researchers also implemented LogHD in an ASIC, which delivers up to 498-fold higher energy efficiency and a 63-fold speedup over CPU baselines, establishing class-axis compaction as a scalable, hardware-friendly strategy for robust, memory-centric machine learning. The authors note that these gains depend on the specific hardware implementation and may vary across device technologies; future work will likely explore the limits of compression and further optimize efficiency and scalability.
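
Bit-flip robustness experiments typically corrupt the stored model by flipping each memory bit independently with some probability. The sketch below applies that fault model to a bipolar hypervector stored at one bit per dimension; for a flip rate p, the cosine similarity between the original and corrupted vectors should come out near 1 - 2p. The storage layout, the fault model, and the helper names are assumptions for illustration; the article does not specify the exact fault-injection protocol used to evaluate LogHD.

```python
import random

def flip_bits(words, width, rate, rng):
    """Independently flip each of the `width` low bits of every stored word
    with probability `rate` (a simple uniform bit-flip fault model)."""
    corrupted = []
    for w in words:
        for b in range(width):
            if rng.random() < rate:
                w ^= 1 << b
        corrupted.append(w)
    return corrupted

def to_bits(hv):
    """Store a bipolar hypervector at one bit per dimension (+1 -> 1, -1 -> 0)."""
    return [1 if v > 0 else 0 for v in hv]

def from_bits(bits):
    """Decode stored bits back to a bipolar hypervector."""
    return [1 if b else -1 for b in bits]

def cosine_bipolar(a, b):
    """Cosine similarity of two equal-length bipolar vectors (each has norm sqrt(D))."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

rng = random.Random(1)
D = 10000
hv = [rng.choice((-1, 1)) for _ in range(D)]
results = {}
for rate in (0.01, 0.05, 0.1, 0.2):
    corrupted = from_bits(flip_bits(to_bits(hv), 1, rate, rng))
    results[rate] = cosine_bipolar(hv, corrupted)
    print(f"flip rate {rate:.2f}: cosine {results[rate]:.3f} (expected about {1 - 2 * rate:.2f})")
```

The same `flip_bits` helper applies to multi-bit quantized words by setting `width` to the word size; a flip in a high-order bit then perturbs a stored value far more than a flip in a low-order bit.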

👉 More information

🗞 LogHD: Robust Compression of Hyperdimensional Classifiers via Logarithmic Class-Axis Reduction

🧠 ArXiv: https://arxiv.org/abs/2511.03938