Optimizing code performance remains a central challenge in software engineering, particularly for large-scale production systems, and recent advances in large language models (LLMs) raise the question of whether such optimization can be automated. Xinyi He from Xi’an Jiaotong University, Qian Liu from TikTok, and Mingzhe Du from the National University of Singapore, along with colleagues, investigate whether LLMs can optimize code at the level of entire software repositories. Their work introduces SWE-Perf, the first benchmark designed to rigorously evaluate LLMs on authentic code performance optimization tasks, built from performance-improving contributions to popular GitHub projects. The results reveal a significant gap between current LLMs and human experts, pinpointing the areas where future development is most needed.

Evaluating LLMs on Software Performance Improvement

Code performance is critical to modern software, directly affecting system efficiency and user experience. While LLMs excel at generating and fixing code, their ability to improve the performance of existing code, particularly across entire software repositories, remains largely unexplored. Current benchmarks primarily assess code correctness or isolated function-level optimization. They fail to capture real-world performance enhancements, which often require coordinated changes across multiple files and modules. To address this gap, the researchers introduced SWE-Perf, a benchmark designed to assess how well LLMs can optimize code performance within authentic software repositories.

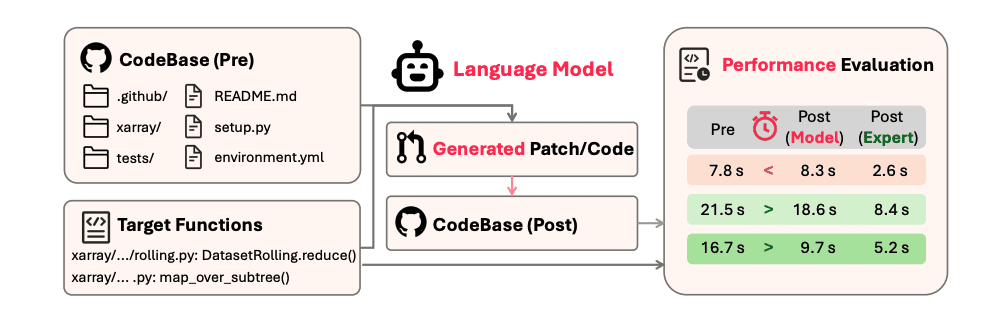

Unlike existing benchmarks, SWE-Perf measures improvements to code that already works. It evaluates how effectively LLMs can make that code more efficient, rather than merely making it correct. The benchmark comprises 140 curated examples, each derived from an actual performance-improving change in a popular GitHub repository. Each example includes the original codebase, the functions targeted for optimization, performance-related tests that measure the improvement, and the expert-authored patch that was originally implemented. This enables a direct comparison between LLM-generated optimizations and those written by human experts.
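To make the four components concrete, here is a minimal Python sketch of how one benchmark instance could be held in memory. The class name `PerfInstance`, its field names, and the sample values are hypothetical illustrations, not SWE-Perf's actual data schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerfInstance:
    """One benchmark example. Hypothetical schema, not SWE-Perf's official format."""

    repo: str                           # GitHub repository the example comes from
    base_commit: str                    # snapshot of the original, unoptimized codebase
    target_functions: tuple[str, ...]   # functions the expert patch made faster
    performance_tests: tuple[str, ...]  # tests whose runtime is measured
    expert_patch: str                   # unified diff written by the human developer

    @property
    def num_targets(self) -> int:
        # Number of functions a model is expected to optimize in this instance.
        return len(self.target_functions)


# Placeholder values for illustration only.
example = PerfInstance(
    repo="example-org/example-lib",
    base_commit="0000000",
    target_functions=("example_lib.core.parse", "example_lib.core.render"),
    performance_tests=("tests/test_core.py::test_parse",),
    expert_patch="--- a/example_lib/core.py\n+++ b/example_lib/core.py\n",
)
```

Freezing the dataclass reflects that an instance is a fixed snapshot. A model's output would be a separate patch applied on top of `base_commit`.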

The researchers constructed the dataset by analyzing over 100,000 pull requests and keeping only those with measurable and stable performance gains, so that the benchmark reflects real-world optimization efforts. Initial evaluations of several leading LLMs reveal a significant gap between their results and those of human experts. LLMs show promise on many code-related tasks, but substantial advances are still needed before they can reliably optimize performance at the repository level. SWE-Perf offers a standardized, realistic platform for measuring that progress.

Evaluating LLMs for Real-World Code Optimization

The research team designed SWE-Perf to evaluate how well LLMs can optimize code performance within authentic software repositories. Previous benchmarks focus on isolated function-level improvements or on verifying correctness. SWE-Perf instead assesses whether LLMs can improve performance across entire projects, mirroring the complexity of real-world software development. This matters because substantial performance gains often come from coordinated changes spanning multiple files and modules, a nuance that simpler evaluations miss. To build the benchmark, the researchers curated 140 examples from performance-improving pull requests submitted to popular GitHub repositories.

They began with over 100,000 pull requests and filtered them by measuring the runtime of unit tests before and after each change, retaining only those with stable, measurable performance gains. Each example comprises the complete repository codebase, the functions targeted for optimization, the performance-related tests used to measure improvement, and the original expert-authored patch. A key design choice is to use these expert patches as a baseline for evaluating LLM-generated code, not merely as evidence that improvement is possible. This gives a direct comparison between the speedup a model achieves and the speedup a human expert achieved. Each example also comes with a complete executable environment, such as a Docker image, so that evaluations are consistent and reproducible regardless of system configuration. By focusing on repository-level optimization and using expert patches as a gold standard, SWE-Perf offers a realistic assessment of LLMs in this area of software engineering.
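The comparison with the expert baseline can be illustrated with a small Python sketch. It makes several assumptions: the performance tests can be run as a zero-argument callable, repeated timings are summarized by their median, and the model's speedup is expressed as a fraction of the expert's. These choices, and the names `measure_runtimes`, `runtime_gain`, and `fraction_of_expert_gain`, are illustrative and not SWE-Perf's exact metric.

```python
import statistics
import time
from typing import Callable, Sequence


def measure_runtimes(run_tests: Callable[[], None], repeats: int = 5) -> list[float]:
    """Wall-clock duration, in seconds, of each repeated run of the performance tests."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_tests()
        durations.append(time.perf_counter() - start)
    return durations


def runtime_gain(before: Sequence[float], after: Sequence[float]) -> float:
    """Fractional runtime reduction based on medians: 0.25 means 25% faster."""
    b = statistics.median(before)
    a = statistics.median(after)
    return (b - a) / b


def fraction_of_expert_gain(model_gain: float, expert_gain: float) -> float:
    """Share of the expert patch's improvement that the model patch recovered."""
    if expert_gain <= 0:
        raise ValueError("the expert patch is expected to reduce runtime")
    return model_gain / expert_gain


# Synthetic timings in seconds, for illustration only.
before = [10.0, 10.2, 9.8]
expert_after = [6.0, 6.1, 5.9]
model_after = [8.0, 8.1, 7.9]
expert = runtime_gain(before, expert_after)     # 0.4
model = runtime_gain(before, model_after)       # 0.2
share = fraction_of_expert_gain(model, expert)  # 0.5
```

Using the median rather than the mean makes the summary less sensitive to a single slow run caused by background noise on the test machine.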

SWE-Perf Benchmarks LLM Code Performance Improvements

SWE-Perf evaluates the ability of LLMs to improve code performance within authentic software repositories. Rather than isolated function-level optimization, it assesses LLMs in a repository-wide context that mirrors real-world software engineering. The dataset comprises 140 instances, each derived from an actual performance-improving pull request from a popular GitHub repository. To narrow the evaluation scope and keep computational costs manageable, each instance specifies a set of performance-related tests and target functions.

This lets the evaluation focus on whether LLMs can identify and implement performance enhancements without being overwhelmed by the scale of an entire codebase. The data collection pipeline gathers pull requests, measures the performance of the original and modified code, and uses statistical testing to verify that the improvements are stable, which keeps the dataset reliable.
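The statistical check can be sketched with a one-sided Mann-Whitney U test, a common nonparametric way to ask whether one set of timings tends to be larger than another. The specific test SWE-Perf applies is not described here. The choice of test, the normal approximation, the 0.05 threshold, and the function names below are assumptions for illustration.

```python
import math
import statistics
from typing import Sequence


def mann_whitney_p_slower(before: Sequence[float], after: Sequence[float]) -> float:
    """One-sided p-value for 'original runs tend to be slower than patched runs'.

    Uses the large-sample normal approximation without tie or continuity
    correction, so it is only a rough guide for small numbers of repeats.
    """
    n1, n2 = len(before), len(after)
    if n1 == 0 or n2 == 0:
        raise ValueError("need at least one timing on each side")
    # U counts (before, after) pairs where the original run was slower; ties count half.
    u = sum(1.0 if b > a else 0.5 if b == a else 0.0 for b in before for a in after)
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    # Upper-tail probability of the standard normal distribution.
    return 0.5 * math.erfc(z / math.sqrt(2))


def is_stable_improvement(
    before: Sequence[float], after: Sequence[float], alpha: float = 0.05
) -> bool:
    """Keep a pull request only if the speedup is both positive and significant."""
    faster = statistics.median(after) < statistics.median(before)
    return faster and mann_whitney_p_slower(before, after) < alpha
```

In this example, two sets of ten timings are compared, with every original run about three seconds slower than every patched run. That comparison gives a p-value far below 0.05. Two identical sets of timings are never flagged, because their medians are equal.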

While LLMs show some capacity for improvement, they generally lag behind human experts in achieving substantial performance gains within complex repositories. Closing this gap will require models with a better grasp of optimization principles and of how to apply them in real-world code. The benchmark distinguishes two evaluation settings. In the “oracle” setting, LLMs are given the relevant file contexts. In the more demanding “realistic” setting, LLMs work autonomously across the entire repository.

Together, the two settings measure LLMs in guided and unguided scenarios. The realistic setting in particular requires a model to navigate a complex codebase, identify optimization targets, and implement changes without explicit guidance, as a developer would in practice.
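The two settings differ mainly in what the model receives at the start. The Python sketch below models that difference under several simplifying assumptions. The repository is represented as an in-memory mapping from paths to file contents, and the task wording is invented. `Setting`, `ModelInput`, and `build_input` are hypothetical names, not part of SWE-Perf.

```python
from dataclasses import dataclass, field
from enum import Enum


class Setting(Enum):
    ORACLE = "oracle"        # relevant files are supplied up front
    REALISTIC = "realistic"  # the model must find what to change on its own


@dataclass
class ModelInput:
    instructions: str
    provided_files: dict[str, str] = field(default_factory=dict)
    may_explore_repo: bool = False


def build_input(setting: Setting, repo: dict[str, str], oracle_paths: list[str]) -> ModelInput:
    """Assemble the model's starting context.

    `repo` maps relative paths to file contents, standing in for a checkout.
    """
    task = "Improve the runtime of this repository without breaking its tests."
    if setting is Setting.ORACLE:
        # Guided: hand over only the files known to matter.
        return ModelInput(task, {p: repo[p] for p in oracle_paths})
    # Unguided: no files up front; the model (e.g. an agent) explores by itself.
    return ModelInput(task, may_explore_repo=True)
```

In the realistic case, an agent would then have to read files, run tests, and locate hot spots itself before proposing a patch. That is why this setting is the harder of the two.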

SWE-Perf Benchmarks Code Improvement Capabilities

Testing on the 140 instances shows that current models, including agent-based approaches such as OpenHands, still lag significantly behind expert developers. OpenHands outperforms simpler approaches, but a substantial gap to expert performance remains. On certain repositories, such as sklearn, the tested models already achieve performance comparable to experts, an early sign of potential. The analysis also finds that performance is strongly linked to the number of target functions in an instance. This suggests that focusing on fewer, more critical functions may yield greater improvements.

Future work could expand the benchmark to a wider range of optimization goals. It could also investigate ways to close the gap between language models and human experts, for example through more sophisticated agent designs or targeted training strategies.

👉 More information

🗞 SWE-Perf: Can Language Models Optimize Code Performance on Real-World Repositories?

🧠 DOI: https://doi.org/10.48550/arXiv.2507.12415