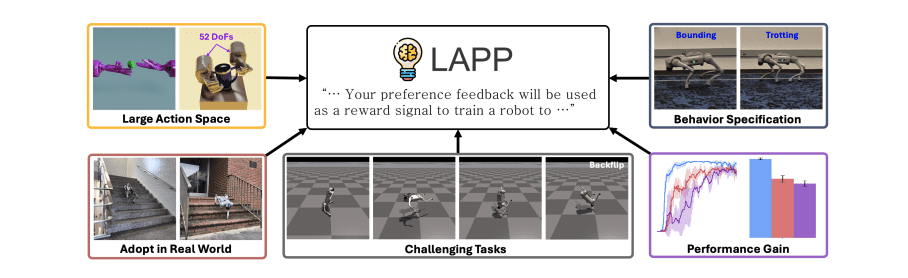

A new study titled "LAPP: Large Language Model Feedback for Preference-Driven Reinforcement Learning," published on April 21, 2025, introduces a framework that integrates large language models into reinforcement learning to enable efficient and customizable behavior acquisition in robotics.

LAPP uses large language models (LLMs) to generate preference labels from state-action trajectories, reducing reliance on reward engineering or human demonstrations. It trains a preference predictor online and uses that predictor to guide policy optimization toward high-level behavioral goals, enabling efficient acquisition of complex skills such as quadruped gait control and backflips. Evaluated across locomotion and manipulation tasks, LAPP shows better learning efficiency, performance, and adaptability than standard methods, which points to its potential for scalable preference-driven learning.
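To make the idea of a preference predictor concrete, here is a minimal sketch in Python. It is not the paper's implementation: it assumes a linear score over averaged trajectory features and a Bradley-Terry model for pairwise preferences, and it replaces the LLM with a hypothetical `stand_in_label` function that simply prefers the trajectory with the larger mean first feature. All function names, the feature layout, and the hyperparameters are illustrative assumptions.

```python
import math
import random


def mean_features(trajectory):
    """Average per-step feature vectors into one vector per trajectory."""
    dim = len(trajectory[0])
    return [sum(step[i] for step in trajectory) / len(trajectory) for i in range(dim)]


def stand_in_label(traj_a, traj_b):
    """Hypothetical stand-in for an LLM judgment: 1.0 if A is preferred, else 0.0.

    Assumption for illustration only: A is preferred when its mean
    first feature is larger than B's.
    """
    return 1.0 if mean_features(traj_a)[0] > mean_features(traj_b)[0] else 0.0


def predicted_preference(weights, feats_a, feats_b):
    """Bradley-Terry probability that A is preferred over B under a linear score."""
    score_a = sum(w * x for w, x in zip(weights, feats_a))
    score_b = sum(w * x for w, x in zip(weights, feats_b))
    diff = max(-60.0, min(60.0, score_a - score_b))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-diff))


def update_predictor(weights, labeled_pairs, lr=0.5):
    """One online pass of gradient descent on the pairwise cross-entropy loss."""
    for feats_a, feats_b, label in labeled_pairs:
        p = predicted_preference(weights, feats_a, feats_b)
        for i in range(len(weights)):
            # Gradient of the loss w.r.t. weights[i] is (p - label) * (a_i - b_i).
            weights[i] -= lr * (p - label) * (feats_a[i] - feats_b[i])
    return weights


def demo(seed=0, rounds=20, pairs_per_round=10, steps=5, dim=2):
    """Alternate between collecting labeled pairs and updating the predictor."""
    rng = random.Random(seed)
    weights = [0.0] * dim
    for _ in range(rounds):
        batch = []
        for _ in range(pairs_per_round):
            # Random trajectories stand in for policy rollouts.
            traj_a = [[rng.random() for _ in range(dim)] for _ in range(steps)]
            traj_b = [[rng.random() for _ in range(dim)] for _ in range(steps)]
            label = stand_in_label(traj_a, traj_b)
            batch.append((mean_features(traj_a), mean_features(traj_b), label))
        update_predictor(weights, batch)
    return weights


if __name__ == "__main__":
    print(demo())
```

In a full preference-driven pipeline, one plausible use of such a predictor is to treat its learned score as a reward signal during policy optimization. How LAPP combines the two is described in the paper itself; this sketch only covers fitting the predictor to pairwise labels.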

Recent advances in robotics have significantly expanded what robots can do across many domains, with a focus on adaptability and efficiency in real-world environments. These advances are driven by learning techniques such as deep reinforcement learning (DRL) and self-supervised auxiliary tasks, which address challenges in manipulation, locomotion, human interaction, and autonomous navigation.

In manipulation tasks, robots now use visual-force tactile policies that combine vision and touch feedback when interacting with objects. This lets a robot adjust its grip dynamically, which is crucial for handling objects of diverse shapes and sizes. In locomotion tasks, a hierarchical reinforcement learning approach is used: robots first master basic movements and then progress to complex tasks such as navigating uneven terrain, which improves their ability to move efficiently in unpredictable environments.

Safety in human-robot interaction is achieved through imitation learning, where robots observe and mimic human actions. This ensures that robots can assist humans without posing risks, making collaboration safer and more effective. For autonomous navigation, the integration of DRL with auxiliary tasks improves decision-making and obstacle avoidance, vital for systems like delivery robots, ensuring they navigate dynamically and efficiently.

Each task presents unique challenges, such as object diversity in manipulation or terrain variability in locomotion, but advanced learning techniques allow robots to adapt and improve continuously. Training often begins in simulated environments using DRL, where robots can learn efficiently through trial and error without physical risk.

These advancements collectively aim to produce robots that are not only skilled physically but also adept at making informed decisions. This paves the way for more capable and versatile robotic systems in real-world applications, marking a significant step forward in robotics technology.

👉 More information

🗞 LAPP: Large Language Model Feedback for Preference-Driven Reinforcement Learning

🧠 DOI: https://doi.org/10.48550/arXiv.2504.15472