Spiking neural networks offer a pathway to energy-efficient computation inspired by the brain, but directly training these networks presents significant challenges due to the non-differentiable nature of spiking activity. Jiaqiang Jiang, Wenfeng Xu, and Jing Fan, from Zhejiang University of Technology, along with Rui Yan, address this problem by introducing a new learning algorithm that dynamically adjusts both the firing thresholds of neurons and the method for estimating gradients during training. Their approach, termed DS-ATGO, balances neuronal firing rates and improves gradient signals, allowing networks to learn more effectively. This innovation overcomes limitations in existing training methods and demonstrates substantial performance gains, ultimately enabling more stable and efficient spiking neural networks with improved learning capabilities in deeper layers.

Spiking Neural Networks for Image Classification

The paper details the implementation and experimental setup for its spiking neural network (SNN) experiments, which use several established datasets: CIFAR-10, CIFAR-100, the event-based CIFAR10-DVS, and the large-scale ImageNet. The research explores techniques to improve SNN performance on image classification tasks, and the setup is documented so that the methods can be understood and reproduced. The researchers employed popular network architectures such as ResNet-18, ResNet-19, and VGGSNN, a spiking version of the VGG network. Experiments were conducted on a workstation equipped with an AMD Ryzen Threadripper processor, 128GB of RAM, and four NVIDIA GeForce RTX 4090 GPUs.

Networks were initialised using the Kaiming method and trained with the stochastic gradient descent (SGD) optimiser, employing a cosine annealing learning rate schedule and weight decay. Training used cross-entropy loss with label smoothing for the CIFAR datasets and TET loss for the event-based CIFAR10-DVS dataset. Data preprocessing involved subtracting the global mean and dividing by the standard deviation, followed by augmentation with random cropping, horizontal flipping, and AutoAugment. Multiple random seeds were used to ensure robustness, and models were compared by classification accuracy on each dataset.
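Two of the training ingredients named above, the cosine annealing schedule and cross-entropy with label smoothing, can be sketched in plain Python. This is a minimal illustration rather than the authors' training code: the function names, the default learning rates, and the smoothing value of 0.1 are assumptions, and the smoothing convention used (spreading the smoothing mass uniformly over all classes) is one common choice.

```python
import math

def cosine_annealing_lr(step, total_steps, lr_max=0.1, lr_min=0.0):
    """Learning rate at `step`, decaying from lr_max to lr_min along a half cosine.

    lr_max and lr_min are illustrative defaults, not values from the paper.
    """
    cos_term = 1.0 + math.cos(math.pi * step / total_steps)
    return lr_min + 0.5 * (lr_max - lr_min) * cos_term

def label_smoothed_cross_entropy(logits, target, smoothing=0.1):
    """Cross-entropy against a smoothed one-hot target distribution."""
    n = len(logits)
    # Numerically stable log-softmax.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    log_probs = [x - log_z for x in logits]
    # Smoothed target: (1 - smoothing) on the true class plus smoothing / n everywhere.
    off = smoothing / n
    on = 1.0 - smoothing + off
    return -sum((on if i == target else off) * lp
                for i, lp in enumerate(log_probs))

# Learning rate at the start, middle, and end of a 100-step run.
for step in (0, 50, 100):
    print(step, cosine_annealing_lr(step, total_steps=100))
```

With smoothing set to 0 the loss reduces to ordinary cross-entropy, which makes the function easy to sanity-check.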

Adaptive Thresholding and Dynamic Gradient Optimisation

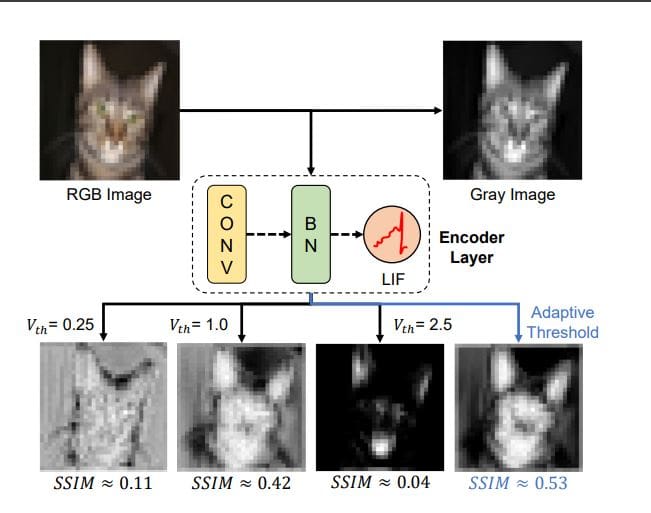

Researchers have developed a novel learning algorithm to improve the training of brain-inspired spiking neural networks, addressing challenges related to imbalanced neuron firing and diminished gradient signals. The study pioneers a dual-stage synergistic approach, incorporating forward adaptive thresholding and backward dynamic surrogate gradient optimisation to enhance performance and stability. Inspired by neuroscience, the method models threshold plasticity observed in biological neurons by dynamically responding to membrane potential levels. To further refine training, the research team implemented a backward propagation stage that dynamically optimises the surrogate gradient itself.

Recognising that gradient information is limited when membrane potentials are far from the firing threshold, the researchers optimised the surrogate gradient to align with the spatio-temporal dynamics of membrane potentials. This alignment maximises the number of neurons contributing to gradient updates, mitigating the gradient vanishing problem. Experiments analysed the distribution of firing rates under varying membrane potential variances, revealing the impact of threshold adjustments on neuronal activity. They also measured the proportion of neurons receiving gradients in deeper layers, showing that dynamic surrogate gradient optimisation increases gradient availability. According to the results, neurons maintain stable firing proportions at each timestep, and more neurons contribute to learning in deeper network layers. The methodology addresses key limitations in spiking neural network training, paving the way for more efficient and biologically plausible artificial intelligence systems.
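To make the point about limited gradient information concrete, the sketch below pairs a step-function spike with a fixed rectangular surrogate gradient and counts how many neurons fall inside the surrogate's window. The rectangular shape, the threshold of 1.0, the window width, and the Gaussian membrane potentials are all illustrative assumptions; the source does not specify which surrogate the authors start from.

```python
import random

def heaviside_spike(u, threshold=1.0):
    """Forward pass: emit a spike (1.0) once the membrane potential reaches threshold."""
    return 1.0 if u >= threshold else 0.0

def rectangular_sg(u, threshold=1.0, width=1.0):
    """Backward-pass stand-in: constant gradient inside a window around the threshold."""
    return 1.0 / width if abs(u - threshold) < width / 2 else 0.0

def gradient_coverage(potentials, threshold=1.0, width=1.0):
    """Fraction of neurons whose surrogate gradient is non-zero."""
    active = sum(1 for u in potentials
                 if rectangular_sg(u, threshold, width) > 0.0)
    return active / len(potentials)

rng = random.Random(0)
# Hypothetical membrane potentials with a small and a large spread.
narrow = [rng.gauss(0.0, 0.25) for _ in range(10_000)]
wide = [rng.gauss(0.0, 2.0) for _ in range(10_000)]
print("coverage, narrow spread:", gradient_coverage(narrow))
print("coverage, wide spread:", gradient_coverage(wide))
```

In both cases only a minority of neurons sit inside the fixed window, so most receive a zero gradient. That is the situation the dynamic surrogate is meant to improve.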

Dynamic Learning Optimises Spiking Neural Networks

Scientists have developed a novel learning algorithm for spiking neural networks (SNNs) that addresses challenges in training these energy-efficient systems. The research focuses on improving how SNNs learn by dynamically adjusting key parameters during the training process, leading to significant performance improvements. A core issue in SNN training is the non-differentiable nature of spikes, hindering traditional gradient-based learning methods. This work introduces a dual-stage synergistic learning approach, termed DS-ATGO, which combines forward adaptive thresholding and backward dynamic surrogate gradient (SG) optimisation.

The team observed that fixed thresholds and SGs can lead to imbalanced firing rates and diminished gradient signals, hindering effective learning. To address this, the method adaptively adjusts thresholds based on the distribution of neuronal membrane potential dynamics (MPD) at each timestep. This adjustment enriches neuronal diversity and effectively balances firing rates across layers, ensuring a more stable and informative signal flow. Experiments demonstrate that this adaptive thresholding allows neurons to fire stable proportions of spikes at each timestep, maintaining a moderate level of activity crucial for information processing.
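The idea of tying the threshold to the membrane potential distribution can be illustrated with a simple rule. The rule used here, threshold = mean + k × standard deviation of a layer's potentials at one timestep, is a hypothetical stand-in chosen for clarity; the source does not give the exact DS-ATGO update, and the function names and the value of `k` are assumptions.

```python
import random
import statistics

def adaptive_threshold(potentials, k=1.0):
    """Hypothetical rule: threshold = mean + k * std of the layer's potentials."""
    return statistics.fmean(potentials) + k * statistics.pstdev(potentials)

def firing_rate(potentials, threshold):
    """Fraction of neurons whose membrane potential reaches the threshold."""
    return sum(1 for u in potentials if u >= threshold) / len(potentials)

rng = random.Random(0)
for sigma in (0.25, 1.0, 4.0):
    # Hypothetical membrane potentials for one layer at one timestep.
    u = [rng.gauss(0.5, sigma) for _ in range(10_000)]
    fixed = firing_rate(u, 1.0)
    adaptive = firing_rate(u, adaptive_threshold(u))
    print(f"sigma={sigma}: fixed={fixed:.3f} adaptive={adaptive:.3f}")
```

Under this rule the firing proportion depends only on the shape of the distribution, not on its mean or spread, so it stays roughly constant as the potentials drift, whereas a fixed threshold produces firing rates that swing with the variance.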

Furthermore, the researchers established a correlation between MPD, threshold, and SG, dynamically optimising the SG to enhance gradient estimation. By aligning SG optimisation with the spatio-temporal dynamics of membrane potentials, the method mitigates gradient information loss. This dynamic optimisation ensures that a greater proportion of neurons receive gradients, even in deeper layers of the network, overcoming the “gradient vanishing problem”. The results show that DS-ATGO significantly improves the ability of SNNs to encode dynamic information and achieve a linear response to input signals, representing a substantial advancement in the field of neuromorphic computing.
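One way to picture a surrogate gradient that follows the membrane potential distribution is to scale its width with the spread of the potentials. The sketch below does this for a triangular surrogate. The triangular shape, the `scale` factor, and the rule "half-width = scale × standard deviation" are assumptions made for illustration and should not be read as the paper's actual optimisation procedure.

```python
import statistics

def triangular_sg(u, threshold, half_width):
    """Triangular surrogate gradient: peaks at the threshold, zero beyond half_width."""
    return max(0.0, 1.0 - abs(u - threshold) / half_width) / half_width

def dynamic_half_width(potentials, scale=2.0):
    """Hypothetical rule: widen the surrogate in proportion to the potentials' spread."""
    # The small floor avoids a zero width when all potentials are identical.
    return max(scale * statistics.pstdev(potentials), 1e-6)

def coverage(potentials, threshold, half_width):
    """Fraction of neurons that receive a non-zero surrogate gradient."""
    grads = [triangular_sg(u, threshold, half_width) for u in potentials]
    return sum(1 for g in grads if g > 0.0) / len(grads)

# Hypothetical membrane potentials for one layer at one timestep.
potentials = [-3.0, -1.5, 0.0, 0.5, 1.0, 2.5, 4.0]
threshold = 1.0
print("fixed width:  ", coverage(potentials, threshold, half_width=0.5))
print("dynamic width:", coverage(potentials, threshold,
                                 dynamic_half_width(potentials)))
```

With the fixed window only the neuron sitting exactly at the threshold receives a gradient, while the distribution-scaled window reaches every neuron in this toy layer. The trade-off is a flatter, less peaked gradient estimate.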

Adaptive Thresholds Enhance Spiking Neural Networks

This research presents a novel approach to improving the performance of spiking neural networks, which are increasingly recognised for their potential in low-energy computing. Scientists identified that fixed firing thresholds and surrogate gradients, commonly used in training these networks, become mismatched with the evolving distribution of neuronal membrane potentials during learning. This mismatch leads to imbalanced firing rates and diminished gradient signals, hindering effective training. To address these limitations, the team developed a dual-stage synergistic learning algorithm. This method adaptively adjusts firing thresholds based on the dynamics of membrane potential and simultaneously optimises surrogate gradients, ensuring better alignment throughout the learning process. Experimental results demonstrate that this approach outperforms methods that focus on adjusting either thresholds or gradients in isolation, leading to more stable firing patterns and enhanced gradient signals across network layers. By optimising the loss landscape and improving information encoding efficiency, this work advances the potential of spiking neural networks for more complex and wider-ranging applications.

👉 More information

🗞 DS-ATGO: Dual-Stage Synergistic Learning via Forward Adaptive Threshold and Backward Gradient Optimization for Spiking Neural Networks

🧠 ArXiv: https://arxiv.org/abs/2511.13050