Artificial intelligence's ability to understand and interact with the visual world has taken a significant step forward, as researchers demonstrate zero-shot capabilities in modern video models. Thaddäus Wiedemer, Yuxuan Li, and Paul Vicol, alongside Shixiang Shane Gu, Nick Matarese, Kevin Swersky, Been Kim, Priyank Jaini, and Robert Geirhos, present evidence that these models can perform a diverse range of visual tasks without specific training for each one. Their work shows that a single video model, exemplified by Veo 3, can segment objects, detect edges, edit images, and even reason about physical properties and tool use. This suggests that video models are progressing towards becoming unified, generalist foundations for vision understanding, mirroring the recent advances seen in large language models.

Video models are emerging as powerful zero-shot learners and reasoners, mirroring recent advancements in large language models. Scientists at Google DeepMind investigated the capabilities of these models, focusing on their ability to understand and interact with the visual world without task-specific training. This work explores whether video models can achieve general-purpose vision understanding, similar to how large language models have achieved general-purpose language understanding.

Veo’s Capabilities Across Diverse Video Tasks

This research provides a comprehensive assessment of current video generation models, specifically Veo, by systematically testing their limits across a broad spectrum of tasks. Rather than introducing a new model, the study focuses on rigorously evaluating what existing technology can and cannot achieve. The team tested the model on a wide range of challenges, from low-level perception tasks like depth estimation and surface normal estimation to complex reasoning and physics-based simulations, including knot tying and collision detection. A significant portion of the work is dedicated to documenting failure cases, providing insight into current limitations and guiding future research.

Veo demonstrates the ability to generate visually realistic videos and handle complex scenes with multiple interacting objects, generally following textual prompts to create desired video content. However, the research also highlights several key weaknesses, including struggles with realistic physics simulations, maintaining the integrity of rigid bodies, and accurately interpreting 3D space. Complex manipulation tasks, such as puzzle solving and tool use, also prove difficult. Furthermore, Veo sometimes produces inaccurate depth maps and surface normals, struggles with precise visual details, and encounters errors when processing text within videos.

The research utilized diverse image sources, including images generated by large language models, publicly available datasets, hand-drawn images, and content from online platforms. The authors openly acknowledge and detail their use of large language models throughout the research process. Overall, this study provides a valuable contribution to the field of video generation, offering a realistic assessment of current capabilities and limitations. The emphasis on failure cases is particularly important, as it highlights areas requiring further research.

Veo 3 Demonstrates Zero-Shot Video Understanding

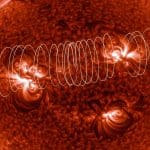

Veo 3 marks a significant leap in visual understanding for video models. The model shows zero-shot learning and reasoning, solving a broad variety of visual tasks without explicit training for any of them. Building on the success of large language models, this work extends the concept of general-purpose understanding to the visual domain. Experiments reveal Veo 3’s ability to perform classic computer vision tasks, including edge detection, segmentation, keypoint localization, super-resolution, and denoising.

For example, the model consistently demonstrated the ability to interpret the classic Dalmatian illusion, a task requiring complex visual parsing. Beyond perception, Veo 3 models the physical world with surprising accuracy, demonstrating an understanding of physics through tasks involving rigid and soft body dynamics, flammability, air resistance, buoyancy, and optical phenomena like refraction and reflection. The team prompted Veo 3 to perform a Visual Jenga task, and the model successfully removed objects in a physically plausible order, demonstrating an understanding of stability and balance. Furthermore, the model accurately determined which objects would fit into a backpack, showcasing an understanding of spatial relationships and object properties.

Veo 3 extends beyond understanding and modeling to actively manipulate the visual world, performing zero-shot image editing tasks, including background removal, style transfer, colorization, inpainting, and outpainting. The team prompted Veo 3 to compose scenes from individual components, and the model generated novel views of objects and characters, demonstrating an understanding of 3D space and object relationships. These achievements suggest a path toward unified, generalist vision foundation models, mirroring the progress seen in natural language processing.

Veo 3 Exhibits Strong Zero-Shot Visual Learning

Veo 3 exhibits strong zero-shot learning capabilities across a diverse range of visual tasks. Unlike models that require specific training for each task, Veo 3 performs object segmentation, edge detection, image editing, and even visual reasoning challenges like maze solving without task-specific training. These achievements suggest video models are progressing towards a level of general visual understanding comparable to the broad language capabilities of large language models. Quantitative evaluations confirm a substantial performance increase over its predecessor, Veo 2, with Veo 3 often matching or exceeding the performance of specialized image editing models.

While Veo 3 demonstrates impressive abilities, its performance is not yet reliable and currently lags behind state-of-the-art models specifically trained for certain tasks. Furthermore, the model’s performance can vary depending on the frame within a generated video: later frames sometimes show reduced accuracy because the model keeps animating the scene after the task is complete. Future research directions include refining the model to achieve peak performance consistently across all frames and further closing the gap with specialized models on individual tasks, with the aim of making video models practical general-purpose vision foundations.
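
The frame dependence described above can be made concrete with a small scoring sketch. The example below is an illustration only, not the authors' evaluation code: it assumes a segmentation-style task in which each generated frame has already been converted to a binary mask, and it uses intersection over union (IoU) as a stand-in metric. The function names (`iou`, `score_frames`, `summarize`) and the toy masks are hypothetical.

```python
import numpy as np


def iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Intersection over union of two binary masks with the same shape."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(np.logical_and(pred, target).sum() / union)


def score_frames(frame_masks: list, target: np.ndarray) -> list:
    """Score every frame of one generated video against the ground truth."""
    return [iou(mask, target) for mask in frame_masks]


def summarize(scores: list) -> dict:
    """Report the best frame and the last frame, which can differ widely."""
    best_index = int(np.argmax(scores))
    return {
        "best_frame": best_index,
        "best_score": scores[best_index],
        "last_score": scores[-1],
    }


# Toy ground truth (assumed data): a 2x2 square inside a 4x4 image.
target = np.zeros((4, 4), dtype=bool)
target[1:3, 1:3] = True

# Frame 0: mask still forming (one pixel missing).
frame0 = target.copy()
frame0[1, 1] = False
# Frame 1: mask matches the target.
frame1 = target.copy()
# Frame 2: mask has drifted one pixel to the right as animation continues.
frame2 = np.zeros((4, 4), dtype=bool)
frame2[1:3, 2:4] = True

scores = score_frames([frame0, frame1, frame2], target)
summary = summarize(scores)
print(summary)
```

In this toy case the middle frame scores highest and the last frame scores lowest, which is the pattern the article attributes to continued animation. Reporting both numbers separates what the model can do at its best from what a user gets by simply taking the final frame.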

👉 More information

🗞 Video models are zero-shot learners and reasoners

🧠 ArXiv: https://arxiv.org/abs/2509.20328