Autonomous driving systems need to learn from vast amounts of data, but current approaches face a fundamental problem: the actions a vehicle takes provide only sparse supervision. Yingyan Li, Shuyao Shang, and Weisong Liu, along with colleagues, address this challenge with DriveVLA-W0, a training method that requires the driving model to predict future images of the driving environment. This prediction task generates a dense stream of self-supervision, so the model learns the underlying dynamics of driving more effectively. The team reports that DriveVLA-W0 significantly improves performance on standard benchmarks and, crucially, amplifies the benefits of larger datasets.

Predictive World Models Guide Visual Language Navigation

This research introduces a system for autonomous driving that combines a Vision-Language-Action (VLA) model with a world model capable of predicting future images. The model therefore not only perceives its surroundings but also anticipates how the environment will evolve, with the aim of safer and more robust driving decisions. Predicting future images provides a richer learning signal than action labels alone, which improves navigation in complex scenarios.

Self-Supervision Amplifies Data Scaling for Driving Models

The study proposes a training method, DriveVLA-W0, to address the limitations of sparse supervision in Vision-Language-Action (VLA) models for autonomous driving. The researchers use world modeling to generate dense, self-supervised signals, which compel the model to learn the underlying dynamics of driving environments. The work also shows that this self-supervision amplifies the benefits of a larger training dataset: performance gains accelerate as the data grows. Inputs are processed as follows: language instructions are tokenized with the model’s native tokenizer, while continuous waypoint trajectories are converted into discrete tokens.
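One simple way to turn continuous waypoints into discrete tokens is uniform binning, sketched below. This is an illustrative assumption, not necessarily the tokenizer used in DriveVLA-W0: the bin count, the coordinate range, and the function names `encode_waypoints` and `decode_waypoints` are all hypothetical.

```python
from typing import List, Tuple

# Hypothetical settings: the article gives neither a bin count nor a coordinate range.
NUM_BINS = 256
COORD_MIN, COORD_MAX = -50.0, 50.0  # metres, assumed


def encode_waypoints(waypoints: List[Tuple[float, float]]) -> List[int]:
    """Map each (x, y) waypoint to two integer tokens in [0, NUM_BINS - 1]."""
    scale = (NUM_BINS - 1) / (COORD_MAX - COORD_MIN)
    tokens = []
    for x, y in waypoints:
        for value in (x, y):
            # Clip out-of-range coordinates to the nearest valid bin.
            clipped = min(max(value, COORD_MIN), COORD_MAX)
            tokens.append(round((clipped - COORD_MIN) * scale))
    return tokens


def decode_waypoints(tokens: List[int]) -> List[Tuple[float, float]]:
    """Invert encode_waypoints, up to the quantization error of half a bin."""
    step = (COORD_MAX - COORD_MIN) / (NUM_BINS - 1)
    values = [COORD_MIN + t * step for t in tokens]
    return list(zip(values[0::2], values[1::2]))
```

With these assumed settings each bin is about 0.39 m wide, so a decoded waypoint differs from the original by at most roughly 0.2 m per coordinate. A finer grid or a learned tokenizer would trade vocabulary size against that error.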

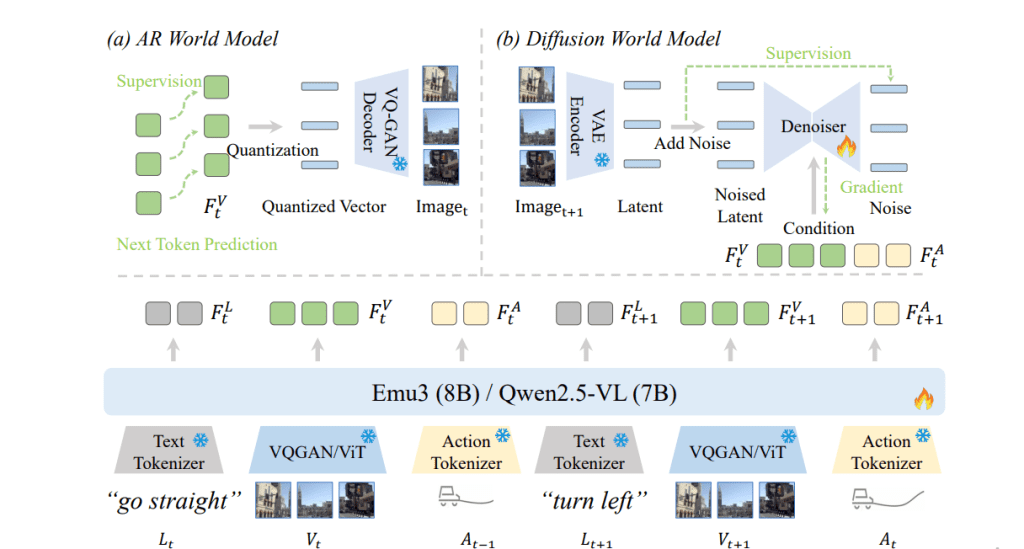

These multimodal inputs are processed jointly and autoregressively, and the output hidden states are split into language, vision, and action features. The model is first trained to predict ground-truth action sequences; at inference it generates action tokens, which are converted back into continuous waypoint trajectories. To address the supervision deficit, the researchers add world modeling, implemented differently for each VLA architecture. For discrete visual representations, they formulate an autoregressive world model that predicts the current visual scene by generating its sequence of visual tokens, conditioned on past observations and actions. For continuous visual features, they use a diffusion world model that generates future images in a continuous latent space. The complete framework, DriveVLA-W0, is trained jointly by optimizing a weighted sum of the action loss and the world model loss.
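As a hedged illustration, the joint objective can be written as follows. The symbols and the single weight $\lambda$ are assumptions made for exposition; the text states only that the two losses are combined in a weighted sum.

$$
\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{action}} + \lambda \, \mathcal{L}_{\text{WM}}
$$

Here $\mathcal{L}_{\text{action}}$ is the loss on predicted actions, $\mathcal{L}_{\text{WM}}$ is the world model loss for whichever variant is used (autoregressive or diffusion), and $\lambda \ge 0$ controls how strongly the dense image-prediction signal influences training relative to the sparse action signal.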

Dense Self-Supervision Boosts Driving Intelligence

DriveVLA-W0 is a training paradigm designed to improve driving models through better representation learning. It targets the “supervision deficit” common in these systems, where a large model’s capacity is trained only on sparse action labels. The team adds a world model that predicts future images, which supplies a dense self-supervised signal and compels the system to learn the underlying dynamics of the driving environment. For systems that use discrete visual tokens, the team built an autoregressive world model trained to predict the sequence of visual tokens from past observations and actions.
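An autoregressive model over discrete tokens is commonly trained with a cross-entropy loss on the next token. The sketch below shows that generic loss in plain Python; it is not the authors' code, and the function name `next_token_loss` and the list-based inputs are assumptions chosen for readability.

```python
import math
from typing import List


def next_token_loss(logits: List[List[float]], targets: List[int]) -> float:
    """Mean cross-entropy of the target tokens under softmax(logits).

    logits[i] holds one score per vocabulary entry for position i;
    targets[i] is the index of the visual token that actually came next.
    """
    total = 0.0
    for row, target in zip(logits, targets):
        # Log-sum-exp with the maximum subtracted for numerical stability.
        peak = max(row)
        log_z = peak + math.log(sum(math.exp(v - peak) for v in row))
        total += log_z - row[target]
    return total / len(targets)
```

Because every visual token in an image contributes a term, this loss yields far more supervised positions per frame than a short sequence of action tokens, which is the sense in which the signal is dense.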

This autoregressive model is optimized with a next-token prediction loss, and images can be generated from the tokens it predicts. For systems that operate on continuous visual features, the team instead implemented a diffusion world model that predicts the future visual scene in a continuous latent space, conditioned on current visual and action features and optimized with a mean squared error objective. To meet real-time requirements, the team pairs the larger VLA backbone with a lightweight action expert of 500 million parameters. This Mixture-of-Experts architecture uses a joint attention mechanism: the query, key, and value matrices of the two experts are concatenated so that attention is computed once over a single set of inputs, which fuses information efficiently. The attention output is then split and routed back to each expert, tightly coupling the backbone’s rich representations with the action expert’s specialized context.
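The joint attention step can be sketched as follows. This is a minimal single-head illustration that assumes the matrices are concatenated along the token axis and that both experts share the same feature dimension; masking, multiple heads, and the projection layers are omitted, and the names `attention` and `joint_attention` are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # rows are tokens, columns are feature dimensions


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Plain scaled dot-product attention (single head, no masking)."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for q_row in q:
        scores = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v_row[c] for w, v_row in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


def joint_attention(
    vla_qkv: Tuple[Matrix, Matrix, Matrix],
    expert_qkv: Tuple[Matrix, Matrix, Matrix],
) -> Tuple[Matrix, Matrix]:
    """Attend over the tokens of both experts at once, then split the result."""
    n_vla = len(vla_qkv[0])
    # Concatenate Q, K, and V of the two experts along the token axis.
    q, k, v = (a + b for a, b in zip(vla_qkv, expert_qkv))
    fused = attention(q, k, v)
    # Route each slice of the output back to the expert that owns those tokens.
    return fused[:n_vla], fused[n_vla:]
```

In this sketch every token, whether it belongs to the backbone or to the action expert, can attend to every other token, so the small expert reads the backbone's features without a separate cross-attention module.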

Future Prediction Boosts Driving Model Performance

This research addresses a key limitation in scaling Vision-Language-Action models for autonomous driving: a “supervision deficit” in which sparse action labels leave much of the model’s capacity underused. To overcome this, the researchers developed DriveVLA-W0, a training paradigm that uses future image prediction as a dense, self-supervised signal. This approach compels the model to learn the underlying dynamics of the driving environment, improving its understanding and predictive capabilities. Experiments show that DriveVLA-W0 significantly outperforms existing baseline models on challenging benchmarks and on a large-scale in-house dataset.

Notably, performance gains accelerate as the size of the training dataset increases, highlighting the paradigm’s ability to effectively utilize large amounts of data. Furthermore, the researchers successfully integrated a lightweight action expert, reducing inference latency and enabling real-time deployment. Future work will likely focus on exploring alternative world modeling techniques and investigating the potential for transfer learning across different driving scenarios. These findings suggest that embracing dense, predictive world modeling is a crucial step towards achieving a more generalized and robust driving intelligence.

👉 More information

🗞 DriveVLA-W0: World Models Amplify Data Scaling Law in Autonomous Driving

🧠 ArXiv: https://arxiv.org/abs/2510.12796