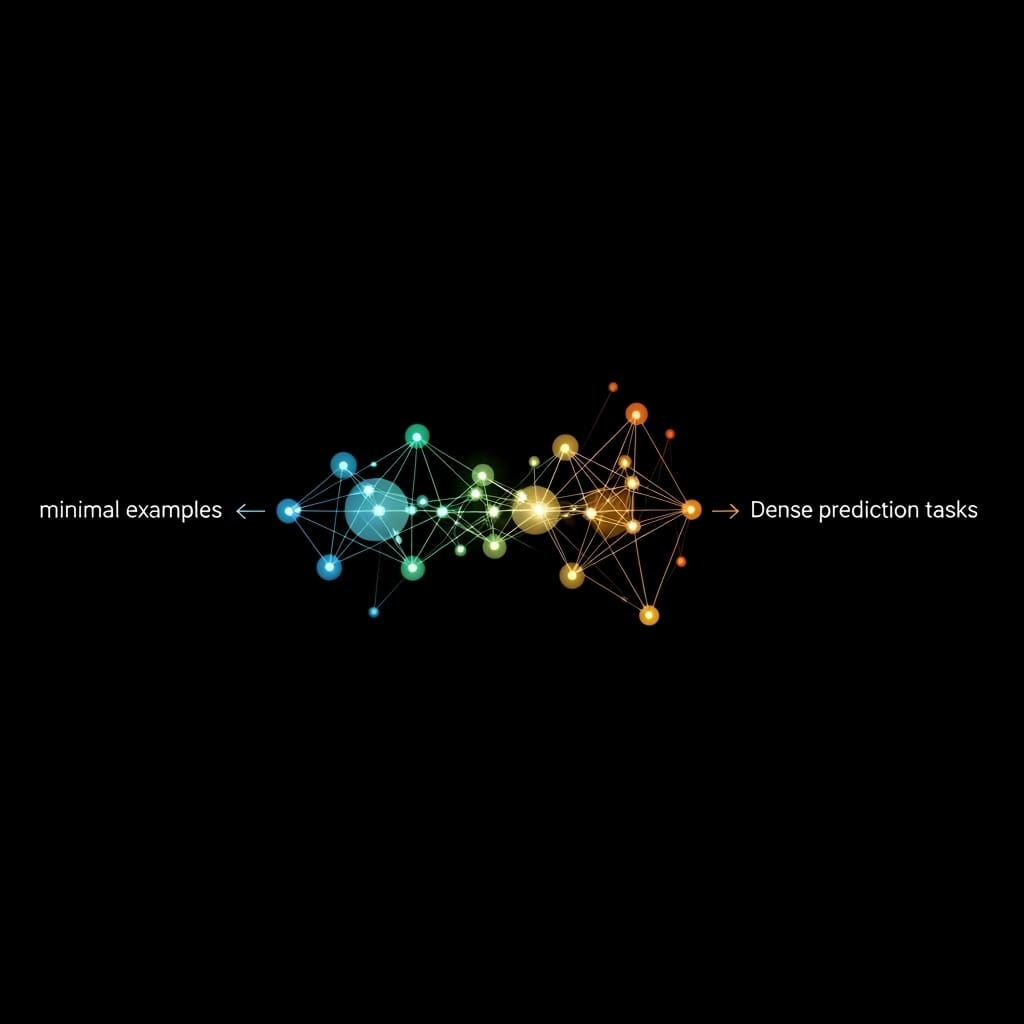

Diffusion models excel at generating images, and researchers are increasingly repurposing them for dense prediction, where a model must output a value for every pixel, such as a depth map or a segmentation mask. A significant limitation remains: the features these models provide depend on which diffusion timesteps are used, and those timesteps are usually chosen empirically and fixed in advance, which is not always optimal. Changgyoon Oh, Jongoh Jeong, Jegyeong Cho, and Kuk-Jin Yoon of KAIST address this by developing a framework that learns to select the most useful timesteps for each dense prediction task. Their approach combines a module for task-aware timestep selection with one for timestep feature consolidation, lets the model adapt to new tasks from only a few labeled examples, and achieves superior performance on the challenging Taskonomy dataset. The work could broaden the use of pre-trained diffusion models to real-world problems that need accurate, detailed predictions from limited data.

Related Work: Parameter-Efficient Fine-Tuning and Dense Prediction

The paper builds on a broad body of prior research in machine learning, deep learning, and parameter-efficient fine-tuning, with particular attention to vision transformers and multi-task learning. Its references span foundational techniques such as deep residual learning and U-Net image segmentation, as well as more recent methods for adapting large pre-trained models, such as BitFit and LoRA, which train only a small fraction of a model's parameters. It also draws on adapter methods, prompt tuning, and LoRA variants such as MoE-LoRA and LCM-LoRA, along with multi-task and transfer learning strategies, including work on disentangling task transfer and sharing knowledge between tasks. Other cited work covers vision transformers such as BEiT and CrossViT and their adaptation to dense prediction, large-scale image-text datasets such as LAION-5B, applications such as medical image segmentation and skin lesion analysis, and studies on depth estimation and meta-learning.
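As a rough illustration of why methods like LoRA train so few parameters, the sketch below counts trainable parameters for a single weight matrix. It assumes the standard LoRA formulation, in which a frozen d × k weight matrix W is adapted by a low-rank update B·A, with B of size d × r and A of size r × k. The matrix sizes and rank are illustrative rather than taken from the paper, and the helper names are hypothetical.

```python
# Minimal sketch (hypothetical helper names): trainable-parameter counts for
# one d x k weight matrix under full fine-tuning versus a LoRA-style update.


def full_finetune_params(d: int, k: int) -> int:
    """Full fine-tuning updates every entry of the d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """LoRA freezes W and trains only B (d x r) and A (r x k) in W + B @ A."""
    return d * r + r * k


if __name__ == "__main__":
    d, k, r = 768, 768, 8  # illustrative sizes, not taken from the paper
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full: {full}, LoRA (r={r}): {lora}, ratio: {lora / full:.4f}")
    # -> full: 589824, LoRA (r=8): 12288, ratio: 0.0208
```

With d = k = 768 and r = 8, the low-rank update trains about 2% as many parameters as full fine-tuning of that matrix, and the saving grows as the matrix gets larger relative to the rank.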

Adaptive Timestep Selection for Diffusion Models

The study introduces a framework for dense prediction that adaptively selects diffusion timesteps instead of relying on empirically chosen, fixed ones. It comprises two modules: Task-aware Timestep Selection (TTS), which identifies the timesteps most relevant to a given task, and Timestep Feature Consolidation (TFC), which combines the features from those timesteps. TTS iteratively evaluates timestep features using losses and similarity scores, while TFC consolidates the selected features with support-set label information to improve performance in few-shot learning. Experiments with a pre-trained Latent Diffusion Model (LDM) on the Taskonomy dataset show superior performance in universal few-shot learning, where a single model must handle many dense prediction tasks, including unseen tasks learned from a handful of labeled examples. Because the modules act as a parameter-efficient fine-tuning adapter, the approach offers a practical route to more adaptable diffusion-based systems and pioneers a method for identifying diffusion timesteps that generalize across multiple tasks.
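The article does not spell out how TTS scores candidates, so the following is only a minimal sketch of the general idea under stated assumptions: each candidate timestep has a loss (lower is better) and a feature-similarity score against every other candidate, and timesteps are picked greedily so that low-loss candidates are preferred while near-duplicates of already chosen timesteps are penalized. This redundancy-penalized greedy rule is a swapped-in technique similar to maximal marginal relevance, not the authors' TTS module; `select_timesteps`, `redundancy_weight`, and all numbers are hypothetical.

```python
import numpy as np


def select_timesteps(losses, similarity, k, redundancy_weight=0.5):
    """Greedily pick k timesteps with low loss and low mutual similarity.

    losses: shape (T,), lower is better (hypothetical per-timestep task loss).
    similarity: shape (T, T), similarity between timestep features.
    Returns candidate indices in the order they were selected.
    """
    losses = np.asarray(losses, dtype=float)
    similarity = np.asarray(similarity, dtype=float)
    selected = []
    remaining = list(range(len(losses)))
    while remaining and len(selected) < k:
        scores = []
        for t in remaining:
            # Penalize candidates whose features duplicate an already chosen timestep.
            redundancy = max((similarity[t, s] for s in selected), default=0.0)
            scores.append(losses[t] + redundancy_weight * redundancy)
        best = remaining[int(np.argmin(scores))]
        selected.append(best)
        remaining.remove(best)
    return selected


if __name__ == "__main__":
    candidates = [50, 100, 200, 400, 800]  # hypothetical candidate timesteps
    losses = [0.30, 0.28, 0.35, 0.52, 0.90]  # hypothetical per-timestep losses
    similarity = [
        [1.00, 0.98, 0.60, 0.30, 0.10],
        [0.98, 1.00, 0.65, 0.35, 0.15],
        [0.60, 0.65, 1.00, 0.50, 0.20],
        [0.30, 0.35, 0.50, 1.00, 0.40],
        [0.10, 0.15, 0.20, 0.40, 1.00],
    ]
    chosen = select_timesteps(losses, similarity, k=3)
    print([candidates[i] for i in chosen])  # -> [100, 200, 400]
```

In this toy example, timestep 50 has a lower loss than 200 or 400 but is skipped because its features almost duplicate those of timestep 100, which shows why a similarity term can matter when choosing a set of timesteps rather than ranking each one in isolation.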

Adaptive Timesteps Enhance Diffusion Model Prediction

Beyond improving few-shot performance on dense prediction tasks, the work clarifies why the choice of timestep matters. The research applies Latent Diffusion Models to tasks that require detailed, pixel-level predictions, building on the observation that the earlier steps of the denoising process (larger, noisier timesteps) emphasize global structure, while later steps (smaller timesteps) capture finer details. To exploit these characteristics, the team introduced Task-aware Timestep Selection (TTS), which repeatedly searches for the most relevant timestep features based on losses and similarity scores, and Timestep Feature Consolidation (TFC), which consolidates those features to improve predictions when labeled data is scarce. Tests on the Taskonomy dataset demonstrate the approach's effectiveness in universal few-shot learning, where the system generalizes to unseen tasks from minimal support data. The results show superior performance with only a small number of labeled support examples, extending the applicability of pre-trained diffusion models to multiple tasks.
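One way to see why noisier timesteps tend to carry coarser information is to look at the forward noising process, `x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps`, in which the share of the original signal shrinks as t grows; a common explanation is that fine details are drowned out by the noise before global structure is. The sketch below assumes the linear beta schedule from the original DDPM paper (beta rising from 1e-4 to 0.02 over 1,000 steps). The latent diffusion model used in the study may use a different schedule, and the helper names are hypothetical.

```python
import numpy as np


def alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear DDPM-style beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def signal_and_noise_scales(abar):
    """Coefficients of x_0 and of eps in x_t = sqrt(abar) * x_0 + sqrt(1 - abar) * eps."""
    return np.sqrt(abar), np.sqrt(1.0 - abar)


if __name__ == "__main__":
    abar = alpha_bar()
    signal, noise = signal_and_noise_scales(abar)
    for t in (1, 100, 250, 500, 750, 1000):
        # Index t - 1 because timesteps are 1-based in the DDPM convention.
        print(f"t={t:4d}  signal={signal[t - 1]:.3f}  noise={noise[t - 1]:.3f}")
```

Under this schedule the signal coefficient falls from nearly 1 at t = 1 to below 0.01 at t = 1000, so features extracted at large t can only reflect the coarse content that survives heavy noise.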

Adaptive Timesteps Boost Few-Shot Prediction

This research shows that the choice of diffusion timesteps matters for few-shot learning of dense prediction tasks. The framework's two modules, Task-aware Timestep Selection and Timestep Feature Consolidation, adaptively identify and refine the most useful timesteps from the diffusion process, improving prediction accuracy with limited data. On the large-scale Taskonomy dataset, the method yields notable gains in dense prediction performance, particularly in the universal setting and on unseen tasks. The modules are also lightweight, accounting for only a small fraction of the total computational cost and model parameters, which makes them easy to apply to other diffusion-based applications. The current work stratifies timesteps using similarity scores; the authors acknowledge potential limits on broader generalizability and suggest that future work explore more robust selection methods and evaluate on larger, more diverse datasets.
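To make the consolidate-then-predict idea concrete, here is a minimal NumPy sketch under explicit assumptions: per-token features have already been extracted from K selected timesteps for both query and support images, they are fused with softmax weights over timesteps (learnable in a real model, fixed here), and each query token's label is predicted as an attention-weighted average of support-token labels. This is an illustrative stand-in for how support labels can inform few-shot dense prediction, not the authors' TFC module; `fuse_timesteps`, `match_labels`, and `temperature` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def fuse_timesteps(features, logits):
    """Weighted sum of per-timestep features.

    features: (K, N, C) features from K timesteps for N tokens with C channels.
    logits: (K,) per-timestep scores (would be learned parameters in practice).
    """
    weights = softmax(np.asarray(logits, dtype=float))
    return np.tensordot(weights, features, axes=1)  # -> (N, C)


def match_labels(query_feat, support_feat, support_labels, temperature=0.1):
    """Predict query labels as an attention-weighted average of support labels.

    query_feat: (Nq, C), support_feat: (Ns, C), support_labels: (Ns, D).
    """
    q = query_feat / np.linalg.norm(query_feat, axis=1, keepdims=True)
    s = support_feat / np.linalg.norm(support_feat, axis=1, keepdims=True)
    attn = softmax(q @ s.T / temperature, axis=1)  # (Nq, Ns), rows sum to 1
    return attn @ support_labels  # (Nq, D)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    K, Ns, Nq, C = 3, 16, 4, 8  # timesteps, support tokens, query tokens, channels
    support = fuse_timesteps(rng.normal(size=(K, Ns, C)), [0.5, 0.0, -0.5])
    query = fuse_timesteps(rng.normal(size=(K, Nq, C)), [0.5, 0.0, -0.5])
    labels = rng.uniform(size=(Ns, 1))  # e.g. one depth value per support token
    print(match_labels(query, support, labels).shape)  # -> (4, 1)
```

Because each attention row sums to one, every prediction is a convex combination of the support labels, so this sketch can only interpolate labels it has seen; a practical adapter would typically learn the fusion weights and feature projections from the support data.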

👉 More information

🗞 Task-oriented Learnable Diffusion Timesteps for Universal Few-shot Learning of Dense Tasks

🧠 arXiv: https://arxiv.org/abs/2512.23210