The pursuit of energy-efficient computing drives innovation in novel computer architectures, and researchers are increasingly exploring designs that blend digital and analog components. Jason Ho, James A. Boyle, Linshen Liu, and colleagues at The University of Texas at Austin address a critical bottleneck in this field: the slow and cumbersome process of simulating and optimizing these complex systems. Their work introduces LASANA, a method that uses machine learning to build fast, accurate ‘surrogate models’ of analog circuits. These models predict the circuits' energy use, latency, and behavior without running computationally expensive circuit simulations for the whole system. The team trains the models on detailed circuit-simulation data and achieves speedups of up to three orders of magnitude over SPICE. Errors in energy, latency, and behavior stay in the single-digit percent range, paving the way for faster exploration and co-design of future analog and mixed-signal computing architectures.

Neuromorphic Computing Addresses Memory and Energy Limits

The increasing complexity and size of machine learning models are straining traditional computing architectures, particularly their memory bandwidth and energy consumption. Neuromorphic computing, inspired by the brain’s efficient processing, offers a promising alternative by enabling in-memory and event-driven computation. Analog spiking systems and compute-in-memory techniques built on memristive crossbars are increasingly used to reduce energy consumption and exploit the unique properties of emerging devices. Optimizing how the analog and digital domains are integrated requires careful co-design. That co-design is hampered by a lack of tools for fast, accurate modeling and simulation of large-scale hybrid architectures, because traditional analog component modeling relies on slow, low-level simulation methods that are impractical for complex systems.

Data-Driven Analog Surrogate Modeling with Machine Learning

Transistor-level simulators such as SPICE are accurate but prohibitively slow for large systems. Existing behavioral models, such as SystemVerilog Real Number Modeling and SystemC-AMS, simplify the underlying circuit calculations but require energy and performance estimates to be annotated manually. The researchers propose LASANA, a data-driven approach that uses machine learning to create lightweight analog surrogate models. These models estimate the energy, latency, and behavior of analog sub-blocks at the interfaces between analog and digital components. Given a circuit design, LASANA generates a representative dataset, trains machine learning predictors, and outputs C++ inference models.

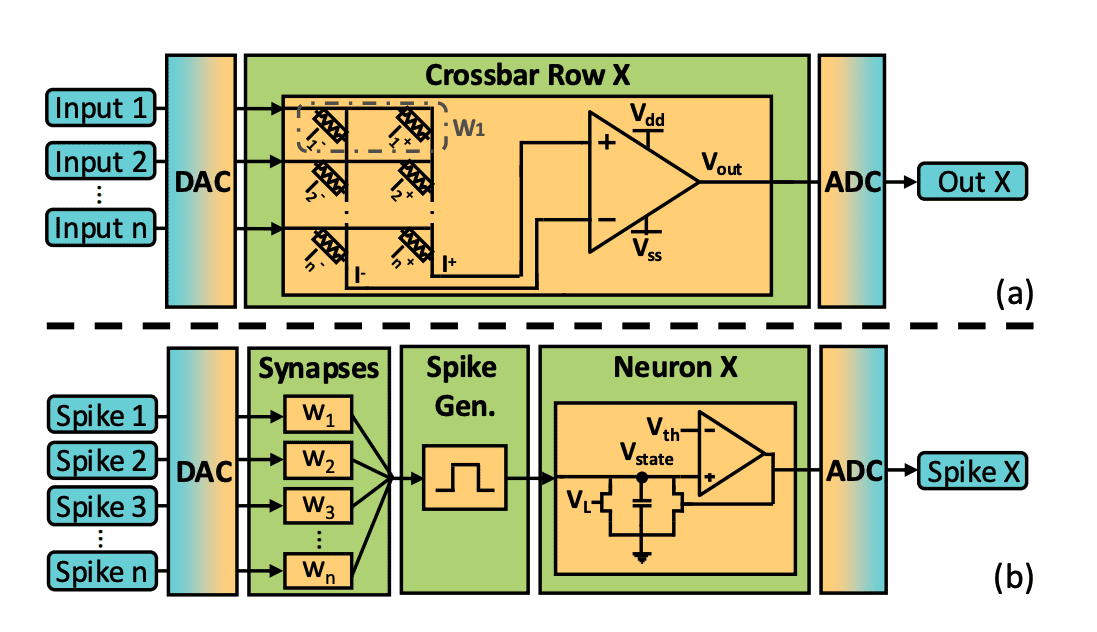
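The toy example below walks through the three stages end to end. It is not LASANA's implementation, and every name and constant in it is an assumption:

- `toy_circuit_sim` is a hypothetical stand-in for a SPICE run. It models a resistive bitcell whose event energy is V^2 * G * t plus an assumed constant leakage term.
- The predictor is an ordinary least-squares line, not the boosted trees or multi-layer perceptrons the authors evaluate.
- `emit_cpp` only illustrates the idea of exporting a trained predictor as C++ source.

```python
import random

T_STEP = 5e-9   # assumed event duration in seconds (hypothetical)
E_LEAK = 1e-15  # assumed fixed leakage energy per event in joules (hypothetical)


def toy_circuit_sim(v_in, g):
    """Hypothetical stand-in for one SPICE run: energy (J) of a single event
    with input voltage v_in (V) across a bitcell of conductance g (S)."""
    return v_in * v_in * g * T_STEP + E_LEAK


def generate_dataset(n_runs, seed=0):
    """Stage 1: sample random operating points and record (feature, energy) pairs."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_runs):
        v = rng.uniform(0.0, 0.8)
        g = rng.uniform(1e-6, 1e-4)
        data.append((v * v * g, toy_circuit_sim(v, g)))  # feature: V^2 * G
    return data


def train_linear(data):
    """Stage 2: fit energy = slope * feature + intercept by ordinary least squares."""
    n = len(data)
    x_mean = sum(x for x, _ in data) / n
    y_mean = sum(y for _, y in data) / n
    cov = sum((x - x_mean) * (y - y_mean) for x, y in data)
    var = sum((x - x_mean) ** 2 for x, _ in data)
    slope = cov / var
    return slope, y_mean - slope * x_mean


def emit_cpp(slope, intercept):
    """Stage 3: export the trained predictor as a standalone C++ function."""
    return (
        "double predict_energy(double v, double g) {\n"
        f"    return {slope!r} * v * v * g + {intercept!r};\n"
        "}\n"
    )


if __name__ == "__main__":
    slope, intercept = train_linear(generate_dataset(1000))
    print(emit_cpp(slope, intercept))
```

The toy simulator is exactly linear in the feature V^2 * G, so the fit recovers the assumed step time and leakage almost exactly. Real analog circuits are nonlinear and stateful, which is why richer learners are needed in practice.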

LASANA’s surrogate models estimate energy and performance with high accuracy and low overhead at the granularity of digital clock steps. They can either enhance existing behavioral models or serve as standalone replacements for analog circuit simulations. The authors describe this as the first approach to build machine learning-based surrogate models of analog circuit blocks at a coarse-grained event level. The framework improves runtime in two ways: it batches inferences across the whole system, and it merges inactive input periods into single events. Examining the trade-off between runtime and accuracy, the researchers found that boosted trees and multi-layer perceptrons perform best, with the better choice depending on the complexity of the circuit. The team evaluated LASANA on image recognition tasks using a crossbar array and a spiking neuron circuit. It achieved up to three orders of magnitude speedup over SPICE, with errors in energy, latency, and behavior below 7%, 8%, and 2%, respectively.
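The sketch below shows what merging inactive input periods could look like. It assumes a per-clock-step input trace in which `None` marks a step with no input activity; the `Event` record and `merge_inactive` function are hypothetical names, not LASANA's data structures. Each run of consecutive idle steps collapses into one event that carries its duration, so a predictor can be queried once per idle stretch instead of once per clock step.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Event:
    start: int              # index of the first clock step covered by this event
    length: int             # number of clock steps the event spans
    value: Optional[float]  # input value, or None for an idle (inactive) period


def merge_inactive(trace: List[Optional[float]]) -> List[Event]:
    """Convert a per-clock-step input trace into events, collapsing each run of
    consecutive idle steps (None) into a single event with its total length."""
    events: List[Event] = []
    for step, value in enumerate(trace):
        if value is None and events and events[-1].value is None:
            events[-1].length += 1  # extend the current idle period
        else:
            events.append(Event(start=step, length=1, value=value))
    return events


if __name__ == "__main__":
    # Seven clock steps become four events: two active steps and two idle stretches.
    for event in merge_inactive([0.3, None, None, None, 0.5, None, None]):
        print(event)
```

Active steps are deliberately left unmerged here, since each input change may alter the circuit's state and needs its own prediction.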

Crossbar and Neuron Circuit Prediction with Surrogate Models

Experiments were conducted on a 32 × 32 memristive crossbar array and an analog spiking neural network implementation. Among the predictors evaluated, CatBoost (a gradient-boosted decision tree library) generally performed best. At the system level, LASANA advances the simulation in discrete timesteps. At each step, the algorithm gathers the inputs and parameters of the circuits whose inputs changed into a batch of input events. It then runs the batch through the trained predictors, including the state and static-energy predictors, and uses the inference results to update each circuit's state and energy.
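The timestep loop can be sketched as follows. This is an illustration under stated assumptions, not the authors' algorithm:

- The three predictors are hypothetical callables standing in for trained CatBoost or MLP models.
- The feature row `(input, parameter, state)` is an assumed format.
- The split into active circuits (state and dynamic-energy predictors) and idle circuits (static-energy predictor only) is one plausible way to organize the loop.

The point it shows is batching: each predictor is called once per timestep for all relevant circuits, rather than once per circuit.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical feature row for one circuit: (input value, circuit parameter, current state).
Row = Tuple[float, float, float]
# A batch predictor maps many rows to one prediction per row in a single call.
BatchPredictor = Callable[[Sequence[Row]], List[float]]


def simulate(
    inputs: List[List[float]],
    params: List[float],
    predict_state: BatchPredictor,
    predict_dyn_energy: BatchPredictor,
    predict_static_energy: BatchPredictor,
) -> Tuple[List[float], List[float]]:
    """Advance every circuit through the timesteps in `inputs` (inputs[t][i] is
    the input of circuit i at step t); return final states and total energies."""
    n = len(params)
    state = [0.0] * n
    energy = [0.0] * n
    prev: List[Optional[float]] = [None] * n
    for step_inputs in inputs:
        # Separate circuits whose input changed from those that stayed idle.
        active = [i for i in range(n) if step_inputs[i] != prev[i]]
        idle = [i for i in range(n) if step_inputs[i] == prev[i]]
        if active:
            # One batched call per predictor covers every active circuit at once.
            batch = [(step_inputs[i], params[i], state[i]) for i in active]
            new_states = predict_state(batch)
            dyn = predict_dyn_energy(batch)
            for k, i in enumerate(active):
                state[i] = new_states[k]
                energy[i] += dyn[k]
        if idle:
            # Idle circuits keep their state and only accrue static energy.
            static = predict_static_energy(
                [(step_inputs[i], params[i], state[i]) for i in idle]
            )
            for k, i in enumerate(idle):
                energy[i] += static[k]
        prev = list(step_inputs)
    return state, energy
```

With real models, replacing many small per-circuit inference calls with one batched call per timestep is what amortizes the inference overhead across the system.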

Using LASANA, the team derived surrogate models for crossbar rows built from 1T-1R phase-change memory bitcells and for a 20-transistor spiking leaky integrate-and-fire (LIF) neuron. Simulations ran on a Linux system with a 16-core Intel i7 processor and 32 GB of memory. The crossbar dataset comprised 1000 random runs, each 500 ns long, and the LIF neuron dataset comprised 2000 random runs of the same length. Generating each dataset took several minutes and yielded a large number of events. The events were then split into training, validation, and test sets.
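The article does not say how the split was done, so the sketch below shows one reasonable option. It assigns whole runs, rather than individual events, to each split, so that events from the same 500 ns run never appear in both training and test data. The 70/15/15 ratios, the `(run_id, record)` event format, and the `split_by_run` name are all assumptions.

```python
import random
from typing import Dict, List, Sequence, Tuple

Event = Tuple[int, dict]  # (run_id, event record); record contents are hypothetical


def split_by_run(
    events: Sequence[Event],
    fractions: Tuple[float, float] = (0.7, 0.15),  # assumed train / validation shares
    seed: int = 0,
) -> Dict[str, List[Event]]:
    """Assign whole runs to train, validation, or test so that events from the
    same run never end up in two different splits."""
    run_ids = sorted({run_id for run_id, _ in events})
    random.Random(seed).shuffle(run_ids)
    n_train = int(fractions[0] * len(run_ids))
    n_val = int(fractions[1] * len(run_ids))
    assignment = {}
    for k, run_id in enumerate(run_ids):
        if k < n_train:
            assignment[run_id] = "train"
        elif k < n_train + n_val:
            assignment[run_id] = "val"
        else:
            assignment[run_id] = "test"  # remaining runs form the test set
    splits: Dict[str, List[Event]] = {"train": [], "val": [], "test": []}
    for event in events:
        splits[assignment[event[0]]].append(event)
    return splits
```

Splitting by run matters because consecutive events within a run share circuit state. An event-level shuffle could therefore leak near-duplicate samples into the test set and inflate the reported accuracy.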

For the crossbar, CatBoost achieved significantly lower mean squared error (MSE) and mean absolute percentage error (MAPE) on dynamic energy than the other models. For the LIF neuron, CatBoost and the multi-layer perceptron performed similarly on latency and dynamic energy. Correlation plots of predicted against simulated values illustrate the predictive accuracy of both models.
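The two error metrics named above can be written out directly. The sketch below uses the standard definitions. The example energies are made-up numbers chosen only to show the arithmetic; they are not results from the paper.

```python
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error, in the squared units of the target (e.g. pJ^2 for energy)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; undefined if any true value is 0."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    simulated = [2.0, 4.0]  # hypothetical simulated dynamic energies (pJ)
    predicted = [3.0, 2.0]  # hypothetical surrogate predictions (pJ)
    print(mse(simulated, predicted))   # 2.5
    print(mape(simulated, predicted))  # 50.0
```

The two metrics complement each other. MSE depends on absolute scale and is dominated by the largest energies, while MAPE weights every event by its relative error, which matters when event energies span orders of magnitude.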

LASANA Accelerates Neuromorphic Architecture Exploration

In conclusion, the paper proposes LASANA, an automated machine learning-based approach to surrogate modeling for large-scale neuromorphic architecture exploration. It can either annotate behavioral models with energy and latency or completely replace analog circuit simulations. The approach is designed to be general across circuit types and is demonstrated on a memristive crossbar array and a spiking leaky integrate-and-fire neuron. Experimental results show up to three orders of magnitude speedup over SPICE, with errors in energy, latency, and behavior below 7%, 8%, and 2%, respectively. Future work aims to integrate LASANA into existing digital simulators, apply it to a wider range of circuits, support device variability, and explore LASANA models for circuit-aware application training.

👉 More information

🗞 LASANA: Large-scale Surrogate Modeling for Analog Neuromorphic Architecture Exploration

🧠 DOI: https://doi.org/10.48550/arXiv.2507.10748