Researchers have developed the Omni Tsetlin Machine AutoEncoder (Omni TM-AE), a new embedding model for natural language processing. It achieves competitive performance in semantic similarity, sentiment classification, and document clustering while offering better interpretability and reusability than conventional ‘black box’ embedding techniques such as Word2Vec and GloVe.

Building artificial intelligence systems that are both powerful and understandable remains a central challenge in machine learning. Current large-scale models achieve impressive results but often operate as ‘black boxes’, which undermines trust and limits their use in critical domains. Researchers are therefore exploring models that retain performance while offering greater transparency. Ahmed K. Kadhim (University of Agder), Lei Jiao (University of Agder), Rishad Shafik (Newcastle University), and Ole-Christoffer Granmo (University of Agder) address this need with the Omni Tsetlin Machine AutoEncoder (Omni TM-AE), a novel embedding model described in their article “Omni TM-AE: A Scalable and Interpretable Embedding Model Using the Full Tsetlin Machine State Space”. The model fully utilises the state information within the Tsetlin Machine, a logic-based machine learning algorithm, to create reusable and interpretable representations of data.

Enhanced Word Embeddings via Tsetlin Machine Autoencoders

Natural language processing (NLP) continually requires models that balance predictive power with interpretability and scalability. Traditional word embedding techniques, such as Word2Vec and GloVe, often struggle to deliver high accuracy and readily understandable representations of meaning at the same time. Interpretable models, in turn, offer transparency but frequently underperform more complex, opaque approaches.

The Omni Tsetlin Machine AutoEncoder (Omni TM-AE) is a novel approach to these limitations. It places the Tsetlin Machine, a compact and efficient learning algorithm, inside an autoencoder framework. An autoencoder learns efficient codings of its input: it compresses the data into a lower-dimensional representation and then reconstructs the original from it. Autoencoders are usually built as artificial neural networks; here the Tsetlin Machine plays that role. Omni TM-AE constructs reusable and understandable embeddings through a streamlined, single-phase training process.
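
Tsetlin Machines work on binary inputs, so text is typically turned into 0/1 bag-of-words vectors first. The sketch below is a hypothetical illustration of that preprocessing, assuming an autoencoder-style task in which the model must reconstruct whether a target word occurs from the other words in a document. The vocabulary, documents and function names (`build_vocab`, `encode`, `target_examples`) are invented for illustration and are not taken from the paper.

```python
# Hypothetical sketch: binary bag-of-words encoding for a Tsetlin Machine.
# The masked-target framing is an illustrative assumption, not the paper's code.


def build_vocab(docs):
    """Map each distinct token to a column index (sorted for determinism)."""
    tokens = sorted({t for d in docs for t in d.split()})
    return {w: i for i, w in enumerate(tokens)}


def encode(doc, vocab):
    """Return a 0/1 vector with a 1 for every vocabulary word present in doc."""
    x = [0] * len(vocab)
    for t in doc.split():
        if t in vocab:
            x[vocab[t]] = 1
    return x


def target_examples(docs, vocab, target):
    """Pair each masked input vector with whether `target` occurred (1/0)."""
    col = vocab[target]
    examples = []
    for d in docs:
        x = encode(d, vocab)
        y = x[col]
        x[col] = 0  # hide the word the model must reconstruct
        examples.append((x, y))
    return examples


docs = ["the film was great", "the plot was dull", "great acting"]
vocab = build_vocab(docs)
for x, y in target_examples(docs, vocab, "great"):
    print(x, y)
```

Masking the target column keeps the task honest: the learner cannot simply copy the answer from its input and must rely on co-occurring words.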

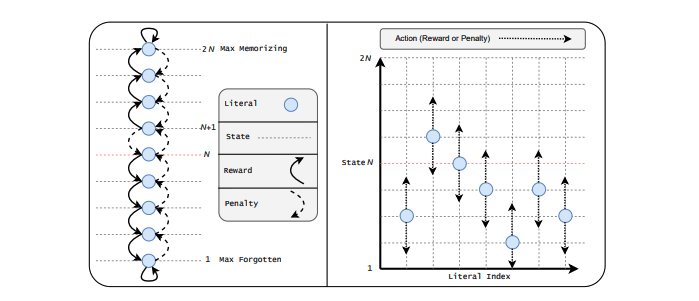

The core innovation lies in fully utilising the information contained in the Tsetlin Machine’s state matrix, which records, for every clause, the internal state of the automaton that decides whether each literal is included. The Tsetlin Machine represents information with binary literals: each input feature and its negation. Crucially, Omni TM-AE draws on the states of both positive and negated literals, so the model considers not only the presence of a feature but also its absence when constructing word embeddings.
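
To make literals and automaton states concrete, here is a minimal sketch of how a standard Tsetlin Machine clause is evaluated. It illustrates the general Tsetlin Machine mechanism, not Omni TM-AE specifically; the number of states `N` and the example state values are hypothetical.

```python
# Minimal sketch of standard Tsetlin Machine clause evaluation (not Omni TM-AE
# itself). Each clause has one Tsetlin automaton (TA) per literal; with 2*N
# states, a TA includes its literal when its state is greater than N.

N = 100  # hypothetical states per action, so TA states run from 1 to 2N


def literals(x):
    """Positive literals x_k followed by negated literals NOT x_k."""
    return list(x) + [1 - v for v in x]


def included(ta_states, n=N):
    """Indices of literals whose automaton sits on the include side."""
    return [i for i, s in enumerate(ta_states) if s > n]


def clause_output(x, ta_states, n=N):
    """AND of the included literals. all([]) is True, so an empty clause
    outputs 1 here; implementations differ on this convention."""
    lits = literals(x)
    return int(all(lits[i] == 1 for i in included(ta_states, n)))


# One clause over three features: includes x0 and NOT x1, i.e. "x0 AND NOT x1".
states = [150, 20, 30, 10, 170, 40]
print(included(states))  # literal indices 0 and 4
print(clause_output([1, 0, 1], states))
print(clause_output([0, 0, 1], states))
```

Only the include/exclude side of each state affects the clause output, which is why a row of the state matrix carries more information than the clause it produces.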

By explicitly representing information about the absence of features, Omni TM-AE achieves a more nuanced understanding of word meaning. This “Omni” aspect – encompassing both positive and negative feature representations – improves convergence during training and expands the model’s representational capacity, allowing it to capture more complex relationships between words. The model effectively learns what a word is not, as well as what it is.
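
One way to picture why the full state space carries more information than the final clauses is to compare two summaries of the same state matrix. The sketch below is hypothetical: the centring, scaling and averaging across clauses are illustrative assumptions, not the embedding formula from the paper. Columns hold positive literals followed by negated ones.

```python
import numpy as np

# Hypothetical sketch: contrast an include-only summary of a TA state matrix
# with a graded summary that keeps every literal's state. The scaling and
# averaging are illustrative assumptions, not the formula from the paper.


def include_only_embedding(state_matrix, n):
    """Per literal, count the clauses whose automaton includes it (state > n)."""
    s = np.asarray(state_matrix)
    return (s > n).sum(axis=0)


def full_state_embedding(state_matrix, n):
    """Per literal, average the centred state over clauses.

    States run from 1 to 2n; subtracting the midpoint n + 0.5 makes
    include-leaning automata positive and exclude-leaning ones negative,
    and dividing by n keeps values roughly within [-1, 1].
    """
    s = np.asarray(state_matrix, dtype=float)
    return ((s - (n + 0.5)) / n).mean(axis=0)


# Two clauses over two features; columns are x0, x1, NOT x0, NOT x1.
states = [[150, 90, 20, 160],
          [120, 95, 10, 180]]
print(include_only_embedding(states, n=100))
print(full_state_embedding(states, n=100))
```

In the include-only view, `x1` (nearly included) and `NOT x0` (firmly excluded) both score 0; the graded view separates them, and the negated-literal columns encode what the target word tends not to co-occur with.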

Experiments show that Omni TM-AE achieves competitive performance across a range of NLP tasks: semantic similarity assessment (determining how alike the meanings of two words are), sentiment classification (identifying the emotional tone of text), and document clustering (grouping similar documents together). In several benchmarks, the model outperforms established embedding techniques. Because the reasoning behind each embedding can be inspected, the approach is particularly suited to applications that require transparency and explainability, where users need to understand the basis for the model’s predictions and decisions.
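
Semantic similarity between word embeddings is commonly measured with cosine similarity. The sketch below shows that calculation and a nearest-neighbour ranking; the toy vectors and function names are hypothetical, and the exact evaluation protocol used for Omni TM-AE may differ.

```python
import numpy as np


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    u, v = np.asarray(u, dtype=float), np.asarray(v, dtype=float)
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    if denom == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return float(u @ v / denom)


def most_similar(word, embeddings, k=3):
    """Rank the other words by cosine similarity to `word` (highest first)."""
    scores = [(other, cosine(embeddings[word], vec))
              for other, vec in embeddings.items() if other != word]
    return sorted(scores, key=lambda p: p[1], reverse=True)[:k]


# Hypothetical toy vectors; real ones would come from a trained model.
embeddings = {
    "good": [1.0, 1.0, 0.0],
    "great": [1.0, 0.9, 0.1],
    "bad": [-1.0, -1.0, 0.0],
}
print(most_similar("good", embeddings))
```

Cosine similarity ignores vector length and compares only direction, which makes scores comparable across words whose embeddings differ in overall magnitude.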

👉 More information

🗞 Omni TM-AE: A Scalable and Interpretable Embedding Model Using the Full Tsetlin Machine State Space

🧠 DOI: https://doi.org/10.48550/arXiv.2505.16386