On April 7, 2025, researchers including Zhang Xi-Jia, Yue Guo, and Joseph Campbell published *Model-Agnostic Policy Explanations with Large Language Models*, introducing a novel method that makes intelligent agents more interpretable by generating straightforward natural-language explanations from observed behaviour data.

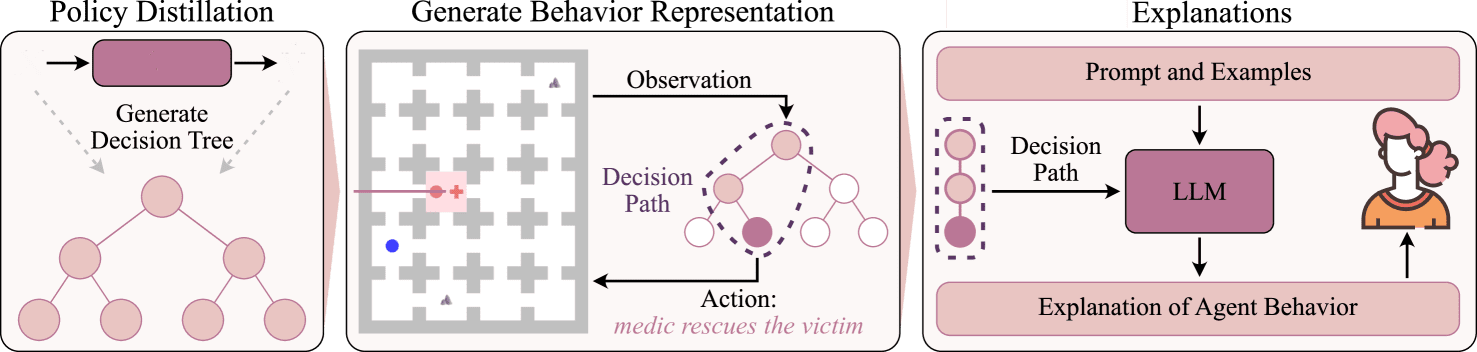

Intelligent agents such as robots need explainable behaviour to earn trust and support compliance, yet many rely on opaque neural networks that are hard to interpret. This research introduces a method that generates natural-language explanations solely from observed states and actions, without access to the agent's underlying model. A locally interpretable surrogate model, fitted to that observed behaviour, guides a large language model so that its explanations are accurate and contain fewer hallucinations.
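To make the surrogate-guided idea concrete, here is a minimal sketch, assuming the surrogate is a shallow decision tree fitted to observed (state, action) pairs and that the tree's decision path for one state is placed into the LLM prompt. The feature names, data, and the `fit_tree`, `decision_path`, and `build_prompt` helpers are hypothetical; the paper's actual surrogate, features, and prompt may differ, and the LLM call itself is omitted.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Node:
    action: str                      # majority action among training samples at this node
    feature: Optional[int] = None    # index of the split feature; None marks a leaf
    threshold: float = 0.0           # samples with state[feature] <= threshold go left
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def gini(actions: List[str]) -> float:
    """Gini impurity of a list of action labels."""
    n = len(actions)
    return 1.0 - sum((c / n) ** 2 for c in Counter(actions).values())


def fit_tree(states, actions, depth=0, max_depth=3) -> Node:
    """Fit a shallow CART-style tree imitating the observed state -> action mapping."""
    node = Node(action=Counter(actions).most_common(1)[0][0])
    if depth >= max_depth or len(set(actions)) == 1:
        return node
    best = None  # (weighted impurity, feature, threshold)
    for f in range(len(states[0])):
        for t in sorted({s[f] for s in states}):
            left = [a for s, a in zip(states, actions) if s[f] <= t]
            right = [a for s, a in zip(states, actions) if s[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(actions)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # no threshold separates the samples
        return node
    _, node.feature, node.threshold = best
    goes_left = [s[node.feature] <= node.threshold for s in states]
    node.left = fit_tree([s for s, g in zip(states, goes_left) if g],
                         [a for a, g in zip(actions, goes_left) if g], depth + 1, max_depth)
    node.right = fit_tree([s for s, g in zip(states, goes_left) if not g],
                          [a for a, g in zip(actions, goes_left) if not g], depth + 1, max_depth)
    return node


def decision_path(node: Node, state, names) -> Tuple[List[str], str]:
    """Return the rules the surrogate applies to `state` and the action it predicts."""
    rules = []
    while node.feature is not None:
        name = names[node.feature]
        if state[node.feature] <= node.threshold:
            rules.append(f"{name} <= {node.threshold}")
            node = node.left
        else:
            rules.append(f"{name} > {node.threshold}")
            node = node.right
    return rules, node.action


def build_prompt(state, action, rules, names) -> str:
    """Assemble a prompt that grounds the LLM in the surrogate's decision path."""
    obs = ", ".join(f"{n}={v}" for n, v in zip(names, state))
    listed = "\n".join(f"- {r}" for r in rules)
    return (f"Observed state: {obs}\nAgent action: {action}\n"
            f"Surrogate decision rules:\n{listed}\n"
            "Explain the action in plain language, citing only these rules.")


# Hypothetical observations of a navigating agent (illustrative data only).
names = ["dist_to_goal", "obstacle_ahead"]
states = [[5, 1], [4, 0], [1, 0], [3, 1], [2, 0], [6, 0]]
actions = ["turn", "forward", "forward", "turn", "forward", "forward"]

tree = fit_tree(states, actions, max_depth=2)
query, observed_action = [3, 1], "turn"
rules, predicted = decision_path(tree, query, names)
# Only ground the explanation if the surrogate reproduces the agent's action here.
assert predicted == observed_action
prompt = build_prompt(query, observed_action, rules, names)
print(prompt)
```

In this sketch, limiting the LLM to rules the surrogate actually applied is what curbs hallucination: the model is asked to put an existing decision path into words rather than invent its own reasons.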

Evaluations show that these explanations are more comprehensible and more correct than baseline explanations, as judged by both model-based and human evaluators. In user studies, participants given these explanations predicted the agent's future actions more accurately, indicating a better understanding of its behaviour.

This article explores the application of large language models (LLMs) in complex environments such as urban search and rescue (USAR) operations and grid-world navigation. With In-Context Learning (ICL) and vector state formats, LLMs are guided by examples in the prompt, rather than retrained, to make informed decisions from structured observations, enabling efficient obstacle avoidance and goal achievement without extensive fine-tuning.

Artificial intelligence (AI) is increasingly applied to complex real-world challenges. Traditional AI methods often struggle in dynamic environments, where decisions must adapt quickly to diverse observations. LLMs, known for their versatility, are emerging as powerful tools for such tasks through approaches like ICL and structured data presentation.

Combining ICL with vector state formats is a notable advance in AI decision-making. Observations are presented to the LLM in a clear, structured form, and worked examples placed in the prompt let the model adapt to a task without retraining, improving adaptability and efficiency in dynamic environments.
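A minimal sketch of the ICL idea, assuming a hypothetical grid-world state vector made of agent and goal coordinates plus four blocked-direction flags, with `up` increasing y. It only builds the few-shot prompt; the encoding, the example data, and the `format_state` and `build_icl_prompt` helpers are illustrative assumptions rather than the article's or the paper's format, and sending the prompt to an LLM is left out.

```python
# Hypothetical state encoding (an assumption for illustration):
# [agent_x, agent_y, goal_x, goal_y, blocked_up, blocked_down, blocked_left, blocked_right]
FIELDS = ["agent_x", "agent_y", "goal_x", "goal_y",
          "blocked_up", "blocked_down", "blocked_left", "blocked_right"]


def format_state(vec):
    """Render a state vector as a compact, labelled observation."""
    return "[" + ", ".join(f"{k}={v}" for k, v in zip(FIELDS, vec)) + "]"


def build_icl_prompt(examples, query):
    """Few-shot prompt: solved (state, action) pairs, then the unsolved query state."""
    parts = ["You control an agent in a grid world. Choose one of: up, down, left, right."]
    for vec, action in examples:
        parts.append(f"State: {format_state(vec)}\nAction: {action}")
    parts.append(f"State: {format_state(query)}\nAction:")  # the LLM completes this line
    return "\n\n".join(parts)


examples = [
    ([0, 0, 2, 0, 0, 1, 1, 0], "right"),  # goal to the right, path clear
    ([1, 1, 1, 3, 0, 0, 0, 0], "up"),     # goal directly above
    ([2, 2, 4, 2, 0, 0, 0, 1], "up"),     # goal to the right but blocked, so detour
]
query = [3, 0, 3, 2, 0, 1, 0, 0]
prompt = build_icl_prompt(examples, query)
print(prompt)
```

Because the examples live in the prompt, adapting this sketch to a different task means changing the examples, not updating the model's weights.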

Applying LLMs in USAR and grid navigation highlights their potential in real-world scenarios where quick, informed decisions are crucial. By leveraging ICL and vector state formats, these models offer a promising solution for future AI applications, paving the way for more sophisticated and adaptable systems across various domains.

This approach not only enhances current capabilities but also opens avenues for further research, potentially leading to even more advanced AI solutions in complex environments.

👉 More information

🗞 Model-Agnostic Policy Explanations with Large Language Models

🧠 DOI: https://doi.org/10.48550/arXiv.2504.05625