Researchers are tackling the critical challenge of ensuring safety in increasingly automated industrial inspection scenarios with InspecSafe-V1, a new multimodal benchmark dataset. Zeyi Liu, Shuang Liu, and Pengyu Han from Tsinghua University, alongside Jihai Min, Zhaoheng Zhang, Jun Cen et al. from TetraBOT Intelligence Co., Ltd. and DAMO Academy, Alibaba Group, created this resource to address the limitations of existing datasets, which often rely on simulated data or lack detailed annotations. InspecSafe-V1 stands out by using real-world data captured by 41 inspection robots across 2,239 sites, offering pixel-level segmentation and seven synchronised sensing modalities (including infrared, audio, and radar) to support robust scene understanding and comprehensive safety evaluation for industrial foundation models. The dataset should help advance the reliable perception systems on which predictive maintenance and truly autonomous inspection in complex industrial environments depend.

This groundbreaking work responds to the increasing demand for reliable perception in complex industrial environments, a key bottleneck for deploying predictive maintenance and autonomous inspection systems. The research team constructed InspecSafe-V1 by collecting data from the routine operations of 41 wheeled and rail-mounted inspection robots across 2,239 valid inspection sites, resulting in a comprehensive collection of 5,013 inspection instances. A significant innovation lies in the dataset’s provision of pixel-level segmentation annotations for key objects within visible-spectrum images, alongside detailed semantic scene descriptions and corresponding safety level labels aligned with practical inspection tasks.

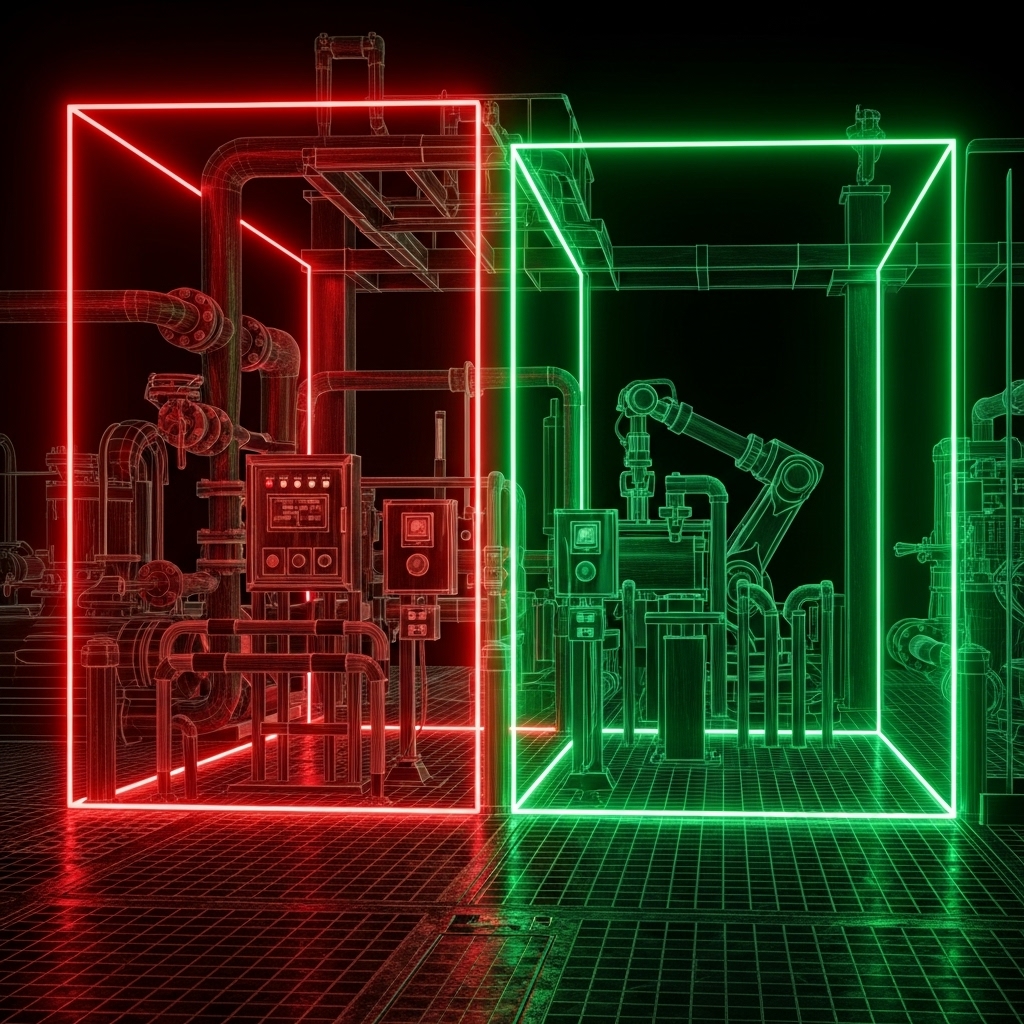

The dataset integrates seven synchronized sensing modalities: infrared video, audio, depth point clouds, radar point clouds, gas measurements, temperature, and humidity. Combining these modalities supports anomaly recognition, cross-modal fusion, and comprehensive safety assessment, moving beyond the limits of single-sensor datasets. Data were captured in five representative industrial scenarios (tunnels, power facilities, sintering equipment, oil and gas petrochemical plants, and coal conveyor trestles), giving the benchmark broad coverage. Because the data were gathered during actual robot inspections, the dataset directly addresses the shortcomings of existing datasets that rely on simulated environments or limited sensing capabilities.
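To make the composition of an inspection instance concrete, here is a minimal Python sketch of a per-instance record. The article names the seven modalities and five scenarios but not a file schema, so the identifiers, the `InspectionInstance` class, and its fields are hypothetical, not the dataset's actual format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# The seven synchronized modalities named in the article (identifiers are hypothetical).
MODALITIES = (
    "infrared_video",
    "audio",
    "depth_point_cloud",
    "radar_point_cloud",
    "gas",
    "temperature",
    "humidity",
)

# The five industrial scenarios covered (identifiers are hypothetical).
SCENARIOS = (
    "tunnel",
    "power_facility",
    "sintering_equipment",
    "oil_gas_petrochemical",
    "coal_conveyor_trestle",
)


@dataclass
class InspectionInstance:
    """Hypothetical record: one annotated RGB keyframe plus paths to synchronized modalities."""

    scenario: str
    rgb_keyframe: str
    # Maps a modality identifier to a file path, or None if that sensor was not fitted.
    modalities: Dict[str, Optional[str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject scenario or modality names outside the fixed vocabularies above.
        if self.scenario not in SCENARIOS:
            raise ValueError(f"unknown scenario: {self.scenario!r}")
        unknown = set(self.modalities) - set(MODALITIES)
        if unknown:
            raise ValueError(f"unknown modalities: {sorted(unknown)}")

    def available(self) -> List[str]:
        """Modalities recorded for this instance, in canonical order."""
        return [m for m in MODALITIES if self.modalities.get(m) is not None]

    def missing(self) -> List[str]:
        """Modalities absent for this instance (sensor setups differ between platforms)."""
        return [m for m in MODALITIES if self.modalities.get(m) is None]


inst = InspectionInstance("tunnel", "kf_0001.jpg", {"audio": "kf_0001.wav", "gas": None})
print(inst.available())  # ['audio']
```

Keeping missing modalities explicit (rather than silently absent) matters because, as the article notes, wheeled and rail-mounted platforms do not carry identical sensor sets.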

InspecSafe-V1's annotation scheme, which pairs object-level details with scene-level safety assessments, is designed to support AI systems capable of nuanced understanding and reasoning. The dataset specifically targets challenges of industrial inspection such as high noise levels, severe occlusion, and complex equipment layouts, which often degrade the performance of conventional AI models. As a standardized benchmark with reproducible results, it enables fair comparison and iterative improvement of industrial AI algorithms, which can accelerate the deployment of autonomous inspection solutions. The broader aim is to shift the focus from post-event detection to proactive early warning, improving safety and efficiency in critical industrial operations.

Robotic Data Collection and Safety Annotation

Scientists have unveiled InspecSafe-V1, a novel benchmark dataset designed to advance industrial inspection safety assessment, addressing limitations found in existing simulated or single-modality datasets. The study meticulously collected data from 2,239 valid inspection sites utilising 41 wheeled and rail-mounted inspection robots, resulting in 5,013 inspection instances. Each instance features pixel-level segmentation annotations for key objects within visible-spectrum images, alongside semantic scene descriptions and safety level labels aligned with practical inspection tasks. This comprehensive annotation strategy facilitates robust scene understanding and safety reasoning for industrial foundation models.

Researchers engineered a dual-platform robotic system, combining wheeled and rail-mounted robots to achieve comprehensive environmental coverage. Wheeled platforms provide close-range views of ground-level equipment, while rail-mounted robots travel along fixed tracks, enabling long-distance, continuous inspection that is unaffected by obstacles on the ground. This configuration is intended to give representative coverage of objects and safety elements across diverse industrial settings, including tunnels, power facilities, and petrochemical plants. The collected data span key equipment, basic components, infrastructure elements, and safety-related anomalies, mirroring real-world inspection processes.

The inspection platforms incorporate a multimodal perception system, central to the data acquisition process. Forward-facing RGB and depth cameras, alongside 3D LiDAR sensors, capture visual appearance, spatial geometry, and platform pose, enabling temporal synchronisation and spatial alignment of multimodal data. Thermal infrared cameras, co-located with RGB cameras, monitor surface temperature distributions to detect overheating and thermal anomalies, operating at 25 FPS with a horizontal field of view of 53.7° and vertical field of view of 39.7°. Millimeter-wave radar supplements LiDAR, enhancing robustness in challenging conditions like dust or low light, while acoustic sensors record machine noise and abnormal signals.
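The article says the platforms' data are temporally synchronised but does not describe the mechanism. One common approach, assumed here rather than taken from the paper, is nearest-timestamp matching: each RGB keyframe is paired with the closest thermal frame, and pairs farther apart than half a frame period (0.02 s at the thermal camera's 25 FPS) are rejected. The function `align_nearest` and its parameters are hypothetical.

```python
import bisect
from typing import List, Optional, Sequence


def align_nearest(
    ref_ts: Sequence[float],
    other_ts: Sequence[float],
    max_gap: float = 0.5 / 25,  # half a frame period at 25 FPS = 0.02 s
) -> List[Optional[int]]:
    """For each reference timestamp (e.g. an RGB keyframe), return the index of the
    nearest timestamp in other_ts (which must be sorted ascending), or None when the
    nearest one is more than max_gap seconds away."""
    matches: List[Optional[int]] = []
    for t in ref_ts:
        i = bisect.bisect_left(other_ts, t)
        # The nearest neighbour is either the last element before t or the first at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(other_ts[j] - t))
        matches.append(best if abs(other_ts[best] - t) <= max_gap else None)
    return matches


# Thermal frames at 25 FPS starting at t = 0 s; RGB keyframe timestamps in seconds.
thermal_ts = [k / 25 for k in range(10)]
print(align_nearest([0.0, 0.05, 0.33, 1.0], thermal_ts))  # [0, 1, 8, None]
```

Binary search keeps each lookup logarithmic in the number of frames, which helps on the long continuous runs recorded by rail-mounted robots.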

Gas sensors are configured to suit each scenario, monitoring combustible gases, toxic gases, or general environmental safety, with customisable measurement ranges and sampling frequencies. The RGB camera uses a 1/2.8-inch CMOS sensor whose horizontal field of view ranges from 55.8° at the wide end to 2.3° at full zoom, and the TM265-E1 depth camera measures effectively from 0.05 m to 5 m. The MID360 LiDAR, with a maximum detection range of 40 m at 10% reflectivity and 70 m at 80% reflectivity, further strengthens geometric perception. Although sensor setups differ between the wheeled and rail-mounted platforms, this multimodal configuration enables comprehensive anomaly recognition and cross-modal fusion for industrial safety assessment.
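Two of the specifications above translate directly into simple computations. The sketch below drops depth points outside the TM265-E1's quoted 0.05 m to 5 m effective range, assuming camera-frame points with z along the optical axis, and uses the standard pinhole relation f = (W/2) / tan(HFOV/2) to estimate the zoom ratio implied by the RGB camera's 55.8° to 2.3° horizontal field of view. The function names are hypothetical, and the 1920-pixel image width is an assumption that cancels out of the ratio.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]

# Effective measurement range quoted for the TM265-E1 depth camera.
DEPTH_MIN_M, DEPTH_MAX_M = 0.05, 5.0


def filter_depth_points(points: List[Point]) -> List[Point]:
    """Keep camera-frame points whose depth (z, assumed to lie along the optical axis)
    falls inside the effective range."""
    return [p for p in points if DEPTH_MIN_M <= p[2] <= DEPTH_MAX_M]


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole model: f = (W / 2) / tan(HFOV / 2), in pixels."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


# Zoom ratio implied by the RGB camera's 55.8 deg (wide) to 2.3 deg (tele) HFOV.
# The 1920 px width is an assumption; it cancels out of the ratio.
zoom_ratio = focal_length_px(1920, 2.3) / focal_length_px(1920, 55.8)
print(f"{zoom_ratio:.1f}x")  # 26.4x
```

The result means the telephoto end magnifies distant components roughly 26 times relative to the wide end, which is what lets a fixed-track robot read small gauges from a distance.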

InspecSafe-V1 multimodal dataset for robot inspection safety

Scientists have released InspecSafe-V1, a benchmark dataset for industrial inspection safety assessment, collected during the routine operations of 41 inspection robots across 2,239 valid inspection sites. It contains 5,013 inspection instances, each annotated with pixel-level segmentation of key objects in visible-spectrum images, along with semantic scene descriptions and safety level labels. Seven synchronized sensing modalities are integrated (infrared video, audio, depth point clouds, radar point clouds, gas measurements, temperature, and humidity), providing a comprehensive data source for anomaly recognition and safety evaluation. The dataset is organized into three top-level directories (Annotations, Other modalities, and Parameters) that give efficient access to visual annotations and multimodal sensory data.
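A loader for this layout might start from the three top-level directory names given in the article. Everything else in the sketch below is an assumption: that annotated keyframes sit in subfolders of `Annotations` (for example, one for normal and one for anomaly data) and that each keyframe's JSON annotation and text description share its file stem. The helper names are hypothetical.

```python
from pathlib import Path
from typing import Iterator, List, Tuple

# Top-level directories named in the article.
TOP_LEVEL = ("Annotations", "Other modalities", "Parameters")


def missing_top_level(root: str) -> List[str]:
    """Return the expected top-level directories that are absent under root."""
    base = Path(root)
    return [name for name in TOP_LEVEL if not (base / name).is_dir()]


def iter_annotated_keyframes(
    root: str, image_exts: Tuple[str, ...] = (".jpg", ".jpeg", ".png")
) -> Iterator[Tuple[str, Path, Path, Path]]:
    """Yield (subset, keyframe, json_annotation, text_description) for every keyframe
    under Annotations/ whose .json and .txt siblings exist. The subset is the first
    subfolder name; pairing files by stem is an assumed, not documented, convention."""
    ann = Path(root) / "Annotations"
    if not ann.is_dir():
        return
    for img in sorted(ann.rglob("*")):
        if not img.is_file() or img.suffix.lower() not in image_exts:
            continue
        js, txt = img.with_suffix(".json"), img.with_suffix(".txt")
        if js.is_file() and txt.is_file():
            parts = img.relative_to(ann).parts
            subset = parts[0] if len(parts) > 1 else ""
            yield subset, img, js, txt
```

Keyframes lacking either companion file are skipped rather than raising, so a partially downloaded copy can still be iterated.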

The Annotations directory contains both normal and anomaly data: representative keyframes sampled from the RGB video are saved alongside JSON files with fine-grained object-level annotations and plain-text files with scene-level semantic descriptions. The Other modalities directory stores the synchronized data, including RGB and thermal videos, 3D point clouds, acoustic signals, and environmental sensing data, all in widely used formats for compatibility. The distribution of inspection points across robot models is notably imbalanced: the rail-mounted platforms T3 C05 and T3 S05 account for 39.1% and 24.9% respectively, or 64.0% of all inspection points combined. The RGB modality covers 234 object categories with a pronounced long-tail distribution; the most frequent categories, led by Pipeline (12.9%), Traffic Cone (8.9%), and Stent (7.1%), together make up 39.3% of all objects.
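Category shares like those above are simple frequency ratios over all annotated objects. As a minimal sketch, assuming a LabelMe-style JSON layout with a `shapes` list whose entries carry a `label` field (the article does not specify the schema), labels can be pooled across files and ranked as follows; both function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def labels_from_annotation(doc: Dict) -> List[str]:
    """Extract object labels from one parsed annotation, assuming a LabelMe-style
    {"shapes": [{"label": ...}, ...]} layout (hypothetical schema)."""
    return [shape["label"] for shape in doc.get("shapes", [])]


def category_shares(
    labels: Iterable[str], top_k: int = 3
) -> Tuple[List[Tuple[str, float]], float]:
    """Return the top_k (category, percent) pairs and their cumulative percent."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return [], 0.0
    top = [(cat, 100.0 * n / total) for cat, n in counts.most_common(top_k)]
    return top, sum(pct for _, pct in top)
```

The same `category_shares` call also describes the robot-model imbalance if it is fed one robot-model identifier per inspection point instead of object labels.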

The dataset spans five industrial scenarios (tunnels, power facilities, sintering equipment, oil and gas petrochemical plants, and coal conveyor trestles) with differing ratios of normal to abnormal samples, from 78.2% normal / 21.8% abnormal in tunnel scenes to 65.1% normal / 34.9% abnormal in oil and gas environments. To ensure data quality, every pixel-level annotation went through two rounds of independent verification and was included in the final dataset only if its accuracy exceeded 95% in both rounds. Semantic annotations underwent a similar verification process, assessed on scene description accuracy, safety level relevance, and completeness of hazard descriptions; the overall safety level of a scene is set by the most severe hazard present. This process is designed to keep the core supervision signals consistent and accurate across all platforms, yielding a high-quality resource for research in industrial inspection and predictive maintenance.
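Both quality rules in this paragraph can be stated precisely in a few lines. The sketch below encodes them under assumptions: the four-level `Severity` scale is hypothetical (the article does not name the safety levels), the overall level is taken as the maximum over a scene's hazard severities, and an annotation is kept only if both independent review rounds score strictly above 95%.

```python
from enum import IntEnum
from typing import Iterable


class Severity(IntEnum):
    """Hypothetical ordinal safety scale; higher values are more severe."""

    NORMAL = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def overall_safety_level(hazards: Iterable[Severity]) -> Severity:
    """The overall level is that of the most severe hazard present (NORMAL if none)."""
    return max(hazards, default=Severity.NORMAL)


def accept_annotation(round1_acc: float, round2_acc: float, threshold: float = 0.95) -> bool:
    """Keep a pixel-level annotation only if both independent review rounds
    score strictly above the threshold."""
    return round1_acc > threshold and round2_acc > threshold
```

Using an ordered enum makes "most severe" a plain `max`, and the `default` argument covers hazard-free scenes without a special case.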

Real-world multimodal data for industrial safety

Researchers have introduced InspecSafe-V1, a benchmark dataset designed to advance industrial inspection safety assessment through improved scene understanding and safety reasoning. It addresses limitations of existing resources, which often rely on simulated data or single sensing modalities, or lack detailed object-level annotations. InspecSafe-V1 comprises data collected in real-world industrial environments by 41 inspection robots across 2,239 sites, yielding 5,013 inspection instances with pixel-level segmentation and safety level labels. Alongside visible-spectrum images, the dataset incorporates seven synchronized sensing modalities (infrared video, audio, depth and radar point clouds, gas measurements, temperature, and humidity), enabling comprehensive anomaly recognition and cross-modal data fusion.

This multimodal approach is crucial for assessing safety in complex industrial settings characterised by noise, occlusion, and varying illumination. The authors acknowledge limitations related to the specific industrial scenarios covered and potential biases inherent in the data collection process. Future work could focus on expanding the dataset to include a wider range of industrial environments and developing standardised evaluation protocols to facilitate broader adoption and algorithmic improvement.

This achievement represents a significant step towards enabling reliable predictive maintenance and autonomous inspection in challenging industrial environments. By providing a high-quality, real-world dataset with detailed annotations and multimodal sensing, InspecSafe-V1 facilitates the development of more robust and intelligent AI systems for industrial safety applications. The availability of this benchmark will allow for fairer comparison of different approaches and accelerate progress in this critical field.

👉 More information

🗞 InspecSafe-V1: A Multimodal Benchmark for Safety Assessment in Industrial Inspection Scenarios

🧠 ArXiv: https://arxiv.org/abs/2601.21173