Recent work shows that spending more computation at inference time improves the quality of samples from image generators, and Flow Matching has emerged as a powerful generative modelling technique with applications in vision and scientific modelling. However, ways to scale inference-time compute for Flow Matching remain largely unexplored. Adam Stecklov, Noah El Rimawi-Fine, and Mathieu Blanchette from McGill University and the Quebec AI Institute address this gap with new inference-time scaling procedures. Unlike other approaches that add complexity to sampling, these procedures preserve Flow Matching's simple and efficient sampling process, including its linear interpolant. The work extends inference-time compute scaling beyond image generation. In the first application of these methods to a scientific domain, unconditional protein generation, sample quality improves consistently as computational resources increase.

Accelerating Generative Model Inference and Improving Diversity

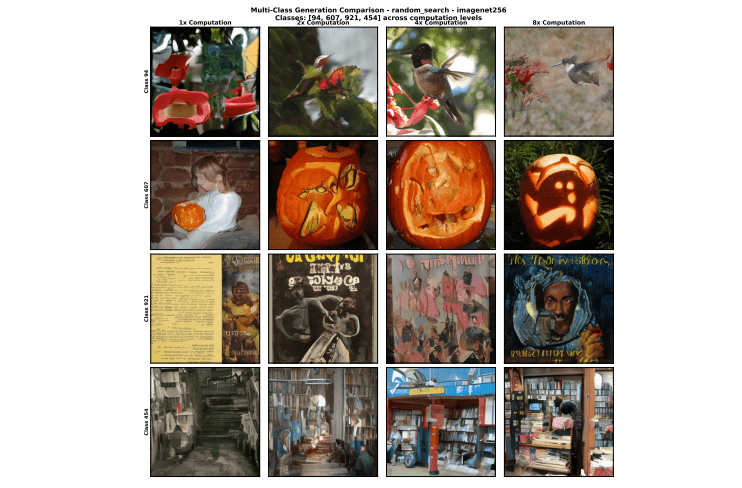

This research improves the efficiency and quality of generative modelling by scaling computation during sampling, without retraining the model. The authors explored techniques that keep sampling efficient while enhancing the quality and diversity of outputs. They applied these techniques to two tasks: image generation, with models such as SiT-XL, and protein structure generation, with FoldFlow. The work centres on flow matching, a generative modelling approach that learns a continuous normalizing flow transporting a simple noise distribution to the data distribution. Central to the method are score-orthogonal perturbations, a new way of injecting noise during sampling.

This noise is constructed to be orthogonal to the score function, the gradient of the log-density that guides sampling. Because such a perturbation has no component along the score, it leaves a sample's log-density unchanged to first order. The intent is to perturb sampling trajectories while keeping the evolving probability distribution consistent and sampling efficient. The researchers also combined random search with a noise search strategy to explore the sample space more effectively. They additionally used verifier-guided sampling, in which models that evaluate sample quality steer the search towards better results.

The Noise Search algorithm was first validated on ImageNet 256×256 image generation, where it improved sample quality at a relatively small additional computational cost. It was then applied to protein generation with FoldFlow2, where designability improved substantially as compute increased. Across these experiments, combining random search with noise search consistently improved sample quality and diversity, and verifier-guided sampling improved results further. Overall, sample quality rose steadily as inference-time computation increased.
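A minimal NumPy sketch of a score-orthogonal perturbation follows. It assumes a toy setting that is not from the paper: a 2-D standard Gaussian source and target, joined by the linear interpolant x_t = (1 − t) x_0 + t x_1, for which the flow-matching velocity and the score have closed forms. The helper names and the constant noise scale `sigma` (multiplied by √dt) are hypothetical, and the paper's actual noise schedule is not reproduced here. The core operation removes the score-parallel component of Gaussian noise: ε⊥ = ε − (ε·s / ‖s‖²) s.

```python
import numpy as np


def project_out_score(noise: np.ndarray, score: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Remove the component of each row of `noise` that lies along the matching row of `score`."""
    coef = np.sum(noise * score, axis=-1, keepdims=True) / (
        np.sum(score * score, axis=-1, keepdims=True) + eps
    )
    return noise - coef * score


def toy_velocity(x: np.ndarray, t: float) -> np.ndarray:
    # Exact flow-matching velocity E[x_1 - x_0 | x_t] when source and target are
    # both N(0, I) and x_t = (1 - t) x_0 + t x_1 (toy assumption).
    var_t = (1.0 - t) ** 2 + t ** 2
    return (2.0 * t - 1.0) / var_t * x


def toy_score(x: np.ndarray, t: float) -> np.ndarray:
    # Score (gradient of the log-density) of the toy marginal p_t = N(0, var_t * I).
    var_t = (1.0 - t) ** 2 + t ** 2
    return -x / var_t


def sample_with_orthogonal_noise(x0, n_steps=50, sigma=0.1, rng=None):
    """Euler integration from t = 0 to t = 1, adding score-orthogonal noise after
    each intermediate step. `sigma` is a hypothetical constant noise scale."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.array(x0, dtype=float)
    dt = 1.0 / n_steps
    for i in range(n_steps):
        t = i * dt
        x = x + dt * toy_velocity(x, t)  # deterministic flow step
        if sigma > 0 and i < n_steps - 1:  # no noise on the final step
            noise = rng.standard_normal(x.shape)
            x = x + sigma * np.sqrt(dt) * project_out_score(noise, toy_score(x, t + dt))
    return x
```

For example, `sample_with_orthogonal_noise(np.random.default_rng(0).standard_normal((4, 2)))` returns four 2-D samples. In this toy the score points towards the origin, so the projected noise is tangential. It moves samples around circles of (to first order) constant density rather than towards or away from the mode.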

Notably, this is the first demonstration of inference-time scaling in a scientific domain, with substantial gains in protein designability using the FoldFlow2 framework. It extends the benefits of scalable inference beyond image generation. The researchers' noise search algorithm keeps the linear interpolant of flow matching intact and consistently improves sample quality as compute increases. Evaluations on image generation and, for the first time, unconditional protein generation confirm the effectiveness of the approach and extend flow matching to scientific applications. The team also introduced a new noise schedule intended to balance sample diversity and quality. They report state-of-the-art results with a two-stage algorithm: random search first explores a range of starting points, then noise search refines the resulting trajectories. This combination is possible because noise search does not depend on a particular initial condition, so it can start from whatever points random search selects. The authors acknowledge that the gains may be constrained by the pretrained models they used, which were not explicitly trained for inference-time scaling.
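The two-stage procedure can be sketched in NumPy under heavy simplifying assumptions. The toy 2-D Gaussian setup, the verifier that favours points near (1.5, 1.5), the constant noise scale `sigma`, and the greedy look-ahead rule in stage 2 are illustrative choices, and every function name is hypothetical. The article does not describe the paper's exact noise search, noise schedule, or verifiers, so this is a generic sketch, not the authors' implementation.

```python
import numpy as np

# Toy setup (assumption): source and target are both N(0, I) in 2-D and
# x_t = (1 - t) x_0 + t x_1, so the velocity and score below are exact.


def velocity(x: np.ndarray, t: float) -> np.ndarray:
    return (2.0 * t - 1.0) / ((1.0 - t) ** 2 + t ** 2) * x


def score(x: np.ndarray, t: float) -> np.ndarray:
    return -x / ((1.0 - t) ** 2 + t ** 2)


def orthogonal_noise(x: np.ndarray, t: float, rng: np.random.Generator) -> np.ndarray:
    # Gaussian noise with its component along the score removed.
    s = score(x, t)
    n = rng.standard_normal(x.shape)
    return n - (n @ s) / (s @ s + 1e-12) * s


def rollout(x: np.ndarray, t_start: float, n_steps: int) -> np.ndarray:
    # Deterministic Euler integration of one sample from t_start to t = 1.
    dt = (1.0 - t_start) / n_steps
    for i in range(n_steps):
        x = x + dt * velocity(x, t_start + i * dt)
    return x


def verifier(x1: np.ndarray) -> float:
    # Hypothetical verifier: higher is better, favouring samples near (1.5, 1.5).
    return -float(np.sum((x1 - 1.5) ** 2))


def two_stage_search(n_random=16, n_candidates=8, n_steps=20, sigma=0.3, seed=0):
    rng = np.random.default_rng(seed)
    dt = 1.0 / n_steps

    # Stage 1: random search (best-of-N) over starting noises.
    starts = rng.standard_normal((n_random, 2))
    stage1 = [verifier(rollout(x0, 0.0, n_steps)) for x0 in starts]
    x = starts[int(np.argmax(stage1))]

    # Stage 2: noise search along the chosen trajectory. At each step, keep the
    # candidate whose deterministic rollout to t = 1 scores best.
    for i in range(n_steps - 1):
        t_next = (i + 1) * dt
        x_det = x + dt * velocity(x, i * dt)
        candidates = [x_det]  # the unperturbed step is always a candidate
        for _ in range(n_candidates - 1):
            perturbation = sigma * np.sqrt(dt) * orthogonal_noise(x_det, t_next, rng)
            candidates.append(x_det + perturbation)
        values = [verifier(rollout(c, t_next, n_steps - i - 1)) for c in candidates]
        x = candidates[int(np.argmax(values))]

    x1 = rollout(x, (n_steps - 1) * dt, 1)  # final deterministic step
    return x1, max(stage1), verifier(x1)
```

Calling `two_stage_search()` returns the final sample together with the verifier score after stage 1 and after stage 2. The unperturbed step is always among the candidates. Stage 2 can therefore only match or improve on the trajectory chosen in stage 1 (up to floating-point rounding), mirroring the idea that noise search refines whatever starting point random search hands it.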

👉 More information

🗞 Inference-Time Compute Scaling For Flow Matching

🧠 ArXiv: https://arxiv.org/abs/2510.17786