The first quantum computer to run without interruption has emerged from a Harvard laboratory, turning a long‑standing promise into a tangible milestone. For years, quantum machines have been held back by the fragility of their basic unit, the qubit, which can be lost as quickly as a stray photon leaves a trap. Those losses forced researchers to restart systems every few milliseconds or, at best, every thirteen seconds. Harvard’s team, led by physicist Mikhail Lukin, has now demonstrated a machine that ran for more than two hours, and the researchers believe it could run indefinitely. This achievement does more than extend runtime: it changes how a quantum processor can be built and operated, and it opens the door to practical applications in medicine, finance and cryptography.

A New Era of Quantum Persistence

Until now, the dominant narrative around quantum computing has focused on raw speed: how many operations a qubit can perform in a given time. The Harvard breakthrough shifts the focus to endurance. By enabling continuous operation, the new platform removes the bottleneck that forced earlier systems to restart, a process that not only wastes time but also disrupts the delicate coherence required for complex calculations. Imagine a drug‑discovery algorithm that can run for days on a single quantum processor, refining protein‑folding models without interruption. In finance, risk‑assessment models that previously ran on clusters of classical machines could be streamlined into a single, always‑on quantum node, cutting both latency and operational costs. The ability to sustain operations for hours, and potentially indefinitely, turns quantum computing from a laboratory curiosity into a viable service model.

The Technical Engine: Conveyor Belts and Tweezers

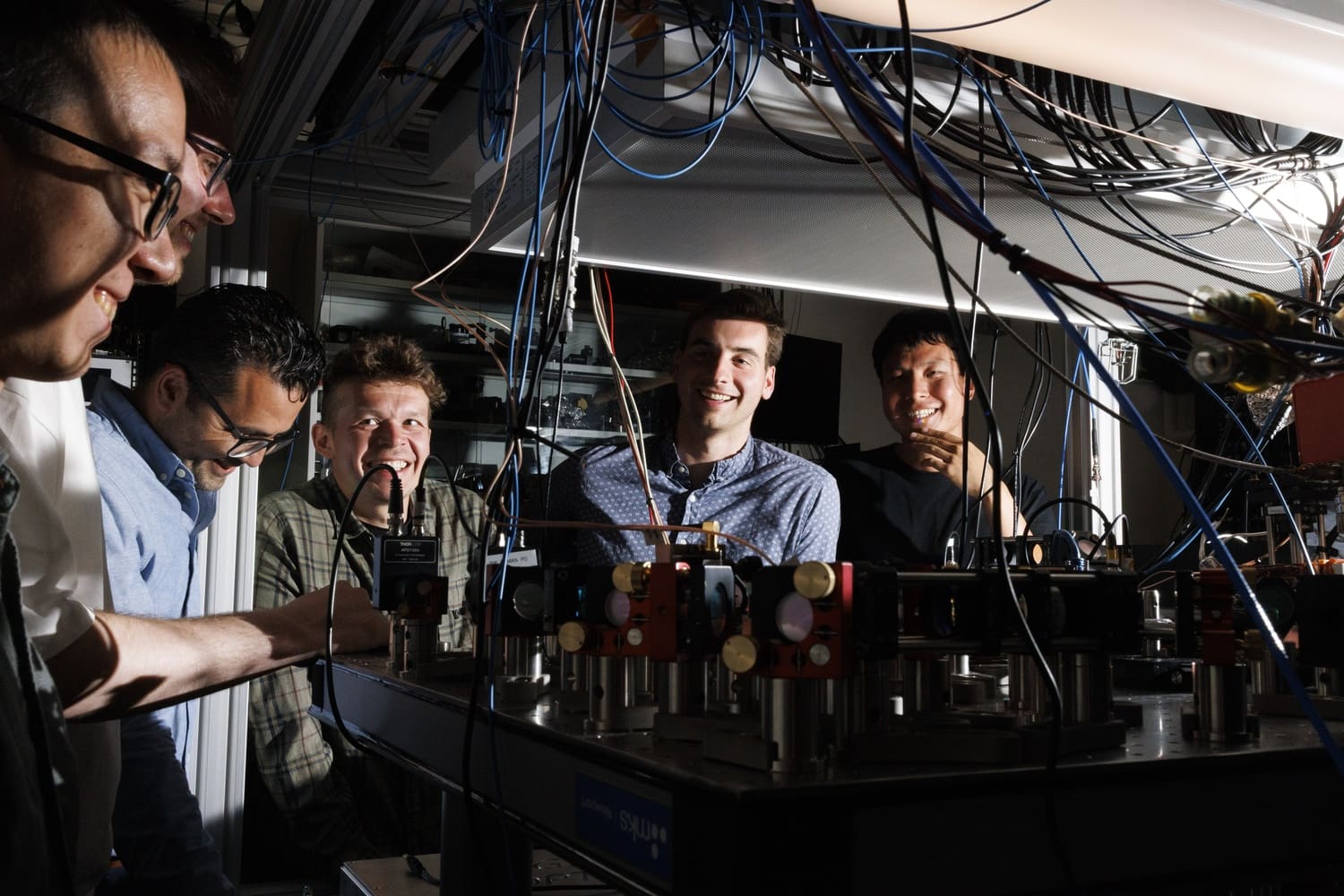

At the heart of this breakthrough lies a clever choreography of light and atoms. The Harvard team combined two optical tools: a lattice conveyor belt that moves atoms across a grid, and optical tweezers that can pick and place individual atoms with nanometre precision. Together, they create a self‑sustaining supply line that replenishes qubits as they are lost. The system hosts 3,000 qubits and injects 300,000 atoms every second, outpacing the loss rate and keeping the quantum state intact. This approach mirrors a factory line, where parts are constantly replaced to maintain output, but here the parts are quantum states that must remain coherent. The result is a dynamic architecture that does not merely tolerate qubit loss; it actively repairs it in real time. This design could be scaled further, potentially allowing future machines to grow from thousands to millions of qubits while maintaining continuous operation.
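The supply‑line arithmetic can be illustrated with a toy rate model. The sketch below is not the Harvard team's method: it only uses the two figures quoted above (3,000 qubits, 300,000 atoms injected per second) and assumes, purely for illustration, that each atom is lost independently with a mean lifetime of 10 seconds. The function names, the lifetime and the time step are all hypothetical.

```python
def break_even_lifetime_s(n_sites: int, reload_rate: float) -> float:
    """Shortest mean atom lifetime (seconds) at which supply still matches loss.

    A full array loses about n_sites / lifetime atoms per second, so the
    supply keeps up as long as lifetime >= n_sites / reload_rate.
    """
    return n_sites / reload_rate


def simulate_occupancy(
    n_sites: int = 3000,            # array size quoted in the article
    reload_rate: float = 300_000.0, # atoms per second quoted in the article
    lifetime_s: float = 10.0,       # ASSUMPTION: illustrative mean atom lifetime
    dt: float = 0.001,              # ASSUMPTION: simulation time step (seconds)
    duration_s: float = 60.0,
    replenish: bool = True,
) -> float:
    """Return the expected number of occupied sites after duration_s seconds."""
    occupied = float(n_sites)
    steps = int(round(duration_s / dt))
    for _ in range(steps):
        # Expected loss in one step: each atom escapes at rate 1 / lifetime_s.
        occupied -= occupied * dt / lifetime_s
        if replenish:
            # Refill empty sites, capped by the atoms delivered during this step.
            occupied += min(n_sites - occupied, reload_rate * dt)
    return occupied


if __name__ == "__main__":
    print("break-even lifetime (s):", break_even_lifetime_s(3000, 300_000.0))
    print("occupied sites with reloading:", simulate_occupancy(replenish=True))
    print("occupied sites without reloading:", simulate_occupancy(replenish=False))
```

Under the assumed 10‑second lifetime, a full array loses roughly 300 atoms per second, far below the quoted supply of 300,000 per second, so the modelled array stays essentially full. With reloading switched off, the same model empties almost completely within a minute. The real experiment involves effects this sketch ignores, such as preserving coherence while atoms are swapped in.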

Beyond the Lab: Implications and the Road Ahead

Harvard’s achievement arrives at a time when governments and corporations are pouring billions into quantum research. The new platform gives industry partners a concrete blueprint for building longer‑running machines, accelerating the timeline for practical quantum applications. The university’s own initiatives (its pioneering Ph.D. program, the partnership with Amazon Web Services for quantum networking, and the broader Quantum Initiative) position it as a hub where theory, experiment, and industry converge. The collaborative spirit is evident in the partnership with MIT’s Vladan Vuletić, who estimates that fully autonomous, never‑ending quantum computers could be operational in three years, a dramatic acceleration from the previous five‑year outlook.

The implications stretch beyond pure computation. In cryptography, continuous quantum processors could test the resilience of current encryption schemes against quantum attacks in real time, informing the transition to post‑quantum standards. In healthcare, real‑time quantum simulations could model complex biological systems, accelerating drug discovery pipelines. Even in climate modelling, the ability to run extensive simulations without downtime could improve the fidelity of predictive models. As the quantum community digests this development, the next steps will involve integrating error‑correction codes, scaling the qubit count, and ensuring that the supply chain of atoms remains robust. Harvard’s work has removed a critical hurdle, but the journey toward fully functional, industry‑grade quantum computers is still in its early chapters.

The long‑term payoff is clear: a quantum computer that can run continuously will redefine what is possible in computation. By turning a fleeting, fragile system into a stable, enduring platform, Harvard has laid the groundwork for a new era where quantum machines can be deployed as reliable services, driving innovation across science and industry.