xAI has announced Grok-1.5, an updated model with improved reasoning capabilities and a context window of up to 128,000 tokens. The model posts strong results on coding and math-related tasks, scoring 50.6% on the MATH benchmark and 90% on the GSM8K benchmark. It also scored 74.1% on the HumanEval benchmark, which evaluates code generation and problem-solving abilities. Grok-1.5 is built on a custom distributed training framework based on JAX, Rust, and Kubernetes. It will soon be available to early testers and existing Grok users on the 𝕏 platform.

Grok-1.5: Enhanced Reasoning and Long Context Understanding

The latest model from xAI, Grok-1.5, is set to offer improved reasoning capabilities and an extended context length of 128,000 tokens. This model, which will soon be available on the 𝕏 platform, builds on the progress made with the release of Grok-1’s model weights and network architecture two weeks ago.

Improved Performance in Coding and Math-Related Tasks

Grok-1.5 has shown significant improvements in coding and math-related tasks. In tests, the model achieved a 50.6% score on the MATH benchmark and a 90% score on the GSM8K benchmark. Together, these two benchmarks cover a wide range of problems, from grade school to high school competition level. Additionally, Grok-1.5 scored 74.1% on the HumanEval benchmark, which evaluates code generation and problem-solving abilities.

Enhanced Long Context Understanding

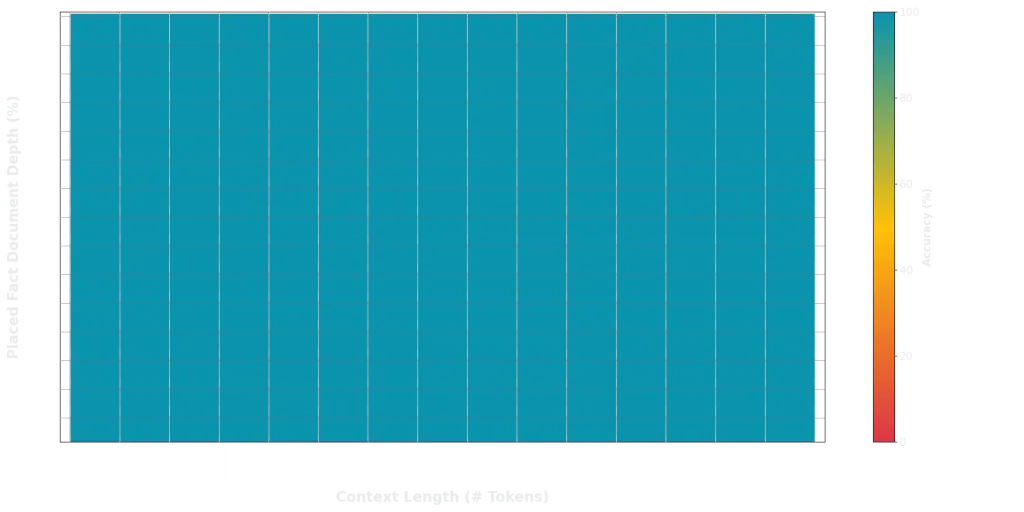

A notable feature of Grok-1.5 is its ability to process long contexts of up to 128K tokens within its context window. This is a significant increase from the previous context length, allowing the model to utilize information from substantially longer documents. The model’s ability to recall information from its context window is visualized in the graph above, where the x-axis represents the length of the context window and the y-axis represents the relative position of the fact to retrieve within the window. The graph is entirely green, indicating a 100% recall rate for every context length and every placement of the fact to retrieve.
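
The kind of recall test described above can be sketched in a few lines. This is only an illustration of the general "fact hidden in a long context" evaluation pattern, not xAI's actual harness: the function names, the filler text, the stand-in `echo_model`, and the choice to count context size in filler sentences rather than tokens are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple


def build_haystack(filler: List[str], needle: str, depth: float) -> str:
    """Place `needle` among the filler sentences at relative position `depth`
    (0.0 = start of the context, 1.0 = end)."""
    idx = int(depth * len(filler))
    return " ".join(filler[:idx] + [needle] + filler[idx:])


def recall_grid(
    model: Callable[[str], str],
    context_sizes: List[int],
    depths: List[float],
    needle: str,
    expected: str,
) -> Dict[Tuple[int, float], bool]:
    """Return one pass/fail cell per (context size, needle depth) pair."""
    grid = {}
    for size in context_sizes:
        # Assumption: context size is counted in filler sentences, not tokens.
        filler = [f"Filler sentence number {i}." for i in range(size)]
        for depth in depths:
            prompt = build_haystack(filler, needle, depth)
            # A cell passes if the model's reply contains the expected fact.
            grid[(size, depth)] = expected in model(prompt)
    return grid


# Hypothetical stand-in "model" that simply echoes its prompt,
# so every cell of the grid passes.
echo_model = lambda prompt: prompt
grid = recall_grid(echo_model, [10, 100], [0.0, 0.5, 1.0],
                   needle="The secret code is 7421.", expected="7421")
recall_rate = sum(grid.values()) / len(grid)
```

Each key of `grid` corresponds to one cell of the graph: the first element is the context size (x-axis) and the second is the relative position of the fact (y-axis). An entirely green graph corresponds to every cell being `True`.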

Grok-1.5 Infrastructure: Robust and Flexible

Grok-1.5 is built on a custom distributed training framework based on JAX, Rust, and Kubernetes. This robust and flexible infrastructure allows the team to prototype ideas and train new architectures at scale with minimal effort. A significant challenge in training Large Language Models (LLMs) on large compute clusters is maximizing reliability and uptime of the training job. The custom training orchestrator of Grok-1.5 ensures that problematic nodes are automatically detected and ejected from the training job. The team has also optimized checkpointing, data loading, and training job restarts to minimize downtime in the event of a failure.
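
xAI has not published how its orchestrator detects bad nodes, so the following is only a toy sketch of one common pattern: ejecting any node whose heartbeat goes stale. The class name `ToyOrchestrator`, the `heartbeat_timeout` parameter, and the node names are all hypothetical and are not taken from the announcement.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ToyOrchestrator:
    """Tracks node heartbeats and ejects nodes that stop reporting."""
    heartbeat_timeout: float  # seconds of silence tolerated (hypothetical value)
    last_seen: Dict[str, float] = field(default_factory=dict)
    ejected: Set[str] = field(default_factory=set)

    def heartbeat(self, node: str, now: float) -> None:
        # Ejected nodes stay out of the job until an operator re-admits them.
        if node not in self.ejected:
            self.last_seen[node] = now

    def eject_stale(self, now: float) -> Set[str]:
        """Remove every node whose last heartbeat is older than the timeout."""
        stale = {n for n, t in self.last_seen.items()
                 if now - t > self.heartbeat_timeout}
        for n in stale:
            del self.last_seen[n]
        self.ejected |= stale
        return stale

    def healthy_nodes(self) -> List[str]:
        return sorted(self.last_seen)


orch = ToyOrchestrator(heartbeat_timeout=30.0)
orch.heartbeat("node-a", now=0.0)
orch.heartbeat("node-b", now=0.0)
orch.heartbeat("node-a", now=40.0)     # node-b has gone silent
removed = orch.eject_stale(now=45.0)   # node-b exceeds the 30 s timeout
```

In a real system the training job would then restart from the most recent checkpoint on the remaining healthy nodes, which is why fast checkpointing and restarts matter for overall uptime.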

Looking Forward: Grok-1.5 Rollout and Future Developments

Grok-1.5 will soon be available to early testers, and xAI is eager to receive feedback to help improve the model. As Grok-1.5 is gradually rolled out to a wider audience, several new features will be introduced.

A note on the benchmark figures: any GPT-4 scores cited alongside these results are taken from the March 2023 release of GPT-4. For the MATH and GSM8K benchmarks, maj@1 results are reported, and for HumanEval, pass@1 scores are reported.
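
For readers unfamiliar with these metrics, here is a minimal sketch of how pass@k and maj@k are typically computed. The `pass_at_k` function uses the unbiased estimator commonly paired with HumanEval; the function names and the sample answers are illustrative assumptions, not xAI's evaluation code.

```python
from collections import Counter
from math import comb
from typing import List


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem from n generated samples, c of them correct.

    This is 1 - C(n-c, k) / C(n, k): the probability that at least one of
    k samples drawn without replacement from the n is correct.
    """
    if n - c < k:
        return 1.0  # too few incorrect samples to fill a draw of size k
    return 1.0 - comb(n - c, k) / comb(n, k)


def maj_at_k(sampled_answers: List[str], reference: str) -> bool:
    """maj@k: the most frequent of k sampled final answers must match the reference."""
    winner, _ = Counter(sampled_answers).most_common(1)[0]
    return winner == reference


# With k = 1 both metrics reduce to "is a single attempt correct?".
single_pass = pass_at_k(n=1, c=1, k=1)      # one sample, and it passes the tests
single_vote = maj_at_k(["42"], "42")         # one sampled answer, and it is right
voted = maj_at_k(["7", "42", "42"], "42")   # maj@3: "42" wins the vote
```

For k = 1 the estimator simplifies to c / n, so a reported pass@1 of 74.1% means that roughly 74.1% of single attempts pass the HumanEval unit tests.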
