Structural plasticity, the brain’s ability to form new connections and eliminate weak ones, remains largely unexploited in artificial neural networks, despite its crucial role in biological learning and recovery. James C. Knight, Johanna Senk, and Thomas Nowotny, from the University of Sussex and Jülich Research Centre, address this gap with a new framework for implementing structural plasticity in GPU-accelerated spiking neural networks. Their work overcomes a limitation of existing machine learning tools, which struggle with the computational demands of sparse connectivity, and builds on the GeNN simulator to achieve significant speed-ups in both supervised and unsupervised learning. The team shows that their sparse classifiers train up to ten times faster while performing comparably to dense models, and that the framework simulates topographic map formation faster than real time, offering a powerful new tool for exploring the benefits of sparsity in neural networks and beyond.

GPU Acceleration of Spiking Neural Network Simulations

This research builds on GeNN (GPU-enhanced Neuronal Networks), a code generation framework for accelerating brain simulations, particularly of spiking neural networks (SNNs), and on its extensions mlGeNN and PyGeNN. The core focus is efficient, scalable simulation on modern hardware, specifically GPUs, which addresses a significant challenge in neuroscience and artificial intelligence: the immense computational cost of simulating large-scale brain models. Spiking neural networks, inspired by biological brains, offer potential advantages in energy efficiency and biological plausibility, but are challenging to train and to simulate efficiently. GeNN, developed by members of the same team, tackles this by automatically translating network models into optimized CUDA code for GPUs, avoiding the performance bottlenecks of interpreted simulation environments.
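To make the idea of code generation concrete, here is a toy sketch: a neuron model is described as a few C-like code snippets plus parameters, and a generator splices them into the source of a CUDA kernel that updates one neuron per thread. The model dictionary, the kernel template and `generate_kernel` are hypothetical illustrations, not GeNN's actual model format or generated code.

```python
import re
from string import Template

# Hypothetical model description: state variables, parameters and C-like
# snippets written in terms of them (not GeNN's real model format).
LIF_MODEL = {
    "name": "LIF",
    "vars": {"V": "float"},
    "params": {"tau": 20.0, "v_thresh": 1.0},
    "update_code": "V += (-V + Isyn) * (DT / tau);",
    "threshold_code": "V >= v_thresh",
}

# CUDA kernel template: one thread updates one neuron.
KERNEL_TEMPLATE = Template("""\
__global__ void update${name}(${var_args}, const float *d_isyn, bool *d_spiked, unsigned int n)
{
    const unsigned int id = blockIdx.x * blockDim.x + threadIdx.x;
    if (id < n) {
${load_vars}
        const float Isyn = d_isyn[id];
        ${update_code}
        d_spiked[id] = (${threshold_code});
${store_vars}
    }
}
""")


def substitute_constants(code, constants):
    """Replace whole-word identifiers with float literals so the compiler sees constants."""
    for name, value in constants.items():
        code = re.sub(rf"\b{name}\b", f"{value}f", code)
    return code


def generate_kernel(model, dt=1.0):
    """Return CUDA source for the model's per-neuron update kernel."""
    constants = dict(model["params"], DT=dt)
    variables = model["vars"].items()
    return KERNEL_TEMPLATE.substitute(
        name=model["name"],
        var_args=", ".join(f"{ctype} *d_{var}" for var, ctype in variables),
        load_vars="\n".join(f"        {ctype} {var} = d_{var}[id];" for var, ctype in variables),
        store_vars="\n".join(f"        d_{var}[id] = {var};" for var, _ in variables),
        update_code=substitute_constants(model["update_code"], constants),
        threshold_code=substitute_constants(model["threshold_code"], constants),
    )


if __name__ == "__main__":
    print(generate_kernel(LIF_MODEL))
```

Baking parameters into the source as literals is one reason generated code can outpace a generic, interpreted simulator: the compiler sees constants and straight-line code rather than runtime lookups.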

mlGeNN extends GeNN for machine learning applications, enabling faster training and inference of SNNs, while PyGeNN provides a user-friendly Python interface for defining and simulating networks. GeNN also relies on event-driven simulation, which exploits the sparsity of spiking activity by processing only the synapses of neurons that have spiked. The results demonstrate that GeNN and its extensions achieve significant speed-ups over traditional simulation methods and other SNN frameworks by harnessing GPU parallelism. The code generation approach allows simulations to scale to larger networks and more complex models, and highlights the benefits of exploiting sparsity in both connectivity and activity to reduce computational load.
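The gain from event-driven simulation shows up even in a minimal pure-Python sketch (the function names are illustrative, not GeNN's): a dense, time-driven update multiplies every weight by the spike vector on every timestep, whereas an event-driven update visits only the rows of neurons that actually spiked. Both produce the same synaptic input, but the work scales with the number of spikes rather than the number of neurons.

```python
def propagate_dense(weights, spike_vector):
    """Time-driven update: touch every synapse, as a dense matrix-vector product would."""
    num_post = len(weights[0])
    isyn = [0.0] * num_post
    ops = 0
    for pre, row in enumerate(weights):
        for post, w in enumerate(row):
            isyn[post] += w * spike_vector[pre]
            ops += 1
    return isyn, ops


def propagate_event_driven(weights, spiking_ids):
    """Event-driven update: only the outgoing synapses of spiking neurons are touched."""
    num_post = len(weights[0])
    isyn = [0.0] * num_post
    ops = 0
    for pre in spiking_ids:
        for post, w in enumerate(weights[pre]):
            isyn[post] += w
            ops += 1
    return isyn, ops


if __name__ == "__main__":
    weights = [[0.1, 0.2, 0.3],
               [0.4, 0.5, 0.6],
               [0.7, 0.8, 0.9],
               [1.0, 1.1, 1.2]]
    spike_vector = [0, 1, 0, 0]  # only presynaptic neuron 1 spiked
    dense_isyn, dense_ops = propagate_dense(weights, spike_vector)
    event_isyn, event_ops = propagate_event_driven(weights, [1])
    print(dense_isyn, dense_ops)  # 12 operations
    print(event_isyn, event_ops)  # same input, 3 operations
```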

The framework integrates with popular machine learning libraries and standard datasets, and provides tools for describing and sharing network models. This research provides a powerful framework for accelerating brain simulations and for developing more realistic and efficient spiking neural networks. Its focus on code generation, GPU acceleration, and event-driven techniques represents a significant step forward in computational neuroscience and neuromorphic computing, contributing to the broader goal of building brain-inspired AI systems that are both powerful and energy-efficient.

Sparse Structural Plasticity with GPU Acceleration

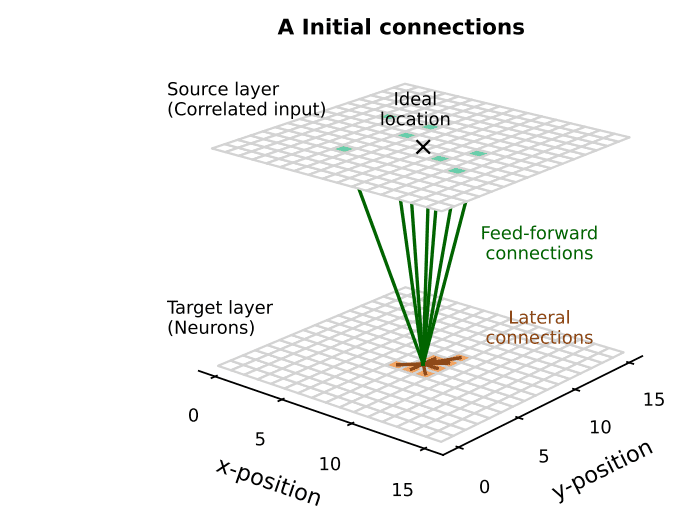

This study presents a new framework for GPU-accelerated structural plasticity, inspired by the way biological brains refine their connections during learning and recovery. The researchers tackle the computational cost of large neural network models by focusing on sparse connectivity, in which only essential connections are kept. They extended the GeNN simulator to support dynamic structural changes, using a ‘ragged matrix’ data structure to represent sparse connections: each row holds the synapses of one presynaptic neuron and is padded to a fixed maximum row length. To maximize parallelism, one CUDA thread is allocated per column of the ragged matrix; when neurons spike, each thread reads the synaptic weight stored in its column of the spiking neurons’ rows and accumulates that input into the postsynaptic neuron using atomic operations.
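The one-thread-per-column scheme can be emulated serially in Python. This is a sketch under assumptions: the `RaggedMatrix` layout (flattened `ind` and `weight` arrays of size `num_pre * max_row_length`, plus a `row_length` per row) follows the description of a padded ragged matrix, the outer loop stands in for CUDA threads, and `+=` stands in for `atomicAdd`.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RaggedMatrix:
    """Sparse connectivity: row i holds the synapses of presynaptic neuron i."""
    max_row_length: int
    row_length: List[int]   # number of valid synapses in each row
    ind: List[int]          # postsynaptic index of each slot (num_pre * max_row_length)
    weight: List[float]     # weight of each slot, same layout as ind


def propagate_spikes(matrix, spiking_ids, num_post):
    """Accumulate synaptic input, emulating one GPU thread per ragged-matrix column."""
    isyn = [0.0] * num_post
    for thread_id in range(matrix.max_row_length):   # each iteration = one CUDA thread
        for pre in spiking_ids:
            if thread_id < matrix.row_length[pre]:   # padding slots are skipped
                idx = pre * matrix.max_row_length + thread_id
                # Different threads can target the same postsynaptic neuron, so on
                # the GPU this accumulation would need an atomicAdd.
                isyn[matrix.ind[idx]] += matrix.weight[idx]
    return isyn


if __name__ == "__main__":
    # 3 presynaptic neurons, up to 2 synapses each; row 1 has one padding slot.
    m = RaggedMatrix(max_row_length=2,
                     row_length=[2, 1, 2],
                     ind=[0, 2, 1, 0, 2, 1],
                     weight=[0.5, 1.0, 0.25, 0.0, 2.0, 0.5])
    print(propagate_spikes(m, spiking_ids=[0, 2], num_post=3))  # [0.5, 0.5, 3.0]
```

In this example, neurons 0 and 2 both project to postsynaptic neuron 2 via different columns, which is exactly the write conflict that the atomic operations resolve on the GPU.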

Because each row has spare capacity, the ragged matrix also allows connectivity to be updated quickly without reallocating memory: new synapses are added at the end of a row, and removed synapses are replaced by swapping in another synapse from the same row. To support diverse plasticity rules, the researchers gave these updates access to synaptic and neuronal variables, extending PyGeNN with a ‘custom connectivity updates’ primitive that lets users define structural plasticity rules alongside their own neuron and synapse models. The system reduced training time for sparse classifiers by up to 10x while maintaining performance comparable to dense models, and enabled faster-than-real-time simulations of topographic map formation, demonstrating the scalability of the approach. This work establishes a foundation for building more efficient and biologically realistic neural networks capable of adapting and learning in complex environments.
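A minimal sketch of rewiring a padded ragged matrix without reallocation, assuming that "swapping" means moving the last synapse of a row into the slot being freed so that each row stays contiguous. `DynamicRaggedMatrix` and its `add_synapse` and `remove_synapse` methods are hypothetical helpers, not PyGeNN's custom connectivity update API.

```python
class DynamicRaggedMatrix:
    """Fixed-capacity sparse connectivity; rows are never reallocated."""

    def __init__(self, num_pre, max_row_length):
        self.max_row_length = max_row_length
        self.row_length = [0] * num_pre
        self.ind = [0] * (num_pre * max_row_length)
        self.weight = [0.0] * (num_pre * max_row_length)

    def row(self, pre):
        """Return the (post, weight) pairs currently stored in row `pre`."""
        start = pre * self.max_row_length
        stop = start + self.row_length[pre]
        return list(zip(self.ind[start:stop], self.weight[start:stop]))

    def add_synapse(self, pre, post, weight):
        """Append a synapse to the end of row `pre`, using its spare capacity."""
        if self.row_length[pre] == self.max_row_length:
            raise ValueError(f"row {pre} is full")
        idx = pre * self.max_row_length + self.row_length[pre]
        self.ind[idx] = post
        self.weight[idx] = weight
        self.row_length[pre] += 1

    def remove_synapse(self, pre, col):
        """Remove the synapse in slot `col` by overwriting it with the row's last synapse."""
        if not 0 <= col < self.row_length[pre]:
            raise IndexError(f"column {col} is not in use in row {pre}")
        start = pre * self.max_row_length
        last = start + self.row_length[pre] - 1
        self.ind[start + col] = self.ind[last]
        self.weight[start + col] = self.weight[last]
        self.row_length[pre] -= 1


if __name__ == "__main__":
    m = DynamicRaggedMatrix(num_pre=2, max_row_length=3)
    for post, w in [(5, 0.1), (7, 0.2), (9, 0.3)]:
        m.add_synapse(0, post, w)
    m.remove_synapse(0, 0)      # slot 0 now holds the former last synapse
    print(m.row(0))             # [(9, 0.3), (7, 0.2)]
    m.add_synapse(0, 4, 0.4)    # reuses the freed capacity
    print(m.row(0))             # [(9, 0.3), (7, 0.2), (4, 0.4)]
```

Swap-removal does not preserve the order of synapses within a row, which is harmless for spike propagation because every slot carries its own postsynaptic index. Both operations touch a single row in constant time, so many rows can be rewired in parallel.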

Sparse Connectivity Accelerates Spiking Neural Networks

This work presents a new framework for implementing and accelerating structural plasticity in spiking neural networks (SNNs), achieving significant performance gains during both training and simulation. The researchers developed a flexible system within the GeNN simulator to efficiently manage sparse, dynamically changing connectivity, inspired by the structural plasticity observed in biological brains. At its core is a ‘ragged matrix’ data structure, which allows spikes to be processed in parallel and network connections to be updated efficiently on GPUs. Experiments show that training with this sparse connectivity is up to 10x faster than with traditional dense models, while performance remains comparable.
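A back-of-the-envelope comparison shows why the ragged layout pays off. Assuming 4-byte weights, 4-byte postsynaptic indices and 4-byte row lengths (real index widths may differ), a dense matrix stores `num_pre * num_post` weights, whereas a ragged matrix stores a weight and an index for each of `num_pre * max_row_length` slots plus one row length per presynaptic neuron. The function names are illustrative.

```python
def dense_bytes(num_pre, num_post, weight_bytes=4):
    """Memory for a dense weight matrix."""
    return num_pre * num_post * weight_bytes


def ragged_bytes(num_pre, max_row_length, weight_bytes=4, index_bytes=4, length_bytes=4):
    """Memory for a padded ragged matrix: weight + index per slot, plus row lengths."""
    slots = num_pre * max_row_length
    return slots * (weight_bytes + index_bytes) + num_pre * length_bytes


if __name__ == "__main__":
    # 1000 x 1000 neurons with at most 100 synapses per presynaptic neuron (10% density)
    print(dense_bytes(1000, 1000))   # 4000000
    print(ragged_bytes(1000, 100))   # 804000
```

At 10% density the ragged matrix needs about a fifth of the dense memory, and each spike touches at most `max_row_length` synapses instead of `num_post`. Under these assumptions the memory advantage disappears once the maximum row length approaches half the number of postsynaptic neurons, because every slot also stores an index.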

The team trained SNN classifiers using both the e-prop supervised learning rule and the DEEP R rewiring mechanism, showcasing the versatility of the new system. They also achieved faster-than-real-time simulations of topographic map formation, providing insight into how network connectivity evolves and how the framework’s performance scales. The framework supports a range of structural plasticity rules by giving them access to synaptic weights, pre- and postsynaptic variables, and random number generation through ‘custom connectivity updates’ within GeNN. The ‘ragged matrix’ structure enables efficient parallel updates to connectivity, adding or removing synapses without reallocating memory, a crucial optimization for large-scale networks. This work establishes a foundation for further research into sparsity in neural networks and opens new avenues for exploring its benefits in diverse applications.
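DEEP R keeps the number of active connections fixed: each potential connection has a fixed sign and a parameter θ, its weight is sign × θ while θ is positive, connections whose θ falls below zero become dormant, and the same number of randomly chosen dormant connections are reactivated. The following is a simplified, dictionary-based sketch of one such rewiring step, not the paper's GeNN implementation. The gradient is supplied by the caller (in the classifier experiments it would come from e-prop), and the hyperparameter names and the initial θ given to reactivated connections are assumptions.

```python
import math
import random


def deep_r_step(theta, sign, grad_w, num_pre, num_post, lr=0.01, l1=1e-4,
                temperature=0.0, init_theta=1e-3, rng=random):
    """One simplified DEEP R step. Mutates `theta` in place; returns pruned connections.

    theta:  dict (pre, post) -> theta > 0 for every *active* connection
    sign:   dict (pre, post) -> fixed sign (+1 or -1) for every potential connection
    grad_w: dict (pre, post) -> dE/dw for active connections (missing means 0)
    """
    noise_scale = math.sqrt(2.0 * lr * temperature)
    for syn in list(theta):
        # w = sign * theta, so dE/dtheta = sign * dE/dw
        grad_theta = sign[syn] * grad_w.get(syn, 0.0)
        theta[syn] += -lr * grad_theta - lr * l1 + noise_scale * rng.gauss(0.0, 1.0)

    # Connections whose theta crossed zero become dormant
    removed = [syn for syn, value in theta.items() if value < 0.0]
    for syn in removed:
        del theta[syn]

    # Reactivate the same number of randomly chosen dormant connections
    dormant = [(i, j) for i in range(num_pre) for j in range(num_post)
               if (i, j) not in theta]
    for syn in rng.sample(dormant, len(removed)):
        theta[syn] = init_theta
    return removed


def weights(theta, sign):
    """Effective weights of the active connections."""
    return {syn: sign[syn] * value for syn, value in theta.items()}


if __name__ == "__main__":
    rng = random.Random(0)
    sign = {(i, j): (1 if (i + j) % 2 == 0 else -1) for i in range(3) for j in range(3)}
    theta = {(0, 0): 0.5, (1, 1): 0.001, (2, 0): 0.3}
    # A large positive gradient on the weak connection (1, 1) drives its theta below zero
    removed = deep_r_step(theta, sign, {(1, 1): 1.0}, num_pre=3, num_post=3, rng=rng)
    print(removed, len(theta))  # [(1, 1)] 3
```

Because a pruned connection is always matched by a reactivated one, the connection count, and therefore the memory a ragged matrix must reserve, stays constant throughout training.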

Sparse Networks Learn Faster With Rewiring

This research presents a new computational framework for simulating spiking neural networks with structural plasticity, mirroring the way biological brains dynamically change their connections. The team demonstrated that it can train sparse networks up to ten times faster than traditional dense networks while maintaining comparable performance. This was achieved by combining efficient GPU acceleration with a rewiring process that prunes connections and creates new ones during learning. The framework was tested on supervised classification and unsupervised topographic map formation.

In topographic map simulations, the system formed spatial representations of its input faster than real time, providing insight into how network connectivity evolves during learning. Future work will explore the benefits of sparsity in a wider range of applications and develop methods to maintain sparsity throughout the learning process, ultimately contributing to more efficient and biologically realistic neural networks. This research paves the way for advances in sparse network design and training.
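The summary does not spell out the rewiring rule used for topographic map formation. A frequently used style of rule, assumed here purely for illustration, forms synapses with a probability that decays with the distance between pre- and postsynaptic neurons and prunes weak synapses more readily than strong ones. The functions, parameter names and default values below are all hypothetical.

```python
import math
import random


def formation_probability(pre_pos, post_pos, p_form_max, sigma):
    """Gaussian fall-off of formation probability with distance."""
    d2 = (pre_pos[0] - post_pos[0]) ** 2 + (pre_pos[1] - post_pos[1]) ** 2
    return p_form_max * math.exp(-d2 / (2.0 * sigma ** 2))


def rewire_step(post_pos, synapses, pre_positions, rng, p_form_max=0.2, sigma=2.5,
                p_elim_weak=0.05, p_elim_strong=0.001, w_threshold=0.5, w_init=0.5):
    """One elimination attempt and one formation attempt for a single postsynaptic neuron.

    synapses: dict presynaptic index -> weight, modified in place
    pre_positions: list of (x, y) positions of all presynaptic neurons
    """
    # Elimination: pick an existing synapse; weak ones are more likely to be pruned
    if synapses:
        pre = rng.choice(sorted(synapses))
        p_elim = p_elim_weak if synapses[pre] < w_threshold else p_elim_strong
        if rng.random() < p_elim:
            del synapses[pre]

    # Formation: pick a random presynaptic neuron; nearby ones are more likely to connect
    pre = rng.randrange(len(pre_positions))
    if pre not in synapses:
        p_form = formation_probability(pre_positions[pre], post_pos, p_form_max, sigma)
        if rng.random() < p_form:
            synapses[pre] = w_init


if __name__ == "__main__":
    rng = random.Random(42)
    pre_positions = [(0.0, 0.0), (100.0, 100.0)]  # one nearby, one far away
    synapses = {1: 0.1}                           # start with a weak, distant synapse
    for _ in range(50):
        rewire_step((0.0, 0.0), synapses, pre_positions, rng,
                    p_form_max=1.0, p_elim_weak=1.0, p_elim_strong=0.0)
    print(synapses)
```

With these deliberately extreme probabilities, the weak distant synapse is pruned immediately and replaced by a connection from the nearby neuron. In a full model, activity-dependent plasticity would strengthen some of the newly formed synapses and protect them from elimination, so that a topographic map can emerge over many steps.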

👉 More information

🗞 A flexible framework for structural plasticity in GPU-accelerated sparse spiking neural networks

🧠 ArXiv: https://arxiv.org/abs/2510.19764