Reducing banded matrices to bidiagonal form is a critical step in many scientific and artificial intelligence computations, yet doing it efficiently has long been a challenge. Evelyne Ringoot, Rabab Alomairy, and Alan Edelman of the Massachusetts Institute of Technology present a new algorithm that overcomes previous limitations by running this reduction on modern Graphics Processing Units (GPUs). GPUs were previously considered unsuitable for the task because of memory bandwidth constraints, but this research shows that they can now accelerate it significantly, outperforming established CPU-based libraries such as PLASMA and SLATE, particularly for large matrices. By optimizing carefully for the GPU architecture and using high-level array abstractions, the team achieves speedups of more than 100× for 32k × 32k matrices. Importantly, the algorithm's performance scales linearly with matrix bandwidth, opening the way to faster computation on larger problems.
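For readers new to the terminology: an upper-banded matrix with bandwidth `b` has nonzeros only on its main diagonal and the `b` diagonals above it, and an upper-bidiagonal matrix is the special case `b = 1`. The small NumPy helpers below check these shapes; they assume the upper-banded convention, and their names are hypothetical illustrations, not part of NextLA.

```python
import numpy as np

def is_upper_banded(A, b):
    """True if every nonzero A[i, j] satisfies 0 <= j - i <= b."""
    A = np.asarray(A)
    below_diagonal = np.tril(A, -1)  # entries with j - i < 0
    above_band = np.triu(A, b + 1)   # entries with j - i > b
    return bool(not below_diagonal.any() and not above_band.any())

def is_upper_bidiagonal(A):
    """Upper-bidiagonal is the special case b = 1: diagonal plus one superdiagonal."""
    return is_upper_banded(A, 1)

def random_upper_banded(n, b, seed=0):
    """Random n x n test matrix with upper bandwidth b (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    return np.triu(np.tril(rng.standard_normal((n, n)), b))

A = random_upper_banded(8, 3)
print(is_upper_banded(A, 3), is_upper_bidiagonal(A))  # prints: True False
```

Reducing `A` to bidiagonal form means applying orthogonal transformations until `is_upper_bidiagonal` holds while the singular values stay unchanged.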

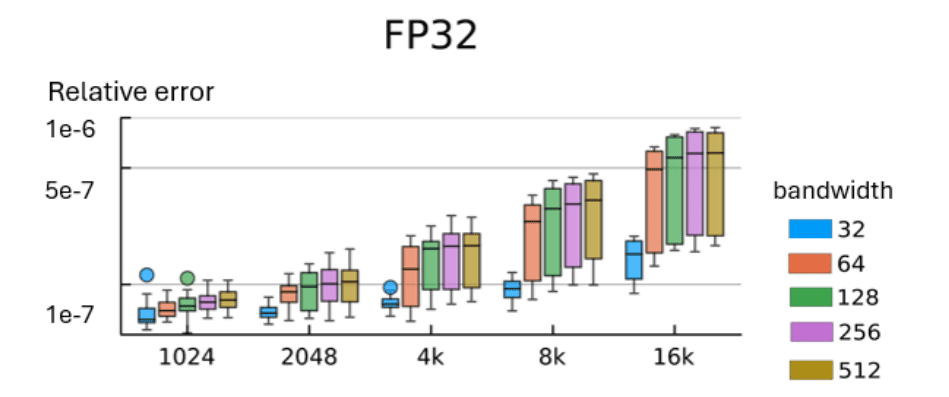

The research focuses on optimizing fundamental linear algebra operations and on portable code that runs efficiently on diverse hardware platforms, including those from NVIDIA, AMD, Intel, and Apple. It draws on software frameworks and libraries such as Julia, ArrayFire, and NextLA to support GPU-accelerated computation, and the researchers are also exploring emerging accelerators, including Intel Ponte Vecchio and AMD Instinct MI300X, to push performance further.

Recognizing that previous limitations stemmed from memory bandwidth constraints, the team exploited recent advances in GPU architecture, specifically the larger L1 memory per Streaming Multiprocessor, to overcome these hurdles. The reduction is carried out by bulge chasing: each orthogonal transformation that removes an entry from the band creates a "bulge" of fill-in just outside it, and further transformations push that bulge down the band until it leaves the matrix. The team engineered a highly parallel implementation of this bulge-chasing algorithm, adapting established cache-efficient CPU techniques for high GPU throughput. Using Julia's array abstractions and the KernelAbstractions package, they wrote a single, hardware-agnostic function that runs on NVIDIA, AMD, Intel, and Apple Metal GPUs. Experiments show significant gains over the established CPU-based libraries PLASMA and SLATE for matrices as small as 1024×1024 and a more than 100-fold speedup for 32k × 32k matrices, paving the way for orders-of-magnitude faster banded matrix reduction.
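To make the bulge-chasing idea concrete, here is a minimal sequential sketch in Python with NumPy. It is not the authors' GPU algorithm: it uses the textbook Givens-rotation form of bulge chasing on a dense copy of the matrix, whereas the paper describes a parallel, cache-aware GPU implementation, and all function names (`givens`, `rotate_cols`, `rotate_rows`, `band_to_bidiagonal`) are hypothetical. It does show the dependency pattern such an implementation has to parallelize: eliminating one entry of row `i` creates a bulge that must be chased down the band before the matrix is banded again.

```python
import numpy as np

def givens(x, y):
    """Return (c, s) with c*x + s*y = hypot(x, y) and -s*x + c*y = 0."""
    r = np.hypot(x, y)
    if r == 0.0:
        return 1.0, 0.0
    return x / r, y / r

def rotate_cols(A, p, q, c, s):
    """Right-multiply columns p, q of A by the orthogonal matrix [[c, -s], [s, c]]."""
    ap, aq = A[:, p].copy(), A[:, q].copy()
    A[:, p] = c * ap + s * aq
    A[:, q] = -s * ap + c * aq

def rotate_rows(A, p, q, c, s):
    """Left-multiply rows p, q of A by the orthogonal matrix [[c, s], [-s, c]]."""
    ap, aq = A[p, :].copy(), A[q, :].copy()
    A[p, :] = c * ap + s * aq
    A[q, :] = -s * ap + c * aq

def band_to_bidiagonal(A, b):
    """Reduce an upper-banded matrix with bandwidth b to upper-bidiagonal form.

    Sequential Givens-rotation bulge chasing on a dense copy (hypothetical sketch);
    singular values are preserved because every rotation is orthogonal.
    """
    A = np.array(A, dtype=np.float64)
    n = A.shape[0]
    for i in range(n - 2):
        # Sweep row i from the outermost band entry inward.
        for j in range(min(i + b, n - 1), i + 1, -1):
            # Annihilate A[i, j] by rotating columns j-1 and j ...
            c, s = givens(A[i, j - 1], A[i, j])
            rotate_cols(A, j - 1, j, c, s)
            A[i, j] = 0.0
            k = j  # ... which creates a bulge below the diagonal at (k, k-1).
            while True:
                # Remove the sub-diagonal bulge with a rotation of rows k-1 and k.
                c, s = givens(A[k - 1, k - 1], A[k, k - 1])
                rotate_rows(A, k - 1, k, c, s)
                A[k, k - 1] = 0.0
                if k + b >= n:
                    break  # the next bulge would lie outside the matrix
                # That rotation created a bulge above the band at (k-1, k+b);
                # remove it with a rotation of columns k+b-1 and k+b.
                c, s = givens(A[k - 1, k + b - 1], A[k - 1, k + b])
                rotate_cols(A, k + b - 1, k + b, c, s)
                A[k - 1, k + b] = 0.0
                k += b  # the new bulge sits at (k, k-1) again, b rows further down
    return A

rng = np.random.default_rng(0)
n, b = 12, 3
A = np.triu(np.tril(rng.standard_normal((n, n)), b))  # random upper-banded test matrix
B = band_to_bidiagonal(A, b)
print(np.allclose(np.linalg.svd(A, compute_uv=False),
                  np.linalg.svd(B, compute_uv=False)))
```

Because every rotation is orthogonal, the singular values of `B` match those of `A`, which is why this reduction is a valid step toward the SVD. Each chase only involves a window of about `b` rows and `b` columns at a time (the full-row and full-column updates here are a simplification for readability), which hints at why keeping that window in fast on-chip memory matters; the sketch does not show how NextLA actually schedules or tiles this work.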

GPU Accelerates Bidiagonal Matrix Reduction

Scientists have achieved a breakthrough in reducing banded matrices to bidiagonal form, a crucial step in the Singular Value Decomposition (SVD) used extensively in scientific computing and artificial intelligence. The researchers developed the first GPU-based algorithm for this task, adapting existing CPU-based techniques to maximize GPU throughput. The implementation, part of the NextLA software package, uses Julia's array abstractions and KernelAbstractions to remain portable across GPU vendors (NVIDIA, AMD, Intel, and Apple) and across data precisions (half, single, and double). In experiments, it outperforms the multithreaded CPU libraries PLASMA and SLATE starting at a matrix size of 1024×1024 and achieves speedups exceeding 100× for 32k × 32k matrices. Performance also scales linearly with matrix bandwidth, enabling faster reduction of larger matrices, and optimal performance hinges on efficient use of the L1 and L2 caches.
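The role of bandwidth and of the L1/L2 caches can be made a little more tangible with a back-of-the-envelope calculation. The sketch below counts the bytes occupied by just the band (the main diagonal plus `b` superdiagonals) of an `n × n` matrix in the half, single, and double precisions the article mentions. It is an assumption-laden estimate: it ignores the workspace that bulge chasing needs and any padding or tiling NextLA may use, and the helper names are hypothetical.

```python
# Bytes per element for the three precisions mentioned in the article.
BYTES_PER_ELEMENT = {"half": 2, "single": 4, "double": 8}

def band_entries(n, b):
    """Entries on the main diagonal and the b superdiagonals of an n x n matrix."""
    b = min(b, n - 1)
    # Diagonal d (0 <= d <= b) holds n - d entries; the sum is (b+1)n - b(b+1)/2.
    return (b + 1) * n - b * (b + 1) // 2

def band_bytes(n, b, precision):
    """Compact band storage only; ignores bulge workspace, padding, and tiling."""
    return band_entries(n, b) * BYTES_PER_ELEMENT[precision]

n = 32 * 1024  # a 32k x 32k matrix
for b in (32, 64, 128, 256):
    sizes = {p: band_bytes(n, b, p) / 2**20 for p in BYTES_PER_ELEMENT}
    print(f"b={b:4d}: " + ", ".join(f"{p} {mib:7.1f} MiB" for p, mib in sizes.items()))
```

For fixed `n` the footprint grows roughly linearly with `b`, and halving the precision halves it. Comparing such numbers with the L1 and L2 capacities in a GPU vendor's specifications gives a rough sense of how much of the band can stay on chip at once.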

👉 More information

🗞 A GPU-resident Memory-Aware Algorithm for Accelerating Bidiagonalization of Banded Matrices

🧠 ArXiv: https://arxiv.org/abs/2510.12705