The new flagship model, GPT-4o, can process text, audio, and images in real time, improving human-computer interaction. It responds to audio inputs in as little as 232 milliseconds (320 milliseconds on average), matches GPT-4 Turbo on English text and code, and improves significantly on non-English text. It is also faster and 50% cheaper in the API. GPT-4o is notably better at vision and audio understanding than existing models. The model is trained end-to-end across text, vision, and audio, so all inputs and outputs are processed by the same neural network. It is the latest step in pushing deep learning toward practical usability.

Introduction to GPT-4o: A Multimodal AI Model

OpenAI has announced the launch of its new flagship model, GPT-4o. The “o” stands for “omni,” indicating the model’s ability to process and generate outputs across multiple modalities, including text, audio, and images. This represents a significant step towards more natural human-computer interaction. The model can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is comparable to human response time in a conversation.

GPT-4o matches the performance of GPT-4 Turbo on English text and code, and shows significant improvement on non-English text. It is also faster and 50% cheaper in the API. The model demonstrates superior capabilities in vision and audio understanding compared to existing models.

GPT-4o: A Single Model for Text, Vision, and Audio

Before GPT-4o, Voice Mode in ChatGPT relied on a pipeline of three separate models: one transcribed audio to text, GPT-3.5 or GPT-4 took that text and generated a text reply, and a third converted the reply back to audio. Much information was lost along the way: the language model could not directly observe tone, multiple speakers, or background noise, and it could not output laughter, singing, or emotional expression.
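To make the information loss concrete, here is a minimal sketch of a three-stage pipeline of the kind described above. Every name in it (`AudioClip`, `transcribe`, `generate_reply`, `synthesize`, `voice_mode_pipeline`) is hypothetical and stands in for a real model; none of this is OpenAI's actual implementation. The point is only that plain text is the sole thing passed between stages, so non-verbal cues never reach the language model.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Toy stand-in for recorded speech: the words plus non-verbal cues."""
    words: str
    tone: str        # e.g. "sarcastic", "cheerful"
    speakers: int    # number of distinct voices in the clip
    background: str  # e.g. "street noise"


def transcribe(clip: AudioClip) -> str:
    """Stage 1 (hypothetical): speech-to-text keeps only the words."""
    return clip.words


def generate_reply(text: str) -> str:
    """Stage 2 (hypothetical): a text-only model sees nothing but the transcript."""
    return f"You said: {text}"


def synthesize(text: str) -> AudioClip:
    """Stage 3 (hypothetical): text-to-speech reads the reply in a fixed, neutral voice."""
    return AudioClip(words=text, tone="neutral", speakers=1, background="none")


def voice_mode_pipeline(clip: AudioClip) -> AudioClip:
    """Chain the three stages; only plain text crosses each boundary."""
    return synthesize(generate_reply(transcribe(clip)))


if __name__ == "__main__":
    question = AudioClip(
        words="great, another meeting",
        tone="sarcastic",
        speakers=2,
        background="street noise",
    )
    # Tone, speaker count, and background were dropped at stage 1,
    # so the reply cannot reflect them.
    print(voice_mode_pipeline(question))
```

In this toy version the sarcasm in the input is invisible to `generate_reply`, and `synthesize` can only produce a neutral voice, which mirrors the limitations listed above.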

GPT-4o, however, is trained end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network. This is the first model from OpenAI that combines all these modalities, and the exploration of its capabilities and limitations is still in the early stages.

Evaluating GPT-4o’s Performance

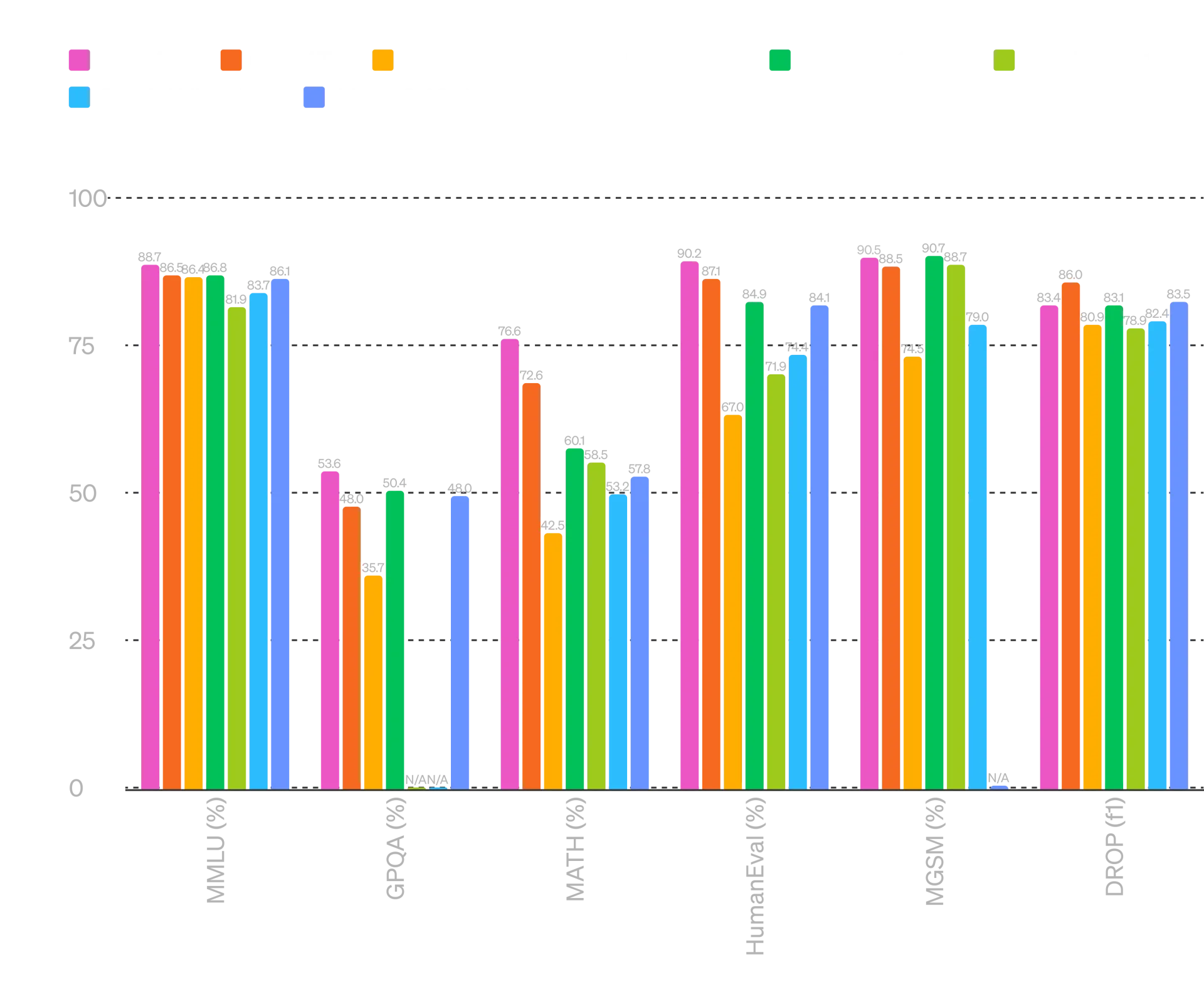

GPT-4o has been evaluated on traditional benchmarks and achieves GPT-4 Turbo-level performance on text, reasoning, and coding intelligence, while setting new high-water marks on multilingual, audio, and vision capabilities.

For text, GPT-4o sets a new high score of 88.7% on 0-shot CoT MMLU (general knowledge questions). It also dramatically improves speech recognition over Whisper-v3 across all languages, particularly lower-resourced ones. GPT-4o sets a new state of the art on speech translation, outperforms Whisper-v3 on the MLS benchmark, and achieves state-of-the-art performance on visual perception benchmarks.

Safety and Limitations of GPT-4o

OpenAI has built safety into GPT-4o by design across modalities, through techniques such as filtering training data and refining the model’s behavior through post-training. New safety systems have been created to provide guardrails on voice outputs.

The model has been evaluated according to OpenAI’s Preparedness Framework and in line with the company’s voluntary commitments. Evaluations of cybersecurity, CBRN, persuasion, and model autonomy show that GPT-4o does not score above Medium risk in any of these categories.

GPT-4o has also undergone extensive external red teaming with 70+ external experts in domains such as social psychology, bias and fairness, and misinformation to identify risks that are introduced or amplified by the newly added modalities.

GPT-4o represents OpenAI’s latest effort in advancing deep learning, particularly in terms of practical usability. The model’s capabilities will be rolled out iteratively. GPT-4o’s text and image capabilities are starting to roll out today in ChatGPT. Developers can also now access GPT-4o in the API as a text and vision model. GPT-4o is 2x faster, half the price, and has 5x higher rate limits compared to GPT-4 Turbo. Support for GPT-4o’s new audio and video capabilities will be launched to a small group of trusted partners in the API in the coming weeks.
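As a rough illustration of what those ratios mean for a developer, the sketch below applies them to a GPT-4 Turbo baseline. The function name `gpt4o_relative` and the baseline numbers in the example are placeholders, not published prices, latencies, or limits, and "2x faster" is interpreted here as half the latency, which is an assumption.

```python
def gpt4o_relative(baseline_price: float,
                   baseline_latency: float,
                   baseline_rate_limit: float) -> dict:
    """Scale a GPT-4 Turbo baseline by the ratios quoted in the text.

    Assumptions: "half the price" -> price * 0.5,
    "2x faster" -> latency / 2, "5x higher rate limits" -> limit * 5.
    """
    return {
        "price": baseline_price * 0.5,
        "latency": baseline_latency / 2,
        "rate_limit": baseline_rate_limit * 5,
    }


if __name__ == "__main__":
    # Placeholder baseline values chosen only to make the arithmetic visible.
    print(gpt4o_relative(baseline_price=10.0,
                         baseline_latency=4.0,
                         baseline_rate_limit=100.0))
```

Substituting the baseline figures from a current pricing page and rate-limit tier gives the corresponding GPT-4o estimates under the same assumptions.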
