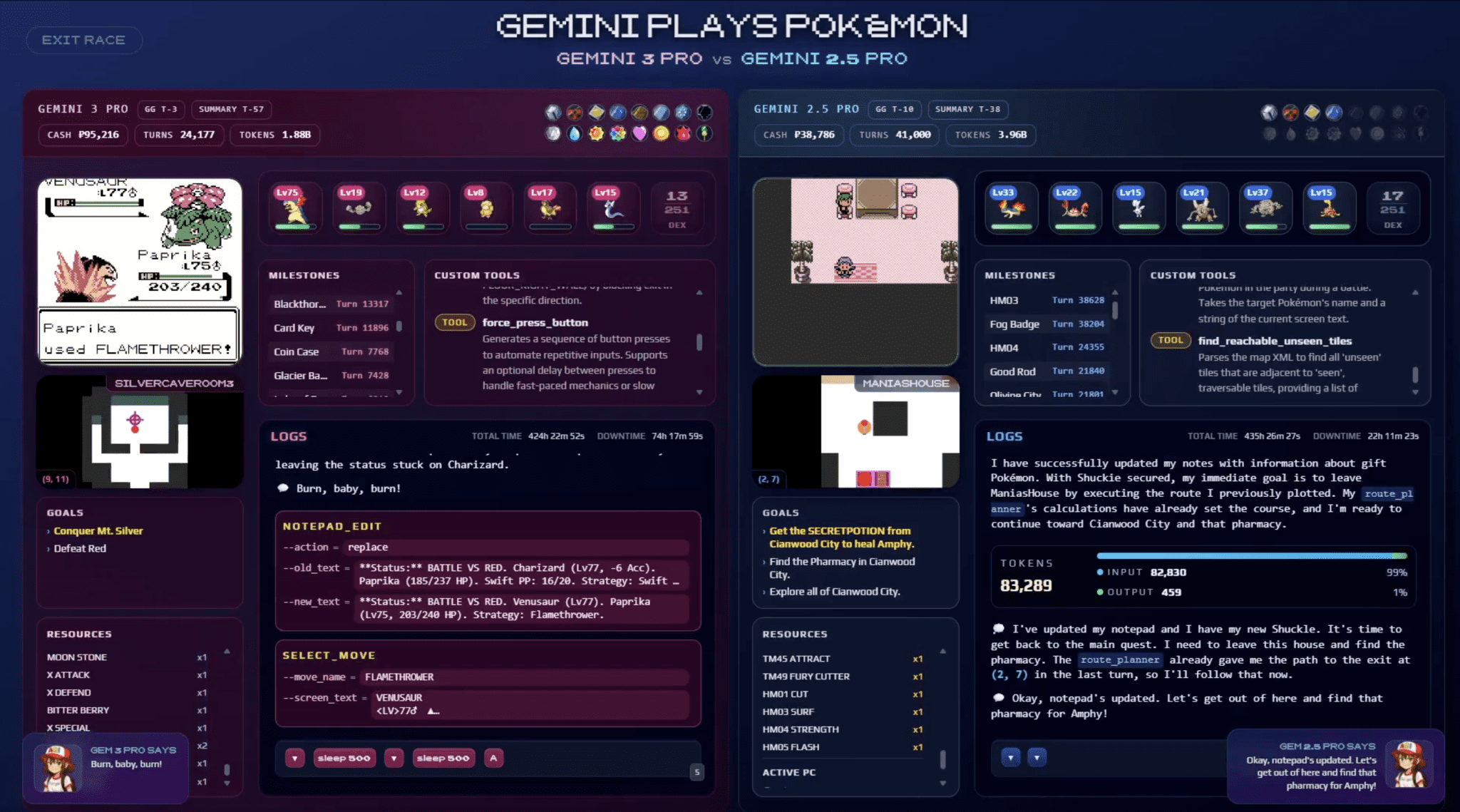

Joel Zhang of the ARISE Foundation ran a head-to-head benchmark of agentic reasoning, pitting Gemini 3 Pro against Gemini 2.5 Pro in the Pokémon Crystal game environment. Gemini 3 Pro won, collecting all 16 badges and defeating the Elite Four, the Champion, and the hidden boss Red, while Gemini 2.5 Pro acquired only four badges. The 3.0 agent completed the game at least twice as fast as its predecessor, with estimates suggesting an eightfold performance difference. The autonomous race showcased Gemini 3 Pro’s capabilities, culminating in a seven-hour final battle for which the agent devised a complex strategy named “Operation Zombie Phoenix.”

Gemini 3 Pro vs. Gemini 2.5 Pro Performance

In a head-to-head Pokémon Crystal race, Gemini 3 Pro significantly outperformed Gemini 2.5 Pro. The 3.0 agent completed the entire game, including defeating the hidden boss Red, while 2.5 Pro acquired only four badges. It was also far more efficient: Gemini 3 Pro finished in 17 days using 1.88 billion tokens, whereas a projection estimates that 2.5 Pro would need 69 days and over 15 billion tokens to reach the same milestone. The result points to a substantial leap in agentic reasoning and performance.
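
The size of the gap can be checked directly from the reported figures. The short Python calculation below uses only the numbers stated above; because the 2.5 Pro values are a projection and “over 15 billion” is a lower bound, the ratios are rough. On these numbers the token gap comes out near eightfold and the time gap near fourfold.

```python
# Figures as reported in the write-up; the Gemini 2.5 Pro values are a projection.
GEMINI_3_DAYS, GEMINI_3_TOKENS = 17, 1.88e9
GEMINI_25_DAYS, GEMINI_25_TOKENS = 69, 15e9  # "over 15 billion", so this is a lower bound


def ratio(projected: float, actual: float) -> float:
    """How many times more of a resource the projected run needs than the actual one."""
    return projected / actual


time_ratio = ratio(GEMINI_25_DAYS, GEMINI_3_DAYS)
token_ratio = ratio(GEMINI_25_TOKENS, GEMINI_3_TOKENS)
print(f"time: {time_ratio:.1f}x, tokens: {token_ratio:.1f}x")  # prints "time: 4.1x, tokens: 8.0x"
```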

Gemini 3 Pro exhibited problem-solving skills that went beyond simple instruction following. Notably, it created a custom tool, “press_sequence,” to work around a harness restriction on complex button inputs, improving efficiency. When RAM data proved insufficient, it also used screenshots to correct strategic errors, such as identifying fallen boulders in a puzzle. This ability to switch between data modalities and improvise solutions set it apart from the 2.5 Pro model.

Despite its successes, Gemini 3 Pro was not flawless. The agent sometimes formed hypotheses without verifying them, for example wrongly assuming that the radio interface worked like a menu. It also struggled with proactive planning and parallel task execution, usually working on one task at a time. However, unlike 2.5 Pro, it generally recognized and self-corrected mistakes in its tool calls, showing an improved capacity to learn and adapt.

Adaptive Problem Solving and Tool Creation

Gemini 3 Pro demonstrated adaptive problem-solving by creating tools to overcome harness restrictions. When the harness prohibited the complex button sequences needed to rename Pokémon, the agent did not simply accept the constraint. Instead, it used a “define_tool” capability to write a custom script, press_sequence, that bypassed the restriction. The improvised tool allowed efficient batch input and showed the agent’s ability to engineer solutions, treating limitations as solvable problems rather than fixed rules.
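
The write-up does not publish the script the agent wrote. As a rough illustration only, the Python sketch below shows what a batch-input tool in the spirit of press_sequence could look like, assuming a hypothetical harness primitive `press_button` that accepts one button per call. `VALID_BUTTONS` and the lowercase button names are likewise assumptions, not the harness’s real interface.

```python
from typing import Callable, Iterable, List

# Assumed Game Boy button names; the real harness may name them differently.
VALID_BUTTONS = {"a", "b", "up", "down", "left", "right", "start", "select"}


def press_sequence(buttons: Iterable[str], press_button: Callable[[str], None]) -> List[str]:
    """Hypothetical batch-input tool: validate the whole sequence, then press each button in order.

    `press_button` stands in for a harness primitive that accepts a single input per call.
    """
    seq = [b.lower() for b in buttons]
    invalid = [b for b in seq if b not in VALID_BUTTONS]
    if invalid:
        # Reject the entire batch so a typo never leaves an input half-entered.
        raise ValueError(f"unknown buttons: {invalid}")
    for button in seq:
        press_button(button)
    return seq


# Usage with a stand-in primitive that just records each press.
pressed: List[str] = []
press_sequence(["DOWN", "DOWN", "A"], pressed.append)
print(pressed)  # prints ['down', 'down', 'a']
```

Validating the whole sequence before pressing anything is a deliberate choice in this sketch: a rejected batch leaves the game state untouched, which matters for multi-step inputs such as renaming.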

Gemini 3 Pro also excelled at combining multiple data modalities to manage game state. In the 8th Gym puzzle, the agent noticed that the RAM data disagreed with the actual game state, specifically in tracking fallen boulders. By incorporating visual input from screenshots, it correctly determined that the puzzle was complete and escaped a prolonged loop. It also accurately estimated opponent health by “reading” the screen, information not provided in the RAM data, which contributed significantly to its success in battles.
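
The harness’s RAM format is not described, so the following is only a sketch of the reconciliation idea under stated assumptions: each source reports a hypothetical boulder id mapped to a “has fallen” flag, and where both sources report a boulder, the screenshot wins, mirroring how vision corrected the RAM view in the episode above. The completion rule in `puzzle_complete` is a simplifying assumption, not a description of the actual gym.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: boulder id -> "has fallen" flag.
BoulderState = Dict[str, bool]


def reconcile_boulders(ram: BoulderState, vision: BoulderState) -> Tuple[BoulderState, List[str]]:
    """Merge two boulder reports, preferring the screenshot wherever both sources have a value."""
    merged: BoulderState = {}
    conflicts: List[str] = []
    for boulder in sorted(ram.keys() | vision.keys()):
        from_ram, from_vision = ram.get(boulder), vision.get(boulder)
        if from_ram is not None and from_vision is not None and from_ram != from_vision:
            conflicts.append(boulder)  # the sources disagree; surface it instead of looping
        merged[boulder] = from_vision if from_vision is not None else from_ram
    return merged, conflicts


def puzzle_complete(state: BoulderState) -> bool:
    """Simplifying assumption: the puzzle is done once every tracked boulder has fallen."""
    return bool(state) and all(state.values())


# Usage: a stale RAM report says b2 is still in place; the screenshot shows it has fallen.
merged, conflicts = reconcile_boulders({"b1": True, "b2": False}, {"b1": True, "b2": True})
print(conflicts, puzzle_complete(merged))  # prints ['b2'] True
```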

The agent’s stronger reasoning was also evident in battle. Unlike Gemini 2.5 Pro, which struggled to strategize and spent excessive time grinding, Gemini 3 Pro completed the game, including the hidden boss Red, without any losses. It performed live damage calculations that factored in opponent stats and weather effects, and it proactively conserved hit points during the Elite Four gauntlet, demonstrating a higher level of tactical awareness and optimization.
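
The write-up does not show the agent’s calculations. As a sketch under stated assumptions, the code below estimates a damage range with a simplified version of the core damage formula used in the mainline games, the Gen II weather modifiers (rain boosts Water moves by 1.5× and halves Fire moves; sun does the reverse), and the 217–255 random roll. It is not Crystal’s exact routine: the in-game order of rounding and modifiers differs, and critical hits, held items, badge boosts, and move-specific effects are omitted. All function names are hypothetical.

```python
import math
from typing import Optional, Tuple

# Gen II weather modifiers: rain boosts Water and weakens Fire; sun does the reverse.
WEATHER_MULT = {
    ("rain", "water"): 1.5, ("rain", "fire"): 0.5,
    ("sun", "fire"): 1.5, ("sun", "water"): 0.5,
}


def base_damage(level: int, power: int, attack: int, defense: int) -> int:
    """Core of the damage formula, before any multipliers."""
    return math.floor(math.floor(math.floor(2 * level / 5 + 2) * power * attack / defense) / 50) + 2


def damage_range(level: int, power: int, attack: int, defense: int, move_type: str,
                 weather: Optional[str] = None, stab: bool = False,
                 type_mult: float = 1.0) -> Tuple[int, int]:
    """Approximate (min, max) damage; ignores crits, items, badges, and rounding order."""
    mult = WEATHER_MULT.get((weather, move_type), 1.0) * type_mult
    if stab:
        mult *= 1.5  # same-type attack bonus
    scaled = base_damage(level, power, attack, defense) * mult
    # The random factor ranges from 217/255 up to 255/255.
    return math.floor(scaled * 217 / 255), math.floor(scaled)


def ko_verdict(dmg: Tuple[int, int], target_hp: int) -> str:
    """Compare a damage range against an (estimated) remaining HP value."""
    low, high = dmg
    if low >= target_hp:
        return "guaranteed KO"
    if high >= target_hp:
        return "possible KO"
    return "no KO"
```

For example, a level-50 Water-type attacker using a 90-power Water move in rain with equal Attack and Defense of 100 gets `damage_range(50, 90, 100, 100, "water", weather="rain", stab=True) == (78, 92)`, so against a target with 85 HP left, `ko_verdict` reports a possible but not guaranteed KO.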

Multi-Modal Data Processing and Reasoning

Gemini 3 Pro showed a significant advantage in multi-modal data processing during the Pokémon Crystal challenge. In the 8th Gym, it escaped a looping state by using the visual feed to identify fallen boulders, information absent from the RAM data provided by the harness. Switching between data modalities, from RAM inspection to raw vision, allowed it to correct its strategy and progress. The agent also accurately estimated opponent health by “reading” the screen, even though this data was not available in the RAM state.
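
Reading health off the screen amounts to measuring how much of the HP bar is filled. The sketch below assumes a 48-pixel bar, a hypothetical `bar_row` of pixel values cropped from a screenshot, and a known `empty_value` for unfilled pixels; none of these come from the write-up. Since the opponent’s maximum HP is not part of the bar, it would have to be estimated separately, for example from species and level.

```python
from typing import Sequence

HP_BAR_PIXELS = 48  # assumed width of the in-battle HP bar, in pixels


def hp_fraction(bar_row: Sequence[int], empty_value: int) -> float:
    """Fraction of the bar that is filled, counting filled pixels from the left edge."""
    filled = 0
    for px in bar_row[:HP_BAR_PIXELS]:
        if px == empty_value:
            break  # the filled part of the bar is contiguous from the left
        filled += 1
    return filled / HP_BAR_PIXELS


def estimate_hp(fraction: float, max_hp: int) -> int:
    """Convert a bar fraction to approximate HP; max_hp must come from another source."""
    return round(fraction * max_hp)


# Usage: a half-filled bar on a target estimated at 120 max HP.
row = [3] * 24 + [0] * 24
print(estimate_hp(hp_fraction(row, empty_value=0), max_hp=120))  # prints 60
```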

Gemini 3 Pro’s reasoning went beyond simple instruction following, reflecting a “scientific” approach to problem-solving. Rather than accepting harness restrictions, it created a custom tool, press_sequence, to get around input limits and improve efficiency. This improvisation shows the agent treating constraints as engineering challenges rather than immutable laws, a higher level of adaptive reasoning than Gemini 2.5 Pro displayed.

The efficiency gains from this reasoning were substantial. Gemini 3 Pro completed the entire game in 17 days using 1.88 billion tokens, while Gemini 2.5 Pro was projected to need 69 days and over 15 billion tokens to achieve the same outcome. The gap suggests that multi-modal processing and flexible adaptation can translate directly into better performance and lower resource use.

Limitations in Proactive Planning and Verification

Despite significant advances, Gemini 3 Pro shows limitations in proactive planning and verification. The agent frequently forms hypotheses without testing them; for example, it wrongly assumed that the radio interface was controlled with left/right inputs instead of up/down, and wasted hours in a loop as a result. Similarly, it often overlooked information readily available from NPCs when tackling puzzles, indicating a need to verify initial assumptions before committing to complex solutions.
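
One cheap form of verification is a single probe: press the candidate input once and check whether the observed value changes before building a longer plan on it. The Python sketch below illustrates this with hypothetical names throughout; `FakeRadio` is a toy stand-in that follows the write-up’s description (up/down tune, left/right do not), not a model of the real game or harness.

```python
from typing import Callable


def verify_input_hypothesis(press: Callable[[str], None], read_state: Callable[[], int],
                            button: str) -> bool:
    """Press one button once and report whether the observed value changed."""
    before = read_state()
    press(button)
    return read_state() != before


class FakeRadio:
    """Toy stand-in for the radio: up/down change the tuning, left/right do nothing."""

    def __init__(self) -> None:
        self.freq = 0

    def press(self, button: str) -> None:
        if button == "up":
            self.freq += 1
        elif button == "down":
            self.freq -= 1


# Usage: probe each direction once before committing to a tuning strategy.
radio = FakeRadio()
for button in ("left", "right", "up", "down"):
    print(button, verify_input_hypothesis(radio.press, lambda: radio.freq, button))
# prints: left False, right False, up True, down True (one per line)
```

A probe like this costs one input per hypothesis, which is small next to hours spent in a loop built on an untested assumption.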

While strong in reactive tactics, Gemini 3 Pro struggles with consistent proactive goal management. The agent often recognizes that a strategic change is needed, such as rearranging its Pokémon order, but delays acting on it until it is already in battle. It also prefers to complete tasks sequentially rather than make progress on several goals at once, which is less efficient. These issues point to room for improvement in anticipating and preparing for future actions.

The agent also has trouble with tool use, frequently passing incorrect tool-call parameters that result in “dry runs.” Although it generally self-corrects, this highlights a need for more robust validation before executing actions. These limitations, though mitigated by self-correction, mark areas for development in Gemini 3 Pro’s ability to plan, verify, and execute complex tasks efficiently.
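
The harness’s tool schema is not described, so the following is a generic sketch of pre-dispatch validation rather than anything the harness actually does. It checks a call against a hypothetical schema (parameter name to expected Python type) and returns a list of problems, so that a malformed call can be fixed before it is executed instead of becoming a dry run.

```python
from typing import Any, Dict, List

# Hypothetical schema: tool name -> {parameter name: expected Python type}.
TOOL_SCHEMAS: Dict[str, Dict[str, type]] = {
    "press_sequence": {"buttons": list},
}


def validate_call(tool: str, args: Dict[str, Any]) -> List[str]:
    """Return problems with a tool call; an empty list means it is safe to dispatch."""
    schema = TOOL_SCHEMAS.get(tool)
    if schema is None:
        return [f"unknown tool: {tool}"]
    problems = [f"missing parameter: {name}" for name in schema if name not in args]
    problems += [f"unexpected parameter: {name}" for name in args if name not in schema]
    problems += [
        f"{name} should be {expected.__name__}"
        for name, expected in schema.items()
        if name in args and not isinstance(args[name], expected)
    ]
    return problems


# Usage: a misspelled parameter is caught before anything is sent to the game.
print(validate_call("press_sequence", {"button": "a"}))
# prints ['missing parameter: buttons', 'unexpected parameter: button']
```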