Researchers at Rice University have identified a potential threat to the quality and diversity of artificial intelligence-generated data, which they term Model Autophagy Disorder, or “MAD.” The phenomenon occurs when AI models are trained on synthetic data without sufficient fresh real data, leading to a rapid deterioration of model outputs.

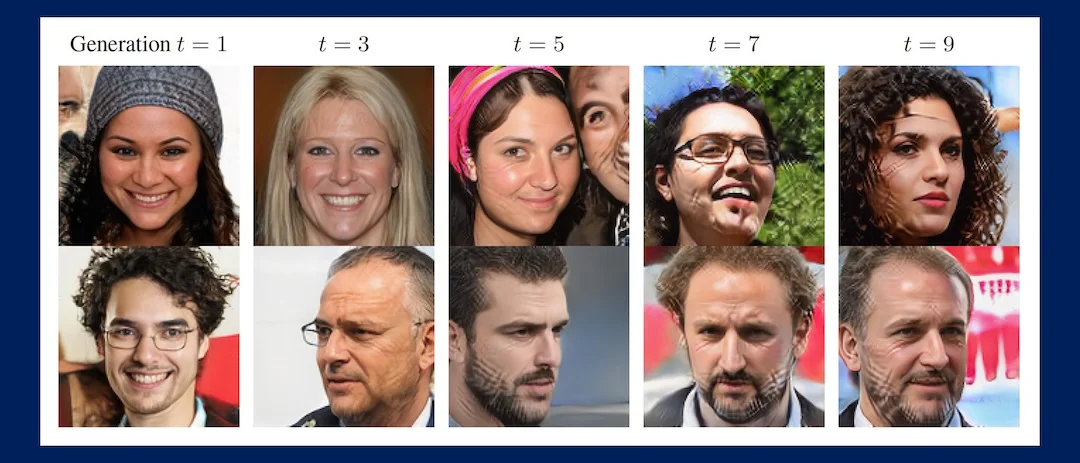

In simulations, datasets consisting of numerals 1 through 9 became increasingly warped and illegible over 20 model iterations, while human faces developed gridlike scars or came to look like the same person. The researchers introduced a sampling bias parameter to account for “cherry picking,” where users favor data quality over diversity. This preserved higher-quality data for more iterations, but at the expense of an even steeper decline in diversity.

The study’s lead author, Richard Baraniuk, warns that MADness could “poison the data quality and diversity of the entire internet if left uncontrolled.” The research was supported by the National Science Foundation, Office of Naval Research, the Air Force Office of Scientific Research, and the Department of Energy. Other key individuals involved in the study include Sina Alemohammad, Josue Casco-Rodriguez, Ahmed Imtiaz Humayun, Hossein Babaei, Lorenzo Luzi, Daniel LeJeune, and Ali Siahkoohi.

AI’s Dark Future: The Dangers of Self-Consuming Training Loops

In a chilling study, researchers at Rice University have uncovered the potential pitfalls of self-consuming training loops in generative models. These loops, where AI models are trained on their own output, can lead to a downward spiral of data quality and diversity, ultimately resulting in “MADness” – a scenario where future generative models are doomed to produce low-quality, homogeneous outputs.

The team, led by Dr. Richard Baraniuk, explored three variations of self-consuming training loops: fully synthetic, synthetic augmentation, and fresh data loops. Their simulations revealed that without sufficient fresh real data, the models would generate increasingly warped outputs lacking quality, diversity, or both.

In a fully synthetic loop, where successive generations of a model are fed a fully synthetic data diet, the researchers observed a rapid deterioration of model outputs. For instance, in a dataset consisting of numerals 1 through 9, all images became illegible by generation 20.
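The three loop types named above can be sketched with a toy stand-in for a generative model: a one-dimensional Gaussian that is "trained" by estimating a mean and standard deviation. This is a minimal illustration, not the study's experimental setup. The function names, and the reading of "synthetic augmentation" as a fixed real dataset plus synthetic samples and of "fresh data" as new real samples each generation plus synthetic samples, are assumptions made for the sketch.

```python
import random
import statistics


def fit(samples):
    """'Train' the toy model: estimate the mean and std of a 1-D Gaussian."""
    return statistics.fmean(samples), statistics.stdev(samples)


def generate(model, n, rng):
    """Draw n synthetic samples from the fitted Gaussian."""
    mu, sigma = model
    return [rng.gauss(mu, sigma) for _ in range(n)]


def run_loop(kind, generations=200, n=20, seed=0):
    """Return the model's std (a crude diversity measure) per generation.

    kind is one of "fully_synthetic", "synthetic_augmentation" or
    "fresh_data" (hypothetical labels chosen for this sketch).
    """
    rng = random.Random(seed)

    def sample_real():
        # Real data is assumed to come from a standard normal distribution.
        return [rng.gauss(0.0, 1.0) for _ in range(n)]

    fixed_real = sample_real()
    model = fit(fixed_real)  # generation 0 is trained on real data only
    history = [model[1]]
    for _ in range(generations):
        synthetic = generate(model, n, rng)
        if kind == "fully_synthetic":
            train = synthetic                  # synthetic data only
        elif kind == "synthetic_augmentation":
            train = fixed_real + synthetic     # same real data every time
        elif kind == "fresh_data":
            train = sample_real() + synthetic  # new real data every time
        else:
            raise ValueError(f"unknown loop kind: {kind!r}")
        model = fit(train)
        history.append(model[1])
    return history


if __name__ == "__main__":
    for kind in ("fully_synthetic", "synthetic_augmentation", "fresh_data"):
        spread = run_loop(kind)
        print(f"{kind}: initial std {spread[0]:.3f}, final std {spread[-1]:.3g}")
```

In this toy setting, the fully synthetic loop tends to lose spread over many generations because each refit compounds the previous generation's estimation error, while the loops that keep real data in the training set stay anchored to it. It says nothing about image quality, which a 1-D Gaussian cannot represent.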

Introducing a sampling bias parameter to account for “cherry picking” – the tendency of users to favor data quality over diversity – led to a prolonged preservation of higher-quality data across iterations. However, this came at the expense of an even steeper decline in diversity.
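One simple way to picture the cherry-picking trade-off is a fully synthetic loop on a toy 1-D Gaussian in which samples are drawn closer to the mode than the model's own spread would suggest. The sketch below is an assumption-laden illustration, not the study's parameterization: `lam` is a hypothetical bias knob in (0, 1] that scales the sampling standard deviation, with `lam = 1` meaning unbiased sampling.

```python
import random
import statistics


def biased_synthetic_loop(lam, generations=30, n=50, seed=0):
    """Fully synthetic loop on a 1-D Gaussian with cherry-picked samples.

    lam is a hypothetical sampling-bias knob in (0, 1]: lam = 1 samples the
    fitted model faithfully, smaller values draw samples nearer the mode.
    Returns the fitted std (a crude diversity measure) for each generation.
    """
    if not 0.0 < lam <= 1.0:
        raise ValueError("lam must be in (0, 1]")
    rng = random.Random(seed)
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]  # real data, used once
    spreads = []
    for _ in range(generations + 1):
        mu, sigma = statistics.fmean(data), statistics.stdev(data)
        spreads.append(sigma)
        # Next generation trains only on (biased) samples from this model.
        data = [rng.gauss(mu, lam * sigma) for _ in range(n)]
    return spreads


if __name__ == "__main__":
    for lam in (1.0, 0.8):
        spreads = biased_synthetic_loop(lam)
        print(f"lam={lam}: initial std {spreads[0]:.3f}, final std {spreads[-1]:.3g}")
```

Because every generation shrinks the sampling spread by roughly a factor of `lam`, diversity in this toy model falls off much faster when `lam < 1`. The sketch only captures the diversity side of the trade-off; it has no notion of sample quality.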

The implications are dire: if left uncontrolled, MAD could poison the data quality and diversity of the entire internet. Even in the near term, unintended consequences will arise from AI autophagy, leading to a loss of trust in AI-generated content.

To mitigate these risks, it is essential to ensure that generative models are trained on diverse, high-quality datasets with sufficient fresh real data. The study’s findings serve as a warning, urging developers and users to be cautious when deploying self-consuming training loops in their AI systems.

As Dr. Baraniuk ominously puts it, “One doomsday scenario is that if left uncontrolled for many generations, MAD could poison the data quality and diversity of the entire internet.” It’s time to take action to prevent this dark future from unfolding.
