Researchers are tackling the challenge of ensuring safety in large language models (LLMs) by moving beyond traditional activation-based monitoring, which often lacks precision and adaptability. Shir Rozenfeld, Rahul Pankajakshan, Itay Zloczower, Eyal Lenga, Gilad Gressel, Yisroel Mirsky, and colleagues from Ben Gurion University of the Negev and Amrita Vishwa Vidyapeetham present a rule-based approach in their paper, GAVEL. The work is significant because it models activations as interpretable ‘cognitive elements’, allowing precise, customisable safeguards to be created without constant model retraining, a crucial step towards scalable and auditable governance of powerful AI systems. By framing safety as a set of definable rules, GAVEL promises greater transparency and control over LLM behaviour, and the team plans to release it as an open-source framework with an automated rule creation tool.

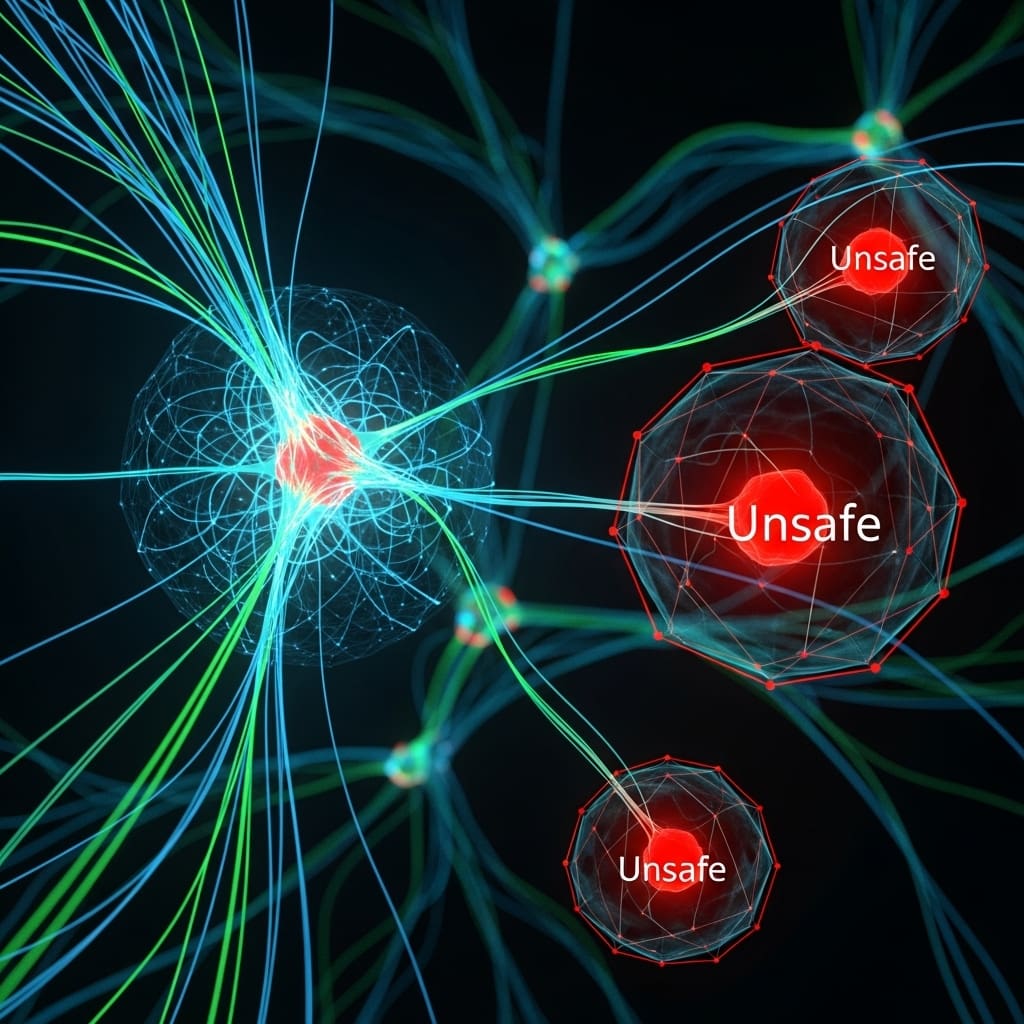

The team achieved this by modelling activations as ‘cognitive elements’ (CEs), fine-grained and interpretable factors like “making a threat” or “payment processing”, which can be combined to capture complex, domain-specific behaviours with greater accuracy than detectors trained on broad misuse datasets. Drawing inspiration from rule-sharing practices in cybersecurity, the researchers established a system for defining logical rules over CEs, so that nuanced constraints can be enforced without extensive data collection or model adjustments.

The work opens exciting possibilities for rapid deployment of configurable safety measures, building on shared knowledge within the AI community. The researchers propose that by decomposing LLM behaviours into independent elemental concepts, they can not only improve detection accuracy but also decouple activation monitoring from the specific model architecture. Furthermore, the study unveils an initial CE vocabulary and prototype misuse rulesets, providing a foundation for industry and academic collaboration, mirroring the successful threat intelligence sharing seen in cybersecurity. This commitment to open resources aims to catalyse progress and enable the community to build upon their work, fostering a more robust and transparent approach to AI governance. The research demonstrates that the framework can operate effectively alongside LLMs in real-time, offering a viable solution for practical deployment and continuous monitoring of model behaviour. Ultimately, this work represents a significant step towards building safer, more reliable, and auditable large language models for a wide range of applications.

GAVEL framework and cognitive element definitions

The researchers have developed a new paradigm for large language model (LLM) safety, shifting from detectors trained on traditional misuse datasets to rule-based activation monitoring. They introduce cognitive elements (CEs), interpretable factors like “making a threat” and “payment processing”, to model activations with greater precision. According to the team, these CEs provide a compositional basis for describing complex states, enabling more flexible and interpretable safety systems. Their experiments indicate that compositional, rule-based activation monitoring significantly improves precision in detecting harmful behaviours. The researchers also report that GAVEL lets practitioners configure and update safeguards rapidly, building on shared work from the community.

The study highlights the problem of false positives in existing systems: a detector trained on a hate speech dataset, for example, might incorrectly flag benign discussions about ethnic cultures. To combat this, the team focused on keeping false positive rates low, which is crucial for a safeguard to be viable in practice. The framework’s rule-based nature allows nuanced constraints to be expressed, such as preventing a model from engaging in (A OR (B AND C)) while still permitting C alone. This level of expressivity is particularly important for AI governance, where transparent rule sets are needed for policy definition and auditing. Inspired by rule-sharing in cybersecurity tools such as Snort and YARA, the researchers argue that AI safety can benefit from a similar collaborative paradigm. The result is a practical system that supports rapid, configurable deployment and is interpretable, because it identifies specifically what triggered an alarm.
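The constraint (A OR (B AND C)) mentioned above can be sketched as a small boolean rule evaluated over the set of cognitive elements currently detected. This is only an illustration, not GAVEL's actual rule syntax or API: the nested-tuple encoding, the `evaluate` and `fires` helpers, and the placeholder CE names `A`, `B`, `C` are all assumptions, and the sketch presumes some upstream detector has already decided which CEs are active.

```python
# Hypothetical rule encoding (NOT GAVEL's real format): a rule is either a
# CE name (str) or a tuple ("and" | "or" | "not", operand, ...).
RULE = ("or", "A", ("and", "B", "C"))


def evaluate(rule, active):
    """Return True if `rule` holds for the set of active CE names."""
    if isinstance(rule, str):
        # Leaf: the rule is satisfied when that CE is currently active.
        return rule in active
    op, *operands = rule
    if op == "and":
        return all(evaluate(r, active) for r in operands)
    if op == "or":
        return any(evaluate(r, active) for r in operands)
    if op == "not":
        (operand,) = operands  # "not" takes exactly one operand
        return not evaluate(operand, active)
    raise ValueError(f"unknown operator: {op!r}")


def fires(active_ces):
    """Check the example rule against an iterable of active CE names."""
    return evaluate(RULE, set(active_ces))


if __name__ == "__main__":
    for case in [{"C"}, {"B"}, {"B", "C"}, {"A"}]:
        print(sorted(case), "->", "alarm" if fires(case) else "allowed")
```

Under these assumptions, C alone (or B alone) does not trigger the rule, while A, or B together with C, does. That is the kind of distinction a single detector trained on a broad misuse dataset cannot express directly.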

GAVEL improves LLM behaviour detection and auditability

GAVEL models activations as cognitive elements, interpretable factors like ‘making a threat’ or ‘payment processing’, and combines these to pinpoint nuanced, domain-specific behaviours with greater accuracy. By defining behaviours through predicate rules over cognitive elements, GAVEL achieves higher precision and fewer false positives than methods trained on broad misuse datasets. Furthermore, the automated rule creation tool enables rapid adaptation to new safety benchmarks, as evidenced by strong performance on datasets covering phishing, political risk, and hate speech. The authors acknowledge that the framework’s performance relies on the careful definition of cognitive elements and rules, and that further refinement may be needed for complex scenarios. Future research will likely focus on expanding the library of cognitive elements and automating rule creation further, potentially incorporating machine learning techniques to optimise rule sets.

👉 More information

🗞 GAVEL: Towards rule-based safety through activation monitoring

🧠 ArXiv: https://arxiv.org/abs/2601.19768