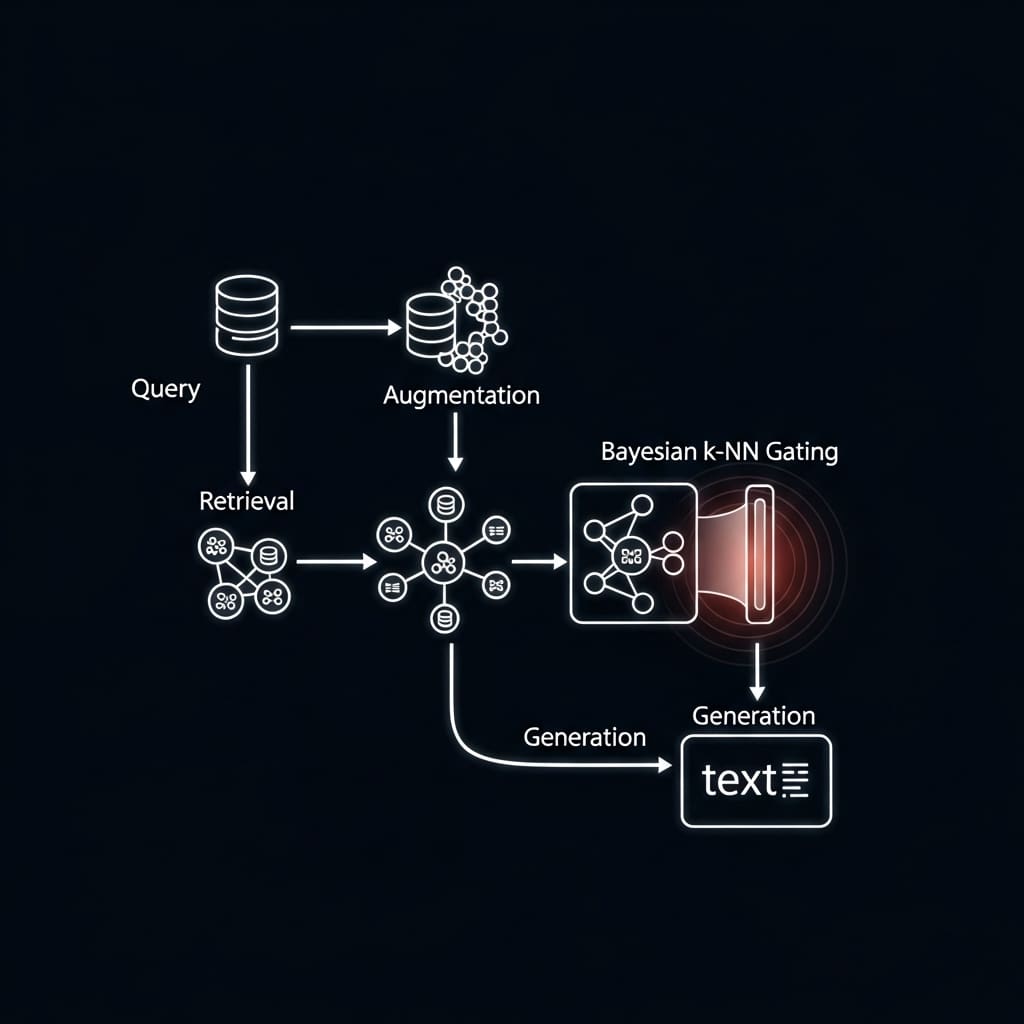

Retrieval-Augmented Generation (RAG) systems increasingly rely on blending pre-trained language models with external knowledge, but establishing the optimal balance between these sources remains a significant challenge. Researchers Gérard Biau and Claire Boyer, from Sorbonne Université and Université Paris-Saclay respectively, present a statistical framework that formalises this interplay. Their work introduces a k-nearest neighbour gating mechanism that quantifies the ‘trustworthiness’ of retrieved evidence and penalises reliance on unreliable information. By deriving the Bayes-optimal per-query gate and linking it to a discordance-based hallucination criterion, the research offers a principled foundation for building more factual and robust RAG systems, addressing a key limitation in current large language model deployments.

Adaptive Gating with Retrieval-Trust Weighting for RAG

Scientists have developed a statistical framework for retrieval-augmented generation (RAG) systems, formalising how language models should integrate their own predictions with external evidence. The research balances a frozen base language model, denoted q0(x), against a k-nearest neighbour retriever, r(k)(x), through a measurable gate, k(x), which controls the influence of each component for every query x. A key innovation is the ‘retrieval-trust weight’, wfact(x), which quantifies the geometric reliability of the retrieved neighbourhood and penalises reliance on retrieval where the evidence is deemed untrustworthy. This weight gives a data-driven route to adaptive gating, in contrast to the heuristic methods common in current RAG systems.
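To make the gating idea concrete, here is a minimal Python sketch. It assumes the gate acts as a convex combination of the base model's distribution and the retriever's distribution over a shared label set; that form, the function name `gated_mixture`, and the toy numbers are illustrative assumptions, not details confirmed by the paper.

```python
def gated_mixture(q0, r_k, gate):
    """Return (1 - gate) * q0 + gate * r_k, label by label.

    q0 and r_k are probability vectors over the same ordered label set;
    gate is a number in [0, 1] (0 = trust the base model, 1 = trust retrieval).
    The convex-combination form is an assumption of this sketch.
    """
    if not 0.0 <= gate <= 1.0:
        raise ValueError("gate must lie in [0, 1]")
    if len(q0) != len(r_k):
        raise ValueError("q0 and r_k must cover the same labels")
    return [(1.0 - gate) * q + gate * r for q, r in zip(q0, r_k)]


# Hypothetical three-label example.
base_prediction = [0.7, 0.2, 0.1]
retrieved_evidence = [0.1, 0.8, 0.1]
mixed = gated_mixture(base_prediction, retrieved_evidence, gate=0.25)
print(mixed)  # approximately [0.55, 0.35, 0.10]
```

With a gate of 0 the output is the base model's prediction unchanged, and with a gate of 1 it is the retrieved evidence alone; intermediate values interpolate between the two.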

The team derived the Bayes-optimal per-query gate and analysed its impact on a discordance-based hallucination criterion, a measure of disagreement between the language model’s predictions and the retrieved evidence. The analysis shows that the proposed gating rule controls hallucination by activating retrieval only when the local evidence appears reliable, linking retrieval geometry to factual accuracy. The study also shows that this discordance converges to a deterministic asymptotic limit, governed solely by the structural agreement or disagreement between the Bayes rule and the language model itself, which clarifies the fundamental limits of retrieval-based correction. To address the common issue of distribution mismatch between queries and the memory used for retrieval, the researchers introduced a hybrid geometric-semantic model that combines covariate deformation and label corruption.

This lets the framework cover the case where the data used to build the retrieval memory differs from the distribution of incoming queries. The approach targets the accuracy and reliability of RAG systems in real-world scenarios, where perfect alignment between query and memory distributions is rarely achievable. The work establishes a principled statistical foundation for factuality-oriented RAG systems, providing an analytically tractable proxy for understanding the statistical forces governing retrieval-based correction. The research does not aim to replicate the architectural details of complex modern RAG systems, but rather to clarify the underlying mechanisms and stimulate further theoretical work on the foundations of retrieval-augmented generation. By linking retrieval geometry, probabilistic gating, and factual reliability within a unified theoretical framework, the study opens new avenues for developing more robust and trustworthy language models.

Dynamic Gating for Factually Consistent Retrieval Augmentation

Scientists developed a statistical proxy framework to formalize the balance between language model (LM) predictions and retrieved evidence in Retrieval-Augmented Generation (RAG) systems. The framework combines a frozen base model, denoted q₀(x), with a k-nearest neighbor retrieval component, r(k)(x), governed by a measurable gate k(x) for each query x. This gate dynamically weights the influence of retrieved information, so that the system can prioritize reliable evidence and mitigate hallucinations. The researchers quantified the geometric reliability of the retrieved neighborhood with a ‘trust weight’, wfact(x), which penalizes reliance on retrieval in low-trust regions, thereby enhancing factual consistency.

The team derived the Bayes-optimal per-query gate and analysed its effect on a discordance-based hallucination criterion, a metric capturing disagreements between LM predictions and supporting evidence. This analysis revealed that the discordance admits a deterministic asymptotic limit, directly reflecting the structural alignment (or misalignment) between the Bayes rule and the LM itself. The study also employs a hybrid geometric-semantic model, combining covariate deformation and label corruption, to address distribution mismatch between queries and the memory used for retrieval. This lets the analysis extend to out-of-distribution data, a common challenge in real-world applications.
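As a rough illustration of what a memory affected by both covariate deformation and label corruption could look like, the Python sketch below warps the stored points with a user-supplied map and flips each label with a fixed probability. The function name `corrupt_memory`, the scaling map, and the uniform flipping rule are all hypothetical choices for this example; the article does not give the exact form of the paper's mismatch model.

```python
import random


def corrupt_memory(points, labels, label_set, deform, flip_prob, seed=0):
    """Return a mismatched copy of a retrieval memory.

    deform    : function applied to every stored point (covariate deformation).
    flip_prob : probability of replacing a label with a different one drawn
                uniformly at random (label corruption).
    Both mechanisms are illustrative assumptions, not the paper's model.
    """
    if len(label_set) < 2:
        raise ValueError("need at least two labels to corrupt")
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    new_points = [deform(p) for p in points]
    new_labels = []
    for y in labels:
        if rng.random() < flip_prob:
            new_labels.append(rng.choice([c for c in label_set if c != y]))
        else:
            new_labels.append(y)
    return new_points, new_labels


# Hypothetical memory: two points, stretched by 1.5 with 20% label noise.
points = [(1.0, 2.0), (0.0, -1.0)]
labels = ["a", "b"]
stretch = lambda p: tuple(1.5 * c for c in p)
shifted_points, noisy_labels = corrupt_memory(points, labels, ["a", "b"], stretch, 0.2)
print(shifted_points, noisy_labels)
```

Queries would still be drawn from the clean distribution, so retrieval against such a memory sees both geometric and semantic mismatch at once.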

The research builds on k-nearest neighbor search to identify relevant information in a large corpus, using the methodology of Biau and Devroye (2015) as a foundation. Specifically, the system outputs a weighted combination of the base model’s prediction and the retrieved evidence, with the weight set by the gate function k(x). This gate is not static; it adapts to each query, reflecting the confidence in the retrieved neighbors. The team showed that, in large-sample regimes, any non-vanishing improvement or deterioration in the hallucination score indicates a genuine structural relationship between the Bayes predictor and the LM, rather than statistical noise.
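The retrieval side can be sketched in a few lines of Python. The example below assumes the k-nearest neighbor retriever is the empirical label frequency among the k stored points closest to the query in Euclidean distance, which is the classical k-NN estimate; the function name, the toy memory, and the brute-force search are assumptions made for illustration.

```python
import math
from collections import Counter


def knn_label_distribution(query, memory, labels, k, label_set):
    """Empirical label frequencies among the k nearest stored points.

    memory    : list of points (tuples of floats) in the retrieval memory.
    labels    : label attached to each stored point.
    label_set : ordered list of all possible labels.
    Brute-force Euclidean search; fine for a toy example only.
    """
    if not 1 <= k <= len(memory):
        raise ValueError("k must be between 1 and the memory size")
    order = sorted(range(len(memory)), key=lambda i: math.dist(query, memory[i]))
    counts = Counter(labels[i] for i in order[:k])
    return [counts[y] / k for y in label_set]


# Hypothetical two-label memory with five stored points.
memory = [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0), (1.1, 1.0), (5.0, 5.0)]
labels = ["a", "a", "b", "b", "b"]
r_k = knn_label_distribution((0.05, 0.0), memory, labels, k=3, label_set=["a", "b"])
print(r_k)  # two of the three nearest neighbors carry label "a"
```

The resulting vector plays the role of the retrieved evidence that the gate then weighs against the base model's prediction.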

The study also draws on FActScore (Min et al., 2023) for a fine-grained assessment of factual precision. Through rigorous statistical analysis, the scientists established a principled statistical foundation for building factuality-oriented RAG systems, paving the way for more reliable and trustworthy natural language generation. The work builds upon previous advances in retrieval-augmented language models, such as those by Lewis et al. (2020) and Izacard et al. (2023), and extends them with a statistical framework.

Geometry-aware gating controls RAG hallucination effectively

Scientists developed a statistical proxy framework for Retrieval-Augmented Generation (RAG) systems, formalising how a language model should balance its own predictions with external evidence. The team defined a retrieval-trust weight, wfact(x), which quantifies the geometric reliability of the neighbourhood and penalises predictions in low-trust regions, discouraging retrieval when local evidence is unreliable. The result is an adaptive, geometry-aware gating rule that is amenable to statistical analysis and central to controlling hallucination. Hallucination control is a core theme of the work, achieved through a quantitative discordance criterion that captures disagreements between language model predictions and retrieved evidence.

The results show that the gating rule controls this discordance by activating retrieval only where the local evidence appears reliable, and that the gate and the trust weight jointly determine when retrieval improves factual reliability. To understand retrieval reliability at scale, the scientists analysed the asymptotic regime where both the memory size and the number of neighbours grow, establishing consistency for the k-NN retrieval estimator. These analyses yield limits for the local discordance signal governing hallucination variation, showing that disagreements between retrieval and the LM reflect structural differences rather than finite-sample noise.

The study further considered the effect of a mismatch between the query distribution and the retrieval memory, introducing a hybrid geometric-semantic model combining covariate deformation and label corruption. The team defined the retrieval-trust weight as

$$w_{\mathrm{fact}}(x) = \frac{1}{k} \sum_{i=1}^{k} \exp\left(-\lVert x - X_{(i)}(x) \rVert^{2}\right),$$

where $X_{(i)}(x)$ denotes the i-th nearest neighbour of the query x in the retrieval memory. This scalar measures the geometric fidelity between a query and its retrieved neighbours. When the neighbourhood is compact, wfact(x) approaches 1, indicating high trust in retrieval, while inconsistent or distant neighbours reduce wfact(x) towards zero.
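A small Python sketch can make the behaviour of the trust weight tangible. It reads the weight as the average, over the k retrieved neighbours, of the exponential of minus the squared Euclidean distance to the query; this reading, the function name, and the toy points are assumptions made for illustration.

```python
import math


def retrieval_trust_weight(query, neighbours):
    """Average of exp(-squared Euclidean distance) over the retrieved neighbours.

    Returns a value in (0, 1]: close to 1 when every neighbour sits near the
    query, close to 0 when the neighbours are far away.
    """
    if not neighbours:
        raise ValueError("at least one neighbour is required")
    total = sum(math.exp(-math.dist(query, z) ** 2) for z in neighbours)
    return total / len(neighbours)


query = (0.0, 0.0)
tight = [(0.01, 0.0), (0.0, 0.01), (-0.01, 0.0)]  # compact neighbourhood
loose = [(3.0, 4.0), (-4.0, 3.0), (5.0, 0.0)]     # distant neighbourhood
print(retrieval_trust_weight(query, tight))  # very close to 1
print(retrieval_trust_weight(query, loose))  # very close to 0
```

In a gated system, the first query would be a good candidate for leaning on retrieval, while the second would call for falling back on the base model.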

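The population-level loss balances predictive accuracy against trust-dependent regularisation:

$$\mathcal{L}(\lambda) = \mathbb{E}\Big[\sum_{y \in \mathcal{Y}} p(y \mid X)\,\big(-\log p_{\lambda}(y \mid X)\big)\Big] + \beta\,\mathbb{E}\Big[\lambda(X)\,\big(1 - w_{\mathrm{fact}}(X)\big)\Big].$$

Here $\lambda(x)$ stands for the per-query gate (written with a separate symbol to avoid confusion with the neighbour count k) and $p_{\lambda}$ for the resulting gated prediction. The first term is the expected cross-entropy, which measures predictive fit; the second is a penalty for using retrieval in regions with weak geometric support. The hyperparameter $\beta \geq 0$ controls the strength of this penalty, allowing for adaptive trade-offs between fluency and grounding. The work provides a principled statistical foundation for factuality-oriented RAG systems, clarifying the mechanisms governing retrieval-based correction and stimulating further theoretical work.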
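For readers who prefer code, the following Python sketch evaluates an empirical version of such a loss on a handful of queries: a cross-entropy term plus a penalty proportional to the gate times one minus the trust weight. The convex-combination form of the gated prediction, the names `gate`, `w_fact` and `beta`, and the toy sample are assumptions of this example rather than the paper's notation.

```python
import math


def empirical_loss(samples, beta):
    """Average of cross-entropy plus trust penalty over a list of queries.

    Each sample is a dict with:
      p_true : reference label distribution for the query
      q0     : base-model distribution
      r_k    : retrieved (k-NN) distribution
      gate   : weight in [0, 1] placed on retrieval
      w_fact : retrieval-trust weight in (0, 1]
    Raises ValueError if the mixture gives zero mass to a label with p_true > 0.
    """
    total = 0.0
    for s in samples:
        g = s["gate"]
        mix = [(1.0 - g) * q + g * r for q, r in zip(s["q0"], s["r_k"])]
        cross_entropy = -sum(p * math.log(m) for p, m in zip(s["p_true"], mix) if p > 0)
        penalty = beta * g * (1.0 - s["w_fact"])
        total += cross_entropy + penalty
    return total / len(samples)


# Hypothetical query: retrieval is accurate but geometrically weak (w_fact = 0.2).
sample = {"p_true": [1.0, 0.0], "q0": [0.5, 0.5], "r_k": [1.0, 0.0], "w_fact": 0.2}
ignore_retrieval = empirical_loss([dict(sample, gate=0.0)], beta=0.5)
trust_retrieval = empirical_loss([dict(sample, gate=1.0)], beta=0.5)
print(ignore_retrieval, trust_retrieval)  # about 0.693 versus 0.4
```

Raising `beta` makes the second option more expensive, so a sufficiently large penalty would tip the balance back towards the base model even when retrieval fits the reference well.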

👉 More information

🗞 A Note on k-NN Gating in RAG

🧠 ArXiv: https://arxiv.org/abs/2601.13744