The evolution of computing technology began with mechanical devices. Charles Babbage’s Analytical Engine, designed in the 1830s, was one such device; it was to be programmed and fed data with punched cards. Ada Lovelace’s work on programming further laid the groundwork for modern computing. By the 1930s, vacuum tubes had begun to replace mechanical components. The Atanasoff-Berry Computer (ABC) in the late 1930s exemplified this, marking a shift toward electronic computation.

During World War II, the demand for rapid processing led to significant advancements like the Colossus in Britain and the ENIAC in the United States, establishing electronic computing as a cornerstone of modern technology. The invention of the transistor at Bell Labs in 1947 revolutionized the field, replacing bulky vacuum tubes with smaller, faster, and more reliable components. This innovation enabled the first transistorized machines: in 1954, IBM demonstrated an experimental all-transistor version of its vacuum-tube IBM 604 calculator. The transistor also proved its practical advantages beyond computing, in products such as televisions and radios.

Companies like Intel drove Silicon Valley’s rise in the 1960s and 1970s, culminating in the introduction of the first commercially available microprocessor, the Intel 4004, in 1971. This breakthrough integrated an entire computer’s central processing unit onto a single chip, making computing far more accessible and sparking the digital age. These advancements have reshaped society, enabling unprecedented connectivity and computational capabilities that define our modern world.

Charles Babbage’s Analytical Engine

Charles Babbage, an English mathematician and engineer in the early 19th century, conceptualized the Analytical Engine, a mechanical general-purpose computer. His design was revolutionary for its time, featuring punch cards for programming and the ability to perform complex calculations. This marked the beginning of programmable computing devices.

The Analytical Engine’s design incorporated several key principles that would later become fundamental in computer science. It used a form of memory storage through its “store” mechanism and employed a “mill” for processing data, akin to modern CPUs. Babbage’s vision included conditional branching and loops, which are essential for algorithmic programming.
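
The division of labor described above can be illustrated with a small sketch. This is not Babbage’s design or notation: the instruction names (`add`, `sub`, `jump_if_pos`, `halt`) and the `run` function are hypothetical, chosen only to show how a store, a mill, a conditional branch, and a loop fit together.

```python
def run(program, store):
    """Execute a list of instruction tuples against a mutable store (a list of ints).

    Hypothetical toy machine: the list `store` plays the role of the "store",
    and the arithmetic inside this function plays the role of the "mill".
    """
    pc = 0  # index of the next instruction "card" to read
    while pc < len(program):
        op, *args = program[pc]
        if op == "add":            # store[dst] = store[a] + store[b]
            a, b, dst = args
            store[dst] = store[a] + store[b]
        elif op == "sub":          # store[dst] = store[a] - store[b]
            a, b, dst = args
            store[dst] = store[a] - store[b]
        elif op == "jump_if_pos":  # conditional branch: jump when store[a] > 0
            a, target = args
            if store[a] > 0:
                pc = target
                continue
        elif op == "halt":
            break
        else:
            raise ValueError(f"unknown operation: {op}")
        pc += 1
    return store


# Multiply 6 by 4 using repeated addition (a loop built from a conditional branch).
# Store layout: [0] accumulator, [1] multiplicand, [2] loop counter, [3] constant 1.
program = [
    ("add", 0, 1, 0),       # accumulator += multiplicand
    ("sub", 2, 3, 2),       # counter -= 1
    ("jump_if_pos", 2, 0),  # repeat while counter > 0
    ("halt",),
]
result = run(program, [0, 6, 4, 1])
print(result[0])  # prints 24
```

The loop exists only because of the conditional jump back to instruction 0, which is the sense in which branching and looping are tied together in the paragraph above.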

Ada Lovelace, an English mathematician, is recognized as the first computer programmer for her work on Babbage’s Analytical Engine. She wrote a detailed set of notes that included an algorithm intended to be processed by the machine. Her insights into the potential of computing machines were ahead of her time and laid the groundwork for future developments in software.

The transition from mechanical to electronic computers occurred in the 20th century, driven by advancements in electrical engineering and the need for faster computation. The development of vacuum tubes and later transistors enabled the creation of smaller, more efficient computers, leading to the rise of digital computing.

Silicon Valley emerged as a hub for technological innovation, particularly in the development of semiconductor devices. This region became synonymous with the digital age, fostering companies that revolutionized computing and information technology, building on the foundational work of pioneers like Babbage and Lovelace.

Ada Lovelace’s Algorithmic Contributions

Ada Lovelace, born Augusta Ada Byron in 1815, is celebrated as the first computer programmer for her work on Charles Babbage’s Analytical Engine. Her collaboration with Babbage led to the creation of an algorithm designed to be processed by a machine, marking a pivotal moment in computing history. Lovelace’s notes, published in 1843, included this algorithm and demonstrated its potential for complex calculations beyond mere arithmetic.
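
The algorithm in Lovelace’s notes computed Bernoulli numbers. The sketch below is not a transcription of her procedure; it uses a standard textbook recurrence, with a hypothetical function name `bernoulli`, simply to show the kind of calculation involved. It follows the convention in which the second Bernoulli number is -1/2.

```python
from fractions import Fraction
from math import comb


def bernoulli(n):
    """Return [B_0, ..., B_n] as exact fractions.

    Uses the recurrence B_m = -1/(m+1) * sum_{k<m} C(m+1, k) * B_k with B_0 = 1.
    This is a modern restatement, not the sequence of operations in the 1843 notes.
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


for index, value in enumerate(bernoulli(6)):
    print(index, value)
```

Exact fractions are used instead of floating point because each value feeds into every later one, so rounding errors would accumulate.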

Babbage’s Analytical Engine was a significant advancement over his earlier Difference Engine. It introduced features like loops and conditional branching, which are fundamental to modern programming. Lovelace’s work on the engine showcased her understanding of these concepts, as she developed an algorithm that could be executed mechanically. This innovation laid the groundwork for future developments in computer science.

Lovelace’s vision extended beyond the immediate capabilities of the Analytical Engine. She envisioned machines capable of handling tasks such as music composition, recognizing the broader potential of computing technology. Her insights were remarkably prescient, anticipating the versatility of computers that would emerge over a century later. This forward-thinking perspective underscores her role as a visionary in the field.

Although the Analytical Engine was never constructed during Lovelace’s lifetime, her algorithms and ideas influenced subsequent generations of computer scientists. Her work demonstrated the potential for machines to process information programmatically, a concept that became central to the development of modern computing. Lovelace’s contributions were not just theoretical; they provided practical blueprints for future innovations.

While some scholars debate the extent of Lovelace’s originality versus Babbage’s influence, most agree that her work was significant and innovative. Her ability to articulate complex ideas in a clear manner contributed to the broader understanding of computing possibilities. Lovelace’s legacy is a testament to her foresight and the enduring impact of her algorithmic contributions on the digital age.

Electro-mechanical Calculators Of The 19th Century

Charles Babbage, an English mathematician and engineer, designed the Analytical Engine in 1837, a precursor to modern computers. This machine was notable for its ability to perform complex calculations using punch cards, a concept that would later influence computer programming. Ada Lovelace, a collaborator of Babbage’s, is recognized as the first computer programmer for her work on algorithms designed for the Analytical Engine.

The Difference Engine, conceptualized by Babbage in 1822, aimed to automate mathematical tables. Despite its innovative design, the project faced funding issues and was never completed during Babbage’s lifetime. The engine’s principles laid the groundwork for future computing developments, emphasizing the potential of mechanical computation.
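
The Difference Engine was built around the method of finite differences, which tabulates a polynomial using additions only. The following is a minimal sketch of that method, not a model of the machine’s mechanism; the function names and the example polynomial x² + x + 41 are chosen here for illustration.

```python
def initial_differences(values):
    """Return [f(0), Δf(0), Δ²f(0), ...] computed from the first few table values."""
    diffs = []
    row = list(values)
    while row:
        diffs.append(row[0])
        # Each new row holds the differences between neighbours of the previous row.
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate(diffs, count):
    """Produce `count` successive table values using additions only."""
    state = list(diffs)
    table = []
    for _ in range(count):
        table.append(state[0])
        # Add each difference into the column to its left, lowest order first,
        # so every addition uses the neighbour's value from the previous step.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return table


# Seed the method with three hand-computed values of x^2 + x + 41 (x = 0, 1, 2).
seed = [41, 43, 47]
print(tabulate(initial_differences(seed), 6))  # prints [41, 43, 47, 53, 61, 71]
```

A polynomial of degree n needs n + 1 seed values, because its n-th differences are constant; after seeding, no multiplication is required, which is what made the approach suitable for a machine made of adding wheels.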

William Seward Burroughs revolutionized accounting with the adding machine he developed in 1885. This hand-operated mechanical device recorded its entries and totals on paper, enabling faster and more accurate calculations. Its success highlighted the growing demand for efficient computational tools in business and industry.

Herman Hollerith’s punch card system, introduced in 1890, transformed data processing by automating tabulation tasks. Used extensively in the U.S. Census, this method significantly reduced errors and processing time. Hollerith’s company evolved into IBM, underscoring the lasting impact of his contributions on modern computing.
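
Tabulation in this sense means reading one record per card and advancing a counter for each value found. The sketch below illustrates the idea in software; the card fields and sample records are invented for illustration and do not come from census data, and `tabulate_cards` is a hypothetical name.

```python
from collections import Counter


def tabulate_cards(cards, fields):
    """Count how often each value appears in each named field across all cards."""
    counters = {field: Counter() for field in fields}
    for card in cards:
        for field in fields:
            # One counter advances per field per card, as a dial would per punched hole.
            counters[field][card[field]] += 1
    return counters


# Invented sample records; each dict stands in for one punched card.
cards = [
    {"state": "Iowa", "occupation": "farmer"},
    {"state": "Iowa", "occupation": "teacher"},
    {"state": "Ohio", "occupation": "farmer"},
    {"state": "Iowa", "occupation": "farmer"},
]
totals = tabulate_cards(cards, ["state", "occupation"])
print(totals["state"]["Iowa"])         # prints 3
print(totals["occupation"]["farmer"])  # prints 3
```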

The legacy of these inventors (Babbage, Lovelace, Burroughs, and Hollerith) paved the way for the digital age. Their innovations spanned the transition from mechanical to electromechanical systems, setting the stage for the development of electronic computers in the 20th century.

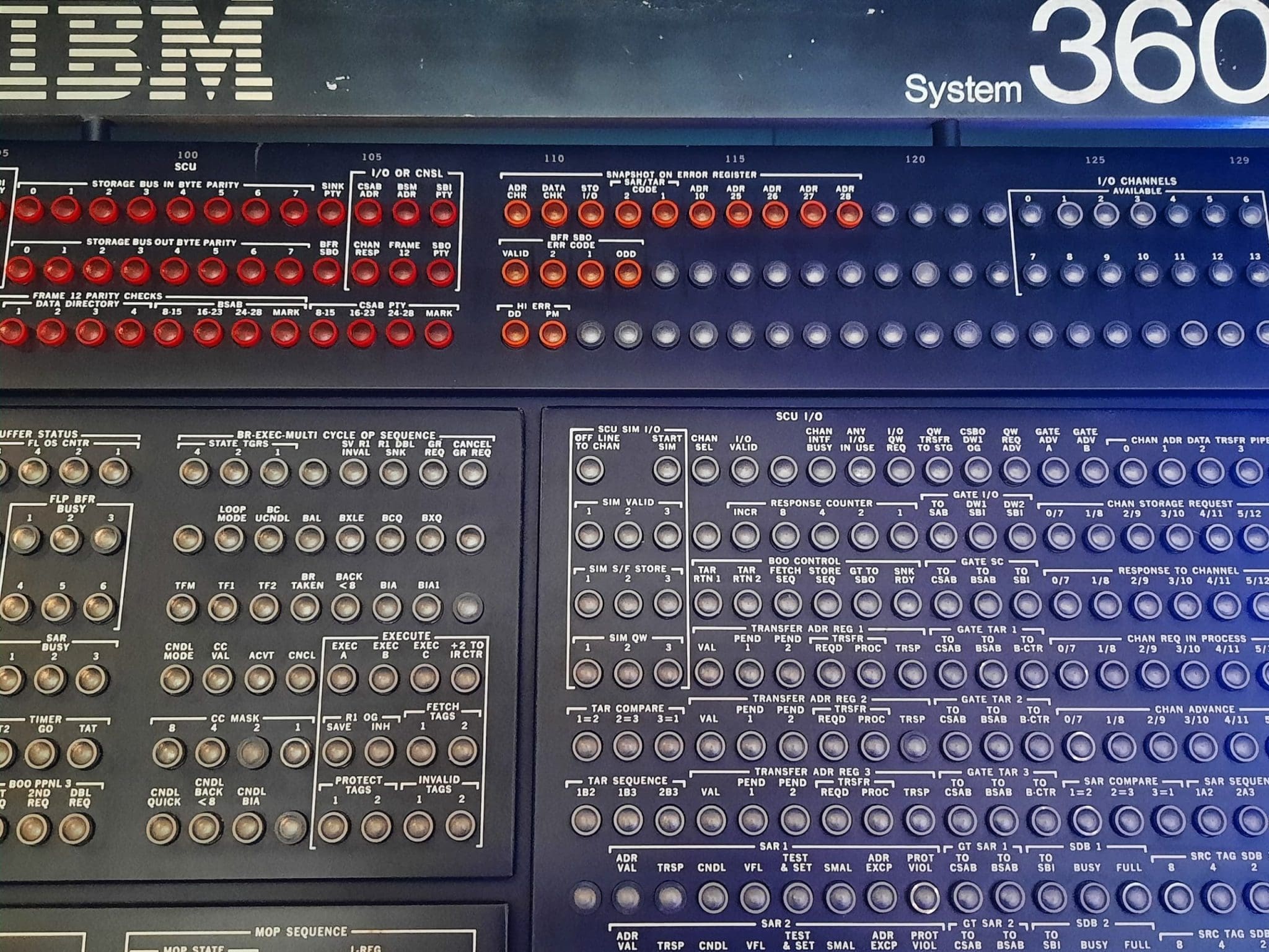

Vacuum Tube Computers In The Mid-20th Century

The development of vacuum tube computers marked a pivotal era in computing history, transitioning from mechanical calculators to electronic systems. The origins of vacuum tubes in computing can be traced back to Lee De Forest’s invention of the triode in 1906, which enabled signal amplification and laid the groundwork for their use in early computing devices. This innovation was crucial because it allowed for faster and more complex operations than were possible with mechanical systems.

The Atanasoff-Berry Computer (ABC), developed between 1937 and 1942 at what is now Iowa State University, stands as a landmark in this evolution. It was among the first computing machines to combine binary arithmetic with electronic logic, and it featured separate memory and processing units, setting a foundation for modern computing architectures. This machine demonstrated the potential of electronic computation, moving beyond the limitations of mechanical systems.

World War II significantly accelerated the development of vacuum tube computers, with military applications driving advancements. The Colossus, first demonstrated in December 1943 and operational at Bletchley Park in early 1944, was pivotal in code-breaking efforts, highlighting the strategic importance of electronic computing during the war. This period underscored how wartime needs could rapidly advance technological capabilities.

The ENIAC, completed in 1945, represented a major milestone as the first general-purpose electronic computer. Utilizing over 17,000 vacuum tubes, it achieved unprecedented computational speeds, performing tasks that had been impractical with mechanical systems. The ENIAC’s development marked the beginning of the digital age, showcasing the transformative potential of electronic computing.

Despite their advancements, vacuum tube computers faced significant limitations. They were bulky and power-intensive, generated substantial heat, and were prone to frequent failures because the tubes burned out. These challenges highlighted the need for more reliable and efficient technologies, which led to the eventual transition to transistor-based systems in the late 1950s.

The Invention Of The Transistor In 1947

The invention of the transistor in 1947 marked a pivotal moment in the evolution of computing technology. Before this, electronic computers relied heavily on vacuum tubes, which were bulky, consumed significant power, and were prone to failure. The transistor, developed by John Bardeen, Walter Brattain, and William Shockley at Bell Laboratories, offered a more reliable, compact, and energy-efficient alternative. This breakthrough revolutionized the design of computing machines and paved the way for the miniaturization and widespread adoption of electronic devices.

The development of the transistor was rooted in the need to overcome the limitations of vacuum tubes. Vacuum tubes had been instrumental in early computing efforts, such as the ENIAC (Electronic Numerical Integrator and Computer), which contained over 17,000 tubes. However, these tubes generated substantial heat, required frequent replacement, and contributed to early computers’ large size and high power consumption. The transistor addressed these issues by utilizing semiconductor materials, enabling the creation of smaller, faster, and more durable electronic components.

The impact of the transistor on computing technology was profound. By replacing vacuum tubes with transistors, engineers could design computers that were significantly smaller, more efficient, and less prone to failure. This led to the first transistorized machines, including the experimental all-transistor version of the IBM 604 calculator that IBM demonstrated in 1954; the original IBM 604 was a vacuum-tube design. The success of this machine demonstrated the practical advantages of transistor-based computing and set the stage for further advancements in the field.

The invention of the transistor also had far-reaching implications beyond the realm of computing. It played a crucial role in developing other electronic devices, such as televisions, radios, and eventually, integrated circuits. The ability to mass-produce transistors at a low cost further accelerated the pace of technological innovation, making advanced electronics accessible to a broader audience.

In summary, the invention of the transistor in 1947 was a landmark achievement that fundamentally transformed the landscape of computing technology. By addressing the limitations of vacuum tubes and enabling the creation of smaller, more efficient electronic components, the transistor laid the foundation for the modern digital age. Its influence can still be seen today in the widespread use of semiconductor-based devices across various industries.

Silicon-based Integrated Circuits And Microprocessors

The history of computing devices spans centuries, beginning with Charles Babbage’s conceptualization of the Analytical Engine in the 1830s. This mechanical computer was designed to perform complex calculations using punched cards, a precursor to modern programming. Ada Lovelace, collaborating with Babbage, recognized its potential for more than just arithmetic, envisioning it as a tool capable of creating music and art. Her work laid foundational concepts for software programming, making her the first programmer in history.

The early 20th century saw significant advancements with the introduction of vacuum tubes, which replaced mechanical components in computing devices. The Atanasoff-Berry Computer (ABC), developed in the late 1930s, was one of the first electronic computers, utilizing binary arithmetic and regenerative memory. During World War II, the need for rapid computation led to the creation of machines like the Colossus in Britain and the ENIAC in the United States. These developments marked a shift towards electronic computing, setting the stage for modern digital systems.

A pivotal moment came in 1947 with the invention of the transistor at Bell Labs, which replaced bulky vacuum tubes and enabled smaller, faster computers. This innovation led to the development of integrated circuits (ICs) in the late 1950s. Jack Kilby at Texas Instruments created the first IC in 1958, followed a year later by Robert Noyce’s silicon-based version at Fairchild Semiconductor, which was better suited to mass production. These advancements allowed multiple transistors to be embedded on a single chip, significantly enhancing computing power and efficiency.

The rise of Silicon Valley in the 1960s and 1970s was driven by the semiconductor industry, with companies like Intel leading the charge. In 1971, Intel introduced the first commercially available microprocessor, the 4004, which integrated an entire computer’s central processing unit onto a single chip. This breakthrough revolutionized computing, making it accessible for personal use and sparking the digital age.

The evolution from mechanical devices to silicon-based integrated circuits represents a transformative journey in computing technology. Each innovation built upon previous advancements, culminating in the powerful microprocessors that define modern computing. These developments have not only advanced technology but also reshaped society, enabling unprecedented connectivity and computational capabilities.