Flotilla, a new federated learning framework, facilitates scalable and resilient distributed machine learning on edge devices. In evaluations with over 200 clients, it recovers rapidly from failures and uses resources on par with existing frameworks such as Flower, OpenFL and FedML, while scaling better to large numbers of clients.

The increasing prevalence of mobile and edge computing, coupled with growing demands for data privacy, fuels the development of distributed machine learning techniques such as Federated Learning (FL). This approach allows algorithms to train on decentralised data sources, minimising the need to centralise sensitive information. However, existing FL frameworks often prioritise the learning process itself, neglecting the practical challenges of deployment on diverse and potentially unreliable edge hardware. Roopkatha Banerjee, Prince Modi, and colleagues from the Indian Institute of Science (IISc) and the Birla Institute of Technology and Science (BITS) address this gap in their paper, ‘Flotilla: A scalable, modular and resilient federated learning framework for heterogeneous resources’, which introduces the Flotilla framework. The team’s work focuses on building a system that supports both synchronous and asynchronous learning strategies, withstands client and server failures, and uses resources efficiently on edge devices.

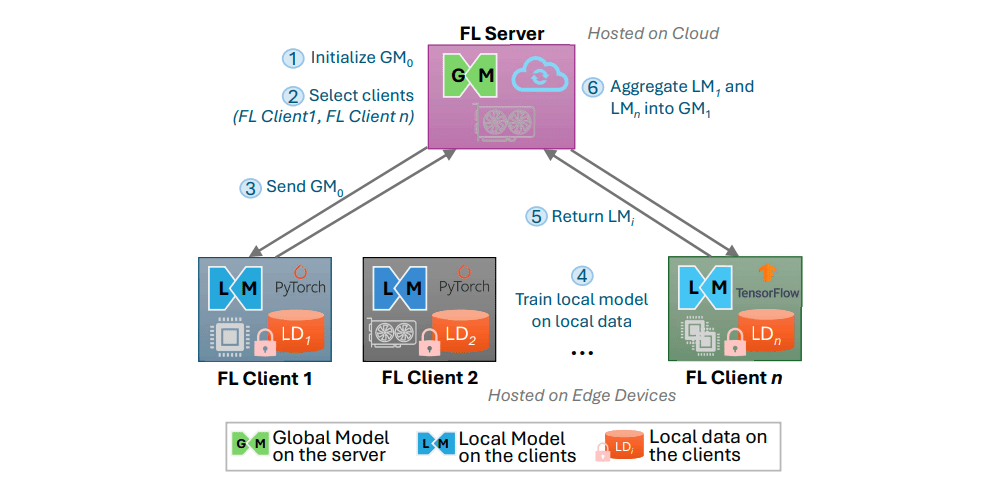

Federated learning (FL), a distributed machine learning technique, enables model training across a decentralised network of edge devices, such as smartphones or IoT sensors, without exchanging the data itself. This preserves data privacy and reduces communication costs, but presents significant engineering challenges when deploying at scale on resource-constrained hardware. Flotilla addresses these challenges with a framework designed for scalable and lightweight implementation of FL.

The architecture prioritises asynchronous aggregation, in which model updates from participating devices are incorporated into the global model without first being synchronised with one another. This contrasts with synchronous FL, where a round can be held up by slower devices. Flotilla’s stateless clients, combined with externalised session state, contribute to a resilient architecture capable of rapid failover, demonstrated in testing with over 200 clients. Statelessness means each client operates independently, without retaining information about previous interactions, which simplifies recovery from failures.
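To make the contrast with synchronous rounds concrete, the following is a minimal sketch of asynchronous aggregation in plain Python. It is not Flotilla's code or API: the `AsyncAggregator` class, the `alpha` mixing rate and the staleness discount are all assumptions, chosen in the general style of staleness-weighted asynchronous FL. Each update is merged as soon as it arrives, and updates computed against an older global model count for less.

```python
from dataclasses import dataclass


@dataclass
class AsyncAggregator:
    """Hypothetical server-side aggregator (illustrative, not Flotilla's API)."""

    weights: list[float]
    alpha: float = 0.5  # assumed base mixing rate
    version: int = 0    # number of updates applied so far

    def apply_update(self, client_weights: list[float], base_version: int) -> float:
        """Merge one client update immediately, without waiting for other clients."""
        # Staleness: how many global updates landed since the client fetched the model.
        staleness = self.version - base_version
        if staleness < 0:
            raise ValueError("client reports a model version newer than the server's")
        # Assumed discount: stale updates get a smaller share of the new global model.
        mix = self.alpha / (1 + staleness)
        self.weights = [
            (1 - mix) * g + mix * c for g, c in zip(self.weights, client_weights)
        ]
        self.version += 1
        return mix


agg = AsyncAggregator(weights=[0.0, 0.0])
fast_mix = agg.apply_update([1.0, 1.0], base_version=0)  # fresh update
slow_mix = agg.apply_update([1.0, 1.0], base_version=0)  # same base model, now stale
print(fast_mix, slow_mix, agg.weights)
```

In this sketch the slower client never blocks the faster one; its contribution is simply down-weighted because the global model moved on while it was training.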

Flotilla’s modular design allows for flexible composition of different FL strategies and deep neural network (DNN) architectures. This adaptability has been confirmed through evaluation with five distinct FL strategies and various DNN models. The framework’s performance is comparable to, or exceeds, that of existing FL frameworks like Flower, OpenFL and FedML, particularly on resource-constrained devices such as Raspberry Pi and Jetson boards. These boards represent a common platform for edge computing due to their low cost and energy consumption.
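One way to picture this kind of modularity is a small plug-in interface, sketched below in Python. Every name here (`AggregationStrategy`, `FedAvg`, `UniformAverage`, `run_round`) is hypothetical and does not come from Flotilla. FedAvg, which weights each client by its number of local samples, is used only as a familiar example; the article does not name the five strategies that were evaluated.

```python
from typing import Protocol, Sequence

Weights = list[float]
Update = tuple[Weights, int]  # (client weights, number of local samples)


class AggregationStrategy(Protocol):
    """Hypothetical plug-in interface: any object with this method can be swapped in."""

    def aggregate(self, updates: Sequence[Update]) -> Weights: ...


class FedAvg:
    """Average client weights, weighted by each client's local sample count."""

    def aggregate(self, updates: Sequence[Update]) -> Weights:
        total = sum(n for _, n in updates)
        dim = len(updates[0][0])
        return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


class UniformAverage:
    """Average client weights, ignoring sample counts."""

    def aggregate(self, updates: Sequence[Update]) -> Weights:
        dim = len(updates[0][0])
        return [sum(w[i] for w, _ in updates) / len(updates) for i in range(dim)]


def run_round(strategy: AggregationStrategy, updates: Sequence[Update]) -> Weights:
    # The round logic depends only on the interface, not on a concrete strategy.
    return strategy.aggregate(updates)


updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
print(run_round(FedAvg(), updates))          # sample-weighted average
print(run_round(UniformAverage(), updates))  # plain average
```

Because `run_round` only relies on the `aggregate` method, a new strategy can be added without touching the rest of the training loop, which is the property a modular FL framework aims for.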

Scalability testing shows that Flotilla outperforms alternative frameworks in specific scenarios, notably with large numbers of clients, suggesting its potential for deployment in large-scale, distributed learning environments. The framework’s lightweight nature and asynchronous aggregation contribute to its efficiency, reducing the computational burden on individual devices and improving overall system responsiveness.

👉 More information

🗞 Flotilla: A scalable, modular and resilient federated learning framework for heterogeneous resources

🧠 DOI: https://doi.org/10.48550/arXiv.2507.02295