Modern methods for estimating optical flow, which determines how objects move within a scene, rely heavily on ‘cost volumes’, but these complex structures limit both processing speed and the resolution of images they can handle. Simon Kiefhaber, Stefan Roth, and Simone Schaub-Meyer, all from the Technical University of Darmstadt and the Hessian Center for AI, demonstrate a new training strategy that eliminates the need for cost volumes altogether. Their approach stems from the observation that these volumes become less critical as other parts of the flow estimation network become well-trained. This innovation leads to significantly faster processing and reduced memory requirements, culminating in models that achieve state-of-the-art accuracy with a smaller computational footprint, and even enable real-time processing of high-definition video on limited hardware.

Researchers address this limitation by developing methods to reduce the memory footprint of these estimators during training, ultimately creating fast and accurate optical flow estimators. The most accurate model developed achieves state-of-the-art accuracy while demonstrating improved efficiency in both inference and memory usage compared to existing systems. The most efficient model predicts sharper motion boundaries than widely used methods, while maintaining comparable efficiency. These advancements represent a significant step towards real-time, high-resolution optical flow estimation.

Cost Volume Removal for Efficient Flow Estimation

Researchers have fundamentally re-evaluated the role of cost volumes in optical flow estimation, recognizing that advancements in network architectures and training datasets allow for their removal without sacrificing performance. Cost volumes, traditionally essential for calculating motion, become redundant when the estimation system is trained strategically, leading to significant improvements in processing speed and reduced memory requirements. This work demonstrates that by carefully phasing out cost volumes during training, researchers can create efficient and accurate optical flow estimators. The team observed that the importance of cost volumes diminishes as other parts of the estimation pipeline become sufficiently trained.

This insight led to a novel training strategy where the cost volume is gradually removed, allowing the network to learn motion estimation without relying on this computationally expensive component. The key benefit is a substantial reduction in memory usage, as the network no longer needs to store and process large cost volumes during inference. Experiments reveal that this approach allows for the creation of models with varying computational budgets, offering a trade-off between accuracy, speed, and memory usage. The team developed three distinct models, each optimized for different performance requirements.
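To give a rough sense of why cost volumes weigh so heavily on memory, the following sketch estimates the size of a dense all-pairs cost volume. It rests on assumptions that are not stated in this article: a RAFT-style volume that stores one correlation value for every pair of feature positions, features computed at 1/8 of the input resolution, and 32-bit floats. The function name and parameters are hypothetical, and the cost-volume design used in the paper may differ.

```python
def all_pairs_cost_volume_bytes(height, width, stride=8, bytes_per_value=4):
    """Estimate memory for a dense all-pairs cost volume.

    Assumptions (not from the article): features live at 1/stride
    resolution and every position is correlated with every other one.
    """
    feat_h, feat_w = height // stride, width // stride
    positions = feat_h * feat_w      # feature positions per frame
    entries = positions * positions  # one value per pair of positions
    return entries * bytes_per_value


full_hd = all_pairs_cost_volume_bytes(1080, 1920)
print(f"Full HD: {full_hd / 2**30:.2f} GiB")  # prints "Full HD: 3.91 GiB"
```

Under these assumptions the volume grows with the square of the pixel count, so doubling both image dimensions multiplies its size by 16. That scaling is one way to see why dropping the cost volume matters most at high resolutions.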

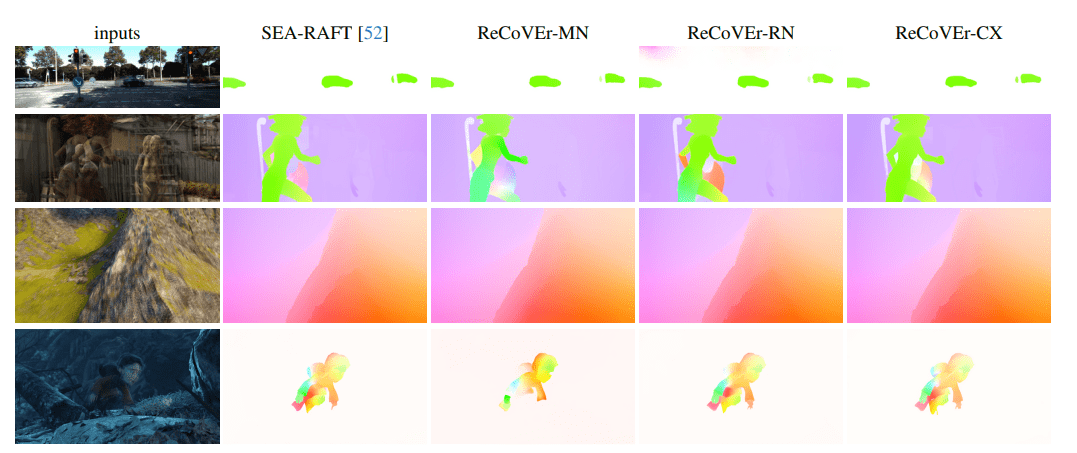

Detailed analysis confirms that reducing FLOPs translates to faster runtime, especially when memory access bottlenecks are addressed. The results demonstrate that the strategy improves performance on various datasets, including Sintel, Monkaa, and Spring. Qualitative examples on DAVIS, KITTI, Sintel, and Spring illustrate the quality of the estimated optical flow and provide visual evidence of the effectiveness of the approach. The team also explored downsampling and upsampling techniques to further improve efficiency, finding that these methods can enhance performance on certain datasets.

Cost Volume Removal Accelerates Optical Flow Estimation

Researchers have developed a new training strategy that enables the removal of cost volumes from optical flow estimators, significantly improving processing speed and reducing memory requirements. This work demonstrates a pathway to overcome the limitations imposed by the computational complexity of traditional cost volumes. The team achieved this breakthrough by observing that the importance of cost volumes diminishes once other parts of a pipeline are sufficiently trained. Experiments reveal that by strategically removing the cost volume during training, the team created models with varying computational budgets.

Their most accurate model achieves state-of-the-art accuracy while simultaneously being faster and requiring less memory than comparable systems. Notably, their fastest model can process Full HD frames using only 0.93 GB of GPU memory, a substantial reduction in resource demand. Initial tests demonstrated that simply removing the cost volume early in training led to worse results, but by carefully controlling when the cost volume is phased out, performance could be maintained. Further analysis showed that fading out or completely cutting off the cost volume after a certain number of training steps yielded similar improvements over a system that never removed the cost volume.
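The two removal variants described here, a gradual fade-out and a hard cut-off, can be pictured as a weight applied to the cost-volume features during training. The sketch below is only an illustration under stated assumptions: the linear ramp, the function name `cost_volume_weight`, and the parameters `removal_start` and `fade_steps` are hypothetical and are not taken from the paper, which may schedule the removal differently.

```python
def cost_volume_weight(step, removal_start, fade_steps=0):
    """Weight in [0, 1] applied to cost-volume features at a training step.

    Hypothetical schedule: full weight before `removal_start`, then either
    a hard cut-off (fade_steps == 0) or a linear fade over `fade_steps`.
    """
    if step < removal_start:
        return 1.0                       # cost volume fully active
    if fade_steps <= 0:
        return 0.0                       # hard cut-off
    progress = (step - removal_start) / fade_steps
    return max(0.0, 1.0 - progress)      # linear fade, clamped at zero


# Hard cut-off at step 100 versus a fade over the following 100 steps.
print(cost_volume_weight(150, removal_start=100))                  # 0.0
print(cost_volume_weight(150, removal_start=100, fade_steps=100))  # 0.5
```

In a training loop, such a weight would multiply the cost-volume features before they reach the rest of the network. Once it reaches zero, the cost volume no longer contributes and can be dropped from the model.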

Specifically, the team found that removing the cost volume before training on the FlyingThings dataset resulted in the best performance. Measurements confirm that this strategy can improve endpoint error on the Spring dataset by up to 0.18 pixels. The results demonstrate a significant advancement in optical flow estimation, paving the way for more efficient and resource-conscious systems.
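For readers unfamiliar with the metric, endpoint error is commonly defined as the Euclidean distance between the predicted and ground-truth flow vectors, averaged over pixels and measured in pixels. The sketch below shows that standard definition in plain Python. The function name and the list-of-tuples input format are illustrative choices, not the paper's evaluation code.

```python
import math


def endpoint_error(predicted, ground_truth):
    """Average Euclidean distance between predicted and true flow vectors.

    Both inputs are equal-length sequences of (u, v) displacements in pixels.
    """
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(
        math.hypot(pu - gu, pv - gv)
        for (pu, pv), (gu, gv) in zip(predicted, ground_truth)
    )
    return total / len(predicted)


# One pixel is off by (3, 4), i.e. 5 px; the other is exact.
print(endpoint_error([(3.0, 4.0), (0.0, 0.0)], [(0.0, 0.0), (0.0, 0.0)]))  # 2.5
```

On this scale, an improvement of 0.18 pixels means the predicted motion vectors land, on average, 0.18 pixels closer to the ground truth.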

Cost Volume Removal Speeds Optical Flow Estimation

This work presents a comprehensive re-evaluation of cost volumes within optical flow estimation, considering advancements in convolutional network architectures and available datasets. Researchers discovered that while cost volumes are initially necessary during training, they become redundant with an appropriate training strategy, allowing for their removal during inference. This innovation significantly improves processing speed and reduces memory requirements without compromising accuracy. To demonstrate this approach, the team developed three distinct models, each optimized for different computational budgets.

The most accurate model achieves state-of-the-art performance while maintaining a lower memory footprint and faster processing speed than comparable methods. The team acknowledges that their evaluation focused on the impact of replacing the context network, and future work could extend the analysis beyond this component to further optimize performance. This research represents a significant step towards creating more efficient and resource-conscious optical flow estimation systems, enabling real-time applications in areas like autonomous driving and robotics. The team’s findings demonstrate that strategic training can unlock substantial performance gains by eliminating unnecessary computational overhead.

👉 More information

🗞 Removing Cost Volumes from Optical Flow Estimators

🧠 ArXiv: https://arxiv.org/abs/2510.13317