The quest to harness quantum mechanics for secure communication and random number generation relies on demonstrating strong violations of Bell inequalities, but these tests are surprisingly vulnerable to background noise. Piotr Mironowicz, affiliated with Gdańsk University of Technology and the Polish Academy of Sciences, and Mohamed Bourennane from Stockholm University investigate how spurious detection events, known as accidental counts, degrade the observed violation of these inequalities. The team proposes a new model that directly connects the strength of the Bell violation to the power of the laser used to create entangled photons, revealing that increasing brightness can paradoxically reduce the evidence for quantum nonlocality. This research provides practical guidance for optimizing quantum light sources, paving the way for real-world quantum technologies that are less susceptible to noise and operate at higher rates.

In photonic experiments designed to test fundamental quantum mechanics, false detection events, which do not originate from genuine entangled photon pairs, impact the observed violation of Bell inequalities. These false coincidences become increasingly significant at higher laser pump powers, ultimately limiting the strength of Bell violations and, consequently, the performance of quantum protocols such as device-independent quantum random number generation and quantum key distribution.

Certifying Randomness via Quantum Non-Locality

The paper focuses on quantum randomness, non-locality, and device-independent quantum key distribution (QKD). It explores how to generate and certify truly random numbers and secure communication without relying on the internal workings of the quantum devices used, which matters because imperfections in real-world devices can be exploited. The paper uses Bell’s theorem and quantum non-locality as the foundation for certifying randomness and security: a violation of a Bell inequality demonstrates that the observed correlations cannot be explained by any classical (local hidden-variable) theory. A significant focus is on experimental verification of these concepts, with the authors highlighting numerous experiments that have certified randomness and QKD in a device-independent manner.

They emphasize the challenges of achieving this in practice due to detector imperfections and other noise sources. The paper also covers more advanced protocols, such as randomness expansion and informationally complete quantum measurements, which extend the capabilities of device-independent QKD and randomness generation. The potential applications include communication whose security does not depend on trusting the devices, certified random numbers for cryptography and simulations, and the development of new quantum cryptographic protocols. Key concepts explained within the paper include Bell’s inequalities, device-independent QKD, and randomness expansion.
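To make the idea of a Bell violation concrete, the following minimal sketch evaluates the CHSH form of Bell's inequality. It is an illustration, not code from the paper: the function names are hypothetical, and it assumes an ideal singlet state with the textbook correlation E(a, b) = -cos(a - b) (for polarization-entangled photons the angle dependence is usually written with twice the polarizer angle). Any local deterministic strategy gives |S| ≤ 2, while the quantum correlation reaches 2√2.

```python
import math
from itertools import product


def singlet_correlator(a: float, b: float) -> float:
    """Ideal singlet-state correlation for analyzer angles a, b (radians).

    Assumption: textbook spin-1/2 convention, E(a, b) = -cos(a - b).
    """
    return -math.cos(a - b)


def chsh(a0: float, a1: float, b0: float, b1: float, corr=singlet_correlator) -> float:
    """CHSH combination S = E(a0,b0) + E(a0,b1) + E(a1,b0) - E(a1,b1)."""
    return corr(a0, b0) + corr(a0, b1) + corr(a1, b0) - corr(a1, b1)


def local_bound() -> int:
    """Largest |S| over all deterministic local assignments of +/-1 outcomes."""
    return max(
        abs(x0 * y0 + x0 * y1 + x1 * y0 - x1 * y1)
        for x0, x1, y0, y1 in product((-1, 1), repeat=4)
    )


# Settings that maximize the violation for the singlet state.
s_quantum = chsh(0.0, math.pi / 2, math.pi / 4, -math.pi / 4)
print(f"classical bound: {local_bound()}, quantum |S|: {abs(s_quantum):.4f}")
```

Device-independent protocols rely on the gap between these two numbers: the further the measured |S| lies above 2, the more randomness or secret key can be certified.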

Accidental Counts Limit Bell Inequality Violation

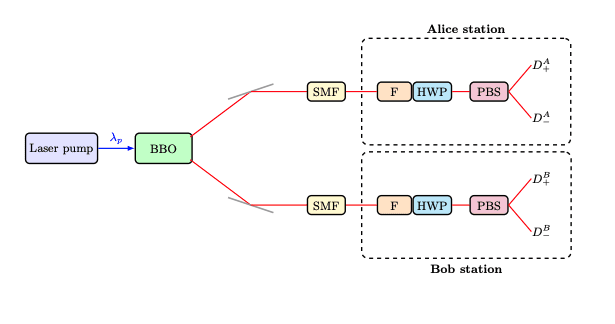

Researchers have investigated the impact of unwanted detection events, known as accidental counts, on the strength of quantum correlations observed in experiments testing Bell’s inequalities. These accidental counts, arising from the simultaneous generation of multiple entangled photon pairs, become increasingly problematic at higher laser power, effectively masking the genuine quantum signals. The team’s work demonstrates that these counts limit the degree to which Bell inequalities can be violated, impacting the performance of technologies reliant on strong quantum correlations. The study focuses on experiments utilizing spontaneous parametric down-conversion (SPDC), a process where a laser beam generates pairs of entangled photons.

While increasing the laser power naturally boosts the rate of photon pair creation, it also elevates the probability of these spurious accidental counts. Understanding this trade-off between signal strength and noise is crucial for optimizing quantum technologies. The researchers developed a model that quantitatively links the observed violation of Bell’s inequalities to the laser pump power. This model accounts for the probabilistic nature of photon pair generation, the imperfect efficiency of detectors, and the contribution of these accidental coincidences. By fitting the model to experimental data, they accurately predicted Bell values across a range of pump settings, demonstrating its ability to capture the complex interplay between signal and noise.
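The trade-off can be illustrated with a deliberately simple toy model. This is not the model from the paper, whose equations are not reproduced in this article. The sketch assumes that the mean number of pairs per coincidence window, `mu`, is proportional to pump power (the low-gain SPDC regime), that both detectors have the same efficiency `eta`, that `dark` is a hypothetical per-window noise probability, and that accidental coincidences are uncorrelated, so they scale as the product of the two singles probabilities. All names and default values are illustrative.

```python
import math

TSIRELSON = 2 * math.sqrt(2)  # maximal quantum CHSH value


def visibility(mu: float, eta: float = 0.2, dark: float = 1e-6) -> float:
    """Toy visibility: true coincidences over all coincidences.

    Assumptions (not from the paper): true coincidences ~ mu * eta^2,
    singles ~ mu * eta + dark per side, accidentals ~ singles^2.
    """
    true_coinc = mu * eta**2
    singles = mu * eta + dark
    accidental = singles**2
    return true_coinc / (true_coinc + accidental)


def chsh_value(mu: float, eta: float = 0.2, dark: float = 1e-6, v0: float = 1.0) -> float:
    """Toy CHSH value: the ideal 2*sqrt(2) scaled by source quality v0 and visibility."""
    return TSIRELSON * v0 * visibility(mu, eta, dark)


for mu in (0.001, 0.01, 0.1, 0.5):
    s = chsh_value(mu)
    status = "violation" if s > 2 else "no violation"
    print(f"mu = {mu:5.3f}  S = {s:.3f}  ({status})")
```

With `dark = 0` the toy visibility reduces to 1 / (1 + mu), so the CHSH value falls below the classical bound of 2 once `mu` exceeds √2 - 1 ≈ 0.41: more light, less violation. A realistic model would also need multi-pair statistics and detector details, which this sketch ignores.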

This predictive capability is particularly valuable for optimizing experimental setups. The findings have significant implications for device-independent quantum technologies, such as quantum random number generation and quantum key distribution. The research demonstrates that simply increasing laser power does not necessarily improve performance, as the resulting increase in accidental counts can counteract the benefits. Instead, the team’s model provides a means to optimize pump power for a given experimental setup, maximizing the quality of randomness or key generation. Because the fitted model reproduces Bell values across a range of pump settings, it can be used to choose a source brightness that preserves the nonlocality needed for secure quantum communication. Future work could broaden the model’s applicability and refine its predictive power for diverse quantum technologies.
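As a rough illustration of such an optimization, the sketch below scans the pump strength for the value that maximizes certified randomness per coincidence window. It is a sketch under stated assumptions, not the authors' procedure: the visibility model is a toy (uncorrelated accidentals, hypothetical parameters `eta` and `dark`), every detected coincidence is counted as one protocol round, and the per-round randomness uses the known CHSH min-entropy bound H_min ≥ 1 - log2(1 + sqrt(2 - S²/4)), which applies to ideal independent rounds.

```python
import math

TSIRELSON = 2 * math.sqrt(2)


def chsh_value(mu: float, eta: float = 0.2, dark: float = 1e-6) -> float:
    """Toy CHSH value versus mean pair number mu (assumed proportional to pump power)."""
    true_coinc = mu * eta**2
    accidental = (mu * eta + dark) ** 2  # assumption: uncorrelated singles
    return TSIRELSON * true_coinc / (true_coinc + accidental)


def min_entropy_per_round(s: float) -> float:
    """Min-entropy bound from the CHSH value: 1 - log2(1 + sqrt(2 - S^2/4))."""
    if s <= 2.0:
        return 0.0  # no violation, no certified randomness
    s = min(s, TSIRELSON)
    return 1.0 - math.log2(1.0 + math.sqrt(max(0.0, 2.0 - s * s / 4.0)))


def randomness_rate(mu: float, eta: float = 0.2, dark: float = 1e-6) -> float:
    """Certified bits per coincidence window in the toy model."""
    coincidence_prob = mu * eta**2 + (mu * eta + dark) ** 2
    return coincidence_prob * min_entropy_per_round(chsh_value(mu, eta, dark))


def best_pump(grid):
    """Grid search for the pump strength with the highest toy randomness rate."""
    return max(grid, key=randomness_rate)


grid = [i / 1000 for i in range(1, 500)]
mu_opt = best_pump(grid)
print(f"optimal mu ~ {mu_opt:.3f}, S = {chsh_value(mu_opt):.3f}, "
      f"rate = {randomness_rate(mu_opt):.2e} bits/window")
```

In this toy model the rate vanishes at both extremes: at very low pump there are almost no events, and at high pump the CHSH value drops to 2 and nothing can be certified. The optimum lies in between, which mirrors the qualitative message of the study.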

👉 More information

🗞 When More Light Means Less Quantum: Modeling Bell Inequality Degradation from Accidental Counts

🧠 DOI: https://doi.org/10.48550/arXiv.2507.20596