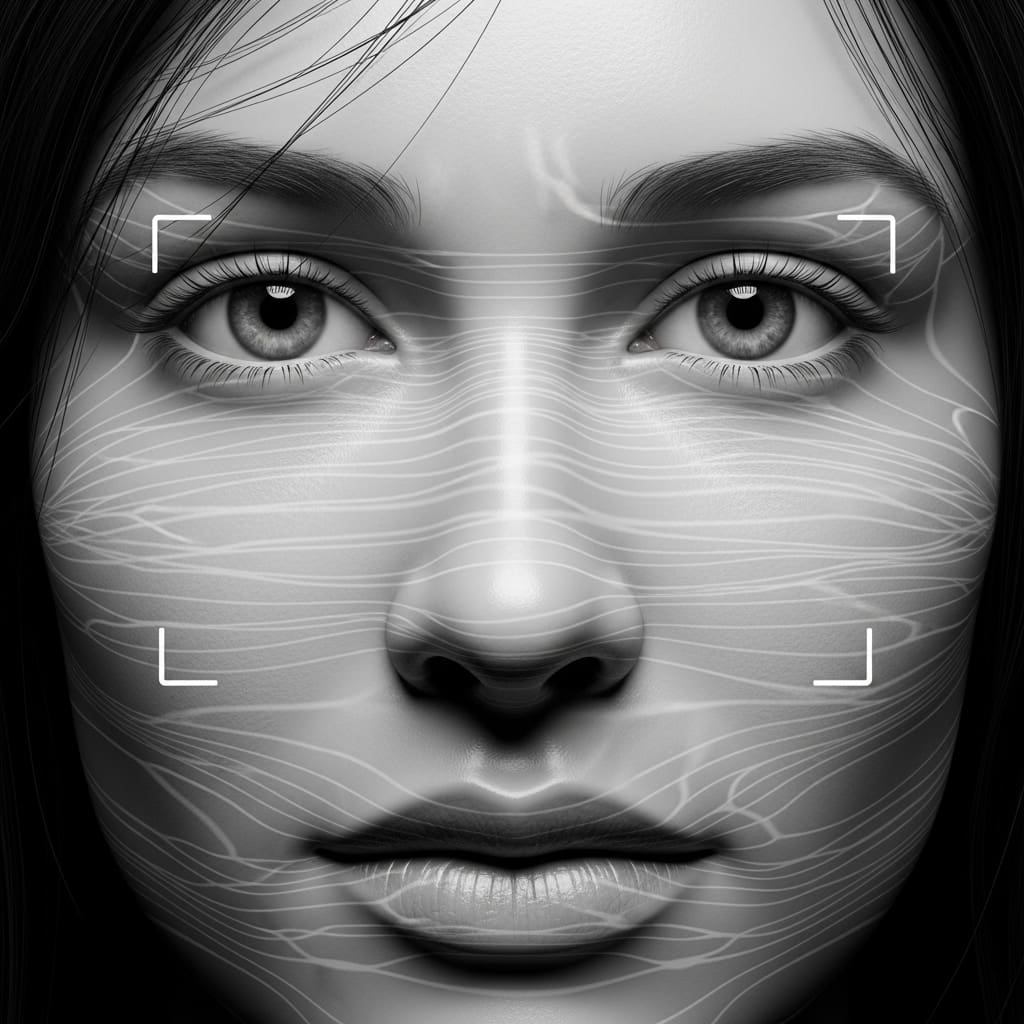

Facial identity verification systems are increasingly vulnerable to sophisticated attacks, prompting research into methods of both deception and detection. Shahrzad Sayyafzadeh, Hongmei Chi, and Shonda Bernadin, from the FAMU-FSU College of Engineering and Florida A&M University, detail a novel pipeline for creating and analysing adversarial patches designed to compromise these systems. Their work focuses on generating subtle, yet effective, visual alterations that can evade facial recognition, while simultaneously developing forensic tools to identify such manipulations. This research is significant because it addresses a critical gap in biometric security, offering insights into both the potential for attack and the means of safeguarding against it. By combining diffusion models with adversarial techniques, the team demonstrates a powerful approach to understanding vulnerabilities in facial identity verification and expression recognition technologies.

Stable Diffusion Generates Adversarial Face Patches

Adversarial Patch Generation for Facial Biometrics

The research team engineered an end-to-end pipeline to generate, refine, and evaluate adversarial patches designed to compromise facial biometric systems, with direct applications in forensic analysis and security testing protocols. First, the Fast Gradient Sign Method (FGSM) was used to create adversarial noise targeting identity classification models, establishing a baseline for patch generation. This noise was then refined by the reverse process of a diffusion model, with Gaussian smoothing and adaptive brightness correction applied to make it imperceptible; the result is a synthetic adversarial patch that can evade recognition systems while preserving natural visual characteristics. To assess their efficacy, the refined patches were applied to facial images and tested against several recognition systems, measuring changes in identity classification and captioning results.
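As a rough illustration of the FGSM step only, the sketch below perturbs an input by `epsilon * sign(gradient of the loss with respect to the input)`. It is not the authors' implementation: a tiny logistic-regression model stands in for the identity classifier, and every name and number (`fgsm_perturb`, `weights`, `epsilon=0.1`, the four-value "image") is a hypothetical chosen for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, weights, bias):
    """Probability that x belongs to the positive (true identity) class."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fgsm_perturb(x, y, weights, bias, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(dLoss/dx).

    For logistic regression with binary cross-entropy loss, the gradient
    of the loss with respect to the input is (p - y) * w.
    """
    p = predict(x, weights, bias)
    grad = [(p - y) * w for w in weights]
    sign = [(g > 0) - (g < 0) for g in grad]
    # Clamp to [0, 1] so the result is still a valid normalized pixel vector.
    return [min(1.0, max(0.0, xi + epsilon * s)) for xi, s in zip(x, sign)]


# Hypothetical toy classifier and input (assumptions, not from the paper).
weights = [2.0, -1.5, 0.5, 1.0]
bias = -0.5
x = [0.6, 0.2, 0.5, 0.4]
y = 1  # true label

x_adv = fgsm_perturb(x, y, weights, bias, epsilon=0.1)
confidence_before = predict(x, weights, bias)
confidence_after = predict(x_adv, weights, bias)
```

Each pixel moves by at most `epsilon`, yet the classifier's confidence in the true label drops, which is the property the pipeline's later refinement stages build on.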

A Vision Transformer (ViT)-GPT2 model was developed to generate semantic descriptions of individuals in the adversarial images, supporting forensic interpretation and documentation of identity evasion and recognition attacks. This allows analysis of how adversarial patches affect both the technical performance of biometric systems and the interpretability of the resulting data. The study also presents a multimodal detection system for identifying adversarial patches and samples, which uses perceptual hashing and segmentation techniques and reports a Structural Similarity Index (SSIM) of 0.95. The Hamming distance between perceptual hashes quantifies perceptual change, with a threshold of 5 used to flag tampering.
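The hash comparison can be sketched as follows. The article does not say which perceptual hash the authors use, so this example assumes a simple average hash over an 8×8 grayscale grid, and it assumes that a distance strictly greater than 5 counts as tampering. All function names and the test images are hypothetical.

```python
def average_hash(pixels):
    """Return one bit per pixel: 1 if the pixel is brighter than the mean.

    `pixels` is a 2D list of grayscale values, assumed to be already
    downscaled to a small grid (8x8 here).
    """
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    return [1 if v > mean else 0 for v in flat]


def hamming_distance(bits_a, bits_b):
    """Number of positions at which two equal-length bit lists differ."""
    if len(bits_a) != len(bits_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(bits_a, bits_b))


def is_tampered(original, suspect, threshold=5):
    """Flag the suspect image when the hash distance exceeds the threshold.

    The threshold value 5 comes from the article; treating it as a strict
    'greater than' comparison is an assumption.
    """
    distance = hamming_distance(average_hash(original), average_hash(suspect))
    return distance > threshold


# Hypothetical 8x8 gradient image with values 0..252.
original = [[4 * (r * 8 + c) for c in range(8)] for r in range(8)]

# Same image with a bright 3x3 "patch" pasted in the top-left corner.
patched = [row[:] for row in original]
for r in range(3):
    for c in range(3):
        patched[r][c] = 255

# Same image with a uniform +1 brightness shift (a benign change).
brightened = [[v + 1 for v in row] for row in original]
```

The uniform brightness shift leaves the hash unchanged, while the pasted patch flips enough bits to cross the threshold, which is the behaviour that makes hash distance useful as a first tampering signal.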

Beyond hash distance, the system incorporates Structural Similarity Index differences, segmentation anomaly detection, contour analysis, heatmap analysis, and label change monitoring during classification, so that detection does not depend on any single signal. This multifaceted approach is intended to catch subtle manipulations that a single image-comparison method can miss. By combining perceptual hashing with multiple detection modalities, the research aims to safeguard biometric security and maintain the integrity of digital evidence in critical applications.

Adversarial Patches Evade Facial Recognition Systems

The researchers compromised facial biometric systems using an end-to-end pipeline for generating and refining adversarial patches. The team generated adversarial noise with the Fast Gradient Sign Method (FGSM), targeting identity classifiers, and then made it less perceptible using a diffusion model together with Gaussian smoothing and adaptive brightness correction. Experiments showed that applying these refined patches to facial images evades recognition systems while maintaining natural visual characteristics. The pipeline’s efficacy was validated on facial images with a standard input size of 224×224 pixels.
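The smoothing and brightness steps might look something like the sketch below. The article does not define "adaptive brightness correction", so this example assumes it means rescaling the patch so that its mean brightness matches a target value (for instance, the mean of the surrounding skin region). The separable Gaussian blur, the edge clamping, and all names and values are likewise assumptions made for illustration.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_rows(image, kernel):
    """Convolve each row with the kernel, clamping indices at the edges."""
    radius = len(kernel) // 2
    width = len(image[0])
    result = []
    for row in image:
        new_row = []
        for c in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                idx = min(width - 1, max(0, c + k - radius))
                acc += w * row[idx]
            new_row.append(acc)
        result.append(new_row)
    return result


def transpose(image):
    return [list(col) for col in zip(*image)]


def gaussian_smooth(image, radius=1, sigma=1.0):
    """Separable 2D Gaussian blur: smooth rows, then columns."""
    kernel = gaussian_kernel(radius, sigma)
    horizontal = smooth_rows(image, kernel)
    return transpose(smooth_rows(transpose(horizontal), kernel))


def match_brightness(patch, target_mean):
    """Scale the patch so its mean equals target_mean (assumed meaning of
    'adaptive brightness correction'), clamping values to [0, 1]."""
    flat = [v for row in patch for v in row]
    current = sum(flat) / len(flat)
    gain = target_mean / current if current > 0 else 1.0
    return [[min(1.0, max(0.0, v * gain)) for v in row] for row in patch]


# Hypothetical high-contrast 4x4 noise patch (checkerboard of 0.2 and 0.8).
noisy_patch = [[0.8 if (r + c) % 2 else 0.2 for c in range(4)] for r in range(4)]

smoothed = gaussian_smooth(noisy_patch)
corrected = match_brightness(smoothed, target_mean=0.4)
```

Smoothing narrows the gap between the brightest and darkest pixels, and the gain step then shifts the patch toward the brightness of its surroundings; together these are one plausible way to make a perturbation less conspicuous.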

The study further details the use of a Vision Transformer (ViT)-GPT2 model, which generates semantic descriptions of a person’s identity from adversarial images. This capability supports forensic interpretation and documentation of identity evasion attacks, providing crucial insights into the mechanisms of these vulnerabilities. Measurements confirm that the pipeline accurately evaluates changes in identity classification and captioning results, alongside vulnerabilities in facial identity verification and expression recognition under adversarial conditions. Data shows the successful detection and analysis of generated adversaries, achieving a Structural Similarity Index (SSIM) of 0.95, indicating a high degree of perceptual accuracy in identifying manipulated images.
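For readers unfamiliar with SSIM, the sketch below computes it over a whole image at once using the standard formula with the usual stabilizing constants `(0.01 * L)^2` and `(0.03 * L)^2`, where `L` is the dynamic range. Library implementations normally average SSIM over local sliding windows instead, and the article does not state which variant the authors used, so treat this single-window version and its names as a simplifying assumption.

```python
def mean(values):
    return sum(values) / len(values)


def global_ssim(x, y, dynamic_range=255.0):
    """Single-window SSIM between two equal-length lists of pixel values.

    SSIM = ((2*mu_x*mu_y + c1) * (2*cov_xy + c2))
           / ((mu_x^2 + mu_y^2 + c1) * (var_x + var_y + c2))
    """
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and of equal length")
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    mu_x, mu_y = mean(x), mean(y)
    var_x = mean([(a - mu_x) ** 2 for a in x])
    var_y = mean([(b - mu_y) ** 2 for b in y])
    cov_xy = mean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical flattened grayscale images.
clean = [10, 50, 90, 130, 170, 210, 250, 30]
patched = [10, 50, 255, 255, 170, 210, 250, 30]  # two pixels overwritten

score_identical = global_ssim(clean, clean)
score_patched = global_ssim(clean, patched)
```

Identical images score 1, and any difference lowers the score, so a value near 1 means two images are structurally almost indistinguishable.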

Researchers measured the impact of adversarial patches on deep learning models within an image classification, captioning, and identity verification pipeline. The work demonstrates that adversarial perturbations, even on images processed to 112×112 or 160×160 pixels for identity verification models like ArcFace and FaceNet, significantly degrade recognition accuracy. Tests indicate that forensic analysis techniques, such as spectral analysis using the Fast Fourier Transform (FFT) and depth estimation with MiDaS models on 256×256 pixel images, can detect geometric inconsistencies introduced by adversarial attacks. The diffusion model employed in this work uses a forward process that adds Gaussian noise over time steps defined by a variance schedule, and a reverse process that generates images from noise.
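The forward process described here can be sketched with the closed-form sampling used in standard denoising diffusion models, `x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise`. The article does not give the authors' schedule, so the linear variance schedule from 1e-4 to 0.02 over 1000 steps (a common default in the diffusion literature) and all function names are assumptions.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Variance schedule: beta_t rises linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars = []
    product = 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample x_t directly from x_0 by mixing signal and Gaussian noise."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal_scale * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
x0 = [0.5, -0.25, 0.75, 0.0]  # hypothetical normalized pixel values
x_mid = forward_diffuse(x0, 500, alpha_bars, rng)
x_end = forward_diffuse(x0, 999, alpha_bars, rng)
```

Because `alpha_bar_t` shrinks toward zero as `t` grows, late time steps are almost pure noise; the reverse process is trained to undo this corruption step by step, which is what lets it both refine a patch and, in the purification setting, strip one out.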

Integrating adversarial objectives into this diffusion process defines a total loss function, enabling the generation of highly effective adversarial patches that seamlessly blend into facial features. Adversarial purification, achieved by reconstructing images from Gaussian noise, effectively maps adversarial images back to the natural data manifold, preserving key features while eliminating distortions. This dual role of diffusion models represents a significant technical accomplishment in both attack and defense strategies within the field of facial biometric security.

👉 More information

🗞 Diffusion-Driven Deceptive Patches: Adversarial Manipulation and Forensic Detection in Facial Identity Verification

🧠 ArXiv: https://arxiv.org/abs/2601.09806