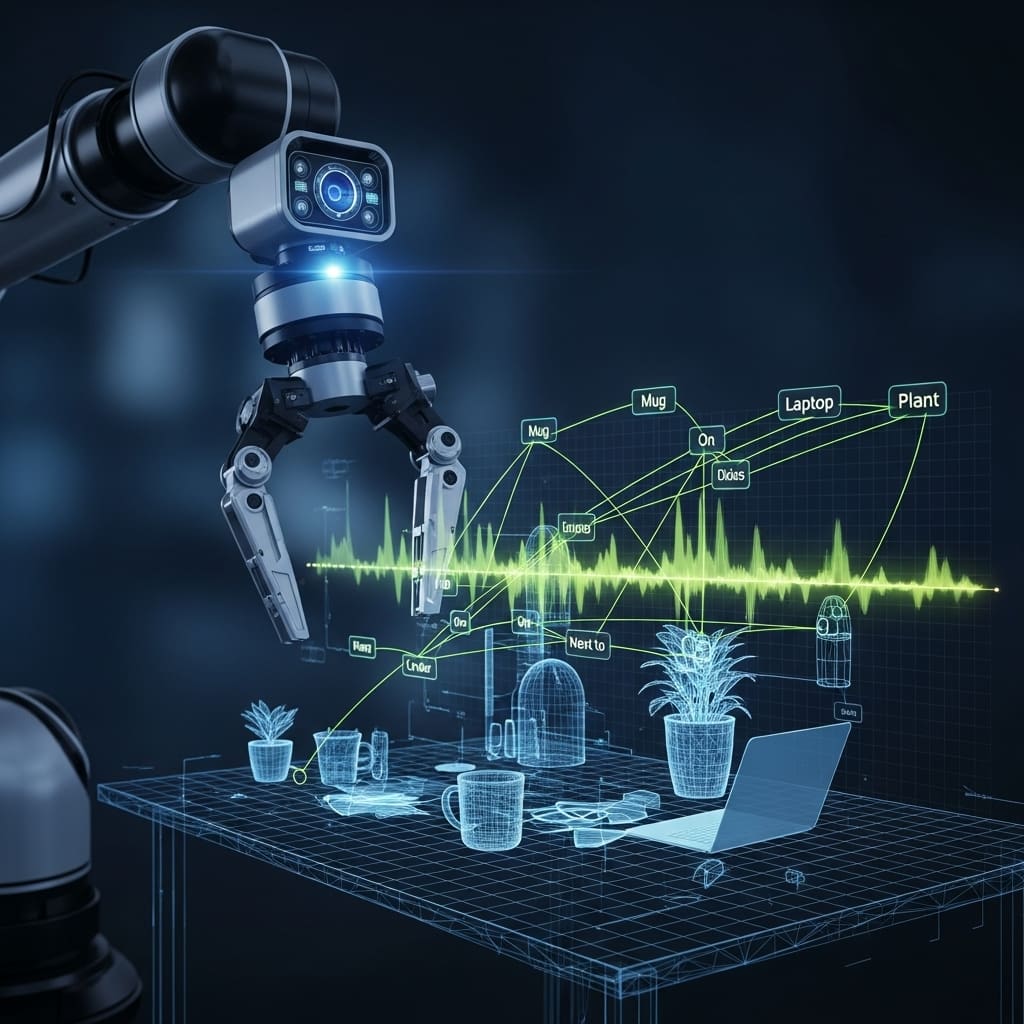

Scientists are tackling the limitations of current robotic object recognition, which frequently struggles with semantic consistency despite advances in deep learning. Marian Renz, Martin Günther, and Felix Igelbrink, from the German Research Center for Artificial Intelligence, alongside Oscar Lima and Martin Atzmueller from Osnabrück University, present a novel approach in their ExPrIS project, detailed in a new report. They describe how incorporating knowledge-level expectations, drawing on both past observations and external semantic resources like ConceptNet, can improve object interpretation from sensor data. The work moves beyond simple, frame-by-frame analysis: it builds an incremental 3D Semantic Scene Graph (3DSSG) and employs a heterogeneous Graph Neural Network (GNN) to create a robust and consistent understanding of dynamic environments, paving the way for more reliable robotic systems.

This approach differs from conventional methods that rely solely on the power of deep neural networks, instead combining data-driven learning with knowledge-driven insights. The team conducted a comprehensive survey of existing online knowledge integration techniques for 3D semantic mapping, identifying a clear trend towards symbolic representations and establishing the 3DSSG as a particularly flexible paradigm. This foundational work informed the design of a dynamic 3DSSG architecture, capable of evolving from sensor data in a bottom-up fashion and supporting the inference of composite semantic concepts.

Furthermore, the research introduces a modular and extensible layer design for the 3DSSG, separating spatial and semantic relations to enhance adaptability to new domains and facilitate the integration of dynamically inferred higher-order concepts. Unlike rigid hierarchical structures used in previous systems, this design allows the graph to evolve beyond static categories, incorporating domain-specific knowledge and leveraging implicit information sources such as CLIP features. Experiments demonstrate the potential of this approach to create more densely connected subgraphs for complex scenes, overcoming limitations of distance-based heuristics used in prior work. This work is complemented by the LIEREx project, which focuses on incorporating vision-language models for open-vocabulary queries and language-driven interaction with the environment, both projects sharing the 3DSSG as their foundational representation. The ExPrIS project’s architecture, detailed in the report, outlines a planned integration onto a mobile robotic platform, promising advancements in autonomous navigation and interaction. Crucially, the 3DSSG allows for edges connecting nodes across different layers, enabling the representation of meta-relations such as object memberships.
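The layered design with cross-layer edges described above can be pictured with a small data structure. The following is a minimal sketch, not the project's implementation: the class `LayeredSceneGraph`, the layer names `object` and `concept`, and the relation names `on` and `member_of` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredSceneGraph:
    """Toy scene graph: every node belongs to a layer, edges may cross layers."""
    # node id -> (layer name, attribute dict)
    nodes: dict = field(default_factory=dict)
    # (source id, relation, target id) triples
    edges: list = field(default_factory=list)

    def add_node(self, node_id, layer, **attrs):
        self.nodes[node_id] = (layer, attrs)

    def add_edge(self, src, relation, dst):
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must exist before adding an edge")
        self.edges.append((src, relation, dst))

    def cross_layer_edges(self):
        """Return the edges whose endpoints live in different layers."""
        return [(s, r, d) for (s, r, d) in self.edges
                if self.nodes[s][0] != self.nodes[d][0]]


graph = LayeredSceneGraph()
graph.add_node("cup_1", layer="object", label="cup")
graph.add_node("table_1", layer="object", label="table")
graph.add_node("breakfast_setup", layer="concept", label="table setting")
graph.add_edge("cup_1", "on", "table_1")                 # spatial relation, same layer
graph.add_edge("cup_1", "member_of", "breakfast_setup")  # meta-relation across layers
```

Keeping the layer as a node attribute rather than a fixed hierarchy level is one way to let new layers be added later without changing the edge representation.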

The survey of online knowledge integration techniques for 3D semantic mapping directly informed the 3DSSG's design. It revealed a growing trend towards structured, symbolic environment representations, which supports the choice of the 3DSSG as a flexible paradigm. Building on this insight, the team systematically investigates how knowledge-level expectations can be integrated within the 3DSSG framework. ExPrIS remains closely linked to the LIEREx project, which extends the approach with vision-language models for open-vocabulary queries and language-driven interaction.

This approach combines knowledge-driven and data-driven methods, leveraging both persistent object representations within the 3DSSG and powerful object detection from sensor data. The 3DSSG is the foundational representation shared between both projects, which ensures interoperability and facilitates the integration of symbolic knowledge with multimodal open-set perception. The global 3DSSG, accumulated from earlier observations, serves as a contextual expectation: segments from the current frame are matched to previously observed geometric instances, and these matches assist subsequent local graph predictions.
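To make the matching of current-frame segments to previously observed instances more concrete, here is a minimal sketch. It assumes a simple nearest-centroid rule with a distance threshold, which is an illustrative stand-in and not necessarily the matching criterion used in ExPrIS; the function `match_segments`, the parameter `max_dist`, and all node identifiers are hypothetical.

```python
import math


def match_segments(local_centroids, global_centroids, max_dist=0.25):
    """Pair each local segment with its nearest global node within max_dist.

    Both arguments map an id to an (x, y, z) centroid. The result is a list of
    (local_id, global_id) pairs; segments without a close global node are skipped.
    Note: this greedy rule does not enforce one-to-one matches.
    """
    matches = []
    for local_id, local_c in local_centroids.items():
        best_id, best_dist = None, max_dist
        for global_id, global_c in global_centroids.items():
            dist = math.dist(local_c, global_c)
            if dist <= best_dist:
                best_id, best_dist = global_id, dist
        if best_id is not None:
            matches.append((local_id, best_id))
    return matches


# Toy data: one segment lies close to a known global node, one is far from everything.
global_nodes = {"g_cup": (1.0, 0.5, 0.8), "g_chair": (3.0, 1.0, 0.4)}
local_segments = {"seg_0": (1.05, 0.5, 0.8), "seg_1": (5.0, 5.0, 0.0)}
match_edges = match_segments(local_segments, global_nodes)
```

Each returned pair would become an edge between a local and a global node, so that a graph network can pass information along it.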

The system integrates this contextual information by connecting matching nodes with edges, so that information can flow from the global to the local graph through the GNN's message passing. The team also connects global nodes to a static knowledge graph, ConceptNet, bringing in external prior knowledge about the environment as a second type of expectation. To manage ConceptNet's size and noise, subgraphs are extracted with a breadth-first search that is restricted to selected node classes and relationship types and limited to a specified number of hops, so that only relevant information feeds into 3DSSG generation. The architecture employs a heterogeneous GNN, specifically GraphSAGE or HGT, capable of handling multiple node and edge types; node features combine PointNet-based geometric feature vectors with hand-crafted descriptors. The training objective is a composite loss over node and edge predictions in the local graph that accounts for class imbalance. Restricting the loss to the local graph means the global graph functions as a bias rather than as direct supervision. During inference, global nodes can be enriched with prior-based features such as previously predicted class labels or CLIP embeddings, which provides a dynamic biasing mechanism.
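The hop-limited, relation-filtered breadth-first search described above can be sketched as follows. This is a simplified illustration under stated assumptions: the knowledge graph is given as in-memory `(head, relation, tail)` triples, edges are traversed in both directions, and the function `extract_subgraph` and the toy triples are hypothetical (the relation names mirror ConceptNet's naming, but the data is made up).

```python
from collections import deque


def extract_subgraph(triples, seeds, allowed_relations, max_hops):
    """Collect nodes and triples reachable from the seeds within max_hops,
    following only edges whose relation is in allowed_relations."""
    # Index every allowed triple under both of its endpoints.
    neighbours = {}
    for triple in triples:
        head, relation, tail = triple
        if relation not in allowed_relations:
            continue
        neighbours.setdefault(head, []).append((tail, triple))
        neighbours.setdefault(tail, []).append((head, triple))

    visited = set(seeds)
    queue = deque((seed, 0) for seed in seeds)
    kept = set()
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for other, triple in neighbours.get(node, []):
            kept.add(triple)
            if other not in visited:
                visited.add(other)
                queue.append((other, depth + 1))
    return visited, kept


# Toy triples, not actual ConceptNet content.
toy_triples = [
    ("cup", "AtLocation", "kitchen"),
    ("cup", "UsedFor", "drinking"),
    ("kitchen", "AtLocation", "house"),
    ("cup", "RelatedTo", "trophy"),
    ("house", "AtLocation", "street"),
]
nodes, edges = extract_subgraph(
    toy_triples, seeds=["cup"], allowed_relations={"AtLocation", "UsedFor"}, max_hops=2
)
```

In this toy run, `trophy` is dropped by the relation filter and `street` by the hop limit, which shows how the two parameters together bound the size of the extracted subgraph.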

3DSSG and GNN for expectation-biased recognition

The researchers are evaluating their system through a “tidy-up” scenario, where a robot explores a lab environment, builds a semantic map, and then identifies and relocates misplaced objects. This task is designed to rigorously test the system’s ability to maintain semantic consistency and resolve ambiguities by leveraging its memory of past observations, a key benefit of the global graph structure employed. Initial hypotheses suggest the expectation-biased approach will outperform context-free models, demonstrating improved stability in object interpretation even with incomplete or ambiguous perceptual data. Acknowledging the current stage of development, the authors note limitations in the depth of external knowledge integration, currently utilising external knowledge graphs primarily as priors. Future work will focus on incorporating these knowledge graphs more comprehensively, enabling complex symbolic knowledge processing for large-scale semantic mapping and Explainable AI (XAI). Ongoing evaluation of the fully integrated embodied system, including open-vocabulary query capabilities from a related project, will be detailed in a forthcoming publication, promising further insights into the system’s capabilities and potential.

👉 More information

🗞 ExPrIS: Knowledge-Level Expectations as Priors for Object Interpretation from Sensor Data

🧠 ArXiv: https://arxiv.org/abs/2601.15025