On April 27, 2025, researchers introduced Relative Contrastive Learning (RCL), a method for improving sequential recommendation systems. By adding similarity-based positive samples to the usual same-target positives, RCL addresses limitations of existing contrastive methods and, according to the authors, improves on state-of-the-art techniques by 4.88% across six datasets.

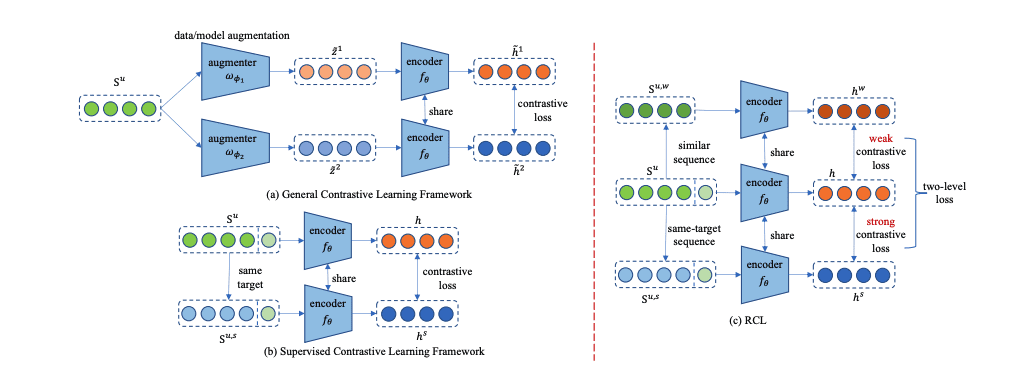

The study targets two weaknesses of contrastive learning (CL) in sequential recommendation (SR). Augmentation-based methods can alter the user intent that a sequence expresses. Supervised contrastive learning (SCL) methods, which treat sequences sharing the same next-item target as positives, often find too few such sequences. The proposed Relative Contrastive Learning (RCL) framework adds a dual-tiered positive sample selection module: sequences with the same target serve as strong positives, and similar sequences serve as weak positives. A weighted relative contrastive loss then encourages each sequence to lie closer to its strong positives than to its weak positives. Applied to mainstream SR models, RCL achieves a 4.88% improvement over state-of-the-art methods across six datasets.
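The selection-plus-loss idea can be illustrated in a few lines of Python. This is a minimal sketch under stated assumptions, not the paper's implementation: sequences are already encoded as vectors, similarity is cosine similarity, weak positives are the `k` most similar sequences whose target differs, and the loss is an assumed two-term InfoNCE-style objective that ranks strong positives above weak positives and weak positives above negatives. The names `select_positives`, `relative_contrastive_loss`, `tau`, and `weak_weight` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def select_positives(
    idx: int, embeddings: List[Vector], targets: List[int], k: int = 1
) -> Tuple[List[int], List[int]]:
    """Assumed dual-tiered selection: strong = same next-item target,
    weak = the k most similar sequences whose target differs."""
    others = [j for j in range(len(targets)) if j != idx]
    strong = [j for j in others if targets[j] == targets[idx]]
    candidates = [j for j in others if targets[j] != targets[idx]]
    candidates.sort(key=lambda j: cosine(embeddings[idx], embeddings[j]), reverse=True)
    return strong, candidates[:k]


def relative_contrastive_loss(
    anchor: Vector,
    strong: Vector,
    weak: Vector,
    negatives: List[Vector],
    tau: float = 0.1,
    weak_weight: float = 0.5,
) -> float:
    """Assumed two-term loss encoding the ordering strong > weak > negatives."""
    def score(v: Vector) -> float:
        return math.exp(cosine(anchor, v) / tau)

    s, w = score(strong), score(weak)
    neg = sum(score(n) for n in negatives)
    # Term 1: the strong positive must beat the weak positive and all negatives.
    strong_term = -math.log(s / (s + w + neg))
    # Term 2: the weak positive must still beat the negatives.
    weak_term = -math.log(w / (w + neg))
    return strong_term + weak_weight * weak_term
```

In a batched setting, one would average this loss over every anchor and its selected pairs; the weighting between the two terms (`weak_weight`) is where a "weighted" relative loss would let weak positives pull less strongly than strong ones.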

Recommendation systems shape digital experiences by offering personalized suggestions. Traditional systems relied on static models that struggle to adapt as user preferences change, and advances in deep learning have substantially improved on them.

One such framework uses elastic adaptation to shift its focus as user behavior changes. Dynamic attention mechanisms let the model weight different parts of a user's data as needed, and modeling temporal patterns captures how the timing of interactions influences user actions, which leads to more accurate predictions.
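To make "dynamic attention with temporal patterns" concrete, here is a minimal Python sketch of one common formulation, not the framework's own mechanism: attention over a user's history where each item's score combines its relevance to a query vector with a linear penalty on how long ago it was consumed. The function `time_aware_attention` and its `decay` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def time_aware_attention(
    query: Sequence[float],
    item_embs: List[Sequence[float]],
    timestamps: Sequence[float],
    now: float,
    decay: float = 0.1,
) -> Tuple[List[float], List[float]]:
    """Hypothetical time-decayed attention: returns (weights, pooled vector)."""
    scores = []
    for emb, t in zip(item_embs, timestamps):
        relevance = sum(q * e for q, e in zip(query, emb))  # dot-product relevance
        age = now - t                                        # older items are penalized
        scores.append(relevance - decay * age)
    weights = softmax(scores)
    # Weighted sum of item embeddings gives the user's current-interest vector.
    pooled = [sum(w * emb[d] for w, emb in zip(weights, item_embs)) for d in range(len(query))]
    return weights, pooled
```

With two identical items, the one consumed more recently receives the larger weight, so the pooled representation tilts toward recent behavior; a larger `decay` makes that tilt stronger.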

The framework also tackles feature staleness, where outdated information makes the model less relevant. It includes methods to detect and mitigate stale features so that recommendations stay current, a capability that matters most in fast-moving domains such as e-commerce and media streaming.
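The source does not say how stale features are detected, so the following is only a sketch of one simple approach under stated assumptions: flag any feature whose last update is older than a fixed `max_age`, and down-weight every feature exponentially according to its age with a configurable `half_life`. All names here (`staleness_weight`, `find_stale_features`, `reweight`) are hypothetical.

```python
from typing import Dict, List


def staleness_weight(age: float, half_life: float) -> float:
    """Exponential decay: a feature loses half its weight every half_life time units."""
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    return 0.5 ** (max(age, 0.0) / half_life)


def find_stale_features(last_updated: Dict[str, float], now: float, max_age: float) -> List[str]:
    """Detection step (assumed): names of features not refreshed within max_age, sorted."""
    return sorted(name for name, ts in last_updated.items() if now - ts > max_age)


def reweight(
    features: Dict[str, float], last_updated: Dict[str, float], now: float, half_life: float
) -> Dict[str, float]:
    """Mitigation step (assumed): shrink each feature value by its staleness weight."""
    return {
        name: value * staleness_weight(now - last_updated[name], half_life)
        for name, value in features.items()
    }
```

For example, with `half_life=20`, a feature last refreshed 20 time units ago keeps half its value, while a feature refreshed just now keeps all of it.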

Recent research has also contributed to this field. Wang and Shen (2023) focused on incremental learning, which updates models with new data without losing what was learned from earlier data, addressing the problem of catastrophic forgetting. Zhou et al. (2022) introduced filter-enhanced MLPs to process sequential data more efficiently.
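The filter-enhanced MLP idea, as commonly described, replaces self-attention with a filter applied to the item sequence in the frequency domain: transform along the sequence axis, scale each frequency, and transform back. The sketch below shows only that core operation in pure Python, with simplifying assumptions: a naive O(n^2) discrete Fourier transform instead of an FFT library, and real-valued per-frequency gains (mirrored so the output stays real) instead of a learned complex filter. It leaves out the other layers of a full block. The names `filter_layer` and `gains` are hypothetical.

```python
import cmath
from typing import List, Sequence


def dft(x: Sequence[float]) -> List[complex]:
    """Naive discrete Fourier transform of a real sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(spectrum: Sequence[complex]) -> List[complex]:
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def filter_layer(seq: List[List[float]], gains: Sequence[float]) -> List[List[float]]:
    """seq: n item vectors of dimension d. gains: n // 2 + 1 real gains, one per frequency bin.
    Each embedding dimension is filtered independently along the sequence axis."""
    n, d = len(seq), len(seq[0])
    if len(gains) != n // 2 + 1:
        raise ValueError("expected n // 2 + 1 gains")
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        spectrum = dft([seq[t][j] for t in range(n)])
        # Mirror gains so bins k and n - k share a gain; this keeps the result real.
        filtered = [spectrum[k] * gains[min(k, n - k)] for k in range(n)]
        for t, value in enumerate(idft(filtered)):
            out[t][j] = value.real
    return out
```

All-ones gains return the input unchanged, while keeping only the zero-frequency gain replaces every position with the sequence mean, which shows how the filter acts as a tunable smoother over the interaction history.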

The framework's contribution is flexibility: it adapts to changing user behavior, handles multiple user interests, and addresses feature staleness, which yields more accurate and personalized recommendations across digital platforms. In practice, such a system must process data in real time without consuming excessive resources. Because recommendation systems can strongly influence user behavior and preferences, these models also require careful monitoring for fairness and transparency.

In summary, this adaptive approach addresses critical challenges in recommendation systems and promises better user experiences through more responsive models.

👉 More information

🗞 Relative Contrastive Learning for Sequential Recommendation with Similarity-based Positive Pair Selection

🧠 DOI: https://doi.org/10.48550/arXiv.2504.19178