Growing demand for detailed environmental monitoring is driving the need for artificial intelligence systems that can interpret vast amounts of satellite imagery, yet scaling these systems presents unique challenges. Charith Wickrema, Eliza Mace, Hunter Brown, and colleagues, working with MITRE, investigate how to train large-scale image analysis models, known as foundation models, on unprecedented volumes of high-resolution Earth observation data. Their research demonstrates that even for models with billions of parameters, performance depends more heavily on the quantity of training data than on model size, a finding that could reshape strategies for developing future remote sensing applications. By training models on over a quadrillion pixels of satellite data, the team identifies critical factors for optimizing data collection, computational resources, and training schedules, with the aim of enabling more accurate and insightful Earth observation technologies.

Scaling Vision Transformers for Remote Sensing

This work details the scaling of a vision transformer for understanding remote sensing images. The team demonstrates that, mirroring progress in natural language processing and computer vision, increasing both model and dataset size improves performance in remote sensing tasks. Achieving these benefits, however, requires careful attention to training stability and hyperparameter tuning. The researchers present a pragmatic approach to hyperparameter optimization, quickly identifying stable configurations, and emphasize the importance of data diversity. The foundation model utilizes a Vision Transformer architecture, building on the success of transformers in other fields.

The study employs a Warmup-Stable-Decay (WSD) learning rate schedule and finds that conservative learning rates are essential for stable training, particularly during the initial phases. Scaling-law experiments measure how performance changes as model size, dataset size, and compute increase. Hyperparameter tuning follows a “fail fast” strategy: candidate learning rates are evaluated during a short warmup phase, and only the stable ones proceed. Results show that increasing model size and dataset size consistently improves performance. The team also stresses that diversifying training data across geography, sensor type, season, and context has a greater impact than simply increasing data volume.
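To make the shape of a Warmup-Stable-Decay schedule concrete, here is a minimal sketch. The linear warmup, the linear decay, and every parameter name and default are illustrative assumptions; the article does not state which ramp shapes or step counts the authors used.

```python
def wsd_lr(step, total_steps, peak_lr, warmup_steps, decay_steps, min_lr=0.0):
    """Learning rate at `step` under a Warmup-Stable-Decay schedule.

    Hypothetical helper: linear ramps are assumed for both the warmup
    and the decay phase; other ramp shapes are equally plausible.
    """
    decay_start = total_steps - decay_steps
    if step < warmup_steps:
        # Warmup: ramp linearly up to peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        # Stable: hold the peak learning rate.
        return peak_lr
    # Decay: ramp linearly from peak_lr down to min_lr.
    progress = min(1.0, (step - decay_start) / decay_steps)
    return peak_lr + (min_lr - peak_lr) * progress
```

A training loop would call `wsd_lr(step, ...)` once per optimizer step and write the result into the optimizer's learning rate. The long constant phase is what distinguishes this schedule from a cosine schedule, whose rate starts falling immediately after warmup.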

The fail-fast warmup sweep strategy proves effective for identifying stable learning rate configurations, and the results suggest that power law scaling relationships, similar to those observed in other fields, apply to remote sensing foundation models. The paper offers practical recommendations for building remote sensing foundation models, prioritizing stable training with conservative learning rates and the WSD schedule. Researchers should focus on diversifying training data, anticipating label noise through careful data curation, and measuring scaling pragmatically to guide data collection and compute budget allocation. The work builds upon existing large-scale remote sensing datasets and models, such as SatlasPretrain and SkySense, and connects findings to established scaling laws in natural language processing and computer vision.

Petascale Vision Transformers Reveal Data Limits

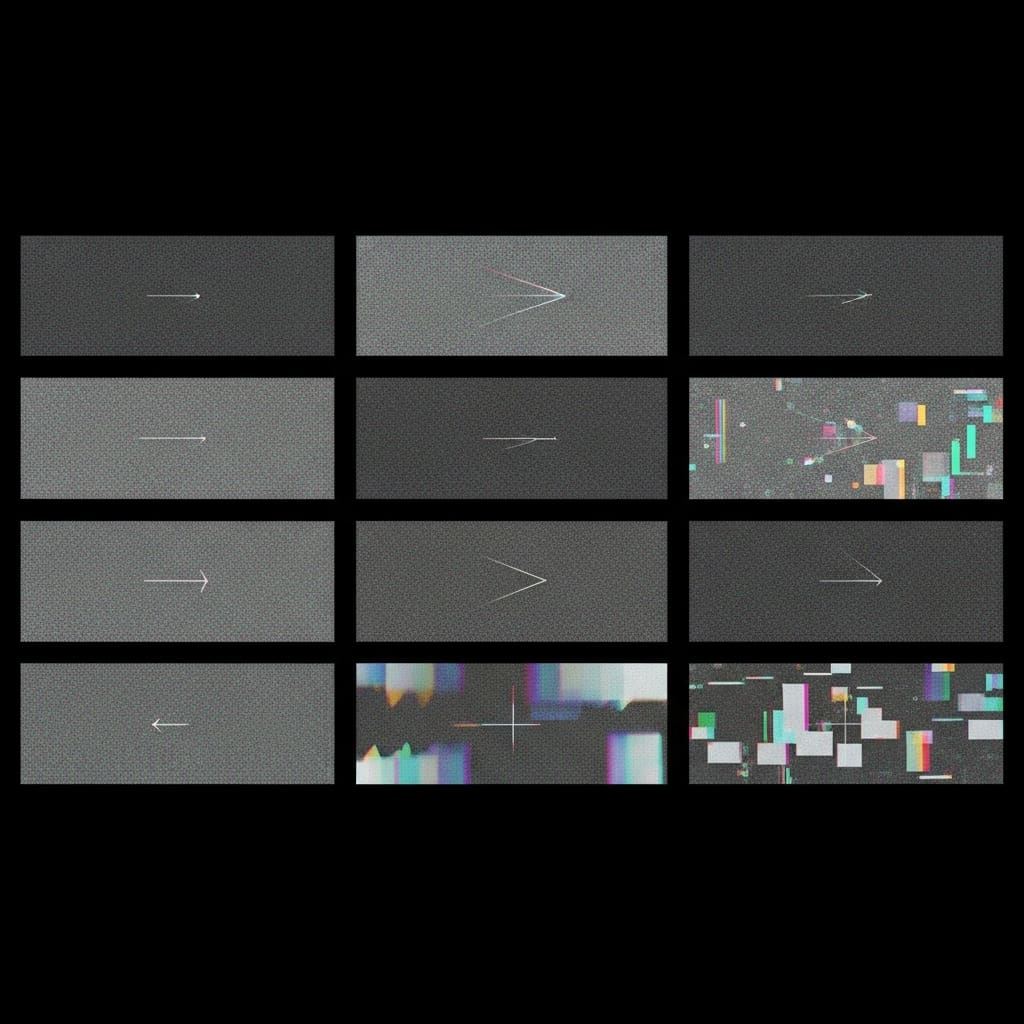

Scientists trained progressively larger vision transformer models on a quadrillion pixels of commercial satellite data, analyzing performance and failure modes at petascale. Experiments revealed that even at this scale, performance remains consistent with a data-limited regime, indicating that increasing data volume is more impactful than increasing model size alone. To optimize training, the team investigated learning rate scheduling, employing a “fail fast” protocol to quickly identify and discard unstable learning rates. Short preliminary runs identified stable learning rates, evidenced by a smoothly decreasing loss and bounded gradient statistics, before the team progressed to full Warmup-Stable-Decay training.
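The fail-fast idea can be sketched as a small sweep that aborts a candidate as soon as its warmup run looks unstable. Everything here is an assumption made for illustration: the function names, the gradient-norm bound, and the "final loss below initial loss" check stand in for whatever stability criteria the authors actually applied, and `toy_warmup` is a stand-in quadratic problem rather than a real training run.

```python
import math

def fail_fast_sweep(candidate_lrs, run_warmup, warmup_steps=50, max_grad_norm=1e3):
    """Return the learning rates whose short warmup run stays stable.

    `run_warmup(lr, steps)` is a user-supplied generator (hypothetical
    interface) yielding one (loss, grad_norm) pair per training step.
    """
    survivors = []
    for lr in candidate_lrs:
        first_loss = last_loss = None
        stable = True
        for loss, grad_norm in run_warmup(lr, warmup_steps):
            finite = math.isfinite(loss) and math.isfinite(grad_norm)
            if not finite or grad_norm > max_grad_norm:
                stable = False  # fail fast: stop spending compute here
                break
            if first_loss is None:
                first_loss = loss
            last_loss = loss
        if stable and first_loss is not None and last_loss < first_loss:
            survivors.append(lr)
    return survivors

def toy_warmup(lr, steps, curvature=10.0):
    """Stand-in for real training: gradient descent on
    0.5 * curvature * w**2 with a linear learning-rate warmup."""
    w = 1.0
    for t in range(steps):
        step_lr = lr * (t + 1) / steps
        grad = curvature * w
        yield 0.5 * curvature * w * w, abs(grad)
        w -= step_lr * grad

stable_lrs = fail_fast_sweep([0.01, 0.1, 3.0], toy_warmup)
```

On this toy problem the largest candidate diverges within about ten steps and is dropped, while the two smaller ones pass. In practice, only the surviving rates would be carried forward to the expensive full-length run.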

This approach conserved valuable computing time and focused resources on promising configurations, achieving a target validation loss during training on NVIDIA DGX nodes. Further analysis established power laws governing data-constrained training by constructing progressively larger datasets while maintaining a consistent data distribution. Training a large vision transformer backbone, the team demonstrated that model performance scales predictably with dataset size, following a power-law model. Log-log regression analysis quantified the data-scaling exponent, providing insights for future data collection and compute budgeting. Varying the vision transformer backbone size confirmed that encoder capacity also influences performance, adhering to a separate power-law relationship under fixed data and optimization settings.
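A log-log regression of this kind can be sketched in a few lines. The example assumes a pure power law, loss ≈ a · D^(−b), with no irreducible-loss term, which may be simpler than the model the authors fitted. The dataset sizes and losses below are synthetic values generated for the example, not results from the paper.

```python
import math

def fit_power_law(sizes, losses):
    """Fit loss ≈ a * size**(-b) by least squares in log-log space.

    Returns (a, b). Assumes a pure power law; an irreducible-loss
    offset would need a different (nonlinear) fit.
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope

# Synthetic, noise-free data generated with a = 2.0 and b = 0.25
# (illustrative values only, not taken from the paper).
sizes = [1e3, 1e4, 1e5, 1e6]
losses = [2.0 * s ** -0.25 for s in sizes]
a, b = fit_power_law(sizes, losses)
```

With a fitted exponent `b`, multiplying the dataset size by a factor `k` is predicted to scale the loss by `k ** -b`, which is the kind of estimate that can inform data collection and compute budgeting.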

Data Scaling Drives Remote Sensing Performance

This research establishes empirical scaling behaviors for remote sensing foundation models, demonstrating predictable decreases in model loss as dataset size increases when training at petascale data volumes. The team trained progressively larger vision transformer models using a quadrillion pixels of commercial satellite data, revealing that performance remains constrained by data availability rather than model capacity. These findings indicate that increasing computational power or model size will yield diminishing returns without a corresponding expansion in the diversity and richness of the training data. The study provides a practical framework for planning future pretraining efforts in remote sensing, allowing researchers to estimate the benefits of additional data collection and optimize compute budgets.

While acknowledging the results are specific to high-resolution imagery and vision transformer architectures, the authors suggest the observed trends likely hold across different sensors and model types. Future work should focus on extending this framework to incorporate multi-modal, multi-temporal, and cross-sensor data, aiming for globally consistent geospatial representation learning. The team also offers a practical checklist for scaling remote sensing models, emphasizing data diversity, stable training procedures, and pragmatic measurement of scaling laws.

👉 More information

🗞 Scaling Remote Sensing Foundation Models: Data Domain Tradeoffs at the Peta-Scale

🧠 ArXiv: https://arxiv.org/abs/2512.23903