Inverse rendering is a longstanding challenge because the problem is inherently ambiguous; illumination priors help constrain it. Paul Walker, Andreea Ardelean, and Bernhard Egger from Friedrich-Alexander-Universität Erlangen-Nürnberg, together with James A. D. Gardner and William A. P. Smith from the University of York and pxld.ai, and colleagues, present VENI, a novel variational autoencoder that models natural illumination directly on the sphere without relying on traditional 2D projections. The work addresses limitations of existing methods by preserving the spherical and rotation-equivariant nature of illumination environments, yielding a smoother, better-behaved latent space for realistic and controllable lighting effects.

Prior work, including Gardner et al., has shown that neural field-based light modelling can capture more detailed environments with fewer parameters than spherical Gaussians (SG) or spherical harmonics (SH), so VENI follows this approach for its decoder. Many inverse rendering works do not use illumination priors and instead reconstruct lighting directly [4, 48, 49, 57], often leading to unrealistic estimates and hindering the estimation of other parameters, such as albedo.

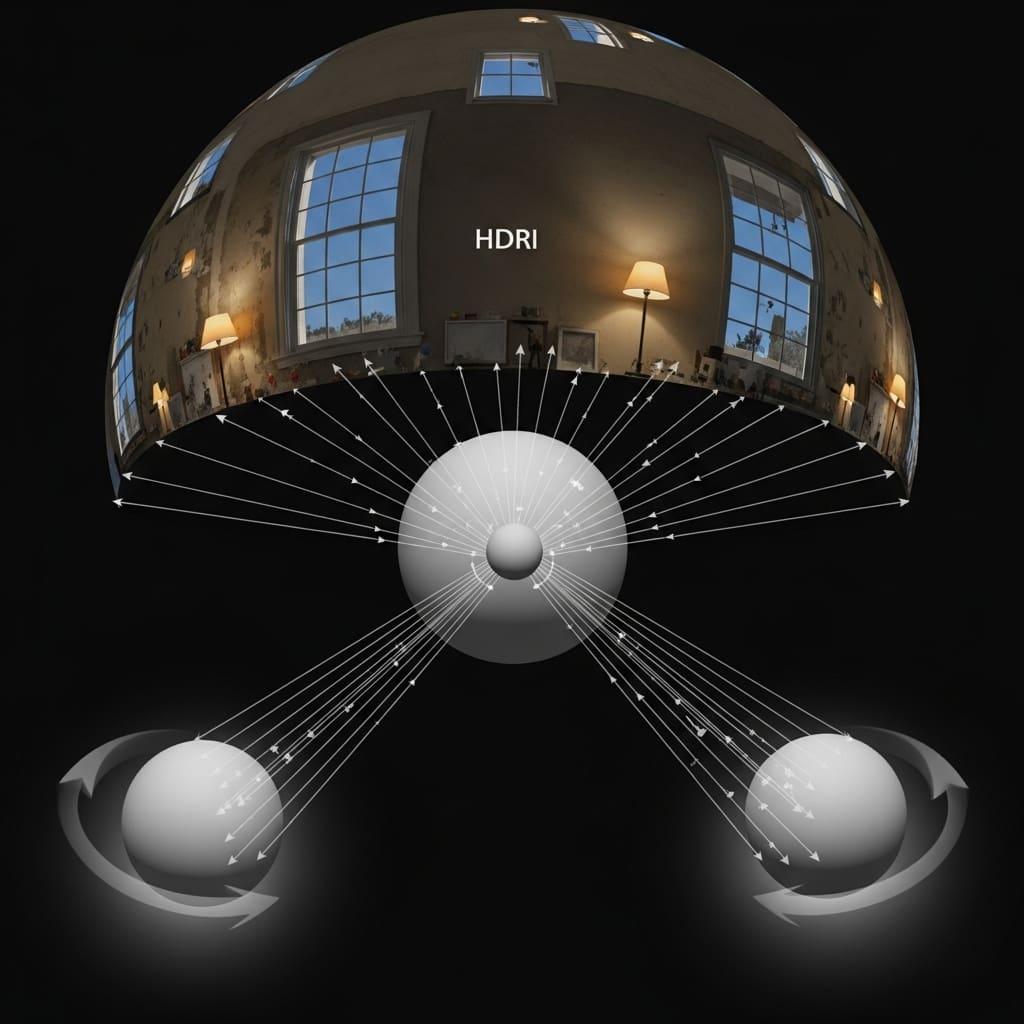

Real-world illumination exhibits regularities, so illumination priors help constrain the inverse rendering task. Recent work focuses on outpainting a 360° HDR environment map from an LDR crop to estimate lighting in a scene [10, 47, 55], introducing an implicit prior on illumination. Zhan et al. and Dastjerdi et al. inpaint a chrome ball into a scene to estimate the lighting, which can also be seen as an outpainting method. While these models reconstruct high-quality illumination environments, they require a part of the illumination environment to be visible. Earlier works proposed statistical models over SH parameters of custom datasets [2, 3, 14, 52], while more recent works rely on neural approaches [7, 20, 40]. Sztrajman et al. build an environment map convolutional autoencoder, and Boss et al. use a neural field to model pre-integrated lighting. Closest to this approach is RENI++, which proposes a natural illumination prior that is rotation-equivariant by design, using an autodecoder architecture where latent codes are initialized randomly and jointly optimized.

This random initialization results in a non-unique latent space, adversely affecting downstream performance. Lighting environments are inherently rotation-equivariant: any rotation around the up-axis results in another valid illumination environment. This property has been largely overlooked in prior work [3, ], while others try to achieve it via augmentation. Models like VN-Transformer apply the Vector Neuron framework to transformer models, achieving rotation equivariance. RENI and RENI++ also used the Vector Neurons approach to build a rotation-equivariant prior for natural illumination [19, 20].
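To make the notion of rotation equivariance concrete, the following is a minimal sketch of a Vector Neuron style linear layer in NumPy. It assumes that the z-axis is the up-axis and that features are stored as a list of 3D vectors; the names `rotation_about_up` and `vn_linear` are illustrative and not taken from the paper. Because the layer only mixes channels and never the xyz coordinates, rotating the input and then applying the layer gives the same result as applying the layer and then rotating.

```python
import numpy as np


def rotation_about_up(angle_rad):
    """3x3 rotation about the z-axis (assumed here to be the up-axis)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])


def vn_linear(weights, features):
    """Vector Neuron style linear layer.

    weights:  (c_out, c_in) mixes channels only.
    features: (c_in, 3), one 3D vector per channel.
    returns:  (c_out, 3)
    """
    return weights @ features


rng = np.random.default_rng(0)
features = rng.normal(size=(8, 3))    # 8 vector-valued channels
weights = rng.normal(size=(16, 8))    # hypothetical layer weights
R = rotation_about_up(np.deg2rad(40.0))

# Row vectors are rotated by right-multiplying with R transposed.
rotate_then_layer = vn_linear(weights, features @ R.T)
layer_then_rotate = vn_linear(weights, features) @ R.T

equivariance_error = float(np.abs(rotate_then_layer - layer_then_rotate).max())
print("max equivariance error:", equivariance_error)
```

The same identity holds for any 3D rotation, not only rotations about the up-axis, which is why a plain Vector Neuron layer is SO(3)-equivariant.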

RENI uses the full Gram matrix, achieving rotation equivariance at the cost of O(n²) complexity, while RENI++ uses the VN-Invariant layer, reducing complexity to O(n). This work follows the approach of RENI++ to obtain a rotation-equivariant decoder. 360° panoramic images present challenges, as the 2D projection (e.g., equirectangular projection, cube mapping) inevitably introduces distortion. Vision Transformers use global self-attention to solve computer vision tasks, but are not designed to handle distortion. Shen et al. propose sphere tangent-patches to remove the effects of distortion when using vision transformers with 360° equirectangular projected images, while others address it via deformable convolutions [53, 54, 58].
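The complexity gap between the Gram matrix and an invariant layer, mentioned at the start of the paragraph above, can be illustrated with a small NumPy sketch. It treats a latent code as n 3D vectors. The `linear_invariant` function is written in the spirit of the invariant layer from the Vector Neurons framework, using a single hypothetical linear map `w` to build a 3x3 frame that rotates with the input; the exact layer used in RENI++ may differ, so this is an assumption rather than a reproduction.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 6
Z = rng.normal(size=(n, 3))            # latent code as n 3D vectors
w = rng.normal(size=(3, n))            # hypothetical weights producing a frame

# Random proper rotation via QR decomposition.
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
if np.linalg.det(Q) < 0:
    Q[:, 0] *= -1.0                    # flip one axis so that det(Q) = +1


def gram_invariant(z):
    """All pairwise inner products: n * n values."""
    return z @ z.T


def linear_invariant(z, weights):
    """Project z onto a frame that rotates with z: n * 3 values."""
    frame = weights @ z                # (3, 3), equivariant to rotation
    return z @ frame.T                 # rotation cancels out


Z_rot = Z @ Q.T
gram_error = float(np.abs(gram_invariant(Z) - gram_invariant(Z_rot)).max())
linear_error = float(
    np.abs(linear_invariant(Z, w) - linear_invariant(Z_rot, w)).max()
)
gram_size = gram_invariant(Z).size
linear_size = linear_invariant(Z, w).size
print(gram_size, linear_size, gram_error, linear_error)
```

Both outputs are unchanged when the latent code is rotated, but the Gram matrix grows quadratically with n while the frame-based output grows linearly.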

Yun et al. use deformable convolutions with fixed offsets as a linear projection into the transformer encoder. Zhao et al. propose a distortion-aware transformer block using deformable convolution with learnable offsets. Yuan et al. also use learnable deformable convolutions.

VENI instead introduces an SO(2)-equivariant layer. Tests confirm that this layer reduces equivariance from SO(3) to SO(2), preserving crucial rotational properties while simplifying the model.

The results indicate that this approach overcomes a limitation of previous autodecoder models such as RENI++, which suffered from a non-unique latent space due to per-image latent code initialization. The model represents illumination environments with a unique and structured latent space, enabling more meaningful operations such as interpolation. Because the VN-Transformer encoder operates directly in the 3D domain, it eliminates the distortion handling typically required when 360° panoramic images are projected onto 2D representations. This avoids the distortions inherent in equirectangular projections and cube mappings and offers a more accurate and efficient representation of spherical data.
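As a rough illustration of what operating in the 3D domain means, the sketch below converts the pixel centers of an equirectangular image into unit direction vectors and computes the solid angle each pixel covers. The conventions are assumptions made for this example (z is up, the polar angle is measured from the +z pole), and `equirect_directions` is a hypothetical helper, not part of the VENI code. The solid-angle weights show the distortion directly: rows near the poles cover far less of the sphere than rows near the equator.

```python
import numpy as np


def equirect_directions(height, width):
    """Unit directions and per-pixel solid angles for an equirectangular grid."""
    theta = (np.arange(height) + 0.5) * np.pi / height       # polar angle
    phi = (np.arange(width) + 0.5) * 2.0 * np.pi / width     # azimuth
    theta, phi = np.meshgrid(theta, phi, indexing="ij")
    directions = np.stack(
        [np.sin(theta) * np.cos(phi),
         np.sin(theta) * np.sin(phi),
         np.cos(theta)],
        axis=-1,
    )                                                        # (H, W, 3)
    # Solid angle of a pixel: sin(theta) * d_theta * d_phi.
    solid_angle = np.sin(theta) * (np.pi / height) * (2.0 * np.pi / width)
    return directions, solid_angle


directions, solid_angle = equirect_directions(64, 128)
total_solid_angle = float(solid_angle.sum())                 # close to 4 * pi
pole_to_equator = float(solid_angle[0, 0] / solid_angle[32, 0])
print(total_solid_angle, 4.0 * np.pi, pole_to_equator)
```

An encoder that consumes the `directions` array together with the pixel colors sees points on the sphere rather than a stretched 2D grid.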

The model therefore handles 360° panoramic images without explicit distortion correction, since it operates directly on the spherical data. SO(2)-equivariance is achieved through a novel fully connected layer that combines invariant and equivariant operations within a single neuron, processing inputs of dimension d_in × (2 + c_inv), where c_inv = 4 and d_in is the number of inputs to the layer. The layer preserves rotational equivariance in the x and y dimensions while maintaining invariance in the remaining dimensions, resulting in a more realistic and physically plausible illumination model.
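The article does not spell out the layer itself, so the following is only a hedged sketch of how a fully connected layer could mix equivariant and invariant parts under the stated input shape. It assumes each input row holds two equivariant components (x, y) followed by four invariant channels; the weight names and the `tanh` gate that lets the invariant channels scale the equivariant output are illustrative choices, not the authors' design.

```python
import numpy as np


def so2_layer(x, w_eq, w_inv):
    """Hypothetical SO(2)-equivariant fully connected layer.

    x:     (d_in, 2 + c_inv), columns 0-1 are (x, y), the rest are invariant.
    w_eq:  (d_out, d_in) mixes the equivariant xy parts across inputs.
    w_inv: (d_out, d_in) mixes the invariant channels across inputs.
    """
    xy, inv = x[:, :2], x[:, 2:]
    out_inv = w_inv @ inv                                    # (d_out, c_inv)
    # A scalar gate per output neuron, built only from invariants, so that
    # scaling the xy part does not break equivariance (assumed design).
    gate = np.tanh(out_inv.sum(axis=1, keepdims=True))       # (d_out, 1)
    out_xy = (w_eq @ xy) * gate                              # (d_out, 2)
    return np.concatenate([out_xy, out_inv], axis=1)


rng = np.random.default_rng(2)
d_in, d_out, c_inv = 5, 7, 4
x = rng.normal(size=(d_in, 2 + c_inv))
w_eq = rng.normal(size=(d_out, d_in))
w_inv = rng.normal(size=(d_out, d_in))

angle = np.deg2rad(75.0)
R2 = np.array([[np.cos(angle), -np.sin(angle)],
               [np.sin(angle), np.cos(angle)]])

# Rotate only the xy part of the input about the up-axis.
x_rot = x.copy()
x_rot[:, :2] = x[:, :2] @ R2.T

y = so2_layer(x, w_eq, w_inv)
y_rot = so2_layer(x_rot, w_eq, w_inv)

eq_error = float(np.abs(y_rot[:, :2] - y[:, :2] @ R2.T).max())
inv_error = float(np.abs(y_rot[:, 2:] - y[:, 2:]).max())
print(eq_error, inv_error)
```

Rotating the input about the up-axis rotates the xy part of the output by the same angle and leaves the invariant channels untouched, which is the behavior the paragraph above describes.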

SO(2) Equivariance Improves Spherical Illumination Modelling

The core innovation lies in a Vector Neuron Vision Transformer (VN-ViT) encoder and a rotation-equivariant conditional neural field decoder, which together enable realistic high dynamic range (HDR) environment map generation. The researchers introduced an SO(2)-equivariant extension to Vector Neurons, reducing the model's equivariance from SO(3) to SO(2) and improving performance. The authors note that SO(2) equivariance is sufficient for the entire model, and that performance depends on the chosen loss functions and pre-training datasets. Future work could explore applications of this illumination prior in downstream tasks such as inverse rendering and relighting, potentially enhancing the accuracy and realism of these vision problems.

👉 More information

🗞 VENI: Variational Encoder for Natural Illumination

🧠 ArXiv: https://arxiv.org/abs/2601.14079