The increasing demand for artificial intelligence at the network edge presents a significant challenge, as powerful language models often exceed the capabilities of resource-constrained devices. Pablo Prieto and Pablo Abad, from Universidad de Cantabria, along with their colleagues, investigate how to overcome this hurdle by comparing the performance of Small Language Models (SLMs) on different hardware platforms. Their research evaluates the inference speed and energy efficiency of commercially available CPUs, GPUs, and Neural Processing Units (NPUs) when running these lightweight AI models. The team’s findings demonstrate that specialized hardware, particularly NPUs, significantly outperforms general-purpose CPUs for edge computing tasks, a crucial step towards deploying efficient and responsive AI applications directly on devices with limited power and processing capabilities. This work establishes that designs prioritizing both performance and efficiency are essential for unlocking the full potential of edge-based artificial intelligence.

Memory and energy consumption often limit the deployment of large language models (LLMs), particularly as their size and computational demands increase. These resource limitations create significant challenges in selecting the optimal hardware platform, as it remains unclear which option best balances performance and efficiency. The study highlights the importance of model compression and quantization, techniques that reduce model size and improve speed, for deployment in environments with limited resources. A key focus is understanding the sustainability implications of LLMs, including their energy consumption and environmental impact.
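To make the effect of quantization on model size concrete, the following is a minimal back-of-the-envelope sketch rather than anything taken from the study. The function name `model_footprint_gib` and the 1.5-billion-parameter example are illustrative assumptions, and the estimate covers weight storage only, ignoring the KV cache, activations, and file metadata. Real quantized formats also store per-block scale factors, so their effective bits per weight are somewhat higher than the nominal figure.

```python
def model_footprint_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GiB (weights only, no KV cache or metadata)."""
    bytes_total = n_params * bits_per_weight / 8
    return bytes_total / 2**30


if __name__ == "__main__":
    # Hypothetical 1.5B-parameter model; the size is an assumption for illustration.
    n_params = 1.5e9
    for label, bits in [("16-bit (FP16/BF16)", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>20}: {model_footprint_gib(n_params, bits):.2f} GiB")
```

Halving the bits per weight halves the storage the weights need, which is why quantization is often the deciding factor in whether a model fits on an edge device at all.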

The research reveals significant performance differences between hardware types. GPUs offer substantial acceleration for LLM inference, though they can consume considerable power. FPGAs provide a good balance of performance and flexibility, and are particularly promising for low-power applications. NPUs, designed specifically for neural network workloads, deliver high performance and energy efficiency. The study also examines the trade-offs between accuracy and performance when using different precision formats, such as BF16 and FP16, demonstrating that BF16 can sometimes offer better accuracy.
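The difference between the two 16-bit formats can be illustrated with a short sketch. This is not the study's methodology: it only shows the well-known layout trade-off, where BF16 keeps the 8-bit exponent of FP32 with a 7-bit mantissa, while FP16 uses a 5-bit exponent with a 10-bit mantissa. The helper names `to_bf16` and `to_fp16` are hypothetical, and BF16 is emulated here by truncating the FP32 encoding, whereas real hardware typically rounds.

```python
import math
import struct


def to_bf16(x: float) -> float:
    """Emulate BF16 by keeping the top 16 bits of the FP32 encoding (truncation)."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    (y,) = struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))
    return y


def to_fp16(x: float) -> float:
    """Round-trip through IEEE 754 half precision; overflow becomes infinity."""
    try:
        (y,) = struct.unpack("<e", struct.pack("<e", x))
        return y
    except OverflowError:
        return math.copysign(math.inf, x)


if __name__ == "__main__":
    for value in (1.001, 70000.0):
        print(f"{value}: fp16={to_fp16(value)}, bf16={to_bf16(value)}")
```

A large value such as 70000 overflows FP16 but stays finite in BF16, while a value close to 1 is represented more finely in FP16. Wider dynamic range is one commonly cited reason BF16 can preserve accuracy in some models, though the cause behind the study's observation may differ.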

The team benchmarked a variety of open-source LLMs, including Llama 2, Qwen, DeepSeek-R1, and Falcon. They used ggml/llama.cpp, a C/C++ implementation for efficient inference, to optimize performance, particularly on CPUs. The findings demonstrate that no single hardware and software configuration is ideal; the optimal choice depends on specific application requirements, such as latency, throughput, and power consumption.

Specialized hardware, particularly FPGAs and NPUs, offers significant potential for improving efficiency, especially for edge devices and low-power applications. Model compression techniques are crucial for reducing model size and improving performance, and reducing the energy consumption of LLMs is vital for mitigating their environmental impact. Using a suite of state-of-the-art SLMs and a standardized software stack, scientists measured both performance and energy efficiency across four commercial platforms. Results indicate that specialized NPUs substantially outperform general-purpose CPUs and GPUs for SLM inference. The team analyzed maximum achievable performance, revealing that NPUs consistently achieved the highest throughput.

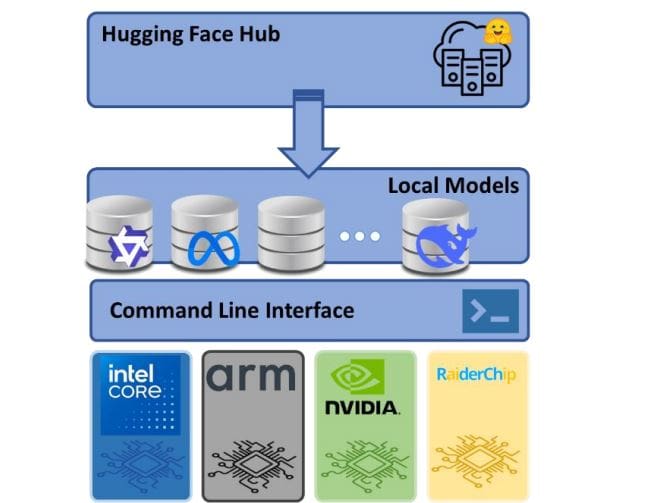

To ensure fair comparisons, bandwidth normalization was applied across architectures, highlighting the inherent advantages of the NPU design. Measurements confirm that NPUs deliver superior performance even when considering energy consumption; metrics combining performance and power, such as Energy Delay Product (EDP), consistently favored the NPU architecture. While low-power ARM processors demonstrated competitive energy usage, the NPU consistently outperformed them in combined performance and power metrics.

The study employed a standardized software stack built around the Hugging Face Hub and GGUF format, ensuring consistent model loading and execution across all platforms. Scientists utilized quantized models to further reduce memory footprint and accelerate inference, demonstrating the effectiveness of this technique for edge deployment.

The research demonstrates that NPUs consistently outperform both CPUs and GPUs in terms of raw processing speed, achieving substantial gains across a range of model sizes and precision formats. Normalizing performance by available memory bandwidth reveals that this advantage stems from more effective utilization of hardware resources, rather than simply greater bandwidth capacity. Furthermore, the team’s analysis of energy efficiency, measured as tokens generated per joule, confirms that NPUs offer a compelling combination of speed and power savings, critical for edge computing applications. While CPUs and GPUs provide viable options for running these models, the results clearly indicate that hardware specifically designed for language model workloads delivers superior performance and efficiency.
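The metrics named above can be computed from a handful of measurements per run. The sketch below is an illustration under stated assumptions, not the authors' tooling: the `Run` class and its field names are hypothetical, EDP is taken in its standard form of energy multiplied by delay, and the numbers in the usage example are placeholders rather than results from the paper.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One inference measurement; all field names are illustrative assumptions."""
    name: str
    tokens: int          # tokens generated during the run
    seconds: float       # wall-clock generation time
    avg_power_w: float   # average power draw in watts
    mem_bw_gbs: float    # peak memory bandwidth of the platform in GB/s

    @property
    def tokens_per_s(self) -> float:
        return self.tokens / self.seconds

    @property
    def energy_j(self) -> float:
        # Energy is average power multiplied by elapsed time.
        return self.avg_power_w * self.seconds

    @property
    def tokens_per_joule(self) -> float:
        return self.tokens / self.energy_j

    @property
    def edp(self) -> float:
        # Energy Delay Product: lower is better, penalizes slow and hungry runs.
        return self.energy_j * self.seconds

    @property
    def tokens_per_s_per_gbs(self) -> float:
        # Throughput normalized by available memory bandwidth.
        return self.tokens_per_s / self.mem_bw_gbs


if __name__ == "__main__":
    # Placeholder values chosen only to exercise the formulas.
    runs = [
        Run("device-a", tokens=256, seconds=16.0, avg_power_w=8.0, mem_bw_gbs=50.0),
        Run("device-b", tokens=256, seconds=8.0, avg_power_w=12.0, mem_bw_gbs=100.0),
    ]
    for r in sorted(runs, key=lambda r: r.edp):
        print(f"{r.name}: {r.tokens_per_s:.1f} tok/s, "
              f"{r.tokens_per_joule:.2f} tok/J, EDP={r.edp:.0f} J*s, "
              f"{r.tokens_per_s_per_gbs:.3f} tok/s per GB/s")
```

Dividing throughput by bandwidth separates how well a platform uses its memory system from how much bandwidth it happens to have, which is the distinction the normalization in the study is meant to expose.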

👉 More information

🗞 Edge Deployment of Small Language Models, a comprehensive comparison of CPU, GPU and NPU backends

🧠 ArXiv: https://arxiv.org/abs/2511.22334