Diffusion models are now powerful enough that a handful of images of a person can be used to generate realistic synthetic likenesses, which poses a growing threat to facial privacy. Jun Jia, Hongyi Miao, and Yingjie Zhou, along with Linhan Cao, Yanwei Jiang, and Wangqiu Zhou, address this problem with a defence framework called DLADiff. The framework uses two protective layers, one against fine-tuning and one against zero-shot customization, the two techniques that let diffusion models generate highly realistic images of a specific identity from limited source material. The first layer combines Dual-Surrogate Models with Alternating Dynamic Fine-Tuning (ADFT), while the second is a comparatively simple layer dedicated to zero-shot defence. In the authors' experiments, DLADiff outperforms existing approaches to protecting images against misuse by diffusion models. The work is a step towards mitigating the risks to personal privacy posed by increasingly sophisticated image generation technology.

Dual-Layer Defence Against Diffusion Model Attacks

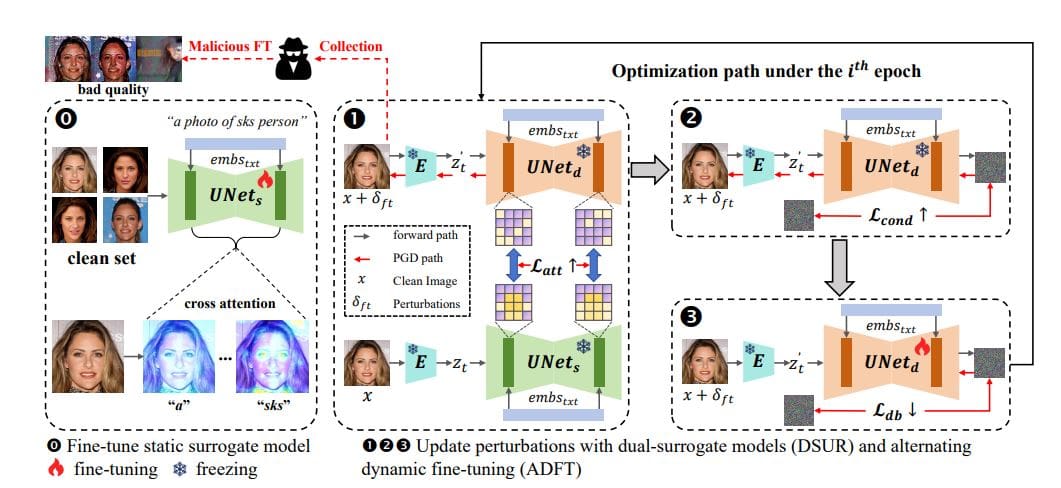

Recent advances in diffusion models enable remarkably realistic image generation from limited data: fine-tuning can require as few as three to five images, and zero-shot methods can work from a single image. This capability introduces significant privacy risks, because malicious actors can create synthetic identities using only a few images of an individual. To address this vulnerability, the researchers developed a new framework, Dual-Layer Anti-Diffusion (DLADiff), which defends against both fine-tuning and zero-shot customization of diffusion models. The first layer targets fine-tuning and combines Dual-Surrogate Models with Alternating Dynamic Fine-Tuning (ADFT). The dual-surrogate design pre-fine-tunes a static surrogate model, which enhances the protection of facial details and renders genuine identity information unlearnable during malicious fine-tuning attempts. ADFT strengthens this defence by dynamically adjusting training parameters, making it considerably harder to adapt a model to a specific identity. The second layer targets zero-shot methods and uses a weighted Projected Gradient Descent (PGD) attack so that the protection generalizes across diverse zero-shot generation techniques.
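To make the idea of a weighted PGD attack more concrete, here is a minimal, self-contained sketch. It is not the authors' implementation. The summary does not say what the weights apply to, so this example assumes they balance the losses of several surrogate identity encoders. The encoders here are toy linear maps, and every name and value (`weighted_pgd`, `eps`, `step`, the 0.7/0.3 weights) is hypothetical. The loop itself is ordinary PGD: a random start, a signed gradient ascent step, and a projection back into an L-infinity ball of radius `eps`.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def weighted_loss(delta, encoders, weights):
    """Weighted sum of squared embedding shifts caused by the perturbation."""
    total = 0.0
    for encoder, weight in zip(encoders, weights):
        # For a linear encoder E, E(x + delta) - E(x) equals E(delta).
        shift = matvec(encoder, delta)
        total += weight * sum(s * s for s in shift)
    return total


def weighted_grad(delta, encoders, weights):
    """Gradient of weighted_loss with respect to delta."""
    grad = [0.0] * len(delta)
    for encoder, weight in zip(encoders, weights):
        shift = matvec(encoder, delta)
        for j in range(len(delta)):
            # d/d(delta_j) of ||E delta||^2 is 2 * sum_i E[i][j] * shift[i].
            column_dot = sum(encoder[i][j] * shift[i] for i in range(len(encoder)))
            grad[j] += weight * 2.0 * column_dot
    return grad


def weighted_pgd(image, encoders, weights, eps=0.05, step=0.01, iters=20, seed=0):
    """Return a perturbed copy of `image` that maximizes the weighted loss."""
    rng = random.Random(seed)
    # Random start inside the eps-ball (the gradient is zero at delta = 0).
    delta = [rng.uniform(-eps, eps) for _ in image]
    for _ in range(iters):
        grad = weighted_grad(delta, encoders, weights)
        # Signed gradient ascent step.
        stepped = [d + step * ((g > 0) - (g < 0)) for d, g in zip(delta, grad)]
        # Project onto the L-infinity ball, then keep pixels in [0, 1].
        clipped = [max(-eps, min(eps, d)) for d in stepped]
        delta = [min(1.0, max(0.0, x + d)) - x for x, d in zip(image, clipped)]
    return [x + d for x, d in zip(image, delta)]


# Hypothetical 4-pixel "image" and two toy surrogate encoders (assumptions).
image = [0.2, 0.4, 0.6, 0.8]
encoders = [
    [[1.0, 0.0, 0.5, 0.0], [0.0, 1.0, 0.0, 0.5]],
    [[0.5, -1.0, 0.0, 0.0], [0.0, 0.0, 1.0, -0.5]],
]
weights = [0.7, 0.3]
protected = weighted_pgd(image, encoders, weights)
```

In a real setting the toy matrices would be replaced by the identity encoders used by zero-shot customization methods, with gradients obtained by automatic differentiation. The weighting is what stops any single encoder from dominating the perturbation, which is one plausible route to the cross-method generalization described above.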

Experiments show that DLADiff significantly outperforms existing approaches in defending against diffusion model fine-tuning, and that it also protects against zero-shot generation, a vulnerability that earlier defences did not address. By using separate protective layers tailored to each attack type, the researchers have built a comprehensive framework for safeguarding personal images against increasingly sophisticated image generation technologies. The system's performance depends heavily on the effectiveness of the surrogate models. Future work could explore adaptive perturbation strategies and investigate whether the defence transfers to other generative models. The research is a meaningful step towards mitigating the privacy risks of increasingly powerful diffusion models and points to a promising direction for further investigation.

👉 More information

🗞 DLADiff: A Dual-Layer Defense Framework against Fine-Tuning and Zero-Shot Customization of Diffusion Models

🧠 ArXiv: https://arxiv.org/abs/2511.19910