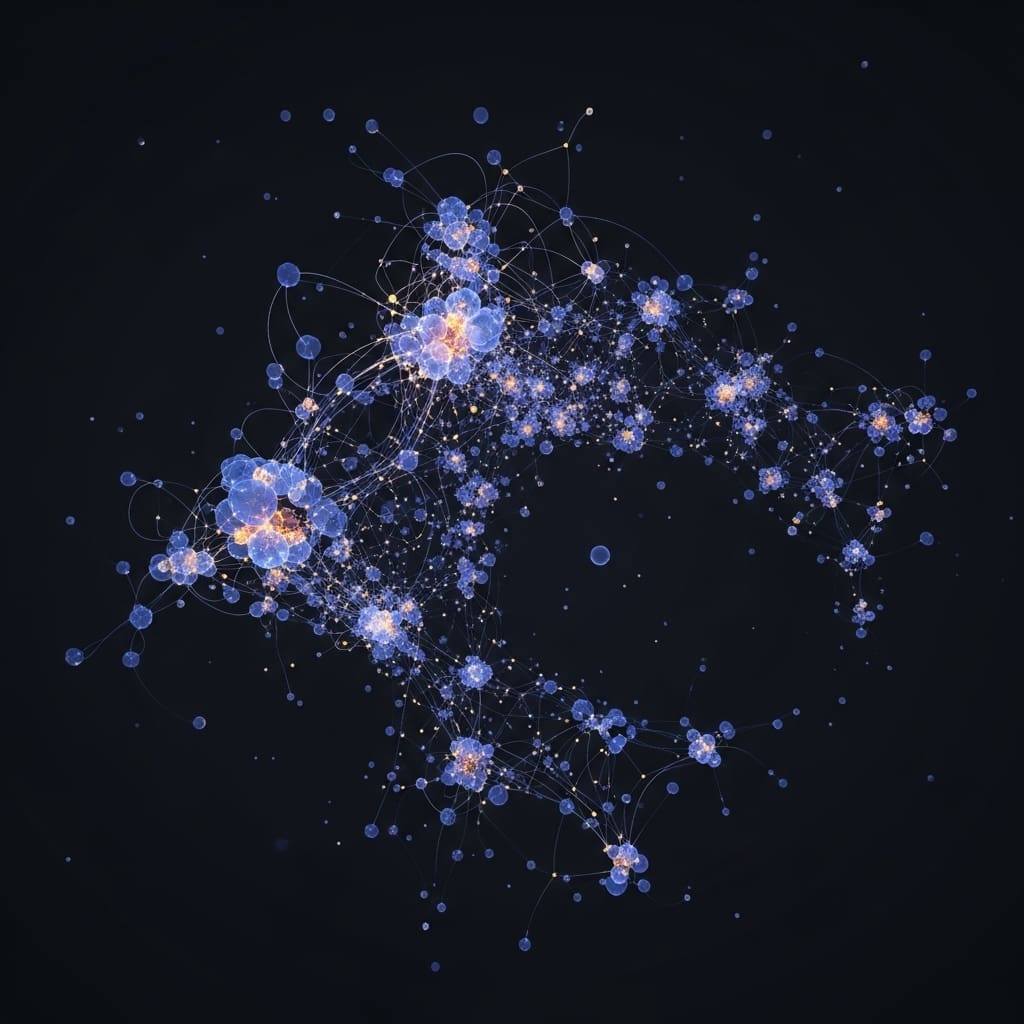

Researchers are tackling the challenge of generating truly creative images, moving beyond simply replicating existing styles or concepts. Kunpeng Song and Ahmed Elgammal, both from Rutgers University, present a framework that uses diffusion models to achieve this, defining creativity as the rarity of an image within the CLIP embedding space. Unlike methods that rely on manual adjustments, their approach calculates the probability distribution of generated images and actively steers generation towards low-probability, imaginative regions. The work is significant because it introduces a principled, automated method for fostering innovation in visual content synthesis, demonstrated through experiments that produce unique and visually compelling images, and it offers a new perspective on creativity within generative models.

Creativity via low-probability image generation

Scientists have demonstrated a novel framework for creative image generation using diffusion models. The research ties creativity directly to the inverse of an image’s probability within the CLIP embedding space: the less likely an image’s embedding, the more creative the image is considered. Unlike earlier approaches that relied on manually blending concepts or excluding specific subcategories, the team calculated the probability distribution of generated images and actively steered the process towards low-probability regions. This technique facilitates the production of rare, imaginative, and visually striking outputs.

The study introduces a specialised loss function designed to encourage exploration of less probable image embeddings, driving the model towards more creative and imaginative results. The researchers also introduce ‘pullback’ mechanisms that prevent the model from collapsing into unrealistic or out-of-domain outputs, so that high creativity is maintained alongside visual fidelity. Extensive experiments on text-to-image diffusion models confirm the effectiveness and efficiency of the framework, showcasing its capacity to produce unique and thought-provoking images. The work establishes a new perspective on creativity within generative models, offering a principled method to foster innovation in visual content synthesis.
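The interplay between a low-probability objective and a pullback term can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes embeddings are modelled by a Gaussian, and the cosine-similarity hinge penalty, the `anchor` vector, and the names `creativity_loss_and_grad`, `steer`, `min_cos`, and `pullback_weight` are all hypothetical stand-ins chosen for this example.

```python
import numpy as np


def gaussian_log_density(z, mean, cov_inv, log_det_cov):
    """Log-density of a multivariate Gaussian evaluated at embedding z."""
    diff = z - mean
    k = z.shape[0]
    return -0.5 * (diff @ cov_inv @ diff + log_det_cov + k * np.log(2.0 * np.pi))


def creativity_loss_and_grad(z, mean, cov_inv, log_det_cov, anchor,
                             min_cos=0.6, pullback_weight=10.0):
    """Hypothetical loss: log p(z) plus a hinge penalty on cosine similarity.

    Minimising log p(z) pushes z towards low-probability regions. The penalty
    (an assumed stand-in for a pullback term) is active only when
    cos(z, anchor) drops below min_cos.
    """
    log_p = gaussian_log_density(z, mean, cov_inv, log_det_cov)
    grad_log_p = -cov_inv @ (z - mean)

    z_norm = np.linalg.norm(z)
    a_norm = np.linalg.norm(anchor)
    cos = (z @ anchor) / (z_norm * a_norm)
    grad_cos = anchor / (z_norm * a_norm) - cos * z / z_norm**2

    gap = max(0.0, min_cos - cos)  # zero while z stays close to the concept
    loss = log_p + pullback_weight * gap**2
    grad = grad_log_p - 2.0 * pullback_weight * gap * grad_cos
    return loss, grad


def steer(z0, mean, cov, anchor, steps=50, lr=0.05, **loss_kwargs):
    """Plain gradient descent on the loss above, starting from z0."""
    cov_inv = np.linalg.inv(cov)
    _, log_det_cov = np.linalg.slogdet(cov)
    z = z0.copy()
    for _ in range(steps):
        _, grad = creativity_loss_and_grad(
            z, mean, cov_inv, log_det_cov, anchor, **loss_kwargs)
        z = z - lr * grad
    return z


# Toy usage with synthetic 8-dimensional "embeddings" (not real CLIP vectors).
rng = np.random.default_rng(0)
dim = 8
mean = np.zeros(dim)
mean[0] = 1.0
cov = np.eye(dim)
anchor = mean.copy()  # assumed embedding of the prompt concept
z_start = mean + 0.1 * rng.standard_normal(dim)
z_creative = steer(z_start, mean, cov, anchor)
```

The hinge form means the penalty contributes nothing while the embedding stays semantically close to the concept, so the low-probability term is free to explore until the assumed similarity threshold is crossed.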

Specifically, the team’s approach addresses a fundamental limitation of current generative AI systems: their tendency to mimic training data rather than generate truly novel content. By explicitly targeting low-probability regions, the framework bypasses the inherent bias towards typical outputs that plagues many existing models. For instance, when prompted to generate a “handbag”, the system aims to create images that semantically resemble a handbag but deviate from common, pre-existing norms. This probabilistic framework provides an effective means of pushing the boundaries of generative models and unlocking new possibilities for imaginative visual content.

Furthermore, the research highlights the importance of evaluation metrics in fostering creativity. Traditional metrics like Fréchet Inception Distance (FID) often prioritise similarity to training data, inadvertently discouraging innovation. The work proposes a shift towards evaluating generative models on their ability to produce genuinely novel outputs, rather than on how well they replicate existing styles or concepts. The team’s directional control method steers the model’s exploration trajectory while maintaining both creativity and semantic fidelity, which opens avenues for more controlled and nuanced creative expression.

Rare image generation via low probability optimisation

Scientists have developed a new framework for creative image generation, associating creativity with the inverse of an image’s probability within the CLIP embedding space. The research team calculated the probability distribution of generated images and intentionally drove generation towards low-probability regions, resulting in rare and visually captivating outputs. This approach differs from previous methods that relied on manual blending of concepts or exclusion of subcategories, offering a more principled method for fostering innovation in visual content synthesis. Experiments used the Kandinsky 2.1 latent diffusion model, a system consisting of a diffusion prior and a diffusion UNet.

Results demonstrate the effectiveness of this creativity-oriented optimisation. The team designed a specialised loss function that directly encourages exploration of less probable image embeddings, which drives the model towards more imaginative results, while pullback constraints prevent out-of-domain collapse. The method also provides directional control, allowing users to steer the model’s exploration trajectory in specific directions while maintaining both creativity and semantic fidelity. Tests show that generating building and vehicle images takes just 2 minutes, showcasing the method’s efficiency. The study quantified novelty using information theory, considering a user’s prior exposure to generated images.
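The article does not reproduce the paper's novelty formula, so the following is only a worked illustration under two assumptions: that novelty is read as the standard self-information (surprisal) of an embedding, and that embeddings follow a $k$-dimensional Gaussian with mean $\mu$ and covariance $\Sigma$. The symbols are introduced here for illustration, and the viewer's prior exposure is not modelled.

$$
I(z) = -\log p(z) = \tfrac{1}{2}(z-\mu)^\top \Sigma^{-1}(z-\mu) + \tfrac{1}{2}\log\det\Sigma + \tfrac{k}{2}\log 2\pi
$$

Under these assumptions, only the first term depends on $z$, so lowering the probability of an embedding is equivalent to increasing its Mahalanobis distance from the mean.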

Low probability drives creative image generation

Scientists have developed a new framework for creative image generation, associating creativity with the inverse of an image’s probability within the CLIP embedding space. Unlike previous methods that manually blend or exclude concepts, this approach calculates the probability distribution of generated images and directs generation towards low-probability areas to produce unusual and visually appealing results. A pullback mechanism maintains visual fidelity while creativity is enhanced. The researchers demonstrated the effectiveness of the framework through extensive text-to-image experiments, generating unique and thought-provoking images.

The work offers a new understanding of creativity in generative models, providing a systematic method for encouraging innovation in visual content creation. The authors acknowledge that their demonstrations used the Kandinsky model because of its efficient prior sampling, but suggest the method is applicable to other frameworks such as Hyper-SD. One limitation is that detailed results and evaluations had to be presented in supplementary material because of page constraints. Future work could apply the technique to other generative models and further investigate the optimisation of the probability distribution.

The authors believe this research represents a first step towards more expressive and creative artificial intelligence systems. They observed that reducing the image embeddings to 50 dimensions using Principal Component Analysis retained over 95% of the variance, simplifying the embedding space and making low-probability regions easier to identify. The choice to fit a Gaussian distribution to the image embeddings is supported by the inherent Gaussian behaviour of diffusion models like Kandinsky 2.1.
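The dimensionality reduction and Gaussian fit described above can be sketched with NumPy alone. This is a hedged illustration rather than the authors' code: the embeddings are synthetic 128-dimensional vectors with a decaying variance spectrum (not real CLIP embeddings), and the helper names `fit_pca`, `fit_gaussian`, and `log_density` are assumptions made for this example.

```python
import numpy as np


def fit_pca(embeddings, n_components):
    """PCA via SVD of the centred data.

    Returns the data mean, the top principal directions, and the fraction
    of total variance those directions retain.
    """
    mean = embeddings.mean(axis=0)
    centred = embeddings - mean
    _, singular_values, vt = np.linalg.svd(centred, full_matrices=False)
    variances = singular_values**2
    ratio = variances[:n_components].sum() / variances.sum()
    return mean, vt[:n_components], ratio


def fit_gaussian(points):
    """Maximum-likelihood style Gaussian fit: sample mean and covariance."""
    return points.mean(axis=0), np.cov(points, rowvar=False)


def log_density(points, mu, cov):
    """Gaussian log-density for each row of points."""
    k = mu.shape[0]
    diff = points - mu
    cov_inv = np.linalg.inv(cov)
    _, log_det = np.linalg.slogdet(cov)
    # Squared Mahalanobis distance of every row from mu.
    maha = np.einsum("ij,jk,ik->i", diff, cov_inv, diff)
    return -0.5 * (maha + log_det + k * np.log(2.0 * np.pi))


# Synthetic stand-in for image embeddings: variance decays across dimensions.
rng = np.random.default_rng(0)
scales = 0.9 ** np.arange(128)
embeddings = rng.standard_normal((2000, 128)) * scales

mean, components, ratio = fit_pca(embeddings, n_components=50)
reduced = (embeddings - mean) @ components.T   # shape (2000, 50)

mu, cov = fit_gaussian(reduced)
log_p = log_density(reduced, mu, cov)
rarest = np.argsort(log_p)[:5]                 # indices of least probable samples
```

Working in the reduced space keeps the covariance matrix small and well conditioned, which is one plausible reason a 50-dimensional projection makes low-probability regions easier to locate.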

👉 More information

🗞 Creative Image Generation with Diffusion Model

🧠 ArXiv: https://arxiv.org/abs/2601.22125