Misinformation poses a critical challenge in today’s digital landscape, extending beyond simple factual errors to exploit the ways people think, feel, and interact with information. Arghodeep Nandi from the Indian Institute of Technology Delhi, Megha Sundriyal from the Max Planck Institute for Security and Privacy, and Euna Mehnaz Khan, Jikai Sun, Emily Vraga, and Jaideep Srivastava from the University of Minnesota, Twin Cities, investigate this complex problem by surveying the intersection of traditional fact-checking and human psychology. Their work reveals that current misinformation detection systems often overlook crucial cognitive biases, social dynamics, and emotional responses that influence how people perceive information, thereby limiting their effectiveness. By analysing existing methods through a human-centric lens, the researchers identify key shortcomings and propose future directions, including the development of neuro-behavioural models, to create more robust and adaptive frameworks for detecting and mitigating the harmful effects of misinformation on society.

Psychological Roots of Misinformation and Fact-Checking

This document presents a comprehensive exploration of misinformation, examining the psychological factors that contribute to its spread and how these insights can inform more effective fact-checking strategies. It establishes a theoretical foundation for understanding why people believe and share inaccurate information. The analysis consists of three main parts: a detailed explanation of key psychological concepts such as cognitive dissonance and confirmation bias, a comprehensive survey of recent research on misinformation and fact-checking, and a summary of the literature that indicates which studies explicitly consider psychological phenomena in their methodology and analysis.

The document argues that understanding the psychological influences on belief and sharing is essential for developing effective countermeasures. Recent research increasingly incorporates psychological theories, although many studies still prioritize technical aspects like detection accuracy without fully considering the cognitive biases and motivations of users. Concepts like framing effects and the heuristic-systematic model are particularly relevant to how misinformation takes hold, and the importance of considering multimodal misinformation, combining text, images, and videos, is highlighted. There is a call for more integrated and interdisciplinary research that combines technical expertise with psychological insights.

The document is thorough in its coverage of both psychological concepts and recent research, and its structure is logical and easy to follow. It critically analyzes existing research and identifies gaps, offering practical implications for researchers and practitioners. While the psychological concepts are well defined, a deeper look at the mechanisms underlying these biases would strengthen the argument, as would a more detailed discussion of specific future research directions. A brief discussion of the ethical considerations of using psychological insights to counter misinformation, such as avoiding manipulation, could also be valuable. Overall, the document makes a strong case for integrating psychology into misinformation research, and it provides a solid theoretical foundation and a clear roadmap for future work.

Human Cognition Largely Absent in Misinformation Detection

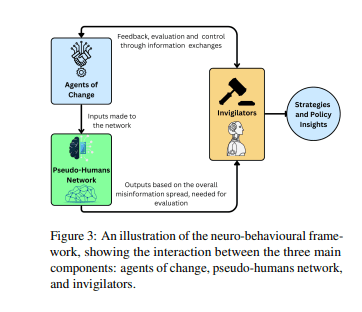

This study pioneers a human-centered approach to misinformation detection, moving beyond simple fact-checking to analyze how individuals perceive and react to information. Researchers conducted a comprehensive survey of existing misinformation detection systems, evaluating their attention to psychological and cognitive factors, specifically cognitive biases, social dynamics, and emotional responses. This evaluation revealed a significant gap in current research, with many systems prioritizing technical aspects over understanding the human element of misinformation spread. To address this gap, the study highlights the growing importance of Large Language Models (LLMs) in simulating user reactions and generating interaction graphs, enabling a more nuanced understanding of how misinformation propagates through social networks.

Researchers emphasize that future advancements will likely leverage Graph Neural Networks (GNNs) in conjunction with LLMs, allowing for the modeling of complex social and psychological dynamics. The team also notes the emergence of specialized datasets designed to explore ambiguous and persuasive content, recognizing the need for more realistic and challenging test cases. This work underscores the importance of developing systems that not only identify false information but also account for the cognitive vulnerabilities that make individuals susceptible to its influence. Researchers advocate for a shift towards modeling the complexities of human cognition and social influence, recognizing that even technically accurate information can contribute to misperceptions when presented with bias or lacking context. This approach enables the development of more robust and adaptive frameworks capable of mitigating the societal harms of misinformation by addressing the underlying psychological mechanisms that drive its spread.

Belief Change Mirrors Neural Network Learning

This work details a comprehensive exploration of misinformation detection, moving beyond simple fact-checking to incorporate principles of human psychology and social dynamics. Researchers demonstrate that current automated systems often overlook crucial aspects of how people perceive and react to information, highlighting the need for more human-centered approaches. The study reveals that changing existing beliefs is significantly more challenging for humans than reinforcing them, a principle mirrored in the observed behaviour of class-selective neurons within ResNet-50 models trained on ImageNet. Initial findings indicate that changing a learned label in these models requires considerably more training effort than reinforcing it.

The research extensively examines psychological factors influencing susceptibility to misinformation, identifying the mere-exposure effect and confirmation bias as key drivers in the spread of false information. Meta-analyses confirm that individuals tend to favour information they encounter repeatedly and readily accept claims aligning with pre-existing beliefs, intensifying polarization in public discourse. The heuristic-systematic model is also explored, demonstrating how non-analytic processing makes individuals more vulnerable to sharing misinformation. Recent initiatives are incorporating these psychological insights, moving from purely fact-based paradigms to more abstract, psychologically informed approaches.

To simulate realistic information environments, researchers developed a framework leveraging large language models (LLMs) to generate diverse user reactions and construct user-news networks. This model implements three strategies, generating comments, responding to existing comments, and selecting comments for engagement, to mimic a potential misinformation propagation process. The resulting network embodies an artificial social perspective, enabling the use of graph neural networks for enhanced analysis. Furthermore, debates between LLM agents are used to improve factuality assessments, with agents constructing reasoned arguments and extracting facts in a collaborative process.
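The three strategies described above can be illustrated with a minimal sketch. It is not the framework from the survey: the names `stub_llm`, `simulate_user_news_graph`, and `engagement_counts` are hypothetical, the LLM call is replaced by a placeholder that returns canned text, and each user's strategy is chosen at random rather than by a model. The sketch only shows how generating comments, replying to comments, and selecting comments could yield an edge list for a user-news network.

```python
import random

def stub_llm(prompt):
    # Hypothetical stand-in for a real LLM call; returns canned text.
    return f"[simulated text for: {prompt}]"

def simulate_user_news_graph(headline, users, steps=6, seed=0, llm=stub_llm):
    """Simulate user reactions to one news item and record them as edges."""
    rng = random.Random(seed)
    comments = []  # each entry: author, text, parent (comment index or None)
    edges = []     # (user, action, target); target is "news" or a comment index
    for _ in range(steps):
        user = rng.choice(users)
        # Replying and selecting require at least one existing comment.
        actions = ["comment", "reply", "select"] if comments else ["comment"]
        action = rng.choice(actions)
        if action == "comment":
            # Strategy 1: generate a new comment on the news item.
            text = llm(f"{user} reacts to '{headline}'")
            comments.append({"author": user, "text": text, "parent": None})
            edges.append((user, "comment", "news"))
        elif action == "reply":
            # Strategy 2: respond to an existing comment.
            parent = rng.randrange(len(comments))
            text = llm(f"{user} replies to comment {parent}")
            comments.append({"author": user, "text": text, "parent": parent})
            edges.append((user, "reply", parent))
        else:
            # Strategy 3: select an existing comment to engage with.
            target = rng.randrange(len(comments))
            edges.append((user, "select", target))
    return comments, edges

def engagement_counts(edges):
    """Count how many actions each user contributed to the network."""
    counts = {}
    for user, _action, _target in edges:
        counts[user] = counts.get(user, 0) + 1
    return counts

comments, edges = simulate_user_news_graph("Example headline", ["u1", "u2", "u3"])
for edge in edges:
    print(edge)
print(engagement_counts(edges))
```

In a fuller pipeline, an edge list like this, together with text features for the news item and comments, would be the kind of input a graph neural network could consume. That step is omitted here because the article does not specify it.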

These models, however, face limitations related to interpretability and potential biases within LLMs, requiring metrics to assess the appropriateness and logic of responses. Researchers also addressed the challenge of entity ambiguity in LLMs, where information about multiple entities is merged, potentially misleading users. The D-FActScore metric was developed to evaluate factuality in these scenarios, but the study suggests that this issue reflects deeper cognitive phenomena, as individuals interpret and integrate information through the lens of cognitive biases. This work demonstrates a pathway to simulate misinformation propagation, but acknowledges the need for further research using real-world datasets and focusing on model interpretability to combat misinformation effectively.

Cognitively Grounded Approaches to Misinformation Research

This survey examines the evolving field of misinformation research, demonstrating a shift from approaches focused solely on factual accuracy toward psychologically informed ones.

👉 More information

🗞 The Psychology of Falsehood: A Human-Centric Survey of Misinformation Detection

🧠 ArXiv: https://arxiv.org/abs/2509.15896