Researchers are tackling the challenge of coordinating unmanned aerial vehicle (UAV) swarms in environments where communication is impossible. Myong-Yol Choi, Hankyoul Ko, and Hanse Cho, from the Department of Mechanical Engineering at Ulsan National Institute of Science and Technology, along with Changseung Kim, Seunghwan Kim, Jaemin Seo et al., present a deep reinforcement learning (DRL) controller that allows UAVs to navigate collectively using only onboard LiDAR sensing, a significant step towards robust, autonomous swarming. Inspired by the collective behaviour of biological groups, their system enables followers to implicitly track a leader without explicit communication or reliance on external positioning, overcoming a key limitation in many current UAV swarm technologies. The approach, validated through extensive simulations and real-world experiments, could open up UAV swarm applications in search and rescue, infrastructure inspection, and environmental monitoring, even in challenging and communication-denied scenarios.

Leader-follower DRL for communication-denied UAV swarms

Scientists have demonstrated a deep reinforcement learning (DRL) based controller for the collective navigation of unmanned aerial vehicle (UAV) swarms in environments devoid of communication. Inspired by the coordination observed in biological swarms, where informed individuals guide groups without explicit signalling, the team employed an implicit leader-follower framework to achieve robust operation in complex, obstacle-rich settings. This approach allows a swarm to navigate effectively even when inter-agent communication is unavailable or unreliable, removing the dependence on communication links that limits many existing systems. At the core of the research is a system in which only the leader UAV possesses goal information, while follower UAVs learn robust policies solely from onboard LiDAR sensing, without requiring any inter-agent communication or leader identification.

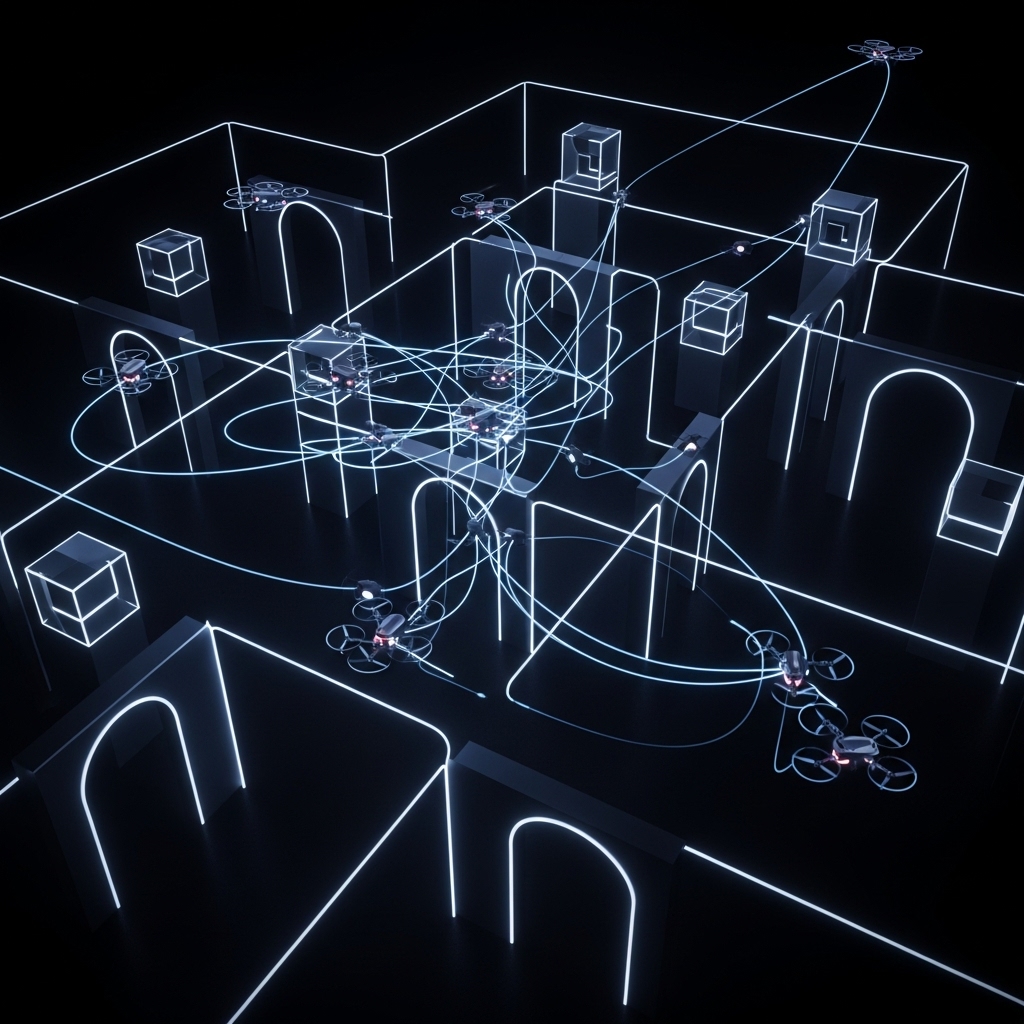

Researchers utilised LiDAR point clustering coupled with an extended Kalman filter to provide stable neighbour tracking, ensuring reliable perception independent of external positioning systems. This allows the swarm to operate autonomously, overcoming the limitations of systems reliant on external infrastructure or constant communication links. This work’s central innovation is a DRL controller, trained using GPU-accelerated Nvidia Isaac Sim, which enables follower UAVs to learn complex emergent behaviours, effectively balancing the need for flocking with robust obstacle avoidance, using only local perception. This allows the swarm to implicitly follow the leader, even when faced with perceptual challenges such as occlusion or limited field-of-view.

Extensive simulations and challenging real-world experiments with a swarm of five UAVs confirmed the robustness and sim-to-real transfer capabilities of this approach. The team successfully demonstrated collective navigation across diverse indoor and outdoor environments without any communication or external localisation, a remarkable achievement in autonomous swarm robotics. This breakthrough opens exciting possibilities for applications in scenarios where communication is compromised, such as search and rescue operations in disaster zones, surveillance in contested environments, or environmental monitoring in remote areas. The research establishes a new paradigm for resilient and scalable UAV swarm navigation, paving the way for more adaptable and reliable autonomous systems.

Leader-follower DRL for communication-denied UAV swarms

Scientists developed a novel deep reinforcement learning (DRL) controller for the collective navigation of unmanned aerial vehicle (UAV) swarms operating without inter-agent communication, addressing a critical limitation in challenging environments. The research team pioneered an implicit leader-follower framework inspired by biological swarms, where a single informed leader guides the group without explicit signalling, enabling robust operation in communication-denied scenarios. This approach circumvents the bandwidth and latency constraints of traditional communication-dependent methods, offering scalability and real-time performance improvements. To facilitate robust perception, the study harnessed onboard LiDAR sensing coupled with an extended Kalman filter for stable neighbor tracking, providing reliable positional data independent of external systems.

The team employed LiDAR point clustering to identify surrounding UAVs, then fed the resulting clusters into the Kalman filter to maintain continuous tracking of each neighbouring UAV's position relative to the observing UAV. This keeps neighbour tracks stable even in the presence of occlusion or limited field-of-view, which is crucial for maintaining swarm cohesion. The core innovation lies in the DRL controller, trained within a GPU-accelerated Isaac Sim environment, which lets follower UAVs learn complex behaviours, balancing flocking and obstacle avoidance, solely from local perceptual inputs. Training used a reward function designed to incentivise both proximity to neighbours and successful avoidance of obstacles, driving the development of emergent behaviours.
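To make the idea of such a reward concrete, here is a minimal sketch in Python. It is not the authors' reward function: the function name, the desired spacing `d_des`, the safety margin `d_safe`, and the weights are all hypothetical values chosen for illustration. It only shows how a single scalar can combine a term for staying near neighbours with a term for keeping clear of obstacles.

```python
def follower_reward(neighbour_dists, obstacle_dists,
                    d_des=1.5, d_safe=1.0, w_flock=1.0, w_obs=2.0):
    """Illustrative per-step reward for one follower (all parameters hypothetical).

    neighbour_dists: distances (m) to currently tracked neighbours
    obstacle_dists:  distances (m) to sensed obstacle points
    """
    if neighbour_dists:
        # Flocking term: penalise the mean deviation from a desired spacing,
        # which discourages both drifting away and crowding in.
        flock = -sum(abs(d - d_des) for d in neighbour_dists) / len(neighbour_dists)
    else:
        # Assumption: losing sight of every neighbour counts as a cohesion loss.
        flock = -d_des

    # Obstacle term: zero outside the safety margin, growing linearly inside it.
    nearest = min(obstacle_dists, default=float("inf"))
    obstacle = -max(0.0, d_safe - nearest) / d_safe

    return w_flock * flock + w_obs * obstacle
```

With these assumed values, a follower at the desired spacing and far from obstacles receives a reward of zero, and any deviation or intrusion into the safety margin makes the reward negative.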

This training process enabled the followers to implicitly track the leader’s movements, creating a cohesive swarm without requiring explicit leader identification or mission broadcasting. Robustness and sim-to-real transfer were rigorously validated through extensive simulations and real-world experiments involving a swarm of five UAVs navigating diverse indoor and outdoor environments. The swarm successfully demonstrated collective navigation without any communication or external localization, confirming the efficacy of the proposed methodology and its potential for real-world deployment. The system achieved successful navigation across varied terrains, proving its adaptability and resilience in complex, dynamic scenarios.

Leader-follower UAV swarms navigate without communication

Scientists have developed a deep reinforcement learning (DRL) based controller for the collective navigation of unmanned aerial vehicle (UAV) swarms in environments devoid of communication, achieving robust operation amidst complex obstacles. The research team employed an implicit leader-follower framework, mirroring biological swarms where informed individuals guide groups without explicit communication: only the leader possesses goal information, while follower UAVs learn policies using onboard LiDAR sensing alone. Experiments revealed that follower UAVs successfully navigate collectively by responding to the leader’s positional changes, rather than being constrained by the swarm’s average velocity direction. The primary objective of this study was to train a robust control policy, π, via DRL, enabling followers to balance flocking behaviours (cohesion and separation) with obstacle avoidance using only onboard LiDAR data.

When these behaviours are executed successfully, leader-following emerges: the swarm naturally moves towards the destination as followers maintain positional cohesion with neighbours, influenced by the leader’s goal-directed motion. The system achieves collective movement in a fully communication-free manner, a significant technical accomplishment. The LiDAR-based perception system consists of ego-state estimation, object tracking, and point downsampling modules. It filters raw point clouds by stacking the two most recent point clouds and retaining points within a range of 0.05 to 10.0 metres.
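The range gating and two-frame stacking described above can be sketched as follows. This is a simplified illustration, not the authors' code: the `(x, y, z, intensity)` tuple layout, the class name `ScanStacker`, and the inclusive range bounds are assumptions, and the sketch does not compensate for ego-motion between scans, which a real pipeline may need.

```python
import math
from collections import deque

R_MIN, R_MAX = 0.05, 10.0   # metres, range limits quoted in the article
N_STACK = 2                 # number of most recent scans kept


def range_filter(points):
    """Keep (x, y, z, intensity) points whose range lies in [R_MIN, R_MAX]."""
    kept = []
    for x, y, z, intensity in points:
        r = math.sqrt(x * x + y * y + z * z)
        if R_MIN <= r <= R_MAX:
            kept.append((x, y, z, intensity))
    return kept


class ScanStacker:
    """Holds the last N_STACK filtered scans and returns them merged."""

    def __init__(self, n_stack=N_STACK):
        # deque with maxlen drops the oldest scan automatically.
        self.scans = deque(maxlen=n_stack)

    def push(self, points):
        self.scans.append(range_filter(points))
        # Flatten the stored scans, oldest first, into one point list.
        return [p for scan in self.scans for p in scan]
```

Stacking consecutive scans increases point density on small targets such as neighbouring UAVs, which is presumably why the later clustering step scales its minimum cluster size with the number of stacked frames.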

High-intensity points, identified by an intensity threshold of 170, are used as key seeds for object detection, while region-of-interest points within a 0.3-metre radius of existing track centroids are also retained. DBSCAN clustering, utilising a distance threshold of 0.1 metres and a minimum cluster size scaled by the number of stacked frames, groups filtered points into clusters. Each cluster is tracked using an extended Kalman filter (EKF) with a constant velocity model, and tracks are validated based on a high-intensity ratio threshold of 0.05 sustained for a continuous duration of 0.01 seconds. The resulting perception outputs, representing validated neighbours, are then fed into the DRL control policy. The DRL framework models the follower’s control problem as a partially observable Markov decision process, utilising an encoder to process observations into a latent vector, and actor and critic heads to determine the policy and estimate value, enabling stable collective navigation without external positioning systems. Extensive simulations and real-world experiments with a five-UAV swarm successfully demonstrated collective navigation across diverse indoor and outdoor environments without communication or external localisation.
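As a rough illustration of constant-velocity tracking of a cluster centroid, the sketch below runs an independent position-velocity Kalman filter on each axis. It is a simplification rather than the paper's EKF: it assumes the measurement is the centroid position itself, in which case the filter is linear, and the noise values `q_pos`, `q_vel`, and `r`, as well as the class names, are hypothetical.

```python
class AxisKF:
    """Kalman filter for one axis with state [position, velocity]."""

    def __init__(self, pos, q_pos=1e-4, q_vel=1e-2, r=1e-2):
        self.p, self.v = pos, 0.0
        self.P = [[1.0, 0.0], [0.0, 1.0]]   # initial covariance (assumed)
        self.q_pos, self.q_vel, self.r = q_pos, q_vel, r

    def predict(self, dt):
        # Constant-velocity model: p <- p + v*dt, v unchanged.
        (a, b), (c, d) = self.P
        self.p += self.v * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]], Q = diag(q_pos, q_vel).
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q_pos, b + dt * d],
                  [c + dt * d, d + self.q_vel]]

    def update(self, z):
        # Position-only measurement, H = [1, 0].
        (a, b), (c, d) = self.P
        s = a + self.r                      # innovation variance
        k0, k1 = a / s, c / s               # Kalman gain
        y = z - self.p                      # innovation
        self.p += k0 * y
        self.v += k1 * y
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


class CentroidTrack:
    """Tracks a 3D cluster centroid with one AxisKF per axis."""

    def __init__(self, centroid):
        self.axes = [AxisKF(c) for c in centroid]

    def step(self, centroid, dt):
        for kf, z in zip(self.axes, centroid):
            kf.predict(dt)
            kf.update(z)

    def state(self):
        return ([kf.p for kf in self.axes], [kf.v for kf in self.axes])
```

A typical use is to create one `CentroidTrack` per detected cluster and call `step` with the matched centroid at every scan; when a neighbour is briefly occluded, calling only `predict` on each axis lets the track coast on its estimated velocity.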

Implicit Leadership for UAV Swarm Navigation

Researchers have developed a deep reinforcement learning (DRL) controller enabling collective navigation for unmanned aerial vehicle (UAV) swarms operating without communication between agents. This system addresses the challenge of coordinating multiple UAVs in complex environments, such as those with obstacles, by drawing inspiration from the behaviour of biological swarms where leadership emerges naturally. The core innovation lies in an implicit leader-follower framework, where a single ‘leader’ UAV possesses goal information, and follower UAVs learn to navigate using only onboard LiDAR sensing, eliminating the need for inter-agent communication or explicit leader identification. The developed system employs LiDAR point clustering and an extended Kalman filter to ensure stable neighbour tracking, providing reliable perception independent of external positioning systems.

A DRL controller, trained using GPU-accelerated simulation, allows follower UAVs to learn complex behaviours, balancing flocking and obstacle avoidance, based solely on local perception. Extensive simulations and real-world experiments with a five-UAV swarm confirmed the robustness and sim-to-real transferability of this approach, successfully demonstrating collective navigation in diverse indoor and outdoor environments without communication or external localisation. The authors acknowledge a limitation in the current scalability to larger swarms, and future work will explore enhancing this aspect alongside investigating more complex collective behaviours like adaptive role-switching. This research demonstrates the practicality and robustness of utilising DRL for communication-free collective navigation, offering a promising solution for UAV swarm operation in challenging and communication-denied scenarios.

👉 More information

🗞 Communication-Free Collective Navigation for a Swarm of UAVs via LiDAR-Based Deep Reinforcement Learning

🧠 ArXiv: https://arxiv.org/abs/2601.13657