Optimising tasks for increasingly powerful, yet unpredictable, quantum computers presents a major challenge, as the inherent noise and variability of different processors significantly impact results. Researchers at The University of Melbourne and Data61, CSIRO, led by Hoa T. Nguyen, Muhammad Usman, and Rajkumar Buyya, now address this issue with a novel system called QFOR. This fidelity-aware orchestrator uses deep reinforcement learning to intelligently allocate tasks across heterogeneous quantum computing resources, dynamically adapting to processor characteristics and prioritising both speed and accuracy. The team’s approach improves performance, achieving gains in task fidelity of between 29.5 and 84 percent over existing methods, while maintaining comparable execution times and paving the way for more reliable and cost-effective quantum computation.

Quantum Cloud Simulation and Resource Challenges

This research explores the complex challenges of managing quantum computing resources in cloud environments, a rapidly growing field. Researchers have developed QSimPy, a learning-centric simulation framework, alongside related tools, to efficiently allocate limited quantum resources, schedule tasks, and provide a platform for innovation. Quantum computing introduces unique resource constraints, such as qubit quality, connectivity, and coherence times, which traditional cloud management techniques cannot easily handle. The QSimPy framework allows researchers to model and simulate these environments before deploying solutions on real hardware, a necessity given the limited and expensive access to actual quantum computers.

The research emphasizes the use of deep reinforcement learning and simulation for thorough evaluation of algorithms before deployment. Tools like iQuantum create realistic models of quantum cloud environments, while QFaaS leverages serverless computing to provide a flexible and scalable platform for running quantum applications. This comprehensive approach aims to provide a complete ecosystem for quantum cloud research. By addressing challenges like qubit decoherence, building quantum networks, and developing performance benchmarks, this work contributes to harnessing the potential of quantum computing and making it accessible to a wider range of users.

Reinforcement Learning Optimizes Cloud Quantum Scheduling

Researchers have developed a novel approach to managing quantum computations in cloud environments, addressing scarce resources, high costs, and the instability of quantum hardware. By framing the problem as a ‘Markov Decision Process’, they employed ‘Proximal Policy Optimization’, a deep reinforcement learning algorithm, to learn optimal scheduling policies for quantum tasks. The system balances execution speed and ‘fidelity’, a measure of the accuracy of the computation, which is critical given the sensitivity of quantum states to noise. Instead of relying on pre-defined rules, the algorithm learns from experience, continuously refining its scheduling decisions to maximize performance based on real-time conditions.

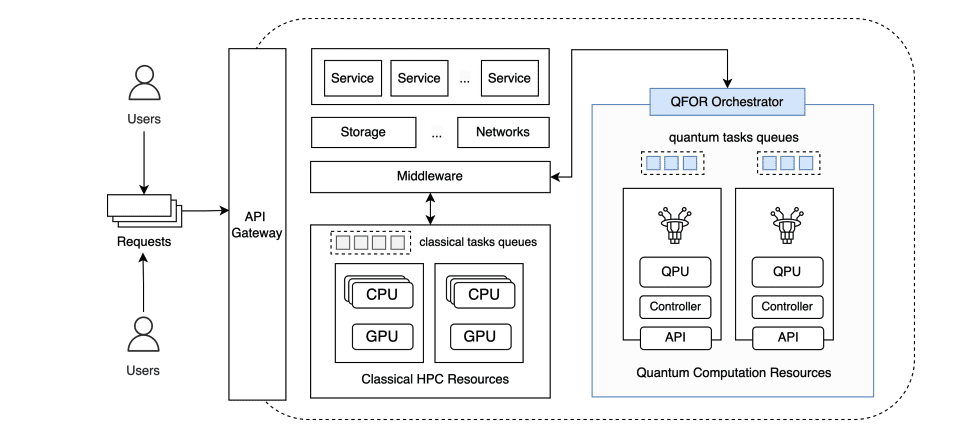

The learned policy distributes tasks intelligently across available quantum processing units. Crucially, the system incorporates processor calibration data, allowing it to estimate noise levels and schedule tasks accordingly, reducing errors and improving reliability. This in turn improves the efficiency and cost-effectiveness of quantum cloud computing, paving the way for more complex and reliable quantum applications. The system’s configurability allows users to prioritize either speed or accuracy. By integrating with existing cloud infrastructure and leveraging classical high-performance computing resources, the framework creates a hybrid quantum-classical computing environment, which is particularly important in the ‘Noisy Intermediate-Scale Quantum’ era.
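To make the speed-versus-accuracy trade-off concrete, here is a minimal sketch of how a single weight could blend the two objectives into one reward signal for a reinforcement learning agent. It is an illustration only, not QFOR's published reward: the function name `scheduling_reward`, the `time_budget` normalisation, and the `fidelity_weight` parameter are all assumptions introduced here.

```python
def scheduling_reward(
    fidelity: float,
    exec_time: float,
    time_budget: float,
    fidelity_weight: float = 0.5,
) -> float:
    """Blend fidelity and speed into one scalar reward (hypothetical form).

    fidelity        -- estimated task fidelity in [0, 1]
    exec_time       -- execution time of the task, in seconds
    time_budget     -- assumed reference time used to normalise exec_time
    fidelity_weight -- 1.0 favours accuracy only, 0.0 favours speed only
    """
    if not 0.0 <= fidelity_weight <= 1.0:
        raise ValueError("fidelity_weight must lie in [0, 1]")
    if time_budget <= 0.0:
        raise ValueError("time_budget must be positive")

    # Map execution time to a score in [0, 1]; faster tasks score higher.
    time_score = max(0.0, 1.0 - exec_time / time_budget)

    # Convex combination of the two objectives.
    return fidelity_weight * fidelity + (1.0 - fidelity_weight) * time_score
```

Under these assumptions, a user who cares mostly about accuracy would raise `fidelity_weight` towards 1.0, while a user who needs quick turnaround would lower it towards 0.0.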

Intelligent Task Allocation Boosts Quantum Reliability

Researchers have developed QFOR, a new system that intelligently manages the allocation of quantum computing tasks across cloud-based resources, achieving significant improvements in the reliability of results. QFOR addresses the inherent variability and noise present in quantum hardware by actively learning how to best match tasks to available processors, taking into account the specific characteristics of each machine. The system models task allocation as a decision-making process and employs a sophisticated learning algorithm to adapt to changing conditions and optimize performance. QFOR balances execution speed and fidelity, the accuracy and trustworthiness of the results, offering a flexible and configurable solution by allowing users to prioritize either speed or accuracy.

The system achieves this through detailed modeling of quantum hardware, incorporating calibration data to accurately estimate the performance and error rates of individual processors. Extensive testing demonstrates that QFOR significantly outperforms existing heuristic methods, improving the relative fidelity of quantum computations by 29.5 to 84 percent, without sacrificing speed. QFOR’s effectiveness stems from its use of deep reinforcement learning, allowing it to learn from experience and adapt to the unique characteristics of each quantum processor. By simulating the behavior of noisy quantum devices based on real-world calibration data, QFOR can make informed decisions about task allocation, maximizing the probability of obtaining accurate and reliable results.
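As a rough illustration of how calibration data can inform allocation, the sketch below uses a common first-order approximation: a circuit's expected fidelity is the product of the success probabilities of its gates and measurements. This is not QFOR's actual noise model, which the article describes as simulation-based; the class names, fields, and the greedy `pick_processor` helper are hypothetical and stand in for the learned policy.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Calibration:
    """Average error rates for one processor (assumed fields)."""
    single_qubit_error: float
    two_qubit_error: float
    readout_error: float


@dataclass(frozen=True)
class CircuitProfile:
    """Gate and measurement counts for one task (assumed fields)."""
    single_qubit_gates: int
    two_qubit_gates: int
    measured_qubits: int


def estimate_fidelity(cal: Calibration, circ: CircuitProfile) -> float:
    """First-order estimate: multiply per-operation success probabilities."""
    return (
        (1.0 - cal.single_qubit_error) ** circ.single_qubit_gates
        * (1.0 - cal.two_qubit_error) ** circ.two_qubit_gates
        * (1.0 - cal.readout_error) ** circ.measured_qubits
    )


def pick_processor(
    calibrations: Mapping[str, Calibration], circ: CircuitProfile
) -> str:
    """Greedy baseline: choose the processor with the highest estimate."""
    return max(
        calibrations,
        key=lambda name: estimate_fidelity(calibrations[name], circ),
    )
```

A greedy rule like `pick_processor` ignores queueing and execution time, which is one reason a learned policy that weighs both fidelity and speed may do better than a fixed heuristic.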

Learning-Driven Fidelity Optimisation for Quantum Clouds

This work presents QFOR, a novel deep reinforcement learning framework designed to optimise fidelity-aware task orchestration within heterogeneous quantum cloud environments. By modelling task allocation as a Markov Decision Process and employing adaptive scheduling policies informed by processor calibration data, QFOR successfully balances execution fidelity and time, achieving gains of 29.5-84% in relative fidelity compared to traditional heuristic methods. The findings establish a foundation for learning-driven quantum resource management, particularly important as hybrid quantum-HPC systems become more prevalent. Future research will focus on incorporating dynamic device calibration data, scaling the framework to larger quantum infrastructures, and exploring distributed reinforcement learning approaches to address potential bottlenecks in geographically distributed environments. These developments are essential to fully optimise resource management and unlock the potential of quantum cloud computing as the technology matures.

👉 More information

🗞 QFOR: A Fidelity-aware Orchestrator for Quantum Computing Environments using Deep Reinforcement Learning

🧠 ArXiv: https://arxiv.org/abs/2508.04974