Deep research demands efficient information gathering, accurate synthesis, and robust reasoning, yet current artificial intelligence agents often struggle with these complex tasks. Yi Wan and Jiuqi Wang from Pokee AI, along with Liam Li and colleagues, address this challenge with PokeeResearch-7B, a new deep research agent built on a unified reinforcement learning framework. The team trains PokeeResearch-7B with a method that uses feedback from large language models as its reward signal, optimising the agent's ability to find factual information, cite sources accurately, and follow instructions. Combined with a reasoning scaffold that promotes self-verification and recovery from errors, this approach allows PokeeResearch-7B to achieve state-of-the-art performance among 7-billion-parameter deep research agents on ten popular benchmarks, pointing the way towards resilient and efficient AI agents capable of high-quality research.

Reinforcement Learning Agent for Robust Research Synthesis

Researchers have developed PokeeResearch-7B, a deep research agent built on a reinforcement learning framework and designed for robust, aligned, and scalable performance on complex research tasks. The agent breaks down complex questions, retrieves relevant evidence, and synthesises grounded responses, addressing limitations of existing deep research agents. At the centre of the work is an annotation-free Reinforcement Learning from AI Feedback (RLAIF) framework, which optimises the agent's behaviour using reward signals generated by large language models that assess factual accuracy, citation faithfulness, and adherence to instructions. In research mode, the agent works over multiple turns, alternating between issuing tool calls to gather information and proposing candidate answers.

Crucially, when a tool call fails, the agent does not give up: it keeps trying new calls until one succeeds or a turn limit is reached. Once it has generated an answer, the agent switches to verification mode, in which it examines the entire research thread to judge whether the answer is correct, returning to research mode if refinement is needed. This self-verification step helps catch common failures, such as incomplete answers or insufficient supporting evidence, before a final answer is given.

To support efficient information gathering, the researchers equipped the agent with specialised tools. A web search tool takes a string query and returns a structured list of URLs with descriptive summaries. A web reading tool complements it by extracting concise summaries from the webpages found through search, giving the agent a high-level view of their content.

The agent was trained on the MiroRL-GenQA dataset with the RLOO (REINFORCE Leave-One-Out) algorithm, a strictly on-policy method that reduces the variance of its gradient estimates by sampling multiple completions per prompt.
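The article does not publish the scaffold's code, so the following is only a minimal Python sketch of the research-then-verify loop it describes, under stated assumptions. The `policy`, `verify`, and `tools` callables, the `("search", query)` action format, and the `flaky_search` stub are all hypothetical; a tool failure is modelled as a raised exception; and every turn, including answer turns, counts toward `max_turns`. In PokeeResearch-7B itself, both proposing actions and verifying answers are done by the model.

```python
from typing import Callable, Optional

# ("search", query) | ("read", url) | ("answer", text): a hypothetical action
# format for illustration, not the model's real tool-call syntax.
Action = tuple[str, str]


def run_agent(
    question: str,
    policy: Callable[[list[str]], Action],
    verify: Callable[[list[str], str], bool],
    tools: dict[str, Callable[[str], str]],
    max_turns: int = 10,
) -> Optional[str]:
    """Research mode proposes actions; verification mode checks each answer."""
    thread = [f"QUESTION: {question}"]
    for _ in range(max_turns):  # every turn, including answer turns, counts
        kind, arg = policy(thread)
        if kind == "answer":
            thread.append(f"ANSWER: {arg}")
            # Verification mode: judge the answer against the whole thread.
            if verify(thread, arg):
                return arg
            thread.append("VERIFY: answer rejected, resuming research")
            continue
        try:
            result = tools[kind](arg)
            thread.append(f"{kind.upper()}({arg}) -> {result}")
        except Exception as err:
            # Tool failure (or unknown tool): log it so the next call can adapt.
            thread.append(f"{kind.upper()}({arg}) FAILED: {err!r}")
    return None  # turn limit reached without a verified answer


# Toy usage with scripted, hypothetical stand-ins for the model and tools.
calls = {"n": 0}


def flaky_search(query: str) -> str:
    calls["n"] += 1
    if calls["n"] == 1:  # the first call fails, as a real backend sometimes does
        raise TimeoutError("search backend timed out")
    return "https://example.org/france: Paris is the capital of France."


script = iter([
    ("search", "capital of France"),
    ("search", "capital of France"),
    ("answer", "Lyon"),
    ("answer", "Paris"),
])


def policy(thread: list[str]) -> Action:
    return next(script)


def verify(thread: list[str], answer: str) -> bool:
    # Accept an answer only if some successful search result mentions it.
    return any(answer in step for step in thread
               if step.startswith("SEARCH") and "FAILED" not in step)


answer = run_agent("What is the capital of France?", policy, verify,
                   {"search": flaky_search})
print(answer)  # Paris
```

In this toy run the first search fails and is retried, and the unsupported answer "Lyon" is rejected in verification mode before "Paris" is accepted, mirroring the two recovery paths described above.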

PokeeResearch-7B Achieves State-of-the-Art Research Performance

PokeeResearch-7B is a 7-billion-parameter deep research agent trained within a reinforcement learning framework to be robust, aligned, and scalable on complex research tasks. Its tools let it decompose queries, retrieve external evidence, and synthesise grounded responses, so that it can act as a research assistant. In experiments, PokeeResearch-7B achieved state-of-the-art performance among 7-billion-parameter deep research agents across ten popular benchmarks.

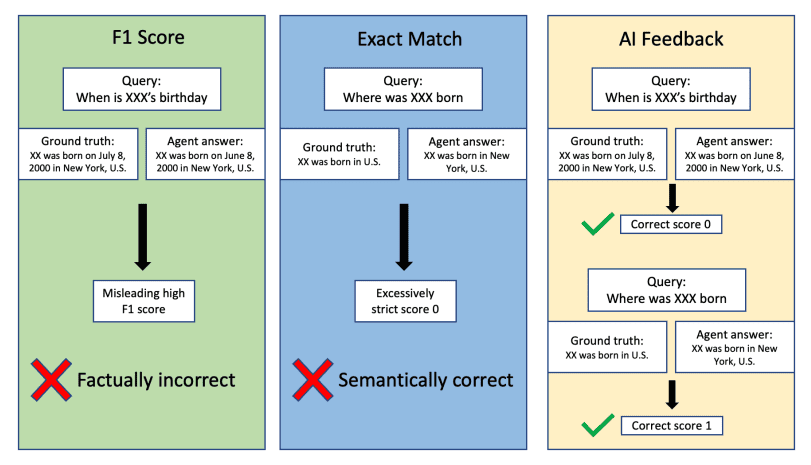

The agent was trained with an annotation-free RLAIF framework, in which the policy is optimised using reward signals that capture factual accuracy, citation faithfulness, and adherence to instructions. Training uses the RLOO algorithm, which samples several completions for each prompt and scores each one against a leave-one-out baseline (the mean reward of the other completions for the same prompt), reducing variance and stabilising learning. For the answer-correctness reward, the team compared three scoring methods: F1 score, Exact Match (EM), and AI feedback. Their experiments found that AI feedback combined with a small format reward performed best.
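The leave-one-out baseline has a compact form: for k completions of one prompt with rewards r_1, ..., r_k, completion i receives the advantage A_i = r_i - (1/(k-1)) * sum_{j != i} r_j. The plain-Python sketch below implements this standard RLOO estimator; it illustrates the technique the article names, not the authors' training code, and the rewards and log-probabilities used are made up.

```python
def rloo_advantages(rewards: list[float]) -> list[float]:
    """Leave-one-out advantages for k completions sampled from one prompt.

    Each completion is compared with the mean reward of the *other* k - 1
    samples, so its baseline never depends on its own reward.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("RLOO needs at least two completions per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


def rloo_surrogate_loss(rewards: list[float], logprobs: list[float]) -> float:
    """REINFORCE surrogate: -(1/k) * sum_i A_i * log pi(y_i | x).

    `logprobs` holds the summed token log-probabilities of each completion.
    In real training this is computed in an autodiff framework so gradients
    flow through `logprobs`; this sketch only evaluates the value.
    """
    if len(logprobs) != len(rewards):
        raise ValueError("need one log-probability per completion")
    advantages = rloo_advantages(rewards)
    return -sum(a * lp for a, lp in zip(advantages, logprobs)) / len(rewards)


# Four completions for one prompt: two judged correct (1.0), two wrong (0.0).
rewards = [1.0, 0.0, 0.0, 1.0]
print([round(a, 3) for a in rloo_advantages(rewards)])
# → [0.667, -0.667, -0.667, 0.667]
```

The advantages for a prompt always sum to zero, so correct completions are pushed up exactly as much as incorrect ones are pushed down; when every sample for a prompt receives the same reward, all advantages are zero and that prompt contributes no gradient.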

AI feedback uses an external large language model to judge whether an answer is semantically equivalent to the ground truth, overcoming the limits of purely lexical metrics such as F1 and EM: F1 rewards token overlap even when a single changed word makes an answer wrong, while EM rejects correct answers that are phrased differently. In their analysis of answer quality, the team showed that AI feedback can flag semantically wrong answers even when their token overlap with the ground truth is high. Taken together, the results indicate that this training approach yields an agent able to carry out complex research tasks with a high degree of accuracy and reliability.
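To make the contrast concrete, here is a small Python sketch of SQuAD-style token F1 and Exact Match next to a hypothetical AI-feedback reward. The `judge` callable stands in for the external LLM, and both the `<answer>` tag check used as the format reward and its 0.1 weight are illustrative assumptions rather than details from the paper.

```python
import re
import string
from collections import Counter
from typing import Callable


def normalize(text: str) -> list[str]:
    """SQuAD-style normalisation: lowercase, drop punctuation and articles."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(pred: str, gold: str) -> float:
    return float(normalize(pred) == normalize(gold))


def token_f1(pred: str, gold: str) -> float:
    p, g = normalize(pred), normalize(gold)
    common = sum((Counter(p) & Counter(g)).values())  # multiset overlap
    if common == 0:
        return 0.0
    precision, recall = common / len(p), common / len(g)
    return 2 * precision * recall / (precision + recall)


def ai_feedback_reward(response: str, gold: str,
                       judge: Callable[[str, str], bool],
                       format_weight: float = 0.1) -> float:
    """Hypothetical reward: LLM-judged correctness plus a small format bonus."""
    match = re.search(r"<answer>(.*?)</answer>", response, re.DOTALL)
    answer = match.group(1).strip() if match else response
    return float(judge(answer, gold)) + format_weight * float(match is not None)


gold = "signed in 1648"
wrong, right = "signed in 1658", "The treaty was concluded in 1648."
print(token_f1(wrong, gold), token_f1(right, gold))  # wrong scores higher
print(exact_match(wrong, gold), exact_match(right, gold))  # 0.0 0.0


def toy_judge(answer: str, gold: str) -> bool:
    # Placeholder for an LLM call that checks semantic equivalence.
    return "1648" in answer


print(ai_feedback_reward(f"<answer>{right}</answer>", gold, toy_judge))  # 1.1
print(ai_feedback_reward(f"<answer>{wrong}</answer>", gold, toy_judge))  # 0.1
```

Here the wrong year earns a token F1 of about 0.67 while the correct paraphrase earns only 0.5, and EM gives both zero; a semantic judge separates them cleanly. A real judge would prompt an LLM with the question, the ground truth, and the extracted answer instead of the substring check used above.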

Autonomous Research Agent Achieves State-of-the-Art Results

PokeeResearch-7B is a notable step in the development of deep research agents, showing that large language models can perform complex research tasks autonomously. The team built the 7-billion-parameter agent within a unified reinforcement learning framework that prioritises reliability, alignment, and scalability. Reinforcement Learning from AI Feedback lets the model optimise for factual accuracy, faithful citation, and adherence to instructions without relying on extensive human annotation. Across ten open-domain benchmarks, the agent sets state-of-the-art results among similarly sized models, supporting the effectiveness of its design in both reasoning quality and operational resilience.

A key feature is the chain-of-thought-driven reasoning scaffold, which enables robust, adaptive tool use and allows the agent to diagnose, recover from, and mitigate common failures in dynamic research environments. The researchers acknowledge that further work is needed to refine such systems and to explore the principles of scalable, self-correcting reasoning in large language models, paving the way for a new generation of autonomous and verifiable research agents.

👉 More information

🗞 PokeeResearch: Effective Deep Research via Reinforcement Learning from AI Feedback and Robust Reasoning Scaffold

🧠 arXiv: https://arxiv.org/abs/2510.15862