The efficiency of deep recommender models, a core component of modern artificial intelligence systems, significantly affects the performance of data-intensive applications. These models generate personalised suggestions in areas such as e-commerce and social media, and they currently account for a substantial proportion of AI workloads in large data centres. Giuseppe Ruggeri, Renzo Andri, and colleagues from Huawei Technologies, alongside Daniele Jahier Pagliari from Politecnico di Torino and Lukas Cavigelli, address this in their article, ‘Deep Recommender Models Inference: Automatic Asymmetric Data Flow Optimization’. The work optimises inference for these models by targeting bottlenecks within the embedding layers, and proposes a framework that automatically maps data flows across multi-core systems.

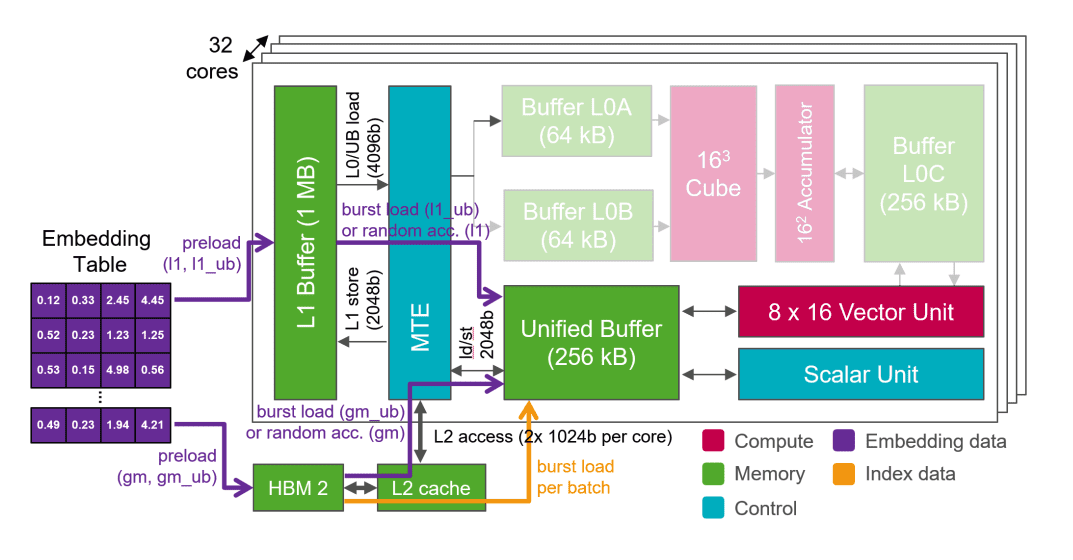

Deep Recommender Models (DLRMs) constitute a significant portion of Meta’s artificial intelligence workload, yet their performance is frequently limited by latency within the embedding layers. Embedding layers transform categorical data, such as user IDs or product categories, into numerical vectors that machine learning algorithms can process; each category indexes a row in an embedding table. The researchers address this bottleneck with tailored data flows that accelerate embedding look-ups by optimising access patterns and data organisation. The methodology also includes a framework that automatically and asymmetrically maps the embedding tables across multiple cores within a System on a Chip (SoC), an integrated circuit that combines all components of a computer, which improves data locality and reduces contention.
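To make the embedding look-up concrete, the following is a minimal Python sketch, not the authors' implementation. The table contents, the `category_to_row` mapping, and the function names are hypothetical and chosen only for illustration; real tables hold learned weights and can be far larger.

```python
from typing import Dict, List

# Hypothetical toy embedding table: 4 categories, embedding dimension 3.
product_table: List[List[float]] = [
    [0.1, 0.2, 0.3],  # row 0
    [0.0, 0.5, 0.5],  # row 1
    [0.9, 0.1, 0.0],  # row 2
    [0.4, 0.4, 0.2],  # row 3
]

# Hypothetical mapping from raw categorical values to row indices.
category_to_row: Dict[str, int] = {"books": 0, "games": 1, "music": 2, "tools": 3}


def lookup(table: List[List[float]], row: int) -> List[float]:
    """Return the embedding vector stored at the given row."""
    return table[row]


def batch_lookup(table: List[List[float]], rows: List[int]) -> List[List[float]]:
    """Gather one vector per requested row; row order follows the query order."""
    return [table[row] for row in rows]


# A single look-up and a small batch of look-ups.
vector = lookup(product_table, category_to_row["music"])
batch = batch_lookup(product_table, [category_to_row[c] for c in ("tools", "books")])
```

Because the requested rows depend on the incoming queries, consecutive look-ups can touch unrelated memory locations, which is why the access pattern matters so much for latency.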

The core of the approach comprises four distinct strategies, implemented on a single core, for accessing embedding tables. Together they aim to minimise latency and maximise throughput. Experiments on Huawei’s Ascend AI accelerators evaluate the method against both the default Ascend compiler and Nvidia’s A100 GPU.

The results show speed-ups ranging from 1.5x to 6.5x on real-world workload distributions. The improvement is even greater, exceeding 20x, on extremely unbalanced distributions, where some categories within the embedding tables are accessed far more frequently than others.
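The effect of an unbalanced distribution on a multi-core system can be illustrated with a small Python sketch. It assumes a Zipf-like access pattern and a naive contiguous split of rows across cores; both are assumptions made for illustration and are not taken from the article, and all function names are hypothetical.

```python
from typing import List


def zipf_weights(num_rows: int, exponent: float = 1.0) -> List[float]:
    """Access probability per row, assuming row 0 is the most popular (Zipf-like)."""
    raw = [1.0 / (rank ** exponent) for rank in range(1, num_rows + 1)]
    total = sum(raw)
    return [w / total for w in raw]


def per_core_load(weights: List[float], num_cores: int) -> List[float]:
    """Expected share of look-ups per core when rows are split into contiguous blocks."""
    rows_per_core = -(-len(weights) // num_cores)  # ceiling division
    loads = [0.0] * num_cores
    for row, weight in enumerate(weights):
        loads[row // rows_per_core] += weight
    return loads


def imbalance(loads: List[float]) -> float:
    """Busiest core's load divided by the mean load (1.0 means perfectly balanced)."""
    mean = sum(loads) / len(loads)
    return max(loads) / mean


skewed_loads = per_core_load(zipf_weights(1000), num_cores=4)
uniform_loads = per_core_load([1.0 / 1000] * 1000, num_cores=4)
skewed_ratio = imbalance(skewed_loads)
uniform_ratio = imbalance(uniform_loads)
```

Under these assumptions the core holding the most popular rows serves most of the look-ups while the others sit largely idle, which is the kind of situation a distribution-aware mapping is meant to avoid.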

The evaluation covers a range of large-scale datasets, including Huawei-25MB, Criteo-1TB, Avazu-CTR, KuaiRec-big, Taobao, and TenRec-QB-art, each containing between 11 and 84 embedding tables. This range allows the method’s scalability and generalisability to be assessed across different recommender system scenarios.

The study identifies embedding table access as the primary limitation on deep recommender model inference. Retrieving vectors from tables of varying sizes requires random memory accesses, which creates a significant bottleneck. The researchers therefore propose an asymmetric sharding strategy that distributes the tables across the multiple cores of the SoC to improve resource utilisation and reduce communication overhead. Sharding divides a large table into smaller parts, each held by a different core.
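As a rough illustration of sharding, the Python sketch below splits one table row-wise into contiguous blocks and routes each look-up to the core that owns the row. The contiguous split and the helper names are assumptions for this example; the article does not specify how the authors partition rows.

```python
from typing import List, Tuple

Table = List[List[float]]


def shard_table(table: Table, num_cores: int) -> List[Table]:
    """Split a table row-wise into one contiguous block per core."""
    rows_per_core = -(-len(table) // num_cores)  # ceiling division
    return [
        table[core * rows_per_core:(core + 1) * rows_per_core]
        for core in range(num_cores)
    ]


def locate(row: int, num_rows: int, num_cores: int) -> Tuple[int, int]:
    """Map a global row index to (owning core, row index within that core's shard)."""
    rows_per_core = -(-num_rows // num_cores)
    return row // rows_per_core, row % rows_per_core


def sharded_lookup(shards: List[Table], row: int, num_rows: int) -> List[float]:
    """Fetch a row from whichever shard owns it."""
    core, local_row = locate(row, num_rows, len(shards))
    return shards[core][local_row]


# Hypothetical 10-row table with dimension 2, sharded over 4 cores.
full_table: Table = [[float(r), float(r)] for r in range(10)]
shards = shard_table(full_table, num_cores=4)
owner = locate(7, num_rows=len(full_table), num_cores=4)
fetched = sharded_lookup(shards, 7, num_rows=len(full_table))
```

Each core stores only its own block, so memory use per core drops, but a look-up may have to be served by a core other than the one that issued it.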

The core innovation is a hybrid data flow for embedding look-ups that combines replication and sharding. Smaller embedding tables are replicated across all cores, which minimises communication overhead and keeps look-ups local. Larger tables are sharded, which distributes the load and reduces contention. According to the study, this asymmetric allocation, informed by table size, improves performance compared with uniform distribution strategies.
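The size-based choice between replication and sharding can be sketched as a simple placement planner in Python. This is a simplified heuristic under stated assumptions, not the authors' automatic mapping framework: the fixed byte threshold, the `TableSpec` class, and the table names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TableSpec:
    name: str
    num_rows: int
    dim: int
    bytes_per_value: int = 4  # assumes 32-bit values

    @property
    def size_bytes(self) -> int:
        return self.num_rows * self.dim * self.bytes_per_value


def plan_placement(
    tables: List[TableSpec], num_cores: int, replicate_below_bytes: int
) -> Dict[str, dict]:
    """Replicate tables under the threshold on every core; shard the rest row-wise."""
    plan: Dict[str, dict] = {}
    for table in tables:
        if table.size_bytes < replicate_below_bytes:
            # Small table: every core keeps a full copy, so look-ups stay local.
            plan[table.name] = {"strategy": "replicate", "rows_per_core": table.num_rows}
        else:
            # Large table: each core keeps one contiguous slice of the rows.
            rows_per_core = -(-table.num_rows // num_cores)  # ceiling division
            plan[table.name] = {"strategy": "shard", "rows_per_core": rows_per_core}
    return plan


# Hypothetical workload: one small and one large table on a 4-core device.
tables = [
    TableSpec(name="device_type", num_rows=100, dim=16),
    TableSpec(name="user_id", num_rows=1_000_000, dim=16),
]
plan = plan_placement(tables, num_cores=4, replicate_below_bytes=1_000_000)
```

A fixed threshold is the simplest possible rule; a planner of the kind described in the article would presumably also weigh per-core memory capacity and how often each table is accessed.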

The research also highlights the method’s resilience to variations in query distribution: on the Ascend accelerators, its performance remains more consistent than that of the baseline.

Further work could investigate optimisation for hardware platforms beyond the Ascend architecture. Applying the same principles to other machine learning models that rely on large embedding tables could extend the benefits to a wider range of applications.

👉 More information

🗞 Deep Recommender Models Inference: Automatic Asymmetric Data Flow Optimization

🧠 DOI: https://doi.org/10.48550/arXiv.2507.01676