Accurate assessment of human movement outside clinical settings is increasingly important for telemedicine, sports science, and rehabilitation, and researchers are exploring whether machine learning and wearable sensors can deliver it. Mario Medrano-Paredes, Carmen Fernández-González, and Francisco-Javier Díaz-Pernas, alongside colleagues including Hichem Saoudi, Javier González-Alonso, and Mario Martínez-Zarzuela, present a preclinical benchmark comparing the accuracy of deep-learning-based 3D human pose estimation from standard video against established inertial measurement unit (IMU) technology. Using the VIDIMU dataset, the team evaluated several state-of-the-art models, including MotionAGFormer, MotionBERT, MMPose, and BodyTrack, against IMU data collected during thirteen everyday activities. Of these, MotionAGFormer achieved the highest accuracy. The work shows that both video-based and sensor-based technologies are viable options for assessing movement outside the laboratory, and it identifies key trade-offs in cost, accessibility, and precision. It lays a foundation for robust, cost-effective telehealth and remote patient monitoring, clarifying what off-the-shelf video models can currently do and where they still need to improve.

Accurate assessment of human movement under real-world conditions is essential for telemedicine, sports science, and rehabilitation. This preclinical benchmark compares monocular video-based 3D human pose estimation models with inertial measurement units (IMUs), using the VIDIMU dataset. VIDIMU contains 13 clinically relevant daily activities, each captured with both commodity video cameras and five IMUs. Only healthy subjects were recorded in this initial study, so the results cannot yet be generalised to clinical populations.

Video and IMU Pose Estimation Comparison

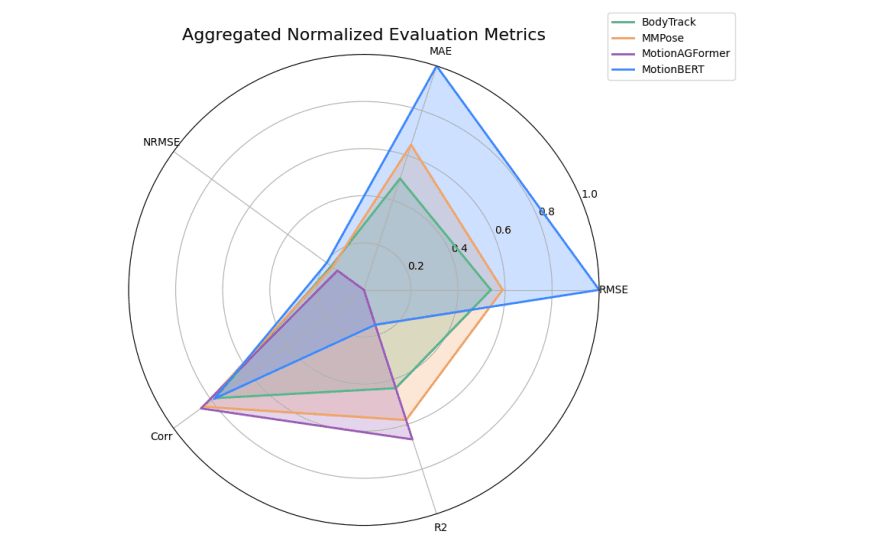

This research compares video-based and inertial measurement unit (IMU)-based methods for recognising human activity and estimating joint angles. The study used video and sensor data from subjects performing 13 different activities and assessed performance with Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), correlation, and the coefficient of determination (R²). MotionAGFormer generally performed well, with low errors and high correlations across many activities. Performance nevertheless varied by activity, and some movements proved harder to estimate accurately than others.
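Before a video model can be compared with IMUs, its 3D keypoints have to be turned into joint angles. A minimal sketch of one common geometric approach, assuming hip, knee, and ankle keypoints expressed in the same 3D coordinate frame: the included angle at the middle joint is the angle between the two segment vectors. The function name `joint_angle_deg` and the keypoint roles are hypothetical, and this is not necessarily how the authors or the VIDIMU pipeline define their angles (IMU-derived angles, in particular, come from sensor orientations rather than keypoints).

```python
import math

# A 3D keypoint (x, y, z) in a common coordinate frame (assumption).
Point3 = tuple[float, float, float]


def joint_angle_deg(a: Point3, b: Point3, c: Point3) -> float:
    """Included angle at keypoint b, in degrees, between segments b->a and b->c.

    Example roles (hypothetical): a = hip, b = knee, c = ankle.
    """
    u = tuple(p - q for p, q in zip(a, b))  # segment from b towards a
    v = tuple(p - q for p, q in zip(c, b))  # segment from b towards c
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("keypoints must not coincide with the joint centre")
    # Clamp to [-1, 1] so floating-point noise cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


if __name__ == "__main__":
    hip, knee, ankle = (0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (0.0, -1.0, 0.0)
    included = joint_angle_deg(hip, knee, ankle)  # straight leg: 180 degrees
    # One common clinical convention reports knee flexion as 180 minus the included angle.
    print(included, 180.0 - included)
```

Applied frame by frame, this yields a joint-angle time series that can then be compared against the corresponding IMU-derived series.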

MotionAGFormer Excels in Real-World Pose Estimation

This research presents a comprehensive benchmark comparing video-based 3D human pose estimation with inertial measurement units (IMUs) for assessing human movement outside laboratory settings. The study used the VIDIMU dataset, which covers 13 clinically relevant daily activities performed by healthy subjects and recorded with both standard video cameras and five IMUs. MotionAGFormer performed best, achieving the lowest overall Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE) and the highest Pearson correlation and coefficient of determination (R²).
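The four agreement metrics named above can be computed directly from a pair of joint-angle series. A minimal sketch, assuming the video and IMU angles are already time-synchronised, resampled to the same length, and expressed in degrees; the function name `agreement_metrics` is hypothetical. R² is computed here as 1 minus SS_res/SS_tot with the IMU series as the reference, which is one common definition; the paper may use a different one (for example, the squared Pearson coefficient).

```python
import math
from typing import Sequence


def agreement_metrics(video: Sequence[float], imu: Sequence[float]) -> dict[str, float]:
    """RMSE, MAE, Pearson r and R² of a video-derived angle series against an IMU reference.

    Assumes both series are time-aligned, equal length, and in degrees.
    """
    n = len(video)
    if n != len(imu) or n < 2:
        raise ValueError("series must have equal length and at least two samples")

    errors = [v - r for v, r in zip(video, imu)]
    ss_res = sum(e * e for e in errors)          # sum of squared errors
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n

    mean_v = sum(video) / n
    mean_r = sum(imu) / n
    cov = sum((v - mean_v) * (r - mean_r) for v, r in zip(video, imu))
    ss_v = sum((v - mean_v) ** 2 for v in video)
    ss_r = sum((r - mean_r) ** 2 for r in imu)   # total sum of squares of the reference
    if ss_v == 0.0 or ss_r == 0.0:
        raise ValueError("correlation is undefined for a constant series")
    pearson_r = cov / math.sqrt(ss_v * ss_r)

    # R² with the IMU series treated as ground truth and the video series as the prediction.
    r2 = 1.0 - ss_res / ss_r
    return {"rmse": rmse, "mae": mae, "pearson_r": pearson_r, "r2": r2}


if __name__ == "__main__":
    imu_angles = [10.0, 20.0, 30.0, 40.0]     # made-up reference angles
    video_angles = [12.0, 19.0, 33.0, 38.0]   # made-up video estimates
    print(agreement_metrics(video_angles, imu_angles))
```

With these made-up numbers the sketch gives an RMSE of about 2.12°, an MAE of 2.0°, a Pearson r of about 0.98, and an R² of 0.964. Note that RMSE penalises occasional large errors more heavily than MAE, which is why benchmarks typically report both.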

These results show that video-based pose estimation can provide clinically relevant kinematic data with precision approaching that of IMU-based systems. The study confirms that both video and sensor-based technologies are viable for out-of-lab kinematic assessment and clarifies the practical trade-offs between them in cost, accessibility, and precision. The authors acknowledge that the evaluation was limited to healthy adults, so generalisation to clinical populations requires further investigation. Future work should expand the dataset to include individuals with movement disorders and explore the potential of these technologies for remote patient monitoring and telehealth applications.

👉 More information

🗞 Paving the Way Towards Kinematic Assessment Using Monocular Video: A Preclinical Benchmark of State-of-the-Art Deep-Learning-Based 3D Human Pose Estimators Against Inertial Sensors in Daily Living Activities

🧠 ArXiv: https://arxiv.org/abs/2510.02264