Testing deep learning models presents a significant challenge as these systems become increasingly integrated into critical applications. Researchers Xingcheng Chen, Oliver Weissl, and Andrea Stocco, all from the Technical University of Munich and fortiss GmbH, address this problem with a novel feature-aware test generation framework called Detect. Unlike existing methods that often lack semantic understanding and precise control, Detect systematically generates inputs by carefully manipulating disentangled semantic attributes within a model’s latent space, essentially probing what the model really sees. This allows Detect to pinpoint the specific features driving model behaviour, revealing both generalisation strengths and hidden vulnerabilities, and even identifying bugs missed by standard accuracy measurements. Their findings, demonstrated across image classification and detection tasks, show that Detect outperforms current state-of-the-art techniques and highlight crucial differences in how fully fine-tuned convolutional and weakly supervised models learn, paving the way for more reliable and trustworthy deep learning systems.

Latent space perturbations for robust AI testing

Scientists have developed Detect, a novel feature-aware test generation framework designed to rigorously assess the quality of deep learning models before deployment. This breakthrough addresses a critical gap in current testing methodologies, which often lack semantic insight into misbehaviours and fine-grained control over generated inputs. The research team systematically generates inputs by subtly perturbing disentangled semantic attributes within the latent space of vision-based deep learning models, allowing for precise control over feature manipulation. Detect doesn’t simply identify failures; it pinpoints the specific features driving those failures, offering a crucial step towards more reliable artificial intelligence systems.

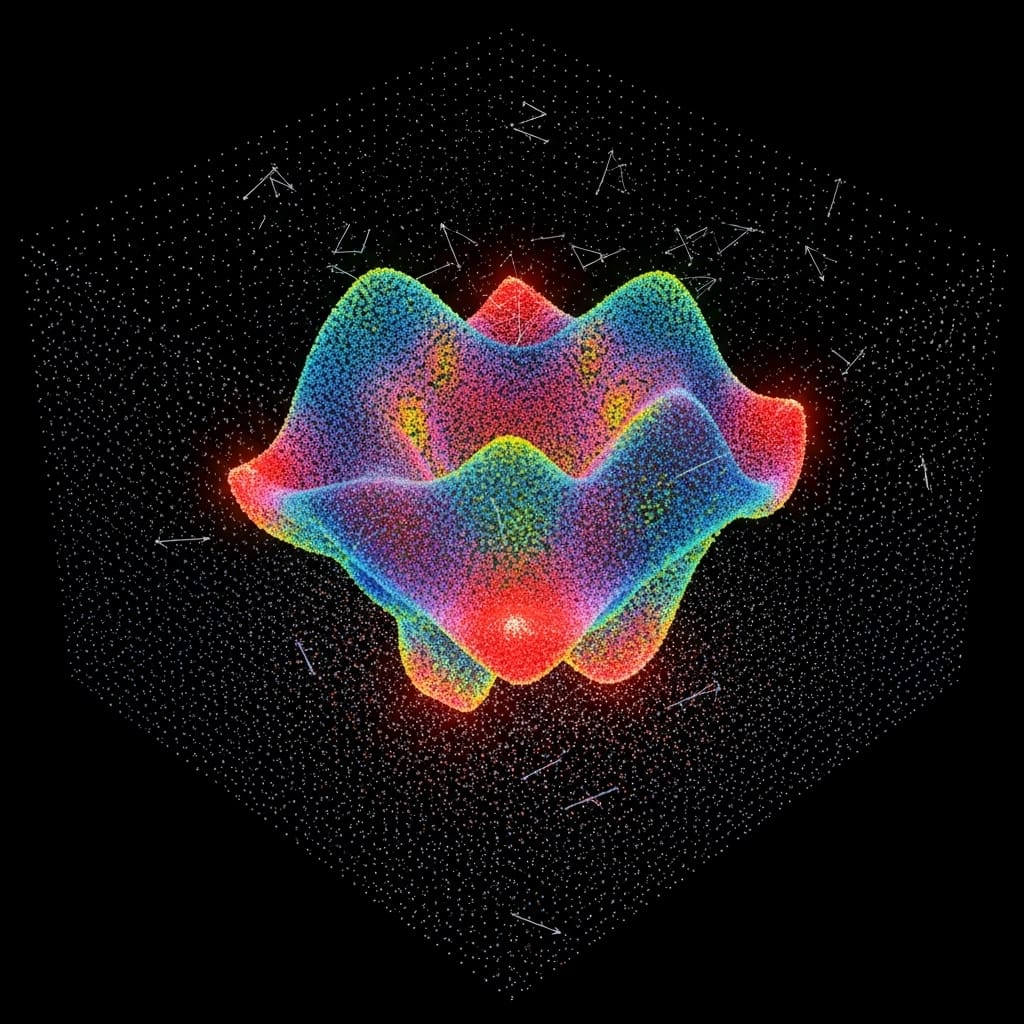

The core of Detect lies in its ability to perturb individual latent features in a controlled manner and meticulously observe the resulting impact on the model’s output. By carefully modifying these features, the framework identifies those that trigger behavioural shifts and employs a vision-language model to provide semantic attribution, effectively linking input changes to model responses. This process distinguishes between task-relevant and irrelevant features, enabling targeted perturbations that test both generalization, the model’s ability to handle unseen valid inputs, and robustness, its resilience to spurious correlations. Empirical results across image classification and detection tasks demonstrate Detect’s superior performance in generating high-quality test cases with fine-grained control, revealing distinct shortcut behaviours across different model architectures.
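The idea of perturbing one latent feature at a time and watching the output can be illustrated with a small sweep. This is a minimal sketch, not the authors' implementation: it assumes the latent code is a plain list of floats, that a single callable stands in for "StyleGAN generator followed by the model under test", and it uses a label flip as a simplified notion of a "behavioural shift". The names `scan_features` and `toy_model` are hypothetical.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in: maps a latent code straight to class logits
# (in Detect's setting this would be the generator followed by the model under test).
Model = Callable[[Sequence[float]], List[float]]


def argmax(xs: Sequence[float]) -> int:
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(xs)), key=lambda i: xs[i])


def scan_features(w: Sequence[float], model: Model, delta: float = 1.0) -> List[int]:
    """Return latent dimensions whose +/-delta perturbation flips the predicted label."""
    base_label = argmax(model(w))
    sensitive: List[int] = []
    for i in range(len(w)):
        for sign in (1.0, -1.0):
            w_pert = list(w)
            w_pert[i] += sign * delta  # perturb exactly one feature, keep the rest fixed
            if argmax(model(w_pert)) != base_label:
                sensitive.append(i)
                break  # one flip is enough to mark this feature as behaviour-relevant
    return sensitive


def toy_model(w: Sequence[float]) -> List[float]:
    # Hypothetical 2-class model: class 0 depends on w[0], class 1 weakly on w[1];
    # w[2] is ignored entirely.
    return [2.0 * w[0], 0.5 + 0.1 * w[1]]


print(scan_features([0.5, 0.0, 0.0], toy_model))  # → [0]
```

Only the first dimension flips the prediction; in the full framework, the vision-language model would then name what that dimension changes in the image.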

Specifically, Detect outperforms a state-of-the-art test generator in accurately mapping decision boundaries and surpasses a leading spurious feature localization method in identifying robustness failures. The study reveals that fully fine-tuned convolutional models are prone to overfitting on localized cues, such as co-occurring visual traits, while weakly supervised transformer models tend to rely on more global features, like environmental variances. These findings underscore the importance of interpretable and feature-aware testing in enhancing the reliability of deep learning models, moving beyond simple accuracy metrics to understand how a model arrives at its conclusions. This work establishes a new paradigm for testing deep learning systems, linking input synthesis with feature diagnosis through semantically controlled perturbations in the latent space.
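One way to picture "mapping a decision boundary" along a single semantic feature is a bisection search for the smallest perturbation that changes the prediction. This is an illustrative sketch under stated assumptions, not the method the authors compare against or their exact procedure: it assumes the label changes at most once along the chosen latent direction within `[0, t_max]`, and `find_boundary` and `toy_model` are hypothetical names.

```python
from typing import Callable, List, Optional, Sequence

Model = Callable[[Sequence[float]], List[float]]  # hypothetical: latent code -> logits


def argmax(xs: Sequence[float]) -> int:
    return max(range(len(xs)), key=lambda i: xs[i])


def find_boundary(
    w: Sequence[float],
    dim: int,
    model: Model,
    sign: float = 1.0,
    t_max: float = 5.0,
    tol: float = 1e-6,
) -> Optional[float]:
    """Bisect for the smallest step t along one latent dimension that flips the label.

    Assumes a single label change between t = 0 and t = t_max.
    """
    base_label = argmax(model(w))

    def label_at(t: float) -> int:
        w_t = list(w)
        w_t[dim] += sign * t
        return argmax(model(w_t))

    if label_at(t_max) == base_label:
        return None  # no flip inside the search range

    lo, hi = 0.0, t_max  # invariant: label_at(lo) == base_label != label_at(hi)
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if label_at(mid) == base_label:
            lo = mid
        else:
            hi = mid
    return hi  # first step size (within tol) that lands on the other side


def toy_model(w: Sequence[float]) -> List[float]:
    # Hypothetical 2-class model: the label flips once 2 * w[0] drops below 0.5.
    return [2.0 * w[0], 0.5]


t = find_boundary([0.5, 0.0], dim=0, model=toy_model, sign=-1.0)
print(round(t, 4))  # → 0.25
```

Bisection needs only label queries, so it treats the model as a black box; the price is the single-crossing assumption, which a real latent direction need not satisfy.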

Detect leverages a style-based generative model (StyleGAN) to access a disentangled latent space, enabling precise manipulation of individual semantic features and assessment of both generalization and robustness. The framework incorporates a feature-aware test oracle that quantifies the model’s response to each perturbation using a logit-based criterion, flagging robustness failures and testing generalizability. Ultimately, Detect not only uncovers hidden vulnerabilities but also provides valuable insight into the features that drive model behaviour. By differentiating between task-relevant and irrelevant features, Detect applies perturbations targeted at both generalization and robustness.
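A logit-based oracle for robustness tests might look like the following. This is a hedged sketch: the article does not give the exact criterion, so the rule used here (fail if the top-1 label changes or if the logit of the originally predicted class drops by more than a threshold `tau`) and the default `tau = 0.5` are assumptions, as are the names `robustness_oracle` and `Verdict`.

```python
from dataclasses import dataclass
from typing import Sequence


def argmax(xs: Sequence[float]) -> int:
    return max(range(len(xs)), key=lambda i: xs[i])


@dataclass
class Verdict:
    label_changed: bool  # did the top-1 prediction change?
    logit_drop: float    # drop in the logit of the originally predicted class
    failure: bool        # flagged as a robustness failure


def robustness_oracle(
    base_logits: Sequence[float],
    perturbed_logits: Sequence[float],
    tau: float = 0.5,  # hypothetical threshold, not taken from the paper
) -> Verdict:
    """Judge a perturbation of a task-irrelevant feature.

    Such a change should leave the output (nearly) unchanged, so the test fails
    if the label flips or the original class logit drops by more than tau.
    """
    label = argmax(base_logits)
    drop = base_logits[label] - perturbed_logits[label]
    changed = argmax(perturbed_logits) != label
    return Verdict(label_changed=changed, logit_drop=drop, failure=changed or drop > tau)


print(robustness_oracle([2.0, 0.0], [1.0, 0.2]).failure)  # → True (logit fell by 1.0)
```

Checking the logit as well as the label lets the oracle catch perturbations that erode the model's confidence without yet crossing the decision boundary.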

Experiments across image classification and detection tasks show that Detect generates high-quality test cases with fine-grained control, revealing distinct shortcut behaviors across convolutional and transformer-based model architectures. Detect surpasses a state-of-the-art test generator in decision boundary discovery and outperforms a leading spurious feature localization method in identifying robustness failures. Fully fine-tuned convolutional models tend to overfit on localized cues, such as co-occurring visual traits, while weakly supervised transformers rely on global features, such as environmental variances. Because its perturbations act on individual semantic features in the latent space, Detect supports a detailed assessment of model behavior.

Results show that Detect uncovers bugs not captured by standard accuracy metrics, highlighting the value of interpretable and feature-aware testing for improving DL model reliability. The study measured model responses to controlled perturbations using a logit-based criterion, flagging robustness failures when predictions shifted significantly after semantically irrelevant features were modified. Detect’s system architecture comprises a StyleGAN generator and the system under test, which together span the latent space, image space, and output space. This design makes the framework applicable across model types and data domains, and it serves as an effective general test generator on fine-grained tasks.
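The latent → image → output pipeline, combined with the relevant/irrelevant split, can be sketched as a small test harness. This is a simplified illustration, not Detect's code: the generator and the system under test are toy callables standing in for StyleGAN and a real classifier, the relevance labels are assumed to come from an earlier attribution step, and `run_feature_tests`, `toy_generator`, `toy_sut`, `delta`, and `tau` are hypothetical names and parameters.

```python
from typing import Callable, Dict, List, Sequence

Generator = Callable[[Sequence[float]], List[float]]  # latent space -> image space
SUT = Callable[[Sequence[float]], List[float]]        # image space -> output space (logits)


def argmax(xs: Sequence[float]) -> int:
    return max(range(len(xs)), key=lambda i: xs[i])


def run_feature_tests(
    w: Sequence[float],
    generator: Generator,
    sut: SUT,
    relevant: Dict[int, bool],
    delta: float = 1.0,
    tau: float = 0.5,
) -> Dict[str, List[int]]:
    """Perturb each attributed feature once and sort the outcomes into two buckets.

    Relevant features probe generalization: a label change marks a decision
    boundary. Irrelevant features probe robustness: a label change or a large
    logit drop is a failure.
    """
    base = sut(generator(w))
    label = argmax(base)
    report: Dict[str, List[int]] = {"boundary_hits": [], "robustness_failures": []}
    for dim, is_relevant in sorted(relevant.items()):
        w_pert = list(w)
        w_pert[dim] += delta
        logits = sut(generator(w_pert))
        changed = argmax(logits) != label
        if is_relevant:
            if changed:
                report["boundary_hits"].append(dim)
        elif changed or base[label] - logits[label] > tau:
            report["robustness_failures"].append(dim)
    return report


# Hypothetical toy components: the "image" is just the latent code, and the
# classifier leans on a spurious feature (index 2) as much as on the real one.
def toy_generator(w: Sequence[float]) -> List[float]:
    return list(w)


def toy_sut(img: Sequence[float]) -> List[float]:
    return [img[0] + img[2], 1.0]


print(run_feature_tests([1.5, 0.0, 0.0], toy_generator, toy_sut,
                        relevant={0: True, 1: False, 2: False}, delta=-1.0))
# → {'boundary_hits': [0], 'robustness_failures': [2]}
```

Keeping the generator and the system under test as separate callables mirrors the article's point that the framework is model-agnostic: any classifier that maps images to logits could be plugged in as `sut`.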

The result is a method for systematically testing DNN behaviors under semantically controlled perturbations, addressing both generalization and robustness through feature-aware changes. Detect’s ability to distinguish between relevant and irrelevant features gives a deeper understanding of model vulnerabilities and strengths, and its results in decision boundary discovery and spurious feature localization mark a significant advance in DL model testing, paving the way for more reliable and trustworthy AI systems.

Detect reveals overfitting via latent space perturbations

Scientists have developed Detect, a novel feature-aware test generation framework designed to assess deep learning models by systematically perturbing disentangled semantic attributes within the latent space. This approach allows for controlled input generation, enabling the identification of features that trigger changes in model behaviour and using a vision-language model for semantic attribution. Researchers demonstrate that Detect surpasses a state-of-the-art test generator in decision boundary discovery and outperforms a leading spurious feature localisation method in identifying robustness failures. Empirical results across image classification and detection tasks reveal that fully fine-tuned convolutional models are susceptible to overfitting on localised cues, while weakly supervised models tend to rely on broader, global features, such as environmental variances, to make predictions.

The authors acknowledge that the framework currently supports a limited set of generative architectures, focusing primarily on StyleGAN. Future work will extend the framework to encompass other generative models and modalities like natural language and audio, broadening its applicability to a wider range of deep learning testing scenarios. These findings underscore the importance of interpretable and feature-aware testing in enhancing the reliability of deep learning models. By bridging the gap between test generation and spurious feature diagnosis, Detect offers valuable insight into model vulnerabilities and how different architectures rely on distinct feature types, a crucial step towards building more robust and trustworthy artificial intelligence systems.

👉 More information

🗞 Feature-Aware Test Generation for Deep Learning Models

🧠 ArXiv: https://arxiv.org/abs/2601.14081