Researchers at NVIDIA have developed an accelerated quantum error correction (QEC) workflow, realised in CUDA-QX 0, focusing on rapid prototyping and deployment of QEC experiments. This approach incorporates a detector error model (DEM), initially developed as part of Stim, enabling automatic generation of error models from specified QEC circuits and noise models for both circuit sampling and decoding via the CUDA-Q QEC decoder interface. A new tensor network decoder, supported from Python 3.11 onward, leverages GPU-accelerated cuQuantum libraries, requiring only a parity check matrix, logical observable, and noise model to achieve accurate and benchmarked performance comparable to Google’s tensor network decoder.

Furthermore, improvements to the GPU-accelerated Belief Propagation + Ordered Statistics Decoding (BP+OSD) implementation include adaptive convergence monitoring, message clipping, configurable BP algorithms (sum-product and min-sum), dynamic scaling, and log-likelihood ratio logging. The release also introduces an implementation of the Generative Quantum Eigensolver (GQE) within the Solvers library, utilising a transformer model to find eigenstates of quantum Hamiltonians and potentially mitigating convergence issues associated with Variational Quantum Eigensolver (VQE) approaches.

Researchers are increasingly focused on quantum error correction (QEC) as both the greatest opportunity and the most significant challenge in realising large-scale, commercially viable quantum supercomputers. The core principle of QEC involves encoding quantum information redundantly across multiple physical qubits, allowing for the detection and correction of errors without directly measuring the fragile quantum state itself – a process fundamentally different from classical error correction.
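The idea of detecting errors through parity checks, without reading the encoded value itself, can be illustrated with the three-bit repetition code. The following is a minimal classical sketch that only models bit flips; the names `H`, `syndrome`, `CORRECTION` and `correct` are illustrative and do not come from CUDA-Q.

```python
# Parity checks of the 3-bit repetition code: each row compares two neighbouring bits.
H = [
    [1, 1, 0],  # check 0: bit 0 XOR bit 1
    [0, 1, 1],  # check 1: bit 1 XOR bit 2
]


def syndrome(error):
    """Return the parity of each check for a given bit-flip pattern."""
    return tuple(sum(h * e for h, e in zip(row, error)) % 2 for row in H)


# Lookup table from each syndrome to the lowest-weight error that produces it.
CORRECTION = {
    (0, 0): (0, 0, 0),
    (1, 0): (1, 0, 0),
    (1, 1): (0, 1, 0),
    (0, 1): (0, 0, 1),
}


def correct(error):
    """Apply the looked-up correction and return the residual error."""
    fix = CORRECTION[syndrome(error)]
    return tuple(e ^ f for e, f in zip(error, fix))
```

A single flip such as `(1, 0, 0)` is fully corrected, whereas a double flip such as `(1, 1, 0)` is mistaken for a single flip on the last bit and leaves the residual `(1, 1, 1)`, which is a logical error. The syndrome only reveals which checks disagree, never the encoded value.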

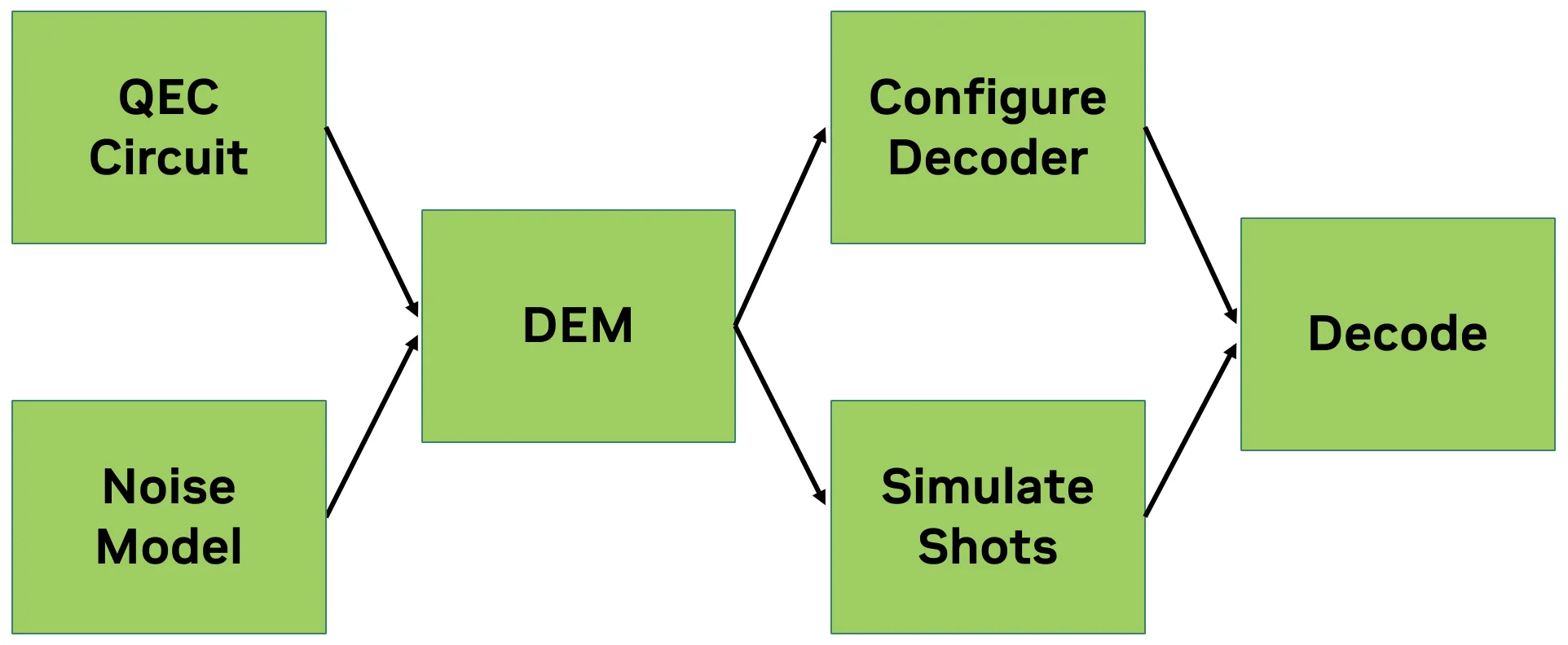

A key advancement detailed in recent research centres on accelerating QEC experimentation through the CUDA-Q framework, a platform designed to facilitate the creation of fully accelerated, end-to-end workflows. This includes defining and simulating novel QEC codes with realistic noise models, configuring decoders, and deploying them alongside physical quantum processing units (QPUs).

The framework provides a user-definable application programming interface (API), enabling researchers to rapidly prototype and evaluate different QEC strategies. A crucial initial step within this workflow is the definition of the QEC code and its associated noise model, which dictates the types and probabilities of errors that will be simulated or corrected.

Effective decoding, the process of inferring the original quantum information from noisy measurements, requires detailed knowledge of the underlying quantum circuits implementing the stabilizer measurements, the core of many QEC schemes. These circuits are themselves susceptible to noise, necessitating accurate modelling of potential errors. To this end, a detector error model (DEM), a concept initially developed as part of Stim, can be generated automatically from a specified QEC circuit and noise model. The DEM can then be used for both circuit sampling in simulation and decoding the resulting syndromes (the indicators that an error has occurred) using the standard CUDA-Q QEC decoder interface. For memory circuits, a common architecture for QEC, all necessary logic is already provided behind the CUDA-Q QEC API, simplifying the implementation process.
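To make the role of an error model concrete, the sketch below represents one as a list of independent error mechanisms, each with a probability and the detectors and logical observables it flips. This is a hypothetical, hand-written data structure for a three-bit repetition code, not the Stim file format or the CUDA-Q QEC API; `Mechanism`, `to_matrices` and `sample` are assumed names. It shows how the same model can feed both sampling and the matrices a decoder consumes.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Mechanism:
    probability: float   # chance that this error occurs in one shot
    detectors: tuple     # indices of detectors the error flips
    observables: tuple   # indices of logical observables the error flips


# Hand-written model: one bit-flip mechanism per data bit of a 3-bit repetition code.
REPETITION_DEM = [
    Mechanism(0.01, (0,), (0,)),  # bit 0: trips detector 0 and flips the observable
    Mechanism(0.01, (0, 1), ()),  # bit 1: trips both detectors
    Mechanism(0.01, (1,), ()),    # bit 2: trips detector 1
]


def to_matrices(dem, num_detectors, num_observables):
    """Build the parity check matrix, observable matrix and priors for a decoder."""
    H = [[1 if d in m.detectors else 0 for m in dem] for d in range(num_detectors)]
    O = [[1 if o in m.observables else 0 for m in dem] for o in range(num_observables)]
    priors = [m.probability for m in dem]
    return H, O, priors


def sample(dem, num_detectors, num_observables, rng):
    """Draw one shot: fire each mechanism independently and XOR its effects."""
    detectors = [0] * num_detectors
    observables = [0] * num_observables
    for m in dem:
        if rng.random() < m.probability:
            for d in m.detectors:
                detectors[d] ^= 1
            for o in m.observables:
                observables[o] ^= 1
    return detectors, observables
```

Calling `to_matrices(REPETITION_DEM, 2, 1)` yields the inputs a syndrome decoder typically needs, while `sample(REPETITION_DEM, 2, 1, random.Random(0))` produces one simulated shot of detector and observable outcomes.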

Furthermore, the use of tensor networks for QEC decoding offers several advantages over other approaches. Tensor-network decoders are relatively easy to understand, being based on the code’s Tanner graph, and can be contracted to compute the probability of a logical observable flipping, which indicates a logical error. When contracted exactly they are guaranteed to be accurate, they do not require training (though they can benefit from it), and they can serve as benchmarks for more complex decoding algorithms. Recent work introduces a tensor network decoder with support for Python 3.11 onward, offering flexibility, accuracy through exact contraction, and performance leveraging the GPU-accelerated cuQuantum libraries.
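For a very small code, the quantity such a decoder targets, the probability that the logical observable flipped given the observed syndrome, can be computed by brute-force enumeration instead of tensor-network contraction. The sketch below does exactly that; it is an illustration of the target quantity only, not the cuQuantum-based implementation, and the function name and argument layout are assumptions.

```python
from itertools import product


def logical_flip_probability(H, L, priors, syndrome):
    """P(observable flipped | syndrome), summing over every error pattern.

    H: parity check matrix (list of rows), L: logical observable (one row),
    priors: independent error probability per column, syndrome: observed checks.
    """
    weight = [0.0, 0.0]  # total probability of patterns with observable 0 / 1
    for error in product((0, 1), repeat=len(priors)):
        # Skip patterns that do not reproduce the observed syndrome.
        if any(sum(h * e for h, e in zip(row, error)) % 2 != s
               for row, s in zip(H, syndrome)):
            continue
        p = 1.0
        for e, q in zip(error, priors):
            p *= q if e else 1.0 - q
        flip = sum(l * e for l, e in zip(L, error)) % 2
        weight[flip] += p
    return weight[1] / (weight[0] + weight[1])
```

With `H = [[1, 1, 0], [0, 1, 1]]`, `L = [1, 0, 0]`, priors of 0.1 and syndrome `[1, 0]`, only the patterns `(1, 0, 0)` and `(0, 1, 1)` are consistent, giving 0.081 / 0.090 = 0.9. The enumeration scales exponentially with the number of error mechanisms, which is why contraction of a structured tensor network is used in practice.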

Benchmarking demonstrates parity with Google’s decoder, and improvements to GPU-accelerated Belief Propagation + Ordered Statistics Decoding (BP+OSD) have been made, enhancing flexibility and monitoring capabilities.

These include adaptive convergence monitoring via configurable iteration intervals, message clipping for numerical stability, a choice of BP algorithms (sum-product and min-sum), dynamic scaling for min-sum optimisation, and detailed result monitoring through logging of log-likelihood ratios. The latest release also introduces an out-of-the-box implementation of the Generative Quantum Eigensolver (GQE) to the Solvers library.
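The interplay of these options can be sketched with a small, pure-Python min-sum belief propagation decoder. This is an illustrative toy rather than the GPU implementation: the parameter names `scale`, `clip` and `check_every` are assumptions and not the CUDA-Q QEC option names, the scaling factor is kept fixed rather than adjusted dynamically, and no OSD post-processing is included.

```python
import math


def min_sum_bp(H, priors, syndrome, max_iter=20, scale=0.75, clip=20.0, check_every=1):
    """Syndrome-based min-sum BP. Returns (estimate, converged, llr_log)."""
    m, n = len(H), len(priors)
    prior_llr = [math.log((1.0 - p) / p) for p in priors]
    edges = [(i, j) for i in range(m) for j in range(n) if H[i][j]]
    v2c = {(i, j): prior_llr[j] for i, j in edges}  # variable-to-check messages
    c2v = {edge: 0.0 for edge in edges}             # check-to-variable messages
    llr_log = []                                    # posterior LLRs per iteration
    posterior = list(prior_llr)
    for iteration in range(1, max_iter + 1):
        # Check update: sign product and scaled minimum magnitude of the other inputs.
        for i, j in edges:
            others = [v2c[(r, k)] for r, k in edges if r == i and k != j]
            sign = -1.0 if syndrome[i] else 1.0
            for value in others:
                if value < 0:
                    sign = -sign
            magnitude = min((abs(v) for v in others), default=clip)
            c2v[(i, j)] = sign * scale * magnitude
        # Posterior log-likelihood ratio of each bit; negative means "flipped".
        posterior = [prior_llr[j] + sum(c2v[(i, k)] for i, k in edges if k == j)
                     for j in range(n)]
        llr_log.append(list(posterior))
        # Variable update with message clipping for numerical stability.
        for i, j in edges:
            v2c[(i, j)] = max(-clip, min(clip, posterior[j] - c2v[(i, j)]))
        # Convergence is only tested every `check_every` iterations.
        if iteration % check_every == 0:
            estimate = [1 if x < 0 else 0 for x in posterior]
            if all(sum(h * e for h, e in zip(H[i], estimate)) % 2 == syndrome[i]
                   for i in range(m)):
                return estimate, True, llr_log
    return [1 if x < 0 else 0 for x in posterior], False, llr_log
```

For the three-bit repetition code with `H = [[1, 1, 0], [0, 1, 1]]`, priors of 0.1 and syndrome `[1, 0]`, the sketch converges to the single flip `[1, 0, 0]`, and `llr_log` records how the posterior of the first bit turns negative over the iterations. Raising `check_every` trades fewer syndrome checks against possibly running extra iterations after convergence.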

GQE is a novel hybrid algorithm for finding eigenstates of quantum Hamiltonians using generative AI models, potentially alleviating convergence issues associated with traditional Variational Quantum Eigensolver (VQE) approaches. The implementation utilises a transformer model, generating candidate quantum circuits, evaluating their performance, and updating the generative model until convergence.
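The generate, evaluate, update loop can be sketched on a single-qubit toy problem. Everything here is a stand-in chosen for brevity and is not the Solvers library implementation: a per-position softmax table replaces the transformer, a simple policy-gradient step replaces the actual GQE training objective, the operator pool `ANGLES` is hypothetical, and the Hamiltonian is just Pauli Z.

```python
import math
import random

ANGLES = [0.0, math.pi / 4, math.pi / 2]  # hypothetical operator pool: Ry(angle) gates
LENGTH = 2                                # number of gates in each candidate circuit


def energy(circuit):
    """<psi|Z|psi> after applying the chosen Ry gates to |0>."""
    a, b = 1.0, 0.0  # real amplitudes of |0> and |1>
    for index in circuit:
        c, s = math.cos(ANGLES[index] / 2), math.sin(ANGLES[index] / 2)
        a, b = c * a - s * b, s * a + c * b
    return a * a - b * b


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [x / total for x in exps]


def train(steps=300, lr=0.2, seed=0):
    """Sample circuits, score them, and nudge the generator towards low energy."""
    rng = random.Random(seed)
    logits = [[0.0] * len(ANGLES) for _ in range(LENGTH)]
    baseline = 0.0
    best_energy, best_circuit = float("inf"), None
    for _ in range(steps):
        probs = [softmax(row) for row in logits]
        # Generate: draw one gate index per position.
        circuit = tuple(rng.choices(range(len(ANGLES)), weights=p)[0] for p in probs)
        # Evaluate: compute the energy of the candidate circuit.
        e = energy(circuit)
        if e < best_energy:
            best_energy, best_circuit = e, circuit
        # Update: lower-than-baseline energy raises the probability of the sample.
        advantage = e - baseline
        baseline += 0.1 * (e - baseline)
        for pos, choice in enumerate(circuit):
            for k in range(len(ANGLES)):
                grad = (1.0 if k == choice else 0.0) - probs[pos][k]
                logits[pos][k] -= lr * advantage * grad
    return best_energy, best_circuit, logits
```

In this toy the lowest energy, -1, is reached by choosing the π/2 rotation twice, which rotates |0> to |1>. Unlike VQE, no continuous gate parameters are optimised; only the distribution over discrete circuit choices is trained.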
