Researchers have developed DRAWER, a framework that converts videos of static indoor scenes into photorealistic, interactive digital environments that run in real time. The work, published on April 21, 2025, applies advances in computer vision to robotics.

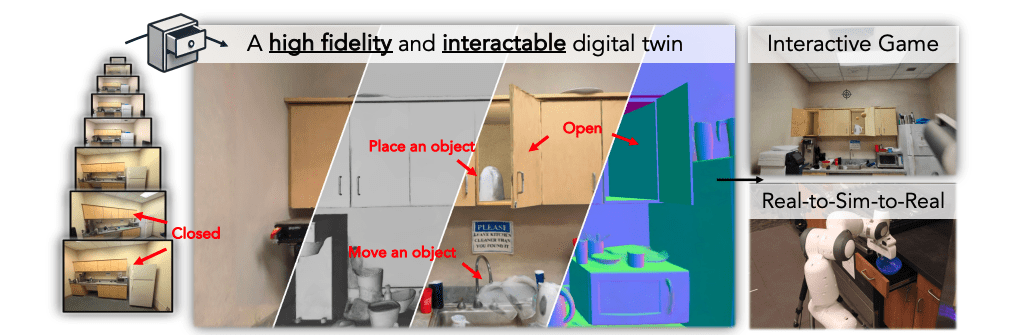

The paper introduces DRAWER, a framework that converts static indoor scene videos into photorealistic, interactive digital environments. It has two modules: a reconstruction module that uses a dual scene representation to capture fine geometric detail, and an articulation module that identifies how parts of the scene move, reconstructs their shapes, and integrates them back into the scene. The resulting environment runs in real time and is compatible with game engines and simulation platforms. Applications include automatically generating interactive games in Unreal Engine and enabling real-to-sim-to-real transfer for practical use.
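
To make the idea of a "movement type" concrete, here is a minimal Python sketch of how an articulated part might be moved once its joint is known. It is an illustration only, not the paper's method: the two joint kinds (a sliding "prismatic" joint, as on a drawer, and a hinged "revolute" joint, as on a cabinet door), the `Joint` class, and the `articulate` function are all assumptions introduced here.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Joint:
    """Hypothetical joint description; field names are illustrative."""
    kind: str     # "prismatic" (slides along axis) or "revolute" (rotates about axis)
    origin: Vec3  # a point on the joint axis
    axis: Vec3    # unit-length direction of the joint axis


def articulate(p: Vec3, joint: Joint, q: float) -> Vec3:
    """Move point p by joint value q (metres if prismatic, radians if revolute)."""
    ax, ay, az = joint.axis
    if joint.kind == "prismatic":
        # Translate the point along the axis by distance q.
        return (p[0] + q * ax, p[1] + q * ay, p[2] + q * az)
    if joint.kind == "revolute":
        # Rodrigues' rotation formula about the axis passing through joint.origin.
        rx, ry, rz = p[0] - joint.origin[0], p[1] - joint.origin[1], p[2] - joint.origin[2]
        c, s = math.cos(q), math.sin(q)
        dot = ax * rx + ay * ry + az * rz
        cross = (ay * rz - az * ry, az * rx - ax * rz, ax * ry - ay * rx)
        rotated = (
            rx * c + cross[0] * s + ax * dot * (1.0 - c),
            ry * c + cross[1] * s + ay * dot * (1.0 - c),
            rz * c + cross[2] * s + az * dot * (1.0 - c),
        )
        return (
            joint.origin[0] + rotated[0],
            joint.origin[1] + rotated[1],
            joint.origin[2] + rotated[2],
        )
    raise ValueError(f"unknown joint kind: {joint.kind}")


# A drawer pulled out 30 cm along x, and a door swung 90 degrees about a vertical hinge.
drawer = Joint("prismatic", (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
door = Joint("revolute", (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
print(articulate((0.0, 0.0, 0.0), drawer, 0.3))
print(articulate((1.0, 0.0, 0.0), door, math.pi / 2))
```

Game engines and simulators typically apply a transform of this kind to every vertex of the moving part, which is why identifying the joint type and axis is enough to make a reconstructed object interactive.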

Robotics is undergoing a transformative phase, driven by advancements in artificial intelligence, computer vision, and machine learning. Recent research has focused on improving robots’ ability to perceive their environments, manipulate objects with precision, and interact with the physical world in more intuitive ways. These innovations are paving the way for robots that can perform complex tasks in diverse settings, from industrial automation to human-robot collaboration.

One of the most significant advancements in robotics is the integration of neural networks into perception systems. By leveraging deep learning techniques, robots can now better interpret sensory data, enabling them to navigate dynamic environments and interact with objects more effectively. For instance, RGB-D cameras, which capture both color and depth information, have become a cornerstone of modern robotic vision systems. These devices allow robots to create detailed 3D maps of their surroundings, enhancing their ability to identify and locate objects in real time.
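
As a rough illustration of how a depth image becomes 3D geometry, the Python sketch below back-projects each depth pixel into a camera-frame point using the standard pinhole camera model. The function name `depth_to_points` and the tiny 2x2 depth image are hypothetical; the intrinsics `fx`, `fy`, `cx`, `cy` (focal lengths and principal point, in pixels) would come from the camera's calibration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (in metres) into camera-frame 3D points."""
    points: List[Point] = []
    for v, row in enumerate(depth):        # v is the pixel row
        for u, z in enumerate(row):        # u is the pixel column
            if z <= 0.0:                   # non-positive depth marks a missing reading
                continue
            x = (u - cx) * z / fx          # pinhole model: offset from the principal
            y = (v - cy) * z / fy          # point, scaled by depth over focal length
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth image with one missing measurement.
depth_image = [[1.0, 0.0],
               [2.0, 2.0]]
cloud = depth_to_points(depth_image, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(len(cloud), "valid points")
```

Pairing each point with the color of its pixel gives a colored point cloud, and merging clouds from many camera poses is one common way such 3D maps are built.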

Manipulation remains one of the most challenging aspects of robotics, requiring precise control over robotic limbs and grippers. Recent breakthroughs in machine learning have significantly improved a robot’s ability to handle complex tasks, such as picking up small components or manipulating fragile items. By training robots on large datasets of real-world interactions, researchers have developed systems capable of adapting to new scenarios with minimal human intervention. These advancements are particularly valuable in industries like manufacturing and logistics, where robots must perform repetitive tasks with high precision.

Researchers have turned to physics-based simulations to enhance a robot’s ability to interact with its environment. These virtual environments allow robots to practice and refine their behaviors in a risk-free setting before being deployed in the real world. By simulating how objects respond to external forces, robots can develop an understanding of cause and effect, enabling them to predict outcomes and adjust their actions accordingly. This approach is particularly useful for tasks like assembly work, where precise control over object manipulation is critical.
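
At its simplest, simulating how an object responds to a force means repeatedly applying Newton's second law over small time steps. The Python sketch below does this for a single object moving in one dimension, using semi-implicit Euler integration. It is a toy example with a hypothetical `simulate_push` function; real physics engines add contact, friction, and rotation.

```python
from typing import Tuple


def simulate_push(mass: float, force: float, dt: float, steps: int) -> Tuple[float, float]:
    """Integrate a constant push on a point mass; returns (position, velocity)."""
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        acc = force / mass   # Newton's second law: a = F / m
        vel += acc * dt      # update velocity first (semi-implicit Euler)
        pos += vel * dt      # then advance position with the new velocity
    return pos, vel


# A 2 kg object pushed with 4 N for one simulated second (100 steps of 10 ms).
position, velocity = simulate_push(mass=2.0, force=4.0, dt=0.01, steps=100)
print(f"position={position:.3f} m, velocity={velocity:.3f} m/s")
```

For this constant force, the exact answer after one second is 1 m travelled at 2 m/s. The simulated velocity matches, while the position comes out slightly high and converges to the exact value as `dt` shrinks.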

Another key area of progress in robotics is the development of multi-task learning algorithms. These systems enable robots to perform a wide range of tasks by leveraging knowledge gained from previous experiences. For example, a robot trained to recognize objects in one setting can apply that knowledge to identify similar items in a different context. This ability to generalize across tasks is essential for creating adaptable robots capable of operating in dynamic and unpredictable environments.

👉 More information

🗞 DRAWER: Digital Reconstruction and Articulation With Environment Realism

🧠 DOI: https://doi.org/10.48550/arXiv.2504.15278