The stability of quantum computations on trapped ions faces a significant challenge from the loss of individual ions, an event that can destabilize an entire ion chain and destroy the quantum state it holds. Nolan J. Coble from the University of Maryland, College Park, and Min Ye and Nicolas Delfosse from IonQ Inc., now demonstrate a method to correct these chain losses in long chains of trapped ions. Their work addresses a critical problem: even rare ion loss events destabilize the entire chain, effectively erasing all of its quantum information. The team proposes a distributed error correction code with ‘beacon’ qubits that detect chain loss and turn each loss into an erasure (an error at a known location), plus a decoder that corrects these erasures alongside ordinary circuit faults. Together these safeguard quantum computations against this pervasive source of instability.

Ion Loss Limits Quantum Computation Fidelity

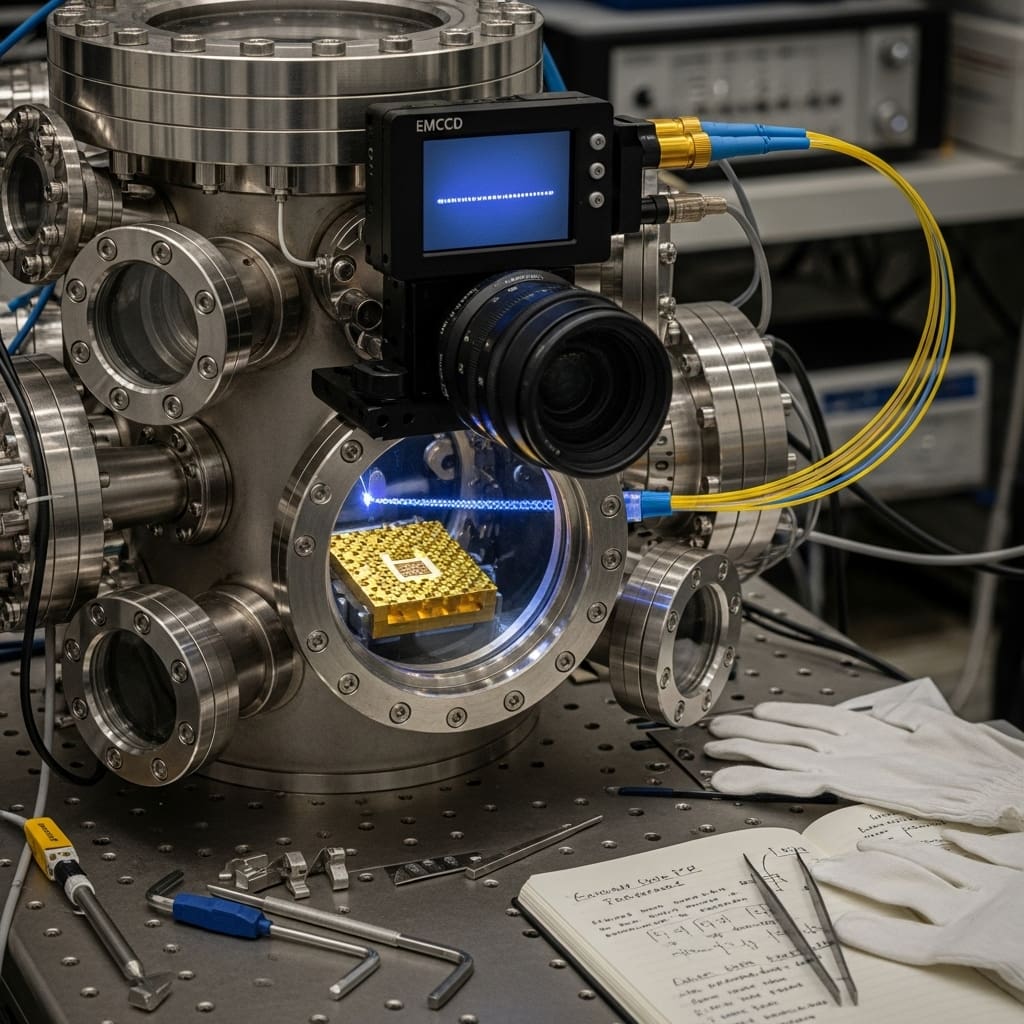

The researchers investigate how ion loss affects the accuracy of quantum computations in trapped-ion systems. Although rare, ion loss in long chains fundamentally limits the size and reliability of quantum processors. The team developed a theoretical framework that models ion loss during quantum gate operations, accounting for how the remaining ions rearrange and introduce errors. This framework quantifies how such losses degrade quantum states and identifies strategies to minimize their impact.
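A one-line estimate shows why rare per-ion losses still limit long chains. The sketch below assumes, purely for illustration, that each ion is lost independently with the same probability q during a given time window. A longer gate sequence means a longer window and so a larger q. This is not the noise model used in the paper. Because one lost ion destabilizes the whole chain, the chain survives only if all N ions survive, giving P_chain = 1 - (1 - q)^N.

```python
def chain_loss_probability(per_ion_loss: float, num_ions: int) -> float:
    """Probability that a chain of num_ions ions loses at least one ion.

    Illustrative assumption: each ion is lost independently with probability
    per_ion_loss during the time window considered.
    """
    if not 0.0 <= per_ion_loss <= 1.0:
        raise ValueError("per_ion_loss must be between 0 and 1")
    if num_ions < 0:
        raise ValueError("num_ions must be non-negative")
    # A single lost ion destabilizes the whole chain, so the chain survives
    # only if every one of its ions survives.
    return 1.0 - (1.0 - per_ion_loss) ** num_ions


if __name__ == "__main__":
    q = 1e-5  # hypothetical per-ion loss probability, chosen only for illustration
    for n in (10, 50, 100, 200):
        print(f"{n:4d} ions: P(chain lost) = {chain_loss_probability(q, n):.2e}")
```

For small q the result is close to N·q, so under this assumption the per-chain risk grows roughly linearly with chain length.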

The research demonstrates that errors accumulate with increasing chain length and gate duration, posing a significant challenge for scaling trapped-ion quantum computers. To address this, the team proposes and analyzes an error correction scheme designed specifically for ion loss. The scheme distributes a quantum error correction code across multiple long chains and places ‘beacon’ qubits in each chain to detect losses. When a beacon signals that its chain has been lost, the affected qubits are treated as erasures. A decoder then corrects the combination of these erasures and ordinary circuit faults. Simulations of the scheme confirm that it can correct chain losses.
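The beacon-to-erasure step can be pictured with a small bookkeeping sketch. Everything here is hypothetical: the `Chain` class, the way a beacon reports a loss, and the qubit indices are placeholders, not the paper's implementation. The only idea taken from the text is this: when a beacon signals a lost chain, every qubit in that chain can be marked as erased, so the decoder knows where the damage is.

```python
from dataclasses import dataclass


@dataclass
class Chain:
    """One ion chain (module) holding some code qubits plus a beacon qubit.
    Hypothetical layout, for illustration only."""
    chain_id: int
    qubits: list[int]   # indices of the data/ancilla qubits stored in this chain
    lost: bool = False  # ground truth; the decoder only sees the beacon result


def beacon_reports_loss(chain: Chain) -> bool:
    # Hypothetical readout: beacons do not interact with other qubits, so
    # measuring one reveals whether its chain is intact without touching
    # the encoded state. Here the result is simply taken from the ground truth.
    return chain.lost


def erasures_from_beacons(chains: list[Chain]) -> set[int]:
    """Mark every qubit of each chain whose beacon signals a loss as erased."""
    erased: set[int] = set()
    for chain in chains:
        if beacon_reports_loss(chain):
            erased.update(chain.qubits)
    return erased


if __name__ == "__main__":
    chains = [Chain(0, [0, 1, 2]), Chain(1, [3, 4, 5], lost=True), Chain(2, [6, 7, 8])]
    print(sorted(erasures_from_beacons(chains)))  # [3, 4, 5]
```

A decoder that handles both circuit faults and erasures would consume this erasure set together with the measured syndromes. That decoder is not shown here.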

Simulating Chain Loss in Distributed BB Code Modules

Detailed simulations explore the impact of chain loss on quantum error correction in a modular, distributed architecture. The simulations use the [[72, 12, 6]] bivariate bicycle (BB) code, which encodes 12 logical qubits into 72 physical qubits with code distance 6. They divide the system into 36 modules of data and ancilla qubits, each holding a group of the physical qubits that together encode the logical qubits. The simulations model complete chain loss, in which an entire row or column of qubits fails, and cover six rounds of syndrome extraction, the process of detecting errors without directly measuring the encoded quantum information.
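The [[n, k, d]] label packs the code's key numbers: n = 72 physical (data) qubits, k = 12 logical qubits and distance d = 6. It also shows why turning chain loss into erasures pays off. A standard coding-theory result says a distance-d code can correct any t errors at unknown locations together with any e erasures at known locations whenever 2t + e ≤ d - 1. A located erasure therefore uses half as much of the code's budget as an unlocated error. The helper below only evaluates that generic worst-case condition. It is not the paper's decoder, and particular erasure patterns that exceed the bound may still be correctable.

```python
from typing import NamedTuple


class CodeParams(NamedTuple):
    n: int  # physical (data) qubits
    k: int  # logical qubits
    d: int  # code distance


def guaranteed_correctable(code: CodeParams, errors: int, erasures: int) -> bool:
    """Worst-case sufficient condition: any `errors` unlocated errors plus any
    `erasures` located erasures are correctable if 2*errors + erasures <= d - 1."""
    return 2 * errors + erasures <= code.d - 1


BB_72_12_6 = CodeParams(n=72, k=12, d=6)

if __name__ == "__main__":
    for t, e in [(2, 0), (0, 5), (1, 3), (0, 6), (3, 0)]:
        print(f"t={t}, e={e}: {guaranteed_correctable(BB_72_12_6, t, e)}")
    # t=2, e=0: True / t=0, e=5: True / t=1, e=3: True / t=0, e=6: False / t=3, e=0: False
```

With d = 6 the guarantee covers at most 2 unlocated errors, but up to 5 located erasures.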

The researchers explored two scenarios for the beacon measurements that detect chain loss: instantaneous detection, and beacon measurements that take a standard measurement time. They also varied the two-qubit gate error rate to assess its influence on overall system performance. The results show that chain loss can be a dominant source of errors, especially at higher physical error rates. The simulations reveal threshold behavior, in which the logical error rate falls steeply as the physical error rate decreases, although chain loss affects this threshold. Fast beacon measurements improve performance when the chain loss probability is low, though this benefit diminishes as the loss probability increases.
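A toy timing model illustrates why detection speed matters. Suppose, hypothetically, that every beacon is measured once at the start of each syndrome-extraction round. Its result then reaches the decoder `beacon_meas_duration` later, with 0 standing in for instantaneous detection. Every round that overlaps the gap between the loss and its flag is decoded without knowing which qubits are gone. The schedule and parameter names are assumptions for illustration, not the protocol simulated in the paper.

```python
import math


def loss_flag_time(t_loss: float, round_duration: float, beacon_meas_duration: float) -> float:
    """Time at which a chain loss occurring at t_loss is reported to the decoder.

    Hypothetical schedule: beacons are measured at the start of every round
    (times 0, T, 2T, ...), and each result arrives beacon_meas_duration later.
    """
    # First beacon measurement that starts at or after the loss.
    next_start = math.ceil(t_loss / round_duration) * round_duration
    return next_start + beacon_meas_duration


def unflagged_rounds(t_loss: float, round_duration: float, beacon_meas_duration: float) -> int:
    """Number of rounds [i*T, (i+1)*T) overlapping the window between the loss
    and its flag, i.e. rounds decoded without knowing which qubits were lost."""
    t_flag = loss_flag_time(t_loss, round_duration, beacon_meas_duration)
    first_round = math.floor(t_loss / round_duration)
    end_round = math.ceil(t_flag / round_duration)  # exclusive
    return max(0, end_round - first_round)


if __name__ == "__main__":
    for meas in (0.0, 0.5, 1.5):  # beacon measurement time, in units of one round
        print(meas, unflagged_rounds(t_loss=2.3, round_duration=1.0, beacon_meas_duration=meas))
```

For a loss at t = 2.3 rounds, this schedule leaves 1, 2 and 3 rounds unflagged for the three measurement times. Even instantaneous readout leaves the round in which the loss happened unflagged, because beacons are only checked at round boundaries.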

The simulations also revealed ‘spikes’ in the logical error rate when a data module is lost during specific stabilizer measurements. The spikes depend on when the loss occurs relative to those measurements and appear regardless of the measurement order. Simulations with randomly occurring chain losses confirm that the logical error rate still falls steeply as the physical error rate decreases. These findings highlight the importance of addressing chain loss in practical quantum error correction systems, potentially through improved qubit connections or other mitigation strategies. The results also suggest that code parameters and qubit layout affect vulnerability to chain loss, and that developing decoding algorithms robust to chain loss is a crucial research direction.
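Random chain loss events of this kind could be injected into a simulation along the following lines. This is a hypothetical sketch, not the authors' simulator. It assumes each intact module is lost independently with probability `p_loss` in every round, and that a lost module stays lost for the rest of the run (no replacement from a reservoir). The 36 modules and six rounds match the simulation setup described earlier, while the value of `p_loss` is an arbitrary illustrative choice.

```python
import random


def sample_chain_losses(num_modules: int, num_rounds: int, p_loss: float,
                        rng: random.Random) -> dict[int, int]:
    """One noisy run: map each lost module to the round in which it was lost.

    Assumptions (illustrative only): each intact module is lost independently
    with probability p_loss per round, and a lost module is never replaced.
    """
    lost_at: dict[int, int] = {}
    for rnd in range(num_rounds):
        for module in range(num_modules):
            if module not in lost_at and rng.random() < p_loss:
                lost_at[module] = rnd
    return lost_at


def fraction_of_runs_with_loss(num_modules: int, num_rounds: int, p_loss: float,
                               shots: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the probability that a run sees at least one loss."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(shots)
               if sample_chain_losses(num_modules, num_rounds, p_loss, rng))
    return hits / shots


if __name__ == "__main__":
    modules, rounds, p = 36, 6, 1e-3  # p: hypothetical per-module, per-round loss probability
    estimate = fraction_of_runs_with_loss(modules, rounds, p, shots=10_000)
    exact = 1.0 - (1.0 - p) ** (modules * rounds)  # closed form under the same assumptions
    print(f"Monte Carlo: {estimate:.4f}  closed form: {exact:.4f}")
```

The returned round index is what would determine when, relative to the stabilizer measurements, the lost qubits start being flagged as erasures in a full circuit-level simulation.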

Distributed Error Correction Stabilizes Ion Chains

This research presents a strategy to mitigate the impact of chain loss in trapped-ion quantum computing. Because losing a single ion destabilizes an entire long chain, the team developed a quantum error correction protocol distributed across multiple chains. The protocol places ‘beacon’ qubits in each chain to detect losses and converts each loss into ‘erasures’, errors whose locations are known to the decoder. Through simulations using a distributed BB code with beacon qubits, the researchers demonstrate that these chain losses can be corrected.

The results indicate that the proposed method keeps performance within a factor of three of an ideal system without chain loss, even when loss events are relatively frequent. The team notes that the speed of beacon measurements is crucial for optimal performance. They suggest that a property unique to beacon qubits, namely that they do not interact with other qubits, could be exploited to enable faster measurements and shorter cooling times. Future work will focus on optimizing how often beacon qubits are measured and where they are placed to minimize overhead, and on designing a reservoir system for rapidly replacing lost chains. The researchers also note that, while this work concentrates on chain loss, standard techniques are likely sufficient for leakage errors, a related source of noise in these systems.

👉 More information

🗞 Correction of chain losses in trapped ion quantum computers

🧠 arXiv: https://arxiv.org/abs/2511.16632