Artificial intelligence increasingly demands computational power, creating a significant energy bottleneck for traditional electronic chips, and researchers are now exploring photonics as a potential solution. Shuiying Xiang, Chengyang Yu, and Yizhi Wang, alongside their colleagues, present a comprehensive overview of this emerging field, known as photonic neuromorphic computing, which uses light instead of electricity to perform calculations. Their work details the fundamental principles and novel devices, such as photonic neurons and synapses, that underpin this technology, alongside the network architectures and integrated chips required to build functional systems. By leveraging the speed and efficiency of light, this research demonstrates the potential to overcome the limitations of current computing hardware and pave the way for more powerful and energy-efficient artificial intelligence.

Artificial intelligence has grown substantially in recent years, and large models are now applied across many fields, reshaping technology in multiple industries. However, the heavy data processing required for model training and inference creates a computing power bottleneck. Traditional electronic chips struggle to keep up with AI's growing demands for computing power and energy efficiency. Photonic neuromorphic computing is an emerging alternative that shows significant promise by exploiting the properties of light.

Photonic Neural Networks and Neuromorphic Approaches

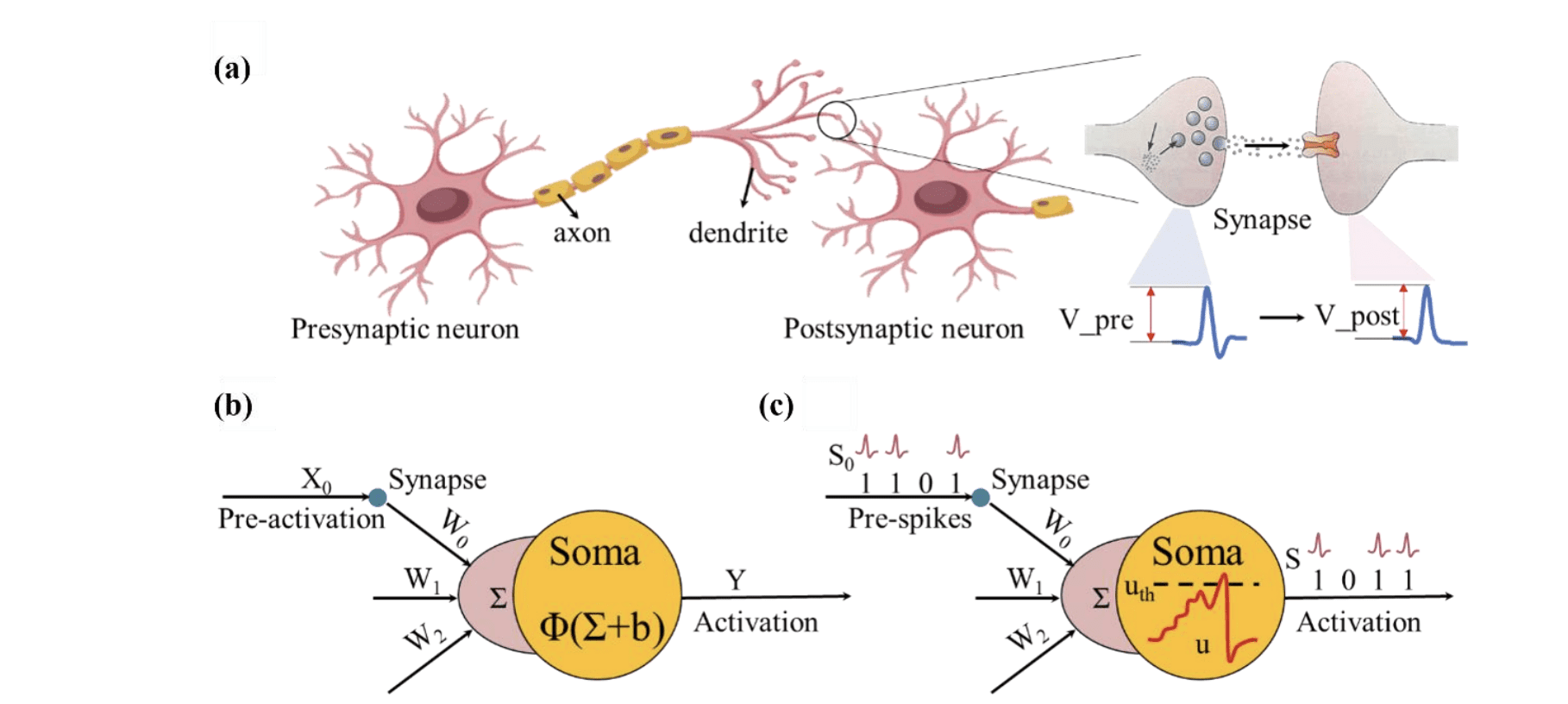

Photonic neural networks (PNNs) and neuromorphic photonics represent a promising new direction in computing. PNNs use light to perform computations traditionally done by electronic neural networks, offering potential advantages in speed, energy efficiency, and parallelism. Neuromorphic approaches also aim to replicate synaptic plasticity and the behavior of spiking neurons. The primary drivers are the speed of optical signals, the potential for lower energy consumption, the ability to perform many computations simultaneously, and the higher bandwidth photonics offers for data transmission and processing.

PNN architectures are commonly built around matrix multiplication, implemented with interferometers and wavelength-division multiplexing for efficient processing. Synapses are realized through optical weighting, where the amplitude or phase of light represents synaptic strength, with phase-change materials and micro-ring resonators used to store and modulate the weights. Photonic neurons mimic their biological counterparts by applying nonlinear activation functions and, in some cases, by reproducing spiking behavior. Various network topologies are being explored, including fully connected networks, convolutional neural networks for image processing, recurrent neural networks, and reservoir computing.
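To make the two weighting schemes above more concrete, here is a minimal Python sketch. It assumes an idealized, lossless Mach-Zehnder interferometer (MZI) described by one common textbook parameterization, and a wavelength-multiplexed weighted sum in which each wavelength carries one input and signed weights are available (for example through balanced detection). The function names, the parameterization, and the numbers are illustrative assumptions, not taken from the review; real devices differ in phase-shifter placement, loss, and crosstalk.

```python
import cmath
import math


def mzi_matrix(theta, phi):
    """2x2 transfer matrix of an idealized, lossless MZI.

    Assumed parameterization: theta sets the splitting ratio,
    phi is an extra phase on the first input arm.
    """
    e = cmath.exp(1j * phi)
    return [
        [e * math.cos(theta), -math.sin(theta)],
        [e * math.sin(theta), math.cos(theta)],
    ]


def apply_matrix(matrix, fields):
    """Multiply a matrix by a vector of complex optical field amplitudes."""
    return [sum(m * x for m, x in zip(row, fields)) for row in matrix]


def power(fields):
    """Total optical power carried by a set of field amplitudes."""
    return sum(abs(x) ** 2 for x in fields)


def wdm_weighted_sum(channel_powers, weights):
    """Weighted sum over wavelength channels.

    Each channel power is scaled by a weight in [-1, 1] (assumed to be set by
    a tunable element such as a micro-ring) and the results are summed, as a
    photodetector would do.
    """
    return sum(w * p for w, p in zip(weights, channel_powers))


if __name__ == "__main__":
    fields_in = [1.0, 0.5j]
    fields_out = apply_matrix(mzi_matrix(0.7, 1.3), fields_in)
    # A lossless interferometer redistributes power but does not change the total.
    print(power(fields_in), power(fields_out))
    print(wdm_weighted_sum([1.0, 0.5, 0.25], [0.5, -1.0, 1.0]))
```

Because the assumed MZI matrix is unitary, cascading many such 2x2 blocks is one way to build larger matrix operations, while the wavelength-based sum trades interferometric phase control for per-channel amplitude control.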

Training PNNs presents unique challenges, because traditional backpropagation is difficult to adapt to photonic hardware. Researchers are exploring alternatives such as direct feedback alignment, in-situ backpropagation, and hybrid training that combines electronic and photonic components. Genetic algorithms, reinforcement learning, physics-aware training, and quantization-aware training are also being investigated to optimize network weights. Despite this progress, several challenges remain. Building large-scale photonic networks is difficult because of fabrication complexity and signal loss.
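Of the training approaches listed above, genetic algorithms are perhaps the easiest to sketch, because they only need a way to evaluate the loss and never require gradients through the hardware. The Python example below is a minimal illustration under stated assumptions: `forward` is a hypothetical stand-in for measuring a photonic layer (a weighted sum followed by a saturating nonlinearity), and the population size, mutation scale, weight range, and data are arbitrary choices rather than values from the review.

```python
import math
import random


def forward(weights, x):
    """Hypothetical stand-in for a measured photonic layer output."""
    return math.tanh(sum(w * xi for w, xi in zip(weights, x)))


def loss(weights, data):
    """Mean squared error between the layer output and the targets."""
    return sum((forward(weights, x) - y) ** 2 for x, y in data) / len(data)


def evolve(data, n_weights, pop_size=20, generations=40, sigma=0.2, seed=0):
    """Gradient-free weight search with selection, crossover, mutation, elitism."""
    rng = random.Random(seed)
    population = [
        [rng.uniform(-1.0, 1.0) for _ in range(n_weights)]
        for _ in range(pop_size)
    ]
    history = []  # best loss seen at the start of each generation
    for _ in range(generations):
        population.sort(key=lambda w: loss(w, data))
        history.append(loss(population[0], data))
        parents = population[: pop_size // 2]  # keep the better half
        children = [population[0][:]]  # elitism: the best survives unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover followed by Gaussian mutation.
            child = [rng.choice(pair) + rng.gauss(0.0, sigma) for pair in zip(a, b)]
            # Clip to an assumed physically realizable weight range.
            children.append([max(-1.0, min(1.0, w)) for w in child])
        population = children
    population.sort(key=lambda w: loss(w, data))
    history.append(loss(population[0], data))
    return population[0], history


if __name__ == "__main__":
    target = [0.8, -0.5, 0.3]  # illustrative "true" weights
    inputs = [[1.0, 0.0, 0.5], [0.2, 0.9, -0.4], [-0.7, 0.3, 0.8], [0.5, -0.6, 0.1]]
    data = [(x, forward(target, x)) for x in inputs]
    best, history = evolve(data, n_weights=3)
    print(best, history[0], history[-1])
```

Because the best candidate is always carried over, the recorded best loss can never increase from one generation to the next. The price is many loss evaluations per generation, which on real hardware would mean many measurements.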

Integrating photonic devices with electronic control circuitry is crucial for practical applications. Obtaining strong and controllable nonlinearities in photonic devices is also a significant hurdle, as is minimizing signal loss and noise. Variations in fabrication can affect device performance, and training PNNs can be more complex than training electronic networks. Efficiently storing synaptic weights in photonic devices also requires further development. Future research is focusing on 3D integration to increase density and reduce signal path lengths, utilizing silicon photonics to leverage existing CMOS fabrication infrastructure, and employing microcombs to generate multiple wavelengths for parallel processing.

Spiking photonics, which aims to mimic the energy efficiency of the brain, and neuromorphic building blocks such as memristive photonic synapses are also being explored. Potential applications include accelerating AI tasks, deploying AI on edge devices, reducing energy consumption in data centers, improving biomedical imaging, building all-optical computers, and implementing ultrafast decision-making systems. The field is evolving rapidly, and realizing the full potential of photonic computing depends on overcoming the challenges described above.

Photonic Reservoir Computing Surpasses Electronic Limits

Researchers are demonstrating that photonic computing offers a compelling solution to the power and efficiency limitations of traditional computing architectures. Driven by the increasing demands of artificial intelligence, this emerging field is rapidly advancing, with recent breakthroughs showcasing significant performance gains and novel device designs. Photonic systems leverage the speed and bandwidth of light to overcome bottlenecks inherent in electronic chips. In 2023, a silicon photonic reservoir computing (RC) structure, optimized with a particle swarm optimization algorithm, achieved a significant reduction in bit error rate, processing 25 Gb/s on-off keying signals with high fidelity.

Researchers have also reduced chip size: a system based on cascaded dual-ring resonators maintained performance in a smaller footprint. In 2024, a simplified silicon photonic RC system handled chaotic time-series prediction tasks at rates exceeding 60 GHz. Time-delay RC systems, which rely on delayed feedback loops, are also demonstrating impressive capabilities. Researchers have achieved 100% recognition accuracy on the Iris flower classification task using a four-channel system, significantly outperforming single-channel counterparts.
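The time-delay reservoir idea mentioned above can be sketched in a few lines of Python. This is a generic software toy, not a model of any of the reported chips: a single nonlinear node with delayed feedback is treated as a set of "virtual nodes", a fixed random mask spreads each input across them, and only a linear readout is trained (here with ridge regression solved by plain Gaussian elimination). The node count, coupling strengths, `tanh` nonlinearity, and sine-wave task are all illustrative assumptions.

```python
import math
import random


def delay_reservoir_states(inputs, n_nodes=8, feedback=0.5, coupling=0.2,
                           input_gain=0.8, seed=0):
    """Return one list of virtual-node states per input sample."""
    rng = random.Random(seed)
    mask = [rng.choice((-1.0, 1.0)) for _ in range(n_nodes)]  # fixed, untrained
    prev = [0.0] * n_nodes  # node states one delay period earlier
    states = []
    for u in inputs:
        current = []
        for i in range(n_nodes):
            # Each virtual node mixes its own delayed state, the preceding
            # node along the delay line, and the masked input.
            neighbour = current[i - 1] if i > 0 else prev[-1]
            drive = feedback * prev[i] + coupling * neighbour + input_gain * mask[i] * u
            current.append(math.tanh(drive))
        states.append(current)
        prev = current
    return states


def ridge_readout(states, targets, ridge=1e-3):
    """Solve (X^T X + ridge * I) w = X^T y for the linear readout weights."""
    n = len(states[0])
    a = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(s[i] * t for s, t in zip(states, targets)) for i in range(n)]
    for col in range(n):  # forward elimination with partial pivoting
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    w = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        w[i] = (b[i] - sum(a[i][k] * w[k] for k in range(i + 1, n))) / a[i][i]
    return w


def predict(weights, states):
    """Apply the trained linear readout to every reservoir state."""
    return [sum(w * s for w, s in zip(weights, state)) for state in states]


def mse(predictions, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


if __name__ == "__main__":
    signal = [math.sin(0.3 * t) for t in range(200)]
    states = delay_reservoir_states(signal[:-1])
    targets = signal[1:]  # one-step-ahead prediction
    weights = ridge_readout(states, targets)
    print(mse(predict(weights, states), targets))
```

The design point this illustrates is that the reservoir itself is never trained. Only the readout weights are fitted, which is part of why reservoir computing is attractive for hardware whose internal parameters are hard to adjust.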

Parallel processing is a key focus: systems now exploit multiple polarization modes and longitudinal modes of lasers to handle several input signals simultaneously. A deep photonic recurrent reservoir system, tested on a real-world optical fiber system in 2023, reduced the bit error rate. These innovations, spanning device integration, algorithmic optimization, and expanded application scenarios, position photonic computing as a transformative technology with the potential to revolutionize data processing and artificial intelligence.

Photonic Neural Networks for Efficient Computing

This review examines the emerging field of photonic neuromorphic computing, a promising approach to overcoming the limitations of traditional electronic computing architectures. The work details fundamental photonic devices, including both linear and nonlinear components, and how these building blocks enable the creation of photonic neural networks. These networks, which mirror the structure and function of biological brains, offer potential advantages in speed, bandwidth, and energy efficiency for artificial intelligence and machine learning applications. The authors highlight recent progress in photonic neural network architectures, such as multi-layer perceptrons, convolutional networks, and spiking networks, alongside the training algorithms used to optimize their performance. While acknowledging these advances, the review also points to existing challenges and suggests future research directions, including improved device integration, collaborative optimization of algorithms, and expansion into diverse application scenarios. This work serves as a valuable resource for researchers seeking to understand the current state and future potential of photonic neuromorphic computing.

👉 More information

🗞 Integrated photonic neuromorphic computing: device, architecture, chip, algorithm

🧠 ArXiv: https://arxiv.org/abs/2509.01262